In 2024, artificial intelligence will continue taking markets by storm. The global AI market is expected to reach $407 billion by 2027, growing at a compound annual growth rate (CAGR) of 36.2% from 2022 (Source). According to McKinsey, 55% of organizations now use AI in at least one business unit or function, up from 50% in 2022 and just 20% in 2017 (Source). Finance, healthcare, retail, and manufacturing are among the industries where AI is now used across a wide range of functions.

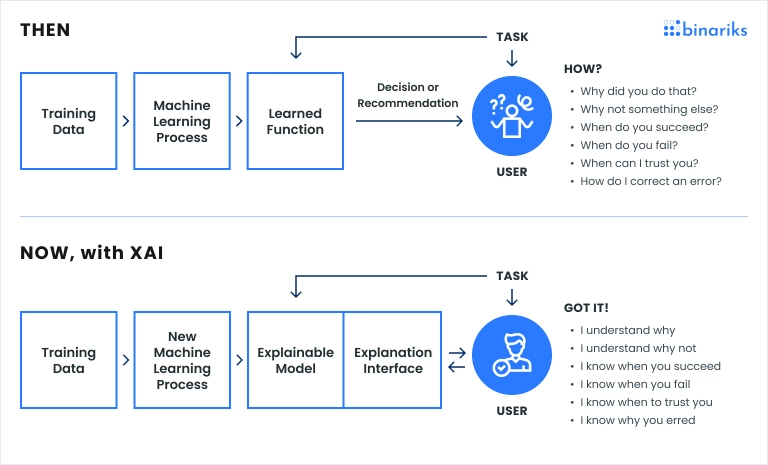

However, traditional AI systems have significant transparency limitations, as they cannot explain their decision-making to users. The rise of explainable AI—a subtype of AI capable of explaining its decision-making—is addressing this issue.

This article examines decision-making with explainable AI: how it differs from traditional AI, its core principles, benefits, techniques, and use cases in various industries.

What is explainable AI?

Explainable AI (XAI) refers to artificial intelligence systems designed so that humans can understand and interpret their decision-making processes. XAI aims to make the inner workings of AI models transparent and comprehensible so that humans understand how the technology arrives at its outputs. Explainable AI is made possible through design principles and adding transparency to AI algorithms.

There are four approaches to the explainability of AI models:

- Explaining the AI model's pedigree involves describing how the model was trained, what data was used, identifying possible biases, and explaining how to mitigate them.

- Proxy modeling uses a simpler model, like a decision tree, to approximate the more complex AI model.

- Design for interpretability involves making the AI model simple and easy to explain.

- Model interpretability focuses on making the decision-making process easy to understand through clear explanations and visualizations.
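
To make the proxy modeling approach more concrete, here is a minimal sketch in plain Python. Everything in it is an assumption made for illustration: `black_box` is a made-up stand-in for an opaque loan model, and the "simpler model" is a one-split decision stump on income. The point is only that the proxy is trained on the complex model's outputs and judged by how often it agrees with them (its fidelity).

```python
import random

def black_box(income, debt_ratio):
    # Hypothetical opaque model: approves (1) or denies (0) a loan.
    score = 0.00004 * income - 2.5 * debt_ratio
    return 1 if score > 1.0 else 0

def fit_stump(rows, labels, feature_index):
    """Find the single threshold on one feature that best reproduces the labels."""
    best_threshold, best_agreement = None, -1.0
    for threshold in sorted({row[feature_index] for row in rows}):
        predictions = [1 if row[feature_index] >= threshold else 0 for row in rows]
        agreement = sum(p == y for p, y in zip(predictions, labels)) / len(rows)
        if agreement > best_agreement:
            best_threshold, best_agreement = threshold, agreement
    return best_threshold, best_agreement

rng = random.Random(0)
rows = [(rng.uniform(20000, 120000), rng.uniform(0.0, 0.6)) for _ in range(300)]
# The proxy learns from the black box's outputs, not from ground-truth labels.
labels = [black_box(*row) for row in rows]

threshold, fidelity = fit_stump(rows, labels, feature_index=0)
print(f"Proxy rule: approve if income >= {threshold:.0f} (fidelity {fidelity:.2f})")
```

A fidelity well below 1.0 is a signal that the simple rule leaves out something the complex model relies on, which in this toy case is the debt ratio.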

Here's how explainable AI differs from the traditional AI models:

- Traditional AI is often referred to as a "black-box" model, as these systems make decisions in ways that humans cannot easily interpret. Examples of such black-box AI include deep learning models and complex ensemble methods. In contrast, explainable AI solutions focus on creating models that provide clear and understandable explanations for their decisions.

- Traditional AI requires specialized knowledge to understand the model's process. Explainable AI aims to make AI understandable to a broader audience, including stakeholders without deep technical expertise.

- Traditional AI is often used in areas where high accuracy is critical and interpretability is less important, like image recognition or game playing. Explainable AI is preferred where decisions carry high stakes and must be justified, such as medical diagnosis, credit scoring, and legal judgments.

Core principles of explainable AI

The US National Institute of Standards and Technology (NIST) has issued four principles of explainable AI. To be considered explainable, a system has to:

1. Explain its output with supporting evidence.

Some of the possible types of explanations covered by this principle:

- Explanations that benefit the end-user. Example: A medical AI tool explaining a diagnosis to a doctor by highlighting the key symptoms and test results that led to the conclusion.

- Explanations that help trust the system. Example: A financial AI model providing an overview of how it assesses risk to build trust with financial advisors and clients.

- Explanations of how AI follows regulations. Example: An AI system used in hiring practices explains how exactly it complies with employment regulations and anti-discrimination laws, or an AI system explains how it follows legal regulations on AI.

- Explanations that help with algorithm development and maintenance. Example: In a predictive maintenance system for industrial machinery, the AI model predicts when equipment is likely to fail and shows which sensor readings drove that prediction, so engineers can check and refine the model.

- Explanations that benefit the model's owner. Example: A movie recommendation engine or a personalized marketing model explaining why it suggests a particular item.

2. The explanation has to be meaningful, allowing users to complete their tasks based on it.

The system must also provide different explanations to different user groups depending on their skills and perceptions.

Example: An AI-powered diagnostic tool might offer simple explanations for patients (e.g., "You are at risk for diabetes due to high blood sugar levels") and more detailed insights for doctors (e.g., "The risk assessment is based on your blood sugar level of X, which is above the threshold of Y, along with other factors such as BMI and age").

3. The explanation must be clear and accurate (not to be confused with the accuracy of the output itself).

Example: An AI system used for loan approvals must clearly and accurately explain why a loan was approved or denied, such as detailing specific credit score criteria and income requirements.

4. The system has to operate within its designed knowledge limits.

AI systems must be aware of their limitations and operate within the boundaries of their designed knowledge to ensure reasonable outcomes.

Example: An AI chatbot providing customer support should know when to escalate an issue to a human representative if it encounters a problem outside its training scope.
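
One simple way to approximate this behavior is a confidence threshold. The sketch below is an illustration under stated assumptions, not a NIST-prescribed method: the intent names, the score dictionary, and the `min_confidence` value of 0.7 are all hypothetical.

```python
def answer_or_escalate(intent_scores, min_confidence=0.7):
    """Return the top intent, or escalate when the model is not confident enough."""
    intent, confidence = max(intent_scores.items(), key=lambda kv: kv[1])
    if confidence < min_confidence:
        # The request looks out of scope: hand over and say why.
        return {
            "action": "escalate_to_human",
            "reason": f"top intent '{intent}' scored only {confidence:.2f}",
        }
    return {"action": "answer", "intent": intent, "confidence": confidence}

print(answer_or_escalate({"reset_password": 0.92, "billing": 0.05}))
print(answer_or_escalate({"reset_password": 0.40, "billing": 0.35}))
```

Returning the reason together with the escalation keeps the hand-off itself explainable to the human agent who picks it up.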

The need for transparency in AI

Transparency of explainable AI is the critical factor that makes it different from traditional types of artificial intelligence.

Transparency is so essential because:

- Transparency in AI helps users and stakeholders build trust in AI technologies. When users see how decisions are made and understand the rationale behind them, much of the mystery and distrust surrounding AI disappears. Users are more likely to engage with and accept AI systems if they understand how they work and feel confident in their reliability. For example, in healthcare, doctors and patients are more likely to trust an AI diagnostic tool if they can understand how it arrives at its conclusions.

- Transparent AI systems enable accountability by clarifying who is responsible for the AI's decisions. This is important for legal and ethical reasons.

- Transparency helps stakeholders understand the strengths and limitations of AI systems, leading to better decision-making and more informed use of AI.

- Transparency is also essential for complying with legal regulations and standards. For example, the GDPR's provisions on automated decision-making are widely read as a "right to explanation," giving individuals the right to understand decisions made about them by automated systems. More regulations are likely to demand transparency from artificial intelligence in the future.

However, despite these benefits, explainable AI solutions may sacrifice accuracy for the sake of explainability, which can be a problem in many implementations.

Benefits of explainable AI in decision-making

Explainable AI approaches have inherent benefits compared to traditional AI. They are better at helping professionals with decision-making that involves significant financial risks and even life-and-death situations in healthcare. Let's look into the benefits of implementing XAI:

Enhanced accountability

Explainable AI solutions enable users to trace the decisions of AI systems back to specific inputs. This plays a crucial role in identifying errors and biases in system performance. Traceability also matters for the legal implications of AI decision-making: when a decision can be traced back, users can see who is responsible for it and assess its legal and ethical consequences.

Explainable AI principles also help reduce bias. When an explanation reveals that a decision rests on a biased factor, a human reviewer can override it, and that feedback can be used to correct the model so it does not repeat similar decisions.

Improved decision quality

By providing insights into how decisions are made, XAI helps improve the quality of decisions, especially when professionals use explainable AI software in their daily work.

For example, if developers can see what is wrong with the system, they can fix it much faster. More broadly, explainability gives every participant in a process, such as a mortgage application, the information they need to act.

Increased user adoption

Users who understand how an AI system makes decisions are more likely to trust and adopt it. This can lead to wider use of explainable AI software. For example, a loan officer can more easily trust an explainable AI solution's recommendation to decline a loan application if they understand how it reached that decision.

Professionals can also act on the system's output. For example, if an explainable AI solution predicts that a customer will not renew their subscription, a customer support representative can intervene with a discount offer.

Real-world applications of explainable AI

Now, let's look into some real-world applications of explainable AI (XAI) in healthcare, finance, and legal and compliance sectors.

Explainable AI adoption in healthcare

Medical image processing and cancer screening

- Explainable AI is applied to medical images through computer vision techniques. AI algorithms can also process lab results and patient records.

- Explainable AI implementation has a proven track record of detecting cancers like renal cell carcinoma, non-small cell lung cancer, and metastases to lymph nodes due to advanced breast cancer.

- Unlike traditional artificial intelligence, XAI allows healthcare professionals to understand how an AI model arrived at a diagnosis or treatment recommendation. For example, an AI system diagnosing cancer can highlight the specific features in a medical image that led to the diagnosis, helping doctors validate and trust the AI's suggestions.

- The doctor then uses the information from explainable AI software to examine suspicious patterns in an image and make a diagnosis. Final results still require careful review by a qualified medical professional. Whole slide imaging of human tissues combined with XAI is among the most accurate approaches to image screening. It highlights high-risk areas, which helps a doctor reach a final diagnosis. For example, IBM Watson Health uses XAI to provide transparent and interpretable insights in oncology.

Patient risk prediction

- Predictive models assess patient risk for heart disease, diabetes, or potential readmission.

- XAI enables clinicians to understand the factors contributing to the predicted risk, such as age, medical history, lifestyle factors, and lab results. This helps them make informed decisions and build personalized care plans. Explainable AI software can also raise an alert when a patient is at risk of developing a specific condition or complication.

- For example, the Mayo Clinic uses explainable AI tools to predict patient deterioration in real time. These tools analyze vast amounts of patient data, such as vital signs, lab results, and medical history, to identify patterns that may indicate a decline in a patient's condition. They have also been used to analyze ECGs to predict heart failure and detect conditions like atrial fibrillation before symptoms appear (Source). Overall, the Mayo Clinic has been one of the pioneers in adopting explainable AI approaches.

Explainable AI adoption in finance

Credit scoring and lending decisions

- In finance, AI systems evaluate creditworthiness and make lending decisions by analyzing financial data, credit history, and other relevant factors. They can also assess insurance claims and optimize investment portfolios.

- XAI provides transparency in how credit scores are determined. This increases trust in the system and supports fairness. Explainable AI gives financial professionals detailed explanations of why loans are granted or denied. This helps both decision-makers and customers, who can use the detailed feedback to improve their credit standing.

- For example, ZestFinance uses explainable AI to offer transparent credit scoring. This helps financial institutions see which variables influence credit decisions and why certain customers are approved or denied. AI in wealth management is similarly changing how financial advisors manage and optimize their clients' portfolios.

Fraud detection

- AI systems detect fraudulent activities by analyzing transaction patterns and user behavior.

- XAI helps investigators understand the reasoning behind fraud alerts. This saves time when identifying false positives and understanding fraud patterns.

- For instance, FICO uses explainable AI in its fraud detection systems to explain why certain transactions are flagged as suspicious.

Financial news mining

- Another interesting case is using financial news texts and fundamental analysis to forecast stock values. A prime example is "trading on Elon Musk's tweets," which dramatically affected the value of several cryptocurrencies (Dogecoin and Bitcoin) and the value of his own company.

- Explainable AI algorithms can help process thousands of news headlines and social media posts simultaneously and analyze their context to help users decide when to buy or sell financial assets.

Explainable AI adoption in legal and compliance

Legal document review

- AI tools assist in reviewing and analyzing legal documents, contracts, and case law to identify relevant information and make legal predictions.

- XAI ensures that legal professionals can understand how AI tools interpret and analyze legal texts.

- One example of explainable AI software is LexPredict. The application uses explainable AI to analyze and summarize large volumes of legal documents, providing lawyers with clear explanations of the key points and decisions derived from the texts.

Regulatory compliance

- AI systems ensure compliance with regulations by monitoring activities, transactions, and communications to ensure adherence to legal standards.

- XAI clarifies how compliance decisions are made and helps organizations understand and correct non-compliant behaviors.

- An illustration of this is IBM's OpenPages with Watson, which uses explainable AI to help organizations manage risk and compliance by offering transparent explanations for risk assessments and compliance checks.

Techniques for achieving explainable AI

In this section, let's discuss how explainable AI algorithms actually work with the help of particular techniques.

Post-hoc explanation methods

Post-hoc explanation methods provide explanations after the AI model has made its decision. These methods do not alter the original model but offer insights into how the decisions were made.

- LIME (Local Interpretable Model-agnostic Explanations)

LIME approximates the model locally by creating interpretable models for individual predictions. It perturbs the input data and observes the changes in the output to determine which features are most influential.

Example: In a medical diagnosis system, LIME can highlight which symptoms and test results were most influential in predicting a disease.
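
The perturb-and-observe idea can be sketched in plain Python. This is a simplified, LIME-style local surrogate written for illustration, not the `lime` library itself: the `black_box` function, the Gaussian perturbations, and the kernel width are all assumptions. It samples points around one instance, weights them by proximity, and fits a weighted linear model whose coefficients act as the local explanation.

```python
import math
import random

def black_box(x):
    # Hypothetical nonlinear model standing in for an opaque predictor.
    return x[0] ** 2 + 3.0 * x[1]

def solve_linear_system(a, b):
    """Solve a @ x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                for c in range(col, n + 1):
                    m[r][c] -= factor * m[col][c]
    return [m[i][n] / m[i][i] for i in range(n)]

def local_surrogate(predict, instance, n_samples=500, scale=0.1, seed=0):
    """Fit a proximity-weighted linear model around one instance."""
    rng = random.Random(seed)
    d = len(instance)
    # Accumulate the weighted normal equations (Z^T W Z) beta = Z^T W y,
    # where each row of Z is [1, offsets from the instance].
    ztz = [[0.0] * (d + 1) for _ in range(d + 1)]
    zty = [0.0] * (d + 1)
    for _ in range(n_samples):
        offsets = [rng.gauss(0.0, scale) for _ in range(d)]
        y = predict([v + o for v, o in zip(instance, offsets)])
        # Proximity kernel: samples closer to the instance weigh more.
        weight = math.exp(-sum(o * o for o in offsets) / (2 * scale ** 2))
        z = [1.0] + offsets
        for i in range(d + 1):
            zty[i] += weight * z[i] * y
            for j in range(d + 1):
                ztz[i][j] += weight * z[i] * z[j]
    return solve_linear_system(ztz, zty)[1:]  # drop the intercept

coefs = local_surrogate(black_box, [2.0, 1.0])
print([round(c, 2) for c in coefs])
```

Near the point (2, 1) the first feature has a local slope of about 4 and the second of about 3, so the coefficients should land close to those values even though the model is not linear overall.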

- SHAP (SHapley Additive exPlanations)

SHAP values are based on cooperative game theory and provide a unified measure of feature importance. They explain the output of any machine learning model by calculating the contribution of each feature to the prediction.

Example: In a credit scoring model, SHAP values can show how each feature (income, credit history, and employment status) contributed to the final credit score.
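
For a model with only a few features, Shapley values can be computed exactly by averaging each feature's marginal contribution over all coalitions. The sketch below does that in plain Python. It is not the `shap` library, which relies on faster approximations. The `credit_model` function, the applicant, and the baseline applicant are hypothetical.

```python
from itertools import combinations
from math import factorial

def credit_model(features):
    # Hypothetical scoring function with one interaction term.
    score = 300.0
    score += 0.002 * features["income"]
    score += 20.0 * features["years_of_history"]
    if features["employed"] and features["income"] > 40000:
        score += 50.0
    return score

def shapley_values(model, instance, baseline):
    names = list(instance)
    n = len(names)

    def value(coalition):
        # Features in the coalition take the instance's values; the rest take the baseline's.
        mixed = {k: (instance[k] if k in coalition else baseline[k]) for k in names}
        return model(mixed)

    phi = {}
    for name in names:
        others = [k for k in names if k != name]
        total = 0.0
        for size in range(n):
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                total += weight * (value(s | {name}) - value(s))
        phi[name] = total
    return phi

instance = {"income": 60000, "years_of_history": 5, "employed": True}
baseline = {"income": 30000, "years_of_history": 0, "employed": False}
phi = shapley_values(credit_model, instance, baseline)
print(phi)
```

The contributions add up to the gap between the applicant's score and the baseline score, and the 50-point interaction bonus is split evenly between income and employment because both are needed to earn it.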

- Anchor explanations

Anchor explanations provide high-precision rules that capture the model's behavior for a particular prediction. These rules act as "anchors" that describe conditions under which the model makes a specific decision.

Example: For a customer churn prediction model, anchor explanations can provide conditions like "If the customer has called customer service more than five times in the last month, they are likely to churn."
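
An anchor is usually judged by two numbers: precision (how often the model gives the same prediction when the rule holds) and coverage (how many cases the rule applies to). The sketch below only evaluates one candidate rule on a small fixed sample. It does not implement the search procedure that anchor algorithms use to find rules, and the `churn_model` and customer records are made up for illustration.

```python
def churn_model(customer):
    # Hypothetical black-box churn classifier.
    return customer["support_calls"] > 5 or (
        customer["months_active"] < 3 and customer["support_calls"] > 2
    )

def evaluate_anchor(rule, model, customers, target=True):
    """Return (precision, coverage) of a candidate rule for a target prediction."""
    covered = [c for c in customers if rule(c)]
    coverage = len(covered) / len(customers)
    precision = sum(model(c) == target for c in covered) / len(covered) if covered else 0.0
    return precision, coverage

customers = [
    {"support_calls": 7, "months_active": 12},
    {"support_calls": 6, "months_active": 1},
    {"support_calls": 3, "months_active": 2},
    {"support_calls": 1, "months_active": 24},
    {"support_calls": 0, "months_active": 6},
]

def rule(customer):
    return customer["support_calls"] > 5

precision, coverage = evaluate_anchor(rule, churn_model, customers)
print(f"precision={precision:.2f}, coverage={coverage:.2f}")
```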

Intrinsic explainability

Intrinsic explainability involves building inherently interpretable models that provide explanations as part of their design. These models are simpler and designed to be understandable by humans.

- Decision trees

Decision trees split data into branches based on feature values, leading to a tree-like structure where each leaf represents a decision outcome. The path from root to leaf shows the decision process.

Example: In a loan approval system, a decision tree can show the step-by-step criteria (like credit score, income, and existing debts) used to approve or deny a loan.
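
As a minimal sketch, a small tree can be written out by hand so that every decision comes with the path that produced it. The thresholds below (650, 0.4, 30000) and the feature names are invented for illustration. A real tree would be learned from data.

```python
def approve_loan(applicant):
    """Walk a hand-written tree and record every test on the way to the leaf."""
    path = []
    if applicant["credit_score"] < 650:
        path.append("credit_score < 650")
        return "deny", path
    path.append("credit_score >= 650")
    if applicant["debt_to_income"] > 0.4:
        path.append("debt_to_income > 0.4")
        return "deny", path
    path.append("debt_to_income <= 0.4")
    if applicant["income"] >= 30000:
        path.append("income >= 30000")
        return "approve", path
    path.append("income < 30000")
    return "deny", path

decision, path = approve_loan(
    {"credit_score": 700, "debt_to_income": 0.25, "income": 45000}
)
print(decision, "because", " and ".join(path))
```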

- Rule-based systems

Rule-based systems use a set of "if-then" rules to make decisions. These rules are explicitly defined and easy to follow.

Example: In a fraud detection system, rules such as "If the transaction amount is greater than $10,000 and the location is different from the usual, flag as potential fraud" can be used.
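
A rule like this maps almost directly to code. In the sketch below, the first rule follows the example above, while the second rule and all field names are hypothetical additions for illustration. Each rule carries a readable name, so the system can report which rules fired.

```python
RULES = [
    (
        "large amount in unusual location",
        lambda t: t["amount"] > 10_000 and t["location"] != t["usual_location"],
    ),
    (
        # Hypothetical second rule, added only to show several rules side by side.
        "many transactions in the last hour",
        lambda t: t["transactions_last_hour"] > 10,
    ),
]

def check_transaction(transaction):
    """Return the names of every rule that fired; an empty list means no flag."""
    return [name for name, condition in RULES if condition(transaction)]

suspicious = {
    "amount": 15_000,
    "location": "Lagos",
    "usual_location": "Berlin",
    "transactions_last_hour": 1,
}
print(check_transaction(suspicious))
```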

- Linear models

Linear models, such as linear regression, are straightforward and provide clear insights into how input features contribute to the output.

Example: In real estate pricing, a linear model can show how factors like location, square footage, and number of bedrooms influence the predicted house price.
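
In a linear model, each feature's contribution to a prediction is simply its coefficient multiplied by its value. The coefficients and intercept below are made up for illustration and are not fitted to real housing data.

```python
# Hypothetical coefficients, not fitted to real data.
COEFFICIENTS = {
    "square_feet": 150.0,
    "bedrooms": 10_000.0,
    "distance_to_center_km": -2_000.0,
}
INTERCEPT = 50_000.0

def explain_price(house):
    """Return the predicted price and each feature's additive contribution."""
    contributions = {name: COEFFICIENTS[name] * house[name] for name in COEFFICIENTS}
    price = INTERCEPT + sum(contributions.values())
    return price, contributions

price, contributions = explain_price(
    {"square_feet": 1200, "bedrooms": 3, "distance_to_center_km": 5}
)
print(price)
for name, amount in contributions.items():
    print(f"  {name}: {amount:+,.0f}")
```

Here the 5 km distance from the center lowers the estimate by 10,000, which is the kind of statement a non-technical user can check directly.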

Visualization tools

Visualization tools enhance understanding by providing graphical representations of AI decision processes. These tools help users see and interpret complex model behaviors and results.

- Partial Dependence Plots (PDP)

PDPs show the relationship between a subset of features and the predicted outcome while marginalizing the values of all other features.

Example: In a marketing model predicting customer purchase likelihood, PDPs can illustrate how changes in advertisement spending affect the probability of purchase.
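
The numbers behind a partial dependence plot come from a simple loop: fix the feature of interest at each grid value for every row, predict, and average. The sketch below computes those averages without plotting them. The `purchase_probability` model and the sample rows are hypothetical.

```python
def purchase_probability(row):
    # Hypothetical model: probability rises with ad spend, with diminishing returns.
    ad_spend, past_purchases = row
    return min(1.0, 0.1 + 0.05 * past_purchases + 0.4 * ad_spend / (ad_spend + 100.0))

def partial_dependence(model, rows, feature_index, grid):
    """For each grid value, overwrite one feature in every row and average the predictions."""
    curve = []
    for value in grid:
        total = 0.0
        for row in rows:
            modified = list(row)
            modified[feature_index] = value
            total += model(modified)
        curve.append(total / len(rows))
    return curve

rows = [(50.0, 0), (120.0, 2), (300.0, 1), (10.0, 4)]
grid = [0.0, 100.0, 200.0, 400.0]
curve = partial_dependence(purchase_probability, rows, feature_index=0, grid=grid)
for spend, probability in zip(grid, curve):
    print(f"ad spend {spend:>5.0f} -> average purchase probability {probability:.3f}")
```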

- Feature importance charts

These charts rank features based on their importance to the model's predictions, clearly indicating which features most influence the outcome.

Example: In a healthcare model predicting patient outcomes, a feature importance chart can highlight the most critical factors, such as age, medical history, and treatment type.
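
There are several ways to produce the scores behind such a chart. One model-agnostic option is permutation importance: shuffle one feature at a time and measure how much the error grows. The sketch below assumes a made-up `risk_model` and synthetic data. It is one possible way to rank features, not the only one.

```python
import random

def risk_model(row):
    # Hypothetical model: depends strongly on age, weakly on treatment, not at all on noise.
    age, treatment, noise = row
    return 0.02 * age + 0.1 * treatment

def permutation_importance(model, rows, targets, seed=0):
    """Increase in mean squared error after shuffling each feature column."""
    rng = random.Random(seed)

    def mse(data):
        return sum((model(r) - t) ** 2 for r, t in zip(data, targets)) / len(data)

    baseline = mse(rows)
    importances = []
    for j in range(len(rows[0])):
        column = [r[j] for r in rows]
        rng.shuffle(column)
        shuffled = [r[:j] + (v,) + r[j + 1:] for r, v in zip(rows, column)]
        importances.append(mse(shuffled) - baseline)
    return importances

data_rng = random.Random(1)
rows = [(data_rng.uniform(20, 80), data_rng.choice([0, 1]), data_rng.random()) for _ in range(200)]
targets = [risk_model(r) for r in rows]  # synthetic targets taken from the model itself

importances = permutation_importance(risk_model, rows, targets)
for name, score in zip(["age", "treatment", "noise"], importances):
    print(f"{name}: {score:.4f}")
```

Sorting these scores and drawing them as bars gives the chart described above. The unused noise feature scores zero because shuffling it never changes a prediction.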

- Confusion matrix

A confusion matrix visualizes the performance of a classification model by showing the true positives, false positives, true negatives, and false negatives.

Example: For a spam detection model, a confusion matrix can show how often emails are correctly or incorrectly classified as spam or not.
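
The four cells of a confusion matrix can be counted in a few lines. The labels below are a tiny hand-made sample, used only to show the bookkeeping.

```python
def confusion_matrix(actual, predicted, positive="spam"):
    """Count true/false positives and negatives for a binary classifier."""
    counts = {"tp": 0, "fp": 0, "tn": 0, "fn": 0}
    for a, p in zip(actual, predicted):
        if p == positive:
            counts["tp" if a == positive else "fp"] += 1
        else:
            counts["fn" if a == positive else "tn"] += 1
    return counts

actual = ["spam", "spam", "ham", "ham", "spam", "ham"]
predicted = ["spam", "ham", "ham", "spam", "spam", "ham"]

counts = confusion_matrix(actual, predicted)
precision = counts["tp"] / (counts["tp"] + counts["fp"])
recall = counts["tp"] / (counts["tp"] + counts["fn"])
print(counts, f"precision={precision:.2f}", f"recall={recall:.2f}")
```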

- Model-specific visualization tools

TensorFlow's What-If Tool allows users to explore model behavior interactively, examining how changes in input features affect predictions and identifying potential biases.

Challenges in implementing explainable AI

Complexity of AI models

Modern AI models, especially deep learning models, are highly complex, with millions of parameters and intricate architectures. This complexity makes it challenging to understand and interpret their decision-making processes.

It can also be difficult to prioritize explainability when it competes with other project goals.

For example, convolutional neural networks (CNNs) used for image recognition have multiple layers of convolutions, activations, and pooling operations that create a black-box effect, which makes it hard to explain how a specific image is classified.

AI models can be intentionally created without transparency to protect corporate interests. In less intentional cases, developers may simply not plan for explainability from the start. Finally, writing explanations that are not too technical and can be understood by a broad range of end users is difficult in itself.

Technical considerations

Implementing XAI requires advanced technical expertise and resources. Developing and integrating explanation methods, maintaining model performance, and ensuring scalability can be technically challenging.

For example, applying SHAP values to a large-scale deep learning model requires significant computational resources as well as expertise in machine learning and cooperative game theory. Organizations may lack the technical infrastructure and skilled personnel to effectively implement and maintain XAI systems.

Balancing accuracy and explainability

There is often a trade-off between an AI model's accuracy and its explainability. Highly accurate models, such as deep neural networks, are usually less interpretable, while simpler, more interpretable models, like linear regression, may not achieve the same level of performance.

For example, a random forest model might provide better predictive performance for a financial forecasting task than a decision tree, but the decision tree offers more straightforward explanations.

In any case, finding the right balance between accuracy and explainability is difficult. Compromising too much on either side can lead to suboptimal outcomes. For example, doctors may find it challenging to blindly trust black-box AI applications that they do not understand, especially in life-and-death situations like spotting tumors.

Navigating bias and mistakes

Just like other AI solutions, explainable AI tools can make mistakes. These mistakes stem from the underlying algorithms and data, and explainability does not eliminate them. Moreover, AI services may carry inherent bias.

Examples of such biases include the 2019 Apple Card controversy, in which the card's algorithm was accused of giving women lower credit limits than men, prompting an investigation by New York financial regulators (Source). In another example, research points to bias against ethnic groups when AI is used to support decisions in the judicial system. Fortunately, feedback from professionals who work with AI systems can help reduce these biases.

More advanced XAI algorithms that are better at justifying their decisions can help expose these biases. Addressing them also requires good judgment from human professionals who can give detailed feedback on XAI outputs.

Conclusion

To conclude, the importance of explainable AI for better decision-making is evident. Explainable AI improves data-driven decision-making by making the AI process clearer, more verifiable, and more trustworthy. It opens up the "black box" of traditional AI, allowing us to see and understand how decisions are made.

Depending on how it is implemented, explainable AI can do more than offer suggestions. Tracing an AI's decision-making steps allows us to discover new solutions to our problems or explore alternative options. Naturally, explainable AI has limitations, including bias and a possible loss of accuracy. However, these can be addressed through sound human judgment when interacting with AI.

If you are a business considering explainable AI implementation, we challenge you to consider how you might integrate explainable AI (XAI) into your own AI systems or decision-making processes. If you need help implementing XAI, Binariks is here to help.

We can:

- Assess areas where explainable AI (XAI) can be implemented

- Develop a strategic plan to integrate explainable AI (XAI) into existing AI systems

- Develop custom XAI models

- Integrate XAI capabilities into existing AI platforms

- Help ensure legal compliance
