Computer vision today is a practical tool that is transforming industries, with applications ranging from medical imaging and fraud detection in fintech to automated inspection in manufacturing, and many more.

As CV moves rapidly from a niche capability to a foundational technology across industries, leaders must understand how these systems are built, what risks they carry, and which practices improve the chances of success. Implementing computer vision is therefore both a technical and an organizational challenge.

What you will learn here:

- Technical strategies to build resilient systems

- Step-by-step structure of a computer vision project

- Key obstacles and how to address them

- Real examples of successful applications across industries

- Actionable advice on starting and scaling CV adoption

Keep reading to see how to approach computer vision strategically, avoid costly missteps, and set up your projects for long-term success.

What is computer vision?

Computer vision is a branch of artificial intelligence that enables machines to analyze and interpret visual data. These systems can recognize objects, detect anomalies, and track movement, which makes them valuable in industries that require precision and speed.

Hospitals use it for diagnostics, insurance companies for car damage detection, retailers for shelf analytics, and urban planners for traffic control.

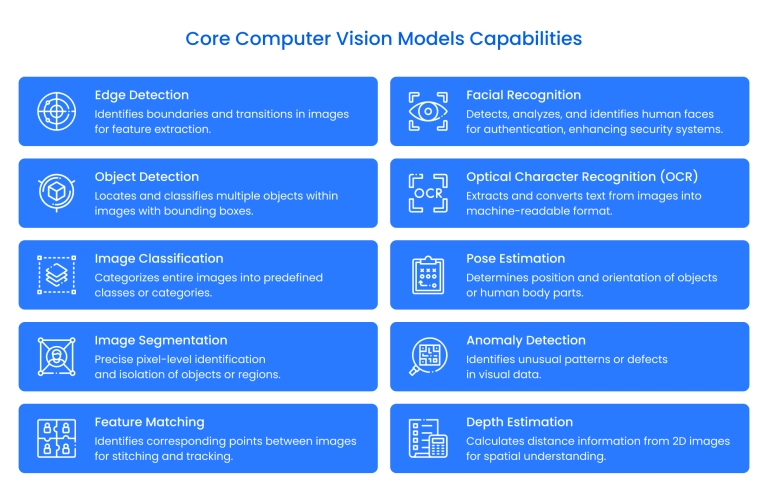

The scope of computer vision projects is wide, yet most applications fall into several core categories:

- Image classification – labeling entire images based on their content, such as identifying whether an X-ray shows signs of disease.

- Object detection – locating and classifying multiple items within a frame, for example, spotting vehicles and pedestrians in autonomous driving.

- Segmentation – dividing images into pixel-level regions to understand detailed structures, often applied in medical imaging.

- Facial recognition – matching or verifying identities from visual data, used in security and fintech services.

- Anomaly detection – highlighting unusual patterns, such as detecting defects in manufacturing or fraud attempts in banking.

Technical strategies for robust real-world CV systems

Scaling computer vision from prototypes to production requires strategies that keep systems stable, accurate, and cost-efficient.

1. Data augmentation for environmental variability

Training data rarely covers every scenario. By augmenting datasets with shifts in brightness, angle, noise, or background, models become more adaptable. This approach minimizes failures when deployed in environments with inconsistent lighting or camera quality.
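As a rough illustration, the sketch below applies random brightness, contrast, and noise perturbations plus a horizontal flip to an image stored as a NumPy array. It assumes images are HxWxC `uint8` arrays; the function name `augment` and the perturbation ranges are arbitrary choices for illustration, not tuned values. In practice, libraries such as torchvision or Albumentations provide these transforms, along with rotations and background changes.

```python
import numpy as np


def augment(image: np.ndarray, rng: np.random.Generator) -> np.ndarray:
    """Return a randomly perturbed copy of an HxWxC uint8 image."""
    out = image.astype(np.float32)
    # Brightness: shift every pixel by the same random offset.
    out += rng.uniform(-40.0, 40.0)
    # Contrast: stretch or compress deviations from the mean intensity.
    mean = out.mean()
    out = (out - mean) * rng.uniform(0.7, 1.3) + mean
    # Sensor noise: add per-pixel Gaussian noise.
    out += rng.normal(0.0, 8.0, size=out.shape)
    # Geometry: flip left-right half of the time (a simple viewpoint change).
    if rng.random() < 0.5:
        out = out[:, ::-1, :]
    # Clip back to the valid pixel range before converting to uint8.
    return np.clip(out, 0, 255).astype(np.uint8)


rng = np.random.default_rng(seed=0)
image = np.full((64, 64, 3), 128, dtype=np.uint8)  # placeholder gray image
batch = [augment(image, rng) for _ in range(4)]
```

Seeding the generator keeps augmented training runs reproducible while still producing a different variant of each image on every call.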

2. Model architecture choices for production

Selecting the right neural network is a trade-off. Lightweight architectures like MobileNet enable fast inference on resource-constrained devices, while deeper networks are used where maximum precision is required.
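Part of what makes MobileNet lightweight is its use of depthwise separable convolutions, which split a standard convolution into a per-channel spatial filter and a 1x1 channel-mixing step. The hypothetical helpers below compare weight counts for a single 3x3 layer with 256 input and 256 output channels (biases ignored):

```python
def conv_params(k: int, c_in: int, c_out: int) -> int:
    """Weights in a standard k x k convolution (biases ignored)."""
    return k * k * c_in * c_out


def separable_params(k: int, c_in: int, c_out: int) -> int:
    """Weights in a depthwise k x k convolution followed by a 1x1 pointwise convolution."""
    depthwise = k * k * c_in  # one k x k filter per input channel
    pointwise = c_in * c_out  # 1x1 convolution that mixes channels
    return depthwise + pointwise


standard = conv_params(3, 256, 256)
separable = separable_params(3, 256, 256)
print(standard, separable, round(standard / separable, 1))  # 589824 67840 8.7
```

For this layer the separable version needs roughly 8.7 times fewer weights, and the multiply-add count per output position shrinks by the same factor. Actual speedups also depend on the target hardware and kernel support.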

For specialized tasks like human pose estimation in workplace safety applications, frameworks like PyTorch with MMPOSE provide the architectural flexibility needed for accurate joint detection across diverse environments. Matching the architecture to the deployment context keeps the balance between speed and accuracy right.
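Pose estimation models often output 2D keypoint coordinates, and an ergonomics or safety application then has to turn those points into interpretable measurements. A common approach is to compute the angle at a joint from three keypoints. The sketch below is framework-agnostic; the `joint_angle` helper and the example coordinates are hypothetical and are not part of the MMPOSE API.

```python
import math

Point = tuple[float, float]


def joint_angle(a: Point, b: Point, c: Point) -> float:
    """Angle in degrees at keypoint b, between segments b->a and b->c."""
    v1 = (a[0] - b[0], a[1] - b[1])
    v2 = (c[0] - b[0], c[1] - b[1])
    norm = math.hypot(*v1) * math.hypot(*v2)
    if norm == 0:
        raise ValueError("keypoints must be distinct")
    cos_theta = (v1[0] * v2[0] + v1[1] * v2[1]) / norm
    # Clamp to [-1, 1] so floating-point overshoot cannot break acos.
    cos_theta = max(-1.0, min(1.0, cos_theta))
    return math.degrees(math.acos(cos_theta))


# Hypothetical pixel coordinates for shoulder, elbow, and wrist from a pose model.
shoulder, elbow, wrist = (100.0, 100.0), (100.0, 200.0), (200.0, 200.0)
print(round(joint_angle(shoulder, elbow, wrist)))  # 90
```

Which angles matter, and what ranges count as risky, would come from the ergonomic domain rules of a given project rather than from the model.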

3. Infrastructure and scaling considerations

Large-scale computer vision relies on the proper infrastructure. Cloud deployments handle massive image streams at scale, whereas edge devices offer real-time processing for latency-sensitive use cases. A well-designed infrastructure plan also includes monitoring pipelines to track drift and optimize cost.
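One way to reason about the cloud-versus-edge decision is a per-frame latency budget: a 30 fps stream leaves about 33 ms per frame, and a cloud deployment must fit both the network round trip and inference into that window. The sketch below encodes this check. The `DeploymentOption` type and all latency figures are hypothetical placeholders, not measurements, and the check ignores queuing and throughput effects.

```python
from dataclasses import dataclass


@dataclass
class DeploymentOption:
    name: str
    inference_ms: float  # model execution time on the target hardware
    network_ms: float    # round trip for the frame and the result (0 on-device)

    @property
    def total_ms(self) -> float:
        return self.inference_ms + self.network_ms


def feasible(options: list[DeploymentOption], budget_ms: float) -> list[str]:
    """Names of options whose end-to-end latency fits the per-frame budget."""
    return [o.name for o in options if o.total_ms <= budget_ms]


budget = 1000 / 30  # roughly 33.3 ms per frame at 30 fps
options = [
    DeploymentOption("edge", inference_ms=25.0, network_ms=0.0),   # hypothetical figures
    DeploymentOption("cloud", inference_ms=8.0, network_ms=60.0),  # hypothetical figures
]
print(feasible(options, budget))  # ['edge']
```

With a looser budget, for example batch analysis of stored footage, the faster cloud hardware would qualify as well, which is why the latency requirement of the use case should drive the choice.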

4. Monitoring and maintenance strategies

Deployed models require continuous monitoring to detect performance degradation over time. Model drift occurs when real-world data differs from training datasets, while infrastructure monitoring ensures system reliability.

Establishing feedback loops and retraining schedules keeps computer vision systems performing optimally as conditions change.
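A simple way to detect input drift is to compare the distribution of a summary statistic, such as mean image brightness or prediction confidence, between training data and live traffic. The sketch below uses the Population Stability Index (PSI), one common drift metric among several. The data is synthetic, and the 0.2 alert threshold is a frequently cited rule of thumb rather than a universal standard.

```python
import math
from collections.abc import Sequence


def psi(expected: Sequence[float], actual: Sequence[float], bins: int = 10,
        eps: float = 1e-6) -> float:
    """Population Stability Index between a reference sample and a live sample."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if the reference is constant

    def proportions(values: Sequence[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            # Bin edges come from the reference range; outliers go to the edge bins.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # eps keeps empty bins from producing log(0) or division by zero.
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Synthetic mean-brightness values: training data vs. a darker live camera feed.
train_brightness = [80 + i % 100 for i in range(1000)]
live_brightness = [v - 50 for v in train_brightness]

DRIFT_THRESHOLD = 0.2  # rule-of-thumb alert level, an assumption to tune per use case
score = psi(train_brightness, live_brightness)
print(f"PSI = {score:.2f}, drift = {score > DRIFT_THRESHOLD}")
```

A score above the threshold would typically trigger investigation or feed a retraining schedule rather than an automatic model swap.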

Taken together, these strategies form the backbone of reliable CV implementation.

Ready to build computer vision systems that actually work in real-world conditions? Let's partner up.

Steps to implement a computer vision project

Building a computer vision system requires a disciplined step-by-step process. Below is a structured roadmap showing how to implement computer vision effectively, moving from prototype to production in a way that ensures long-term scalability.

Problem definition

Every effort starts with defining the business challenge. For instance, in a retail environment, the goal could be to automatically track inventory levels and detect product placement issues through visual monitoring. A clear definition lets the team tie the computer vision project to measurable KPIs.
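Making KPIs measurable can be as simple as writing them down as explicit, checkable targets before any model is trained. The sketch below is hypothetical: the `KpiTarget` type, metric names, target values, and pilot numbers are placeholders for a retail shelf-monitoring example, not real project figures.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class KpiTarget:
    name: str
    target: float
    higher_is_better: bool = True

    def met(self, observed: float) -> bool:
        """True if the observed value satisfies the target in the right direction."""
        if self.higher_is_better:
            return observed >= self.target
        return observed <= self.target


# Hypothetical targets for a retail shelf-monitoring pilot.
targets = [
    KpiTarget("out_of_stock_recall", 0.90),
    KpiTarget("misplacement_precision", 0.85),
    KpiTarget("alert_latency_minutes", 15.0, higher_is_better=False),
]
# Hypothetical pilot measurements.
pilot = {"out_of_stock_recall": 0.93, "misplacement_precision": 0.81,
         "alert_latency_minutes": 12.0}

failing = [t.name for t in targets if not t.met(pilot[t.name])]
print(failing)  # ['misplacement_precision']
```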

Data collection & annotation

Gathering diverse datasets and annotating them correctly is crucial. Poor labeling leads to inaccurate predictions, while balanced data helps prevent bias. In healthcare projects, for instance, images must represent different devices, lighting conditions, and patient demographics to produce robust outcomes.
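One way to quantify labeling quality is to have two annotators label the same sample and measure their agreement with Cohen's kappa, which corrects raw agreement for the agreement expected by chance. The implementation below is a minimal sketch, and the labels are made up for illustration.

```python
from collections import Counter
from collections.abc import Hashable, Sequence


def cohens_kappa(rater_a: Sequence[Hashable], rater_b: Sequence[Hashable]) -> float:
    """Agreement between two annotators, corrected for chance agreement."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("need two non-empty label lists of equal length")
    n = len(rater_a)
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    # Chance that both raters pick the same label, given each rater's label frequencies.
    expected = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used one identical label throughout; kappa is undefined
    return (observed - expected) / (1 - expected)


annotator_a = ["defect", "ok", "ok", "defect", "ok", "ok"]
annotator_b = ["defect", "ok", "defect", "defect", "ok", "ok"]
print(round(cohens_kappa(annotator_a, annotator_b), 2))  # 0.67
```

Low agreement on a sample usually points to ambiguous labeling guidelines, which are cheaper to fix before the full dataset is annotated.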

Model selection & training

Choosing an architecture is context-driven. CNNs remain strong for classification, while transformer-based networks are advancing in multi-task performance. Training involves multiple iterations, with hyperparameter tuning and cross-validation. Using pre-trained models often accelerates computer vision project implementation while cutting costs.
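Cross-validation splits the data into k folds and rotates which fold is held out for validation, so every sample is used for evaluation exactly once. The minimal index generator below illustrates the idea; `k_fold_indices` is a hypothetical helper. In practice, scikit-learn's `KFold` and `GroupKFold` cover this, and grouping by patient, device, or camera helps keep near-duplicate images from appearing in both training and validation folds.

```python
import random
from collections.abc import Iterator


def k_fold_indices(n: int, k: int, seed: int = 0) -> Iterator[tuple[list[int], list[int]]]:
    """Yield (train_idx, val_idx) pairs; each sample lands in exactly one validation fold."""
    if not 2 <= k <= n:
        raise ValueError("k must be between 2 and n")
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    # Spread the remainder so fold sizes differ by at most one.
    fold_sizes = [n // k + (1 if i < n % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        val = idx[start:start + size]
        train = idx[:start] + idx[start + size:]
        yield train, val
        start += size


for train, val in k_fold_indices(n=10, k=3):
    print(len(train), len(val))  # 6 4, then 7 3, then 7 3
```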

Evaluation & optimization

Testing methodologies must reflect real-world scenarios. This includes running pilots under different conditions to see how models handle noise, blur, or environmental variability. Optimization through pruning and quantization allows deployment on devices with limited computational power.
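To make pruning and quantization concrete, the NumPy sketch below applies magnitude pruning (zeroing the smallest weights) and symmetric per-tensor int8 quantization to a random weight matrix. It illustrates the arithmetic only; production deployments would normally rely on framework tooling such as PyTorch's pruning and quantization utilities or TensorFlow Lite, and the 50% sparsity level here is arbitrary.

```python
import numpy as np


def magnitude_prune(weights: np.ndarray, sparsity: float) -> np.ndarray:
    """Zero out roughly the smallest-magnitude fraction `sparsity` of weights."""
    k = int(sparsity * weights.size)
    if k == 0:
        return weights.copy()
    # k-th smallest absolute value; ties at the threshold may prune slightly more.
    threshold = np.partition(np.abs(weights).ravel(), k - 1)[k - 1]
    return np.where(np.abs(weights) <= threshold, 0.0, weights)


def quantize_int8(weights: np.ndarray) -> tuple[np.ndarray, float]:
    """Symmetric per-tensor int8 quantization, so that w is approximately q * scale."""
    max_abs = float(np.abs(weights).max())
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = np.clip(np.round(weights / scale), -127, 127).astype(np.int8)
    return q, scale


rng = np.random.default_rng(0)
w = rng.normal(0.0, 0.1, size=(64, 64)).astype(np.float32)
pruned = magnitude_prune(w, sparsity=0.5)
q, scale = quantize_int8(pruned)
restored = q.astype(np.float32) * scale
print(f"zeros: {np.mean(pruned == 0):.2f}, max error: {np.abs(restored - pruned).max():.4f}")
```

Storing `q` instead of 32-bit floats cuts weight memory by about four times, at the cost of a rounding error of at most half of `scale` per weight.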

Deployment & integration

Deploying computer vision at scale means thinking beyond the model itself. Integration with existing infrastructure, performance monitoring, and model drift detection are all essential.
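Performance monitoring in production often focuses on tail latency rather than averages, since occasional slow frames are what break real-time use cases. The sketch below keeps a rolling window of inference latencies and flags when the 95th percentile exceeds a limit; the `LatencyMonitor` class, the window size, and the 50 ms limit are hypothetical choices for illustration.

```python
import statistics
from collections import deque


class LatencyMonitor:
    """Track a rolling window of inference latencies and flag p95 regressions."""

    def __init__(self, window: int = 500, p95_limit_ms: float = 50.0):
        self.samples: deque[float] = deque(maxlen=window)  # oldest samples drop off
        self.p95_limit_ms = p95_limit_ms

    def record(self, latency_ms: float) -> None:
        self.samples.append(latency_ms)

    def p95(self) -> float:
        if len(self.samples) < 2:
            raise ValueError("need at least two samples")
        # quantiles(n=20) returns 19 cut points; the last one is the 95th percentile.
        return statistics.quantiles(self.samples, n=20)[-1]

    def breached(self) -> bool:
        return self.p95() > self.p95_limit_ms


monitor = LatencyMonitor(window=100, p95_limit_ms=50.0)
for latency in [12.0, 15.0, 11.0, 14.0, 13.0]:
    monitor.record(latency)
print(monitor.breached())  # False
```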

By moving step by step, from problem framing to integration, organizations can implement computer vision projects that deliver consistent performance, scale efficiently, and evolve as conditions change.

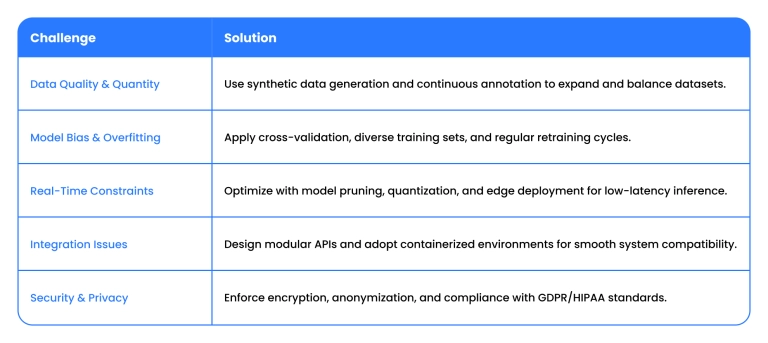

Common challenges in computer vision

When moving from prototypes to production, teams encounter several recurring issues. These computer vision problems can impact accuracy, scalability, and security if not addressed early. Below are the most common challenges in computer vision:

- Data quality and quantity – insufficient or poorly labeled datasets reduce accuracy and limit generalization.

- Model generalization & bias – models that overfit to limited or unrepresentative training data generalize poorly and can produce biased outcomes in real-world use.

- Real-time processing constraints – high latency prevents reliable deployment in time-sensitive scenarios like autonomous driving.

- Integration with legacy infrastructure – connecting new CV systems to existing workflows and software stacks often proves complex.

- Security and privacy risks – handling sensitive images, such as medical scans or facial data, raises compliance and ethical concerns.
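For the data quality and bias challenge, a useful first check is the class distribution. The sketch below computes inverse-frequency class weights using the same formula as scikit-learn's "balanced" heuristic; `class_weights` is a hypothetical helper and the defect labels are made up. Such weights can be passed to a loss function (for example, the `weight` argument of PyTorch's `CrossEntropyLoss`), although reweighting does not fix data that fails to represent real-world conditions.

```python
from collections import Counter


def class_weights(labels: list[str]) -> dict[str, float]:
    """Inverse-frequency weights: n_samples / (n_classes * count_of_class)."""
    counts = Counter(labels)
    n, k = len(labels), len(counts)
    return {cls: n / (k * c) for cls, c in counts.items()}


labels = ["ok"] * 900 + ["scratch"] * 80 + ["dent"] * 20  # hypothetical defect labels
weights = class_weights(labels)
print({cls: round(w, 2) for cls, w in weights.items()})
# {'ok': 0.37, 'scratch': 4.17, 'dent': 16.67}
```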

Practical solutions to overcome these challenges

Best practices can help organizations bypass the most frequent roadblocks. The table below outlines computer vision solutions mapped directly to typical challenges:

Real-world examples of Binariks' computer vision projects

Binariks has successfully delivered advanced computer vision solutions across diverse industries, transforming complex business challenges into automated, scalable systems.

Here are two case studies that demonstrate Binariks' technical expertise and strategic approach to AI implementation.

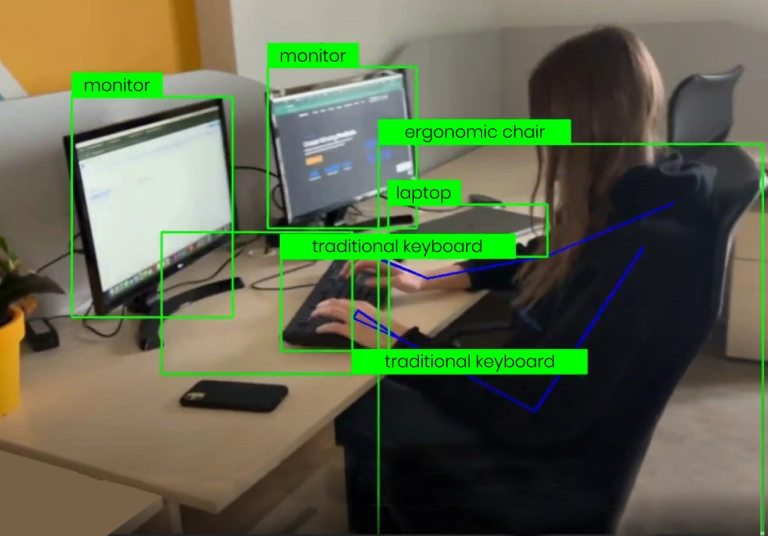

AI-powered workplace ergonomics assessment

Our client was a US-based occupational health company employing over 200 specialists dedicated to improving workplace safety and reducing long-term musculoskeletal risks. The company helps organizations across facilities, warehouses, and offices adopt healthier, more ergonomic practices through comprehensive assessment solutions.

Traditional professional ergonomic assessments were effective but proved too resource-intensive and time-consuming for large-scale implementation. While virtual assessments offered some improvement, they still required significant professional oversight for data collection and analysis, limiting scalability.

The company's attempt to address this through employee self-assessments revealed new challenges: these evaluations were highly inconsistent and prone to human error across diverse office environments.

The challenge

The client faced a complex set of scalability and accuracy issues:

- Professional assessments: Highly accurate but too resource-intensive and slow for large-scale deployment

- Self-assessments: Fast but inconsistent and prone to human error

- Complex environments: Diverse office setups with variable lighting, furniture layouts, and device configurations

The company needed an AI-driven solution to automate posture and workspace evaluations while maintaining professional-grade accuracy and enabling seamless scaling across different organizational environments.

Binariks' solution

Core computer vision technologies:

- Deep learning framework: PyTorch with MMPOSE integration

- Pose estimation models: Custom-trained for office environments

- Object detection: Office furniture and equipment recognition

- Cloud infrastructure: AWS SageMaker for scalable training and deployment

Key technical components:

- Custom pose detection models optimized for complex office environments

- Real-time video and image processing for immediate assessment feedback

- Event-driven AWS architecture using Lambda functions and DynamoDB

- Label Studio integration for efficient dataset creation and annotation

- Multi-environment adaptation handling diverse lighting and spatial conditions

Dual-functionality design:

- Employee self-assessment: Automated evaluation with pre-filled data based on AI analysis

- Professional review mode: Structured data capture for expert ergonomist analysis

Measurable impact

Business outcomes:

- Process automation: Eliminated manual bottlenecks in ergonomic evaluations

- Scalability achievement: Enabled company-wide assessments without proportional resource increase

- Competitive advantage: Modernized service offering with AI-first approach

- Data quality: Consistent, structured assessments across diverse office environments

Technical success:

- High-accuracy pose detection in challenging office lighting conditions

- Seamless integration with existing client platforms

- Full ownership transfer of AI/ML models and intellectual property

- Future-ready architecture supporting 3D spatial risk analysis expansion

Real-time surgical scene recognition for OR optimization

A London-based health tech company has built a leading global healthcare platform integrating telepresence, content management, and data insights.

This platform now serves over 800 hospitals across 50+ countries, supporting over 16,000 users and collaborating with 40 medical device organizations. Modern operating rooms represent hospitals' most valuable and resource-intensive assets, yet inefficiencies in surgical scheduling and room turnover continue to plague healthcare facilities.

Many hospitals struggle to track OR availability in real time, resulting in underutilized facilities, prolonged patient wait times, and significant resource waste. The client recognized this challenge and saw an opportunity to leverage AI-powered video analysis to transform OR utilization.

The challenge

Operating room inefficiencies created multiple pain points across the healthcare system:

- Scheduling delays: Inability to track OR availability in real time

- Resource waste: Underutilized facilities due to poor turnover visibility

- Patient impact: Prolonged wait times affecting care quality

- Data gaps: Limited insights into Operating Room Effectiveness (ORE)

The client needed AI-powered video recognition to identify critical surgical milestones and optimize OR utilization across their extensive hospital network.

Binariks' solution

Advanced computer vision architecture:

- Model selection: TimeSformer (selected after a comprehensive evaluation of MoViNets, TSM, and 3D CNN alternatives)

- Training data: 500+ hours of annotated surgical videos

- Real-time processing: GStreamer integration for live inference

- Cloud deployment: AWS SageMaker with EC2 experimentation environment

Research-driven development process:

- Comprehensive model evaluation: Systematic comparison of state-of-the-art video recognition architectures

- Custom data pipeline: Specialized preprocessing for surgical video analysis

- Scalable deployment: Cloud-native architecture for a global hospital network

Event recognition capabilities:

Measurable impact

Proof of concept success:

- 82% accuracy achieved in surgical event recognition

- Real-time performance enabling immediate scheduling optimization

Business value delivered:

- Operational efficiency: Automated OR status tracking reduces downtime

- Data-driven insights: Precise ORE metrics identify optimization opportunities

- Scalable solution: Cloud-native design supports global deployment across 800+ hospitals

- Future growth foundation: Established groundwork for predictive analytics and deeper AI-driven process optimization

For detailed technical specifications, implementation timelines, and additional project insights, explore the complete case studies: AI-powered workplace ergonomics assessment and Real-time surgical scene recognition solution.

How to start your computer vision implementation

The first step for companies considering computer vision adoption is to identify a precise business case and clear expected outcomes. Without this foundation, problems tend to surface later, during deployment.

Successful projects also require the right infrastructure strategy. Cloud services enable scalability, while edge deployments reduce latency for real-time use cases. Planning ahead for model monitoring, retraining, and cost control is essential, since even the most accurate models degrade as real-world data drifts over time.

Key steps to start your implementation:

- Define the use case: Identify a business problem where vision systems add measurable value (e.g., fraud detection, medical diagnostics).

- Secure quality data: Collect and annotate diverse datasets that mirror real-world conditions to reduce bias and ensure generalization.

- Select infrastructure: Decide between cloud, edge, or hybrid deployment models depending on latency, cost, and scalability needs.

- Plan for lifecycle management: Include monitoring, retraining workflows, and drift detection to keep models accurate over time.

- Prioritize integration: Ensure CV solutions fit seamlessly into existing enterprise systems and workflows.

- Leverage technical expertise: Partner with specialists who provide end-to-end support, from early prototyping to enterprise-grade deployment.

Working with a full-cycle partner accelerates progress and minimizes risks. An experienced provider offers guidance across all stages, from prototyping to deployment, monitoring, and long-term optimization.

Contact Binariks to discuss how we can help you implement computer vision solutions that deliver measurable results.
