Healthcare organizations face a stark reality: the average cost of a healthcare data breach climbed to $10.93 million in 2023, according to IBM's Cost of a Data Breach Report. Patient trust and hefty regulatory penalties are always on the line.

Now, with AI and large language models (LLMs) gaining traction in clinical workflows, HIPAA compliance isn't just a formality – it's a business necessity. These AI tools process huge amounts of private data, introduce new risks, and call for stronger safeguards, all while regulators scramble to keep up.

This guide offers clear steps for rolling out HIPAA-compliant AI systems, from getting your patient data ready to picking vendors that put privacy first. We'll walk through practical scenarios and smart tactics to help you avoid breaches, connect AI with old-school IT, and set your team up for secure, ethical results.

What you'll learn:

- Real-world risks and trends in healthcare AI privacy

- How to evaluate vendors and negotiate Business Associate Agreements (BAAs)

- Strategies for rolling out AI: self-hosted, cloud, and healthcare-specific vendors

- Best practices in data preparation, security, and how to handle breaches

- Change management tips for ethical, transparent AI adoption

HIPAA compliance and regulatory landscape in healthcare AI

Let's be honest: rushing into AI in healthcare feels risky. You're juggling sensitive patient data and strict regulations – one slip-up could cost you dearly. That's why HIPAA compliance matters even more when you introduce AI and LLMs.

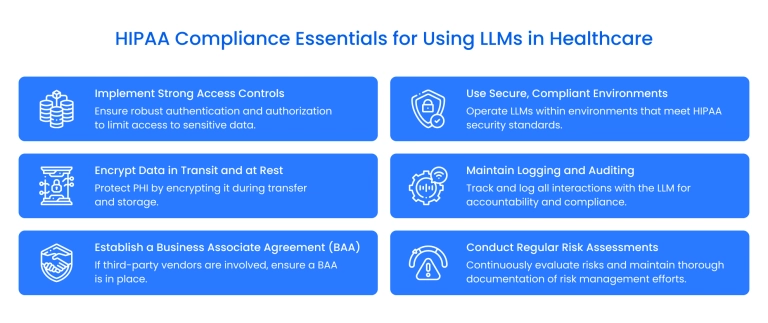

What HIPAA really means for AI and LLMs

Protecting patient privacy isn't up for debate. HIPAA sets the rules for handling Protected Health Information (PHI), and any AI tool dealing with patient records, treatments, or clinical notes must stay compliant. Using AI introduces new challenges: these systems sift through massive volumes of text, images, and even live conversations. They can surface patterns, but can just as easily leak private details if not tightly managed.

You need systems in place to de-identify data (for example, with HIPAA's Safe Harbor method, which removes 18 specific identifiers, or with Expert Determination), audit, and control access at every stage – training, running, storing, and sharing AI data. HIPAA civil penalties are tiered by culpability and can reach $50,000 or more per violation, with the dollar amounts adjusted annually for inflation. Breaches also damage reputations and careers. Meeting HIPAA standards is the starting point; protecting your patients and brand means going beyond the bare minimum.

Risks: PHI exposure, data breaches, and re-identification

AI offers smarter diagnostics, streamlined workflows, and personalized care. But with every new tool, new risks emerge. Here's what you need to watch:

PHI exposure:

AI models need large volumes of training data, often pulled directly from patient records. If that data isn't properly de-identified, private information can surface in AI responses. This is training data leakage: an LLM doesn't "remember" patients in a human sense, but it can memorize passages from its training set and reproduce them verbatim.

Scenario: Say you're using an LLM to summarize doctor notes. If the notes used to train or fine-tune it were not adequately de-identified, patient identifiers could appear in the summaries it generates.
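To make the scenario concrete, here is a minimal sketch of an output check that scans a model's summary for PHI-like strings before it reaches a user. The pattern set, the `redact_output` name, and the tag format are our own illustrative assumptions, not a standard. Regex matching only catches obvious formats, so treat it as a last line of defense rather than a replacement for de-identifying training data.

```python
import re

# Hypothetical patterns for illustration only; real PHI detection needs
# far broader coverage (names, addresses, free-text dates, and so on).
PHI_PATTERNS = {
    "ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "phone": re.compile(r"\b\d{3}[-. ]\d{3}[-. ]\d{4}\b"),
    "mrn": re.compile(r"\bMRN[:#]?\s*\d{6,10}\b", re.IGNORECASE),
    "date": re.compile(r"\b\d{1,2}/\d{1,2}/\d{4}\b"),
}


def redact_output(text: str) -> tuple[str, list[str]]:
    """Replace PHI-like matches with a tag and report which kinds were found."""
    found = []
    for label, pattern in PHI_PATTERNS.items():
        text, count = pattern.subn(f"[REDACTED-{label.upper()}]", text)
        if count:
            found.append(label)
    return text, found
```

A non-empty list of findings is also a useful signal to log and review, since it suggests identifiers are reaching the model somewhere upstream.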

Data breaches:

Healthcare tops the list for data breaches. Attackers target AI pipelines because they connect various systems – one weak spot could expose everything.

Re-identification threats:

Even data that looks anonymous isn't always safe. Advanced re-identification techniques include linkage attacks (combining de-identified datasets with external data sources), model inversion attacks (extracting training data from AI models), and membership inference attacks (determining if specific patient data was used in training). AI models, by design, are good at spotting patterns, making this risk even higher.

Bottom line: Never assume your data is safe just because you removed a name or ID. Regular reviews, strong encryption, and limiting exposure every step of the way are key.
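One way to gauge linkage risk is a k-anonymity style check: count how many records share each combination of quasi-identifiers, because a combination that appears only once is easy to match against an outside dataset. The sketch below assumes records are plain dictionaries; the field names and the threshold `k` are hypothetical choices for illustration.

```python
from collections import Counter


def _combo_counts(records, quasi_identifiers):
    """Count how many records share each quasi-identifier combination."""
    return Counter(
        tuple(record[field] for field in quasi_identifiers) for record in records
    )


def smallest_group_size(records, quasi_identifiers):
    """Return the size of the rarest combination (the dataset's k), or 0 if empty."""
    counts = _combo_counts(records, quasi_identifiers)
    return min(counts.values()) if counts else 0


def risky_records(records, quasi_identifiers, k=5):
    """Return records whose quasi-identifier combination appears fewer than k times."""
    counts = _combo_counts(records, quasi_identifiers)
    return [
        record
        for record in records
        if counts[tuple(record[field] for field in quasi_identifiers)] < k
    ]
```

Records flagged by `risky_records` are candidates for further generalization (wider age bands, coarser geography) or suppression before the data is shared or used for training.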

Changing regulatory requirements

Regulators know AI is changing healthcare fast. While HIPAA leads the way regarding privacy, new updates are coming.

What's current:

- The Office for Civil Rights (OCR) enforces HIPAA and publishes Security Rule guidance that applies to AI systems handling PHI ([HHS OCR Guidance](https://www.hhs.gov/hipaa/for-professionals/security/guidance/index.html)).

- The FDA now reviews AI/ML-based Software as a Medical Device (SaMD), with specific expectations for predetermined change control plans, algorithm change protocols, and real-world performance monitoring.

- States like California have added new rules (CPRA) for algorithm transparency and consumer rights.

What's ahead:

- Get ready to show how your AI makes decisions – "Explainable AI" is moving from a buzzword to a requirement.

- Federal proposals may soon require full "impact assessments" for any AI handling PHI.

- Vendors will need to prove they have reliable audit trails and emergency plans in place.

Rules are tightening, so acting now could save a lot of trouble later.

Vendor evaluation and signing BAAs

Picking an AI vendor isn't easy. Between shiny marketing promises and real risks, you must make sure you're choosing a trustworthy partner.

Checklist for vendor vetting:

- Security policies: Demand clear details on encryption, access, and their track record with breaches.

- Proof of compliance: Ask to see SOC 2 or ISO 27001 certifications, HIPAA audits, and a map of how they handle PHI.

- Staff training: Confirm that privacy is part of their training, not just a technical afterthought.

- Business Associate Agreements (BAAs):

If your vendor works with PHI, you need a BAA. The BAA should lay out who's responsible for what, how data is handled, and what happens if something goes wrong. Double-check:

- Clear lines on PHI usage

- Strict security protocols

- Detailed breach notification steps

- Controls for any subcontractors

Remember, BAAs aren't a magic shield. You still need to audit and review regularly.

Protecting against algorithmic bias and ensuring transparency

AI isn't neutral. Data and model choices can lead to real-world bias – think misdiagnosis or uneven care. Here's how to keep AI fair:

- Review training data: Make sure it's broad, clean, and free of stereotypes. Bring in outside specialists if needed.

- Insist on transparency: Know which inputs the AI uses and exactly how it reaches decisions.

- Test for bias regularly: Don't just check once; set up ongoing reviews using stats or trusted people.

- User control: Doctors and staff should always be able to see, question, and override what AI suggests.
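As a starting point for the recurring bias tests above, you can compare how often a model returns a positive result for each patient group. This sketch computes per-group positive rates and the gap between the highest and lowest (often called a demographic parity gap). The function names and group labels are hypothetical, and a single metric like this is only one signal; it does not prove a model is fair.

```python
from collections import defaultdict


def positive_rate_by_group(predictions, groups):
    """Share of positive predictions (1 or True) within each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for prediction, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += int(prediction)
    return {group: positives[group] / totals[group] for group in totals}


def parity_gap(rates):
    """Difference between the highest and lowest group rate."""
    return max(rates.values()) - min(rates.values())
```

Tracking the gap over time, rather than checking it once, is what turns this into an ongoing review.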

If AI in healthcare is on your radar, we want to be your long-term partner – not just a quick fix.

Binariks helps you build HIPAA-ready solutions and set up ongoing reviews so you stay ahead of changing rules. You get expert advice, reliable security, and the support you need, focused on growth and your reputation. Ready to move forward? We'll go carefully, together.


Deployment strategies for HIPAA-compliant AI solutions

Rolling out an AI system that fits your healthcare practice – without creating compliance headaches – is a tough puzzle. Maybe you're choosing between cloud platforms and running models on your own hardware. Maybe you're stuck on costs, value, or integrating with existing systems.

Let's break down your real options, what's working for healthcare today, and how to find the best return on your investment.

Self-hosted, cloud, or specialized vendors – which model fits?

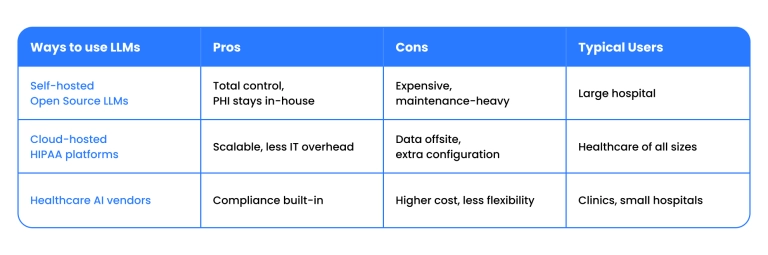

Self-hosted open-source LLMs

If privacy and control top your list, use open-source models on your own gear. Your data never leaves your site, which is great for HIPAA. The downside? You have to handle everything – hardware, updates, hiring tech experts, and scaling as your needs grow. This route fits big organizations with solid IT teams who can front the cost.

Cloud-hosted HIPAA-capable platforms

Cloud giants like AWS and Google Cloud offer environments that can be configured to support HIPAA compliance. You get easy scaling, managed services, and strong infrastructure. However, you need a signed BAA, must keep PHI on the services the provider lists as HIPAA-eligible, and must manage access carefully. It's less technical work for you, but some organizations still worry about sharing PHI offsite.

Specialized healthcare vendors

If you don't have deep IT resources, healthcare-specific vendors bring ready-to-integrate solutions designed for clinical use and built with HIPAA requirements in mind. They handle much of the compliance work and workflow setup. There is no official HIPAA certification, so you'll need to verify their third-party audit reports and attestations, but most of the complexity is handled.

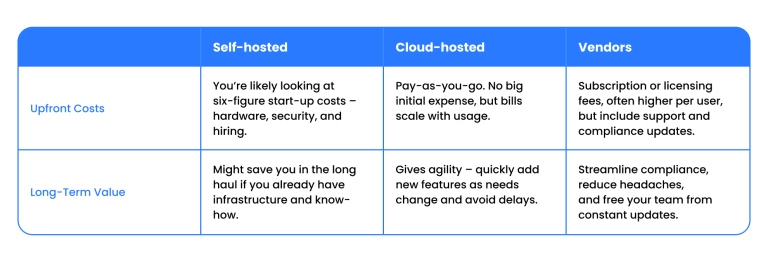

Cost-benefit and ROI

Budgets are tight, and every tech dollar should count.

Your best choice depends on your organization and current resources.

- Big hospitals may come out ahead with self-hosted solutions over a five-year horizon, especially if they're already invested in infrastructure.

- Regional clinics and growing practices often see faster deployment and less regulatory risk with vendor platforms.
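The comparison above comes down to simple arithmetic: self-hosting usually means a larger upfront cost and lower recurring fees, so it pays off only after some break-even year. The sketch below assumes a deliberately simplified model (one upfront cost plus a flat annual cost, with no discounting or staffing changes). Any figures you pass in are your own estimates; the function names are hypothetical.

```python
def cumulative_cost(upfront, annual, years):
    """Total spend after a number of years under a flat annual cost."""
    return upfront + annual * years


def break_even_year(self_hosted, vendor, horizon=10):
    """First year in which self-hosting is cheaper overall, or None within the horizon.

    self_hosted and vendor are (upfront, annual) tuples in the same currency.
    """
    for year in range(1, horizon + 1):
        if cumulative_cost(*self_hosted, year) < cumulative_cost(*vendor, year):
            return year
    return None
```

With placeholder inputs such as `break_even_year((500_000, 100_000), (50_000, 220_000))`, the function returns 4, meaning self-hosting would win inside a five-year window under those assumed numbers. A result of `None` suggests the vendor route stays cheaper over the horizon you chose.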

Making AI work with legacy IT

Old EMRs, billing systems, and clinical tools don't play nice with new AI tech. Here’s how real teams get past roadblocks:

- Start with standards: Use HL7, FHIR, or familiar APIs – less risk than custom builds.

- Middleware tools: Solutions like Mirth Connect or Redox can smooth the gap between older systems and modern AI.

- Pilot first: Try AI in a single department. Lessons from small rollouts save big headaches later.

- Staff training: Upskilling everyone helps boost adoption rates. Run workshops and share cheat sheets for new tasks.

- Tap vendor help early: Lean on your vendor’s support team – they’ve seen the same problems before and can save you weeks.

Staggered, parallel launches with backup data copies are the safest way to test, spot bugs, and protect patient care.
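To illustrate the "start with standards" advice, here is a small sketch that reads a FHIR Patient resource as JSON and passes along only the fields a downstream AI service might need. The `minimal_patient_view` name and the choice of fields are our assumptions; the keys `resourceType`, `id`, `gender`, and `birthDate` come from the FHIR Patient resource. Note that this is a minimum-necessary filter, not de-identification, because the resource `id` is kept.

```python
import json


def minimal_patient_view(resource_json: str) -> dict:
    """Keep only id, gender, and birth year from a FHIR Patient resource."""
    resource = json.loads(resource_json)
    if resource.get("resourceType") != "Patient":
        raise ValueError("expected a FHIR Patient resource")
    # FHIR birthDate is YYYY, YYYY-MM, or YYYY-MM-DD, so the year is the first 4 characters.
    birth_date = resource.get("birthDate", "")
    return {
        "id": resource.get("id"),
        "gender": resource.get("gender"),
        "birth_year": birth_date[:4] or None,
    }
```

Names, addresses, and contact details in the source resource never leave the integration layer, which keeps the AI side of the bridge simpler to secure.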

Rolling out HIPAA-compliant AI isn't just about picking the flashiest tech. You need to match tools to your team, workflows, and risk tolerance. Whether you want hands-on control or prefer a service bundle, prioritize flexibility and tight data protection. Your choice sets the framework for smarter, safer care.

Keeping data safe: Preparation, privacy, and security

Healthcare data holds huge promise for AI – and big risks. If you're worried about privacy lapses or unsure if your tech is secure, you're absolutely right to look for real answers. We get it and are here to help you shore up data protection with practical, ongoing support.

Data preparation: Scrubbing, organizing, classifying

Before AI can work, your data must be in order. Healthcare info comes in many forms – appointments, insurance, prescriptions, even handwritten notes. Careful preparation means no surprises down the road.

- De-identification and data scrubbing: Use HIPAA's Safe Harbor method to remove 18 specific identifiers (names, addresses, birth dates, medical record numbers, etc.). Note that this creates de-identified data, not truly anonymous data – re-identification may still be possible through sophisticated linkage attacks.

- Labeling and classification: Tag PHI and other sensitive details so only the right staff – and systems – can access them. This helps decide who gets to see what and which data needs extra locks.

Prepped, clean data lets your team relax, knowing privacy isn't just luck – it's baked in.
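For structured records, the scrubbing step can be sketched as dropping direct identifier fields and generalizing dates and ZIP codes. The field names below are hypothetical and cover only part of the 18 Safe Harbor identifiers. Safe Harbor also has rules this sketch leaves out, such as special handling for ages over 89 and for ZIP code prefixes covering small populations.

```python
# Hypothetical field names; map these to your own schema.
DIRECT_IDENTIFIER_FIELDS = {"name", "address", "phone", "email", "ssn", "mrn"}


def deidentify(record: dict) -> dict:
    """Return a copy of the record with direct identifiers removed or generalized."""
    clean = {
        key: value
        for key, value in record.items()
        if key not in DIRECT_IDENTIFIER_FIELDS
    }
    if "birth_date" in clean:
        # Keep only the year from an ISO date such as 1980-07-04.
        clean["birth_year"] = clean.pop("birth_date")[:4]
    if "zip" in clean:
        # Keep only the first three digits (small-population prefixes not handled here).
        clean["zip3"] = clean.pop("zip")[:3]
    return clean
```

Free-text fields such as clinical notes need separate treatment, since identifiers can hide inside the prose itself.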

Technical safeguards: The "lock every door" approach

After prepping, it's time to protect the data. Our toolkit includes:

- Encryption: Whether data is stored or in transit, it stays scrambled – using standards like AES-256 at rest and TLS in transit, so even well-equipped attackers get nothing readable.

- Role-based access (RBAC): Only authorized people get access, and only as much as needed. Lock things down by department, role, and need.

- Audit logging: Every touch is recorded. These logs help trace mistakes, prove compliance, and close the loop when something goes wrong.

- Advanced privacy techniques: Consider differential privacy during model training, federated learning for multi-site deployments, and homomorphic encryption for computation on encrypted data.

We walk through these controls with your team, so privacy becomes second nature.
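Role-based access and audit logging work best together: every access decision, allowed or denied, leaves a record. The sketch below shows the idea with an in-memory permission map and log. The roles, actions, and `check_access` function are hypothetical, and a production system would persist the log to tamper-resistant storage.

```python
from datetime import datetime, timezone

# Hypothetical roles and actions for illustration.
ROLE_PERMISSIONS = {
    "physician": {"read_phi", "write_notes"},
    "billing": {"read_billing"},
    "data_scientist": {"read_deidentified"},
}

audit_log = []


def check_access(user: str, role: str, action: str) -> bool:
    """Decide whether a role may perform an action, and record the decision."""
    allowed = action in ROLE_PERMISSIONS.get(role, set())
    audit_log.append(
        {
            "time": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "role": role,
            "action": action,
            "allowed": allowed,
        }
    )
    return allowed
```

Unknown roles fall through to an empty permission set, so the default is to deny, and denied attempts are logged alongside successful ones.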

Risk assessments: Routine checks and fixes

No system is perfect. Finding issues early is critical.

- Regular reviews: Network scans, code checks, and password audits prevent vulnerabilities from becoming full-blown breaches.

- Practical fixes: Each check is followed by clear action items your team can handle quickly.

- Ongoing learning: Every review builds your team’s knowledge, driving constant progress.

- Model-specific assessments: Evaluate different AI architectures for varying risk profiles – fine-tuned models have higher memorization risks, while RAG systems require vector database security.

Monitoring: Setting metrics that matter

You can't protect what you can't see. We set up dashboards that make issues visible:

- Non-compliance alerts: Spot unauthorized access, failed logins, or violated policies instantly.

- KPIs for security: Track how quickly incidents get fixed, numbers of breached records, and who's up-to-date on privacy training.

- Automated alerts: Get real-time warnings so you act right away, not just after quarterly reviews.

We show you which numbers matter, so you focus on effective oversight.
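Two of these metrics are simple to compute once events are collected: repeated failed logins per user, and the average time it takes to resolve an incident. The sketch below assumes events arrive as simple tuples; the function names and the default threshold of five failures are illustrative choices, not recommendations.

```python
from collections import Counter
from datetime import datetime, timedelta


def failed_login_alerts(events, threshold=5):
    """Return users with at least `threshold` failed logins.

    events: iterable of (user, succeeded) tuples from one monitoring window.
    """
    failures = Counter(user for user, succeeded in events if not succeeded)
    return sorted(user for user, count in failures.items() if count >= threshold)


def mean_hours_to_resolve(incidents):
    """Average resolution time in hours for (opened, resolved) datetime pairs."""
    if not incidents:
        return None
    total = sum((resolved - opened for opened, resolved in incidents), timedelta())
    return total.total_seconds() / 3600 / len(incidents)
```

Feeding these values into a dashboard or alerting rule is what turns raw logs into the real-time warnings described above.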

PHI and breach management: Being ready for anything

Breaches happen. Being prepared separates successful teams from regrettable headlines.

- Limit exposure: Systems show PHI only when absolutely needed and store it in ways that block re-identification.

- Incident response plans: Lay out clear steps – contain, investigate, notify, fix, and review.

- Staff training: Human mistakes make up much of the problem. Ongoing education keeps privacy sharp.

- Vetting vendors: Only let partners with tight privacy controls handle your data.

Steps for breach response

- Contain: Stop the spread, cut risky connections.

- Investigate: Dive into audit logs to understand what happened.

- Notify: Let impacted staff, patients, and regulators know.

- Remediate: Patch holes, update protocols, double-check fixes.

- Improve: Learn from every incident and update your safeguards.
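The notify step has hard deadlines, so it helps to compute them as soon as a breach is discovered. This sketch assumes the HIPAA Breach Notification Rule's outer limit of 60 calendar days for notifying affected individuals and its 500-individual threshold for larger breaches, which trigger additional notifications. State laws and your BAAs may set shorter deadlines, so confirm the details with counsel. The function and key names are hypothetical.

```python
from datetime import date, timedelta

INDIVIDUAL_NOTICE_DAYS = 60    # assumed outer limit after discovery
LARGE_BREACH_THRESHOLD = 500   # assumed threshold for additional notifications


def notification_plan(discovered: date, affected: int) -> dict:
    """Return the latest individual-notice date and whether the breach is 'large'."""
    return {
        "individual_notice_deadline": discovered
        + timedelta(days=INDIVIDUAL_NOTICE_DAYS),
        "large_breach": affected >= LARGE_BREACH_THRESHOLD,
    }
```

The deadline is a ceiling rather than a target: the rule expects notice without unreasonable delay, so most response plans aim to notify well before it.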

You're not alone – Binariks is your privacy ally.

We work side by side with your team, building strong safeguards and ongoing training so your organization stays prepared for every twist and turn. Our partnership gives you more than compliance – it's persistent protection you can count on. If you want a hands-on review or just a fresh look at your current risks, reach out. Your data deserves this level of care.

Operational integration, ethics, and change management

Bringing AI into healthcare isn't just a technical update – it's a human challenge. How you slot new systems into your old workflows, keep patient trust, and help staff feel confident matters just as much as the tech itself.

Making AI fit with legacy systems

You've spent years building out EMRs, billing, scheduling, and workflow apps. Adding AI can feel like swapping engines in a classic car – it's powerful but needs careful tuning. Integration works best when you build "bridges" instead of starting from scratch.

Our team looks at how your current workflows overlap with your new AI touchpoints. We're not after dramatic overhauls – just smart connectors that let familiar tools run alongside new features. Think of it as adding parallel train tracks so daily business continues without delay.

What works:

- Syncing data between old EMRs and AI dashboards

- Automating follow-ups so staff aren't bogged down

- Spotting workflow slowdowns before they become problems

Testing happens in real-life conditions, not just in the lab. That way, your team can rely on the results.

Ethics and patient consent

AI in healthcare means handling deeply personal data. Skipping due diligence here isn't just risky – it wrecks trust.

Patient consent should be clear and easy. Every request or access is logged – patients get straight-to-the-point opt-ins, never a mess of legalese. Staff understand the "Why" behind every rule, and there's a full audit trail for oversight teams.

Our focus:

- Training staff on new data rules and ethics

- Creating simple tools for patient data control

- Building solid records for every access and request

We make sure your team is ready for hard questions on fairness and transparency.
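A simple tool for patient data control can be sketched as a consent registry: each patient opts in or out per purpose, the default is to deny, and every change is kept in a history for oversight teams. The `ConsentRegistry` class and the purpose labels are hypothetical; a real system would store this in a database with proper identity checks.

```python
from datetime import datetime, timezone


class ConsentRegistry:
    """Tracks per-purpose patient consent and keeps a history of every change."""

    def __init__(self):
        self._consents = {}  # (patient_id, purpose) -> bool
        self.history = []    # (timestamp, patient_id, purpose, granted)

    def record(self, patient_id: str, purpose: str, granted: bool) -> None:
        """Store a patient's opt-in or opt-out and log when it happened."""
        self._consents[(patient_id, purpose)] = granted
        self.history.append(
            (datetime.now(timezone.utc), patient_id, purpose, granted)
        )

    def is_permitted(self, patient_id: str, purpose: str) -> bool:
        """No recorded consent means no permission."""
        return self._consents.get((patient_id, purpose), False)
```

Because revoking consent is just another `record` call, the history shows both the original opt-in and the later opt-out, which is what an auditor will ask to see.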

Getting your whole team on board

Rolling out AI is less about software and more about people. Some staff will welcome change; others may worry or resist.

Success means listening and supporting everyone. We start with candid, anonymous surveys to see how people feel and what worries them. Training focuses on what's needed, not generic tech talk. Support is ongoing, with follow-ups and troubleshooting as issues arise.

Steps we follow:

- Gauge feelings: Honest feedback from the team

- Targeted training: Focus on real-world needs and new skills

- Continuous help: Fast answers and regular check-ins for any hiccup

The best AI launch is one where everyone – from front desk to physicians – feels confident and included.

Private AI deployments: Maximum control

Cloud platforms have benefits, but sometimes you need tighter control. Private AI means data stays inside your organization, with access locked down and customization at your fingertips.

We help you:

- Keep data and models on your own servers

- Set custom rules and features specific to your workflow

- Guarantee data sovereignty for strict regulations

If strict local rules or extra privacy worries keep you up at night, private AI deployments offer peace of mind.

Risk management: Keeping compliance in check

Regulatory rules can change fast, and missing a requirement creates real risks. We help you stay ahead with:

- Automated audits: Catch privacy slip-ups so you can act right away

- Scenario planning: Practice responses for worst-case situations, from breaches to random inspections

Keep a compliance champion on your team – we'll help train them so every new feature and upgrade gets careful review.

Final thoughts

AI in healthcare should open doors, not headaches. By working together – with honest communication and practical fixes – you can roll out smart systems, protect what matters, and help everyone win. Questions, worries, or just getting started? Contact Binariks and let's build your roadmap.

Bringing AI into your healthcare organization is more than just a technical upgrade. It means building systems that respect patient trust, keep pace with AI regulations, and empower your team to succeed.

With the right strategies for compliance, privacy, and smooth adoption, you'll be ready to move ahead confidently. If you want to explore safe, smart AI – or you need a partner for the journey – let's make secure innovation happen together.
