Generative AI is now a core part of how organizations create, analyze, and communicate. It writes code, generates content, and supports decision-making, but it also opens new attack surfaces that traditional cybersecurity tools cannot fully protect.

Prompt injection, data poisoning, and model manipulation can expose confidential data or distort AI outputs, creating risks that extend far beyond technical flaws. This is where generative AI security becomes essential, introducing methods and policies to safeguard not only code but also the models and data that drive AI systems.

The rise of GenAI security reflects a shift in how companies approach protection. It combines access control, model governance, and continuous monitoring to secure AI throughout its lifecycle.

As more enterprises embed intelligent tools into their workflows, as described in generative AI in business , CISOs and developers must learn to build security directly into every prompt, dataset, and model output rather than adding it after deployment.

In this article, you'll learn:

- What GenAI security means and how it applies to modern AI systems

- Why conventional cybersecurity methods fall short for generative models

- Key risks CISOs must address before scaling AI initiatives

- Best practices for securing AI pipelines and user interactions

Understanding this emerging security layer is key to deploying AI responsibly and maintaining user trust.

What is generative AI security?

Generative AI security refers to a new approach that protects systems built on large language models and other generative technologies.

Unlike conventional cybersecurity, which focuses on network perimeters and code vulnerabilities, this field addresses risks specific to model behavior, data inputs, and AI-generated outputs. Its goal is to safeguard how models learn, respond, and interact with users — preventing threats such as data leakage, prompt injection, and model manipulation.

According to IBM's X-Force Threat Intelligence Index 2024 , "the rush to adopt GenAI is currently outpacing the industry's ability to understand the security risks these new capabilities will introduce". That warning highlights the urgency of creating dedicated defenses before large-scale attacks begin.

IBM's researchers predict that once generative AI platforms consolidate and dominate enterprise markets, organized cybercriminals will actively target them, just as they did with Windows Server and Microsoft 365 in the past.

Modern generative AI cybersecurity measures now extend beyond infrastructure to include prompt filtering, model validation, and continuous monitoring across the entire AI lifecycle.

Forward-thinking generative AI companies are already adopting these frameworks to build generative AI security solutions that maintain data integrity, protect intellectual property, and ensure user trust.

How does generative AI security work?

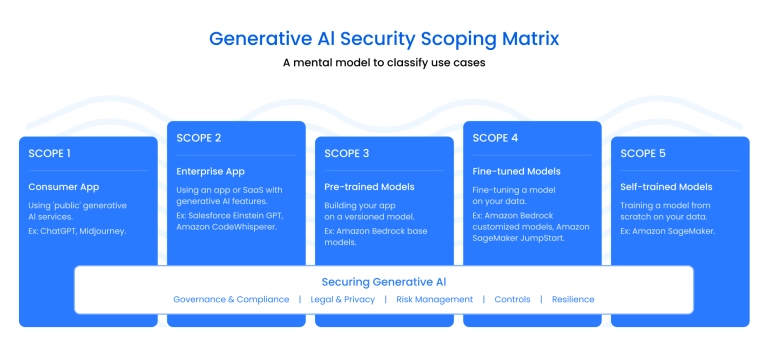

The visual above illustrates how security for generative AI spans the entire model lifecycle, from data collection and training to deployment and real-time interaction. Each layer represents a critical point of control, where companies must monitor how the model learns, responds, and generates content.

Traditional cybersecurity focuses on infrastructure and code, but generative systems require active oversight of data inputs, prompts, and outputs to prevent misuse or manipulation.

A reliable generative AI model security framework includes several key components:

- Input filtering and validation, to intercept malicious or misleading prompts before they reach the model.

- Output moderation to ensure the system doesn't generate or expose sensitive data.

- Data governance and encryption, protecting both training and inference data from breaches.

- Continuous threat modeling, adapting defenses as models evolve and face new attack types.

Forward-thinking organizations are now adopting AI-driven security strategies to automate these defenses. By combining AI monitoring with human oversight, companies can detect unusual model behavior early, prevent data exposure, and keep generative systems both productive and safe.

The importance of GenAI security

Generative models work with sensitive data, produce autonomous outputs, and influence business decisions, which makes security in generative AI a core requirement rather than a technical enhancement.

Without proper safeguards, models can reveal confidential information, generate misleading content, or be manipulated through crafted prompts. These failures lead to financial losses, compliance violations, and long-term damage to brand credibility.

Companies adopting advanced tools or scaling AI development services face these risks even more directly because AI becomes embedded in products, workflows, and customer interactions.

For CISOs, generative AI protection is now a policy and governance responsibility. They must define how training data is handled, who can access models, how outputs are monitored, and what controls prevent model abuse. This includes documenting risk thresholds, establishing review processes, and preparing incident response plans for AI-specific threats.

As adoption accelerates, organizations also turn to generative AI for security to detect anomalies in behavior, support compliance monitoring, and identify signs of manipulation earlier than manual review alone.

GenAI security matters because it preserves trust, protects data integrity, and ensures that AI-driven decisions remain reliable under real operational pressures.

The different types of GenAI security

Below is a refined overview of the core layers that shape generative AI application security. These areas help companies build safer AI systems as they expand with enterprise-grade tools supported by AI development services .

- Data security and governance

Manages how training and inference data is collected, anonymized, encrypted, and accessed. Proper controls prevent leakage and reduce the risk of corrupted datasets.

- Model security

Focuses on integrity checks, version control, and adversarial testing. This is a central part of generative AI and security, ensuring the model cannot be silently altered or manipulated.

- Prompt and input security

Filters and validates user input before it reaches the model. These controls block prompt injection attempts and reduce the risk of harmful or misleading instructions.

- Output monitoring and verification

Evaluates generated responses for hallucinations, sensitive information, and policy violations. This keeps model outputs safe, predictable, and compliant.

- Access control and identity management

Defines who can interact with datasets, internal tools, and model endpoints. Role-based access and authentication limit high-risk or unauthorized activity.

- Operational security

Includes runtime monitoring, anomaly detection, and incident response workflows. This protects live systems from suspicious behavior during real usage.

- Infrastructure and deployment security

Secures cloud environments, containers, pipelines, and compute resources. Even a well-trained model becomes vulnerable if its hosting environment is not protected.

- Governance and compliance

Establishes policies, audits, and risk documentation to align AI operations with legal and organizational requirements.

- Content integrity and anti-manipulation

Detects falsified or manipulated outputs, preventing downstream misuse and protecting decision-making processes.

- Abuse and misuse monitoring

Tracks activity patterns to identify probing, unauthorized testing, or boundary-pushing attempts. This layer reinforces long-term security of generative AI applications and helps systems adapt to emerging attack methods.

These components, together, form a clear, practical framework for building resilient, trustworthy generative AI protection across enterprise environments.

Why traditional security models fall short for generative AI

Traditional cybersecurity focuses on networks, code, endpoints, and user access. These models assume that threats target systems from the outside and that vulnerabilities can be patched or isolated.

Generative models behave differently. They learn from vast datasets, respond dynamically to prompts, and generate outputs that can be influenced or manipulated. This creates internal and behavioral risks that conventional tools cannot detect, because the attack surface shifts from software flaws to the model's interpretation and output.

New vulnerabilities also arise from how generative models interact with users. Attackers can exploit blind spots such as prompt injection or hidden instructions. These are not code-level problems but logic-level weaknesses.

As a result, companies need a security framework specifically built for generative AI, one that monitors prompts, model behavior, and output reliability rather than relying solely on perimeter defenses.

Key gaps include:

- Traditional tools cannot identify prompt-based manipulation or hidden intent within natural language.

- Code scanning does not reveal poisoning or bias within training datasets.

- Infrastructure security cannot prevent a model from generating harmful or confidential content.

- Incident response workflows are not designed for threats that originate within the model's reasoning process.

- Conventional monitoring ignores output quality, making hallucinations or synthetic manipulation hard to detect.

Emerging generative AI threats that CISOs can't ignore

Generative models create new risks that traditional tools often fail to detect. CISOs now face threats that target model behavior rather than infrastructure, making them harder to trace and monitor.

These attacks exploit how the model interprets prompts, learns from data, or generates outputs, which means conventional defenses rarely catch them early.

Key emerging threats include:

- Prompt injection that bypasses safety rules

- Data poisoning that alters long-term model behavior

- Model inversion used to extract sensitive training data

- Synthetic content attacks that mimic trusted information

- Slow manipulation attempts that shift model responses over time

For companies and CISOs, this creates a clear reality: securing generative models means protecting not just the system, but the logic inside it.

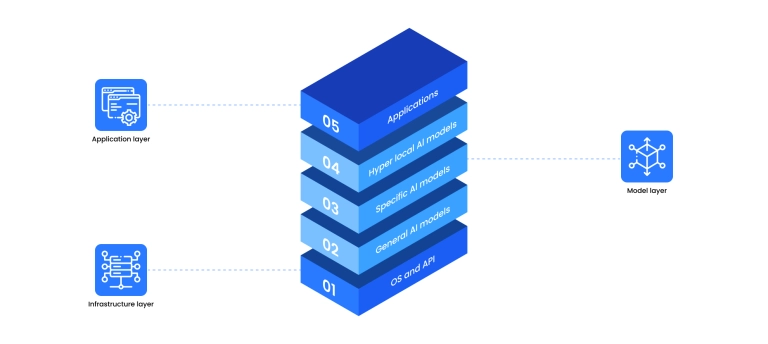

The dual-layer AI security model

The visual breaks generative AI into three main parts: infrastructure at the bottom, models in the middle, and applications on top.

This structure also reflects the dual-layer security approach. The first layer protects the technical environment around the model, while the second focuses on the model’s internal behavior.

Both are needed because generative systems introduce risks that traditional tools cannot fully cover.

- Layer 1 – Traditional development security

This corresponds to the infrastructure layer in the image. It includes secure coding, API protection, access control, encryption, and hardened cloud or on-prem environments. These controls keep the system stable so attackers cannot compromise the platform that hosts AI workloads.

- Layer 2 – Generative AI security

This layer aligns with the model layer. It focuses on how the model processes prompts, learns from data, and generates responses. Key safeguards include input filtering, output monitoring, data governance, and behavior tracking.

This layer addresses AI-specific threats such as prompt manipulation, data leakage, and hallucinations — risks that infrastructure security alone cannot handle.

Building protection at the prompt layer

Security at the prompt layer focuses on controlling and evaluating user inputs before they ever reach the model. This is the first point where manipulation, unsafe intent, or hidden instructions can be intercepted.

By enforcing strict guardrails here, companies prevent a large share of prompt-based attacks and reduce the risk that the model will generate harmful or confidential outputs.

Key protection methods include:

- Prompt filtering, which screens inputs for toxic language, hidden instructions, jailbreak attempts, or patterns known to trigger unsafe behavior.

- Prompt validation, where the system checks user intent, allowed actions, and compliance rules before forwarding the request to the model.

- Prompt rewriting, which reformats or neutralizes risky elements in user prompts to keep interactions within safe boundaries.

- Contextual policy checks, ensuring inputs match authorization levels, and preventing users from requesting information they shouldn't access.

- Guardrail models, lightweight classifiers that evaluate prompts in real time and block or modify anything that falls outside predefined safety thresholds.

How to secure generative AI: Best practices

- Establish strict data governance: Use anonymization, access control, and data-quality rules to prevent sensitive information from entering or leaking through the model. Clear policies also reduce the risk of attackers manipulating training data.

- Validate and filter all user prompts: Input controls block harmful instructions, injection attempts, and hidden intent before they reach the model. This reduces the chance of unsafe behavior triggered by adversarial prompts.

- Monitor model outputs continuously: Automated checks and human reviews help detect hallucinations, policy violations, and confidential disclosures. This prevents risky content from reaching users or internal systems.

- Use role-based access control for model endpoints: Restricting access to authorized users limits misuse and internal threats. It also makes suspicious activity easier to identify and contain.

- Apply adversarial testing and red-teaming: Regular stress tests reveal how attackers might manipulate model behavior. They also help teams understand weaknesses that traditional QA cannot detect.

- Protect training pipelines from poisoning: Secure data sources and validate datasets to prevent corrupted samples from altering model behavior. Continuous integrity checks help spot tampering early.

- Implement environment and infrastructure hardening: Secure cloud settings, APIs, and containers to eliminate backdoor paths to the model. Even a well-trained model becomes vulnerable if its environment is exposed.

- Add guardrail models or classifiers: Lightweight filters can evaluate prompts and outputs in real time. They block or adjust content that violates safety rules or contains high-risk patterns.

- Track model drift and behavior changes: Ongoing evaluation ensures the model stays predictable as it processes new data or interacts with users. Detecting drift early helps prevent unexpected or unsafe responses.

- Create AI-specific incident response procedures: Traditional IR plans rarely cover model manipulation or harmful outputs. Dedicated playbooks allow teams to respond faster when AI-related incidents occur.

These best practices create a layered defense that protects data, inputs, model behavior, and infrastructure. When applied together, they help teams prevent manipulation, reduce leakage risks, and keep generative systems reliable under real-world conditions.

How Binariks helps secure generative AI solutions

Binariks helps companies build and deploy generative AI with a security-first approach. Our teams design protected data pipelines, implement guardrails for prompts and outputs, and set up monitoring to detect model drift, unsafe behavior, or anomalous activity early. This ensures every solution remains stable, compliant, and safe under real-world conditions.

We also support organizations in strengthening governance, improving access controls, and integrating security checks across the full AI lifecycle. If your team is scaling generative capabilities, Binariks can help you build secure, reliable systems that meet enterprise requirements.

Share