The EU AI Act is the first comprehensive legislation designed to regulate artificial intelligence, establishing clear rules for safety, transparency, and accountability. By categorizing AI systems based on risk levels, it sets out requirements that developers and users must follow to ensure ethical and secure AI applications.

For businesses, preparing for the EU AI Act is essential, even for those outside Europe. This regulation will shape global AI markets and standards, making compliance necessary for companies aiming to maintain access to EU markets or align with evolving international expectations.

According to Forbes, Fortune 500 companies have already spent $7.8 billion on systems and processes to meet stringent compliance demands (Source). The financial and operational stakes are high, but preparation offers an opportunity to build stronger, more reliable AI practices.

In this article, you'll find:

- The impact of the EU AI Act on AI development and deployment.

- A breakdown of the Act's risk-based classification system.

- Common compliance challenges and practical ways to address them.

- Actionable steps to align your business with the regulation.

- Why quality data and effective risk management are crucial for compliance.

Understanding the EU AI Act is not just about avoiding penalties; it's about aligning with a framework that will influence the future of AI globally. Read on to find out the details.

What are the implications of the AI Act for AI development?

The EU AI Act sets a new standard for AI development, introducing strict requirements that impact how businesses create and implement artificial intelligence systems. Its primary focus is protecting user rights, ensuring transparency, and mitigating risks, all of which demand significant changes to current AI practices.

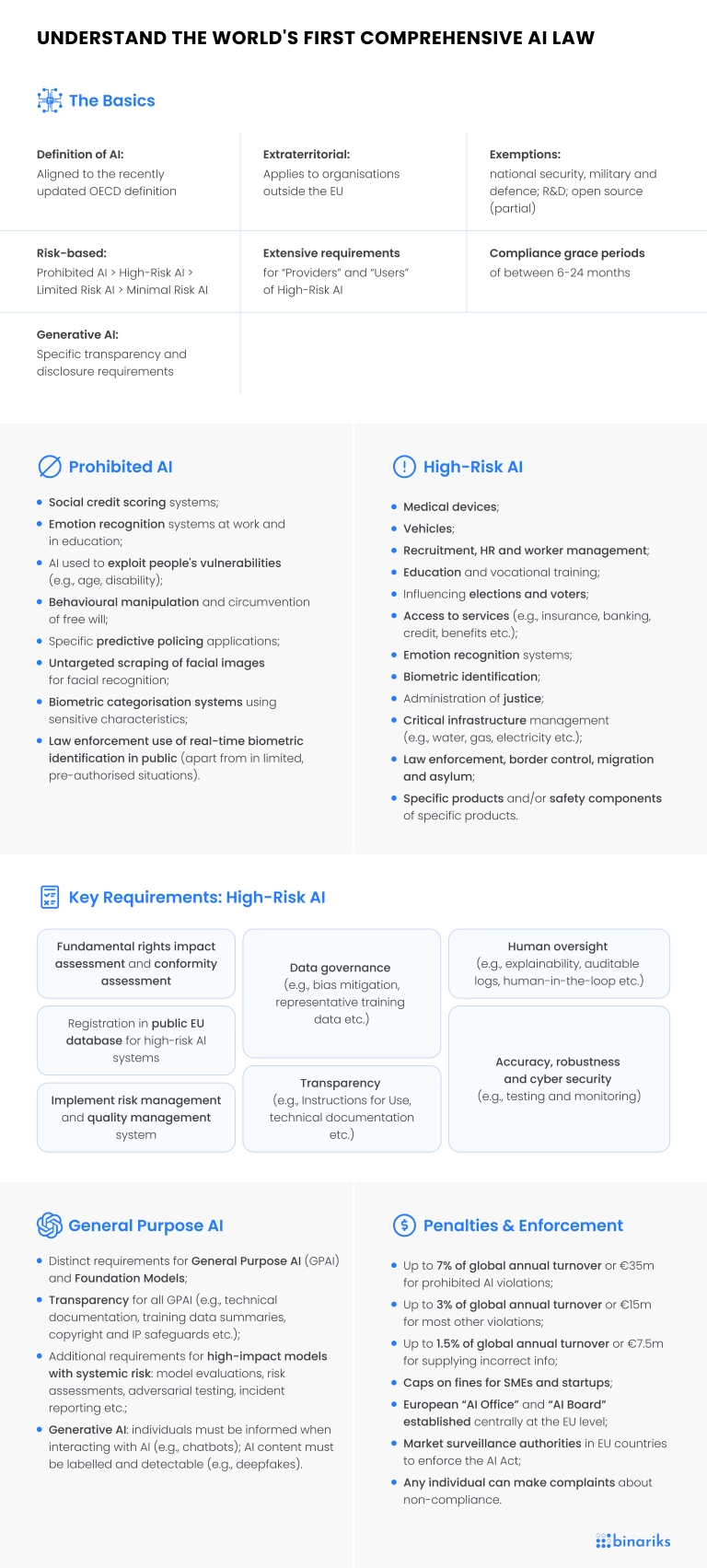

Here is a short cheat sheet for better understanding:

Key requirements

- Protecting user rights: AI systems must adhere to privacy laws, ensure security, and eliminate discriminatory practices, especially concerning sensitive personal data or automated decision-making.

- Ensuring transparency: Companies must disclose when AI is being used and provide clear documentation of how decisions are made, particularly in high-stakes scenarios.

- Controlling risks: Businesses must conduct detailed risk assessments, implement mitigation measures, and maintain extensive records to ensure compliance with the Act's standards.

Meeting these demands requires careful planning and robust technical capabilities. Companies investing in EU AI Act preparation must prioritize compliance to avoid penalties and align with ethical standards.

For organizations navigating these challenges, seeking professional support can be invaluable. Providers of AI development services can help businesses build solutions that align with the Act's requirements. Whether you're developing AI tools or refining existing systems, preparing for the AI Act ensures compliance and a stronger foundation for future innovation.

If you're unsure how to prepare for the EU AI Act, acting early and adopting best practices can save time, reduce risks, and help your business stay competitive.

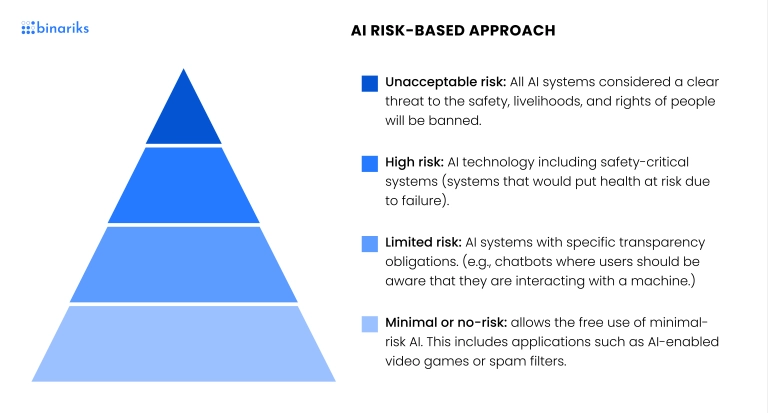

AI Act's risk classification explained

The AI Act has a substantial impact on business. It introduces a risk-based framework that categorizes AI systems into four tiers: unacceptable, high, limited, and minimal risk. This classification keeps regulatory measures proportional to the potential harm, so businesses need compliance strategies tailored to each tier. Let's get into the details.

Unacceptable risk

This category covers AI systems deemed harmful to fundamental rights or public safety. These systems are strictly prohibited.

Examples:

- Social scoring systems based on personal behavior.

- Real-time biometric surveillance in public areas, except under narrowly defined conditions.

Such applications are banned outright to protect democratic freedoms and individual rights.

High risk

High-risk AI systems are subject to strict requirements due to their potential to affect safety and fundamental rights significantly.

Examples:

- Healthcare: Diagnostic tools or robotic surgery assistants that impact patient outcomes.

- Education: AI used for admissions or automated grading that must avoid bias.

- Law Enforcement: Predictive policing or facial recognition technologies used by authorities.

Businesses deploying these systems must prepare by conducting risk assessments, maintaining detailed documentation, and ensuring transparency.

Limited risk

AI systems in this category pose moderate risks and primarily require transparency measures.

Examples:

- Chatbots in customer service.

- Recommendation algorithms used in e-commerce or media platforms.

Organizations must inform users when AI is in use and provide avenues for recourse if necessary.

Minimal risk

Minimal-risk AI systems account for most AI applications and are largely exempt from compliance obligations.

Examples:

- Spam filters in email systems.

- Simple automation tools for internal business operations.

While compliance is not mandatory, voluntary ethical standards can enhance user trust.

Tailoring compliance efforts to the specific risk category ensures adherence to regulations while maintaining operational efficiency. For businesses, navigating these challenges and understanding the AI Act early is essential to avoid disruptions and maintain competitiveness.
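To make the four tiers concrete, here is a minimal Python sketch that maps the example use cases from this section to a tier and looks up the obligations described above. The names `RiskTier`, `EXAMPLE_TIERS`, `OBLIGATIONS`, and `obligations_for` are hypothetical, and the mapping only restates this article's examples. It is an illustration, not a legal determination.

```python
from enum import Enum


class RiskTier(Enum):
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Illustrative mapping taken from the examples in this article.
# Real classification requires legal review of the actual system.
EXAMPLE_TIERS = {
    "social_scoring": RiskTier.UNACCEPTABLE,
    "medical_diagnostics": RiskTier.HIGH,
    "automated_grading": RiskTier.HIGH,
    "customer_service_chatbot": RiskTier.LIMITED,
    "spam_filter": RiskTier.MINIMAL,
}

# Obligations as summarized in this section, one list per tier.
OBLIGATIONS = {
    RiskTier.UNACCEPTABLE: ["do not deploy (prohibited)"],
    RiskTier.HIGH: ["risk assessment", "detailed documentation", "transparency"],
    RiskTier.LIMITED: ["inform users that AI is in use", "provide avenues for recourse"],
    RiskTier.MINIMAL: ["none mandatory; voluntary ethical standards"],
}


def obligations_for(use_case: str) -> list[str]:
    """Return the obligations for a known use case; flag unknown ones for review."""
    tier = EXAMPLE_TIERS.get(use_case)
    if tier is None:
        return ["unclassified: needs manual legal review"]
    return OBLIGATIONS[tier]
```

Returning an explicit "needs manual legal review" marker for unknown use cases, rather than defaulting to minimal risk, keeps unclassified systems visible in an inventory.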

Compliance challenges with the AI Act

Adapting to the AI Act presents several challenges for businesses, including:

- Comprehensive risk assessments: Identifying and addressing potential risks in high-risk AI systems can be time-consuming and complex.

- Data quality and traceability: Ensuring datasets are accurate, unbiased, and fully traceable requires significant resources.

- Transparency requirements: Implementing algorithms that are explainable to users and regulators can be technically challenging.

- Documentation and monitoring: High-risk AI systems demand extensive compliance documentation and continuous performance monitoring.

- Regulatory uncertainty: The evolving nature of the AI Act may lead to ambiguity in meeting standards, especially for emerging technologies.

Our team provides expert support to address these challenges. We offer compliance audits, risk management consultations, and guidance on developing transparent, accountable algorithms. With our help, businesses can streamline AI Act adaptation and focus on delivering ethical and innovative AI solutions.

Steps to prepare for the AI Act

Achieving EU AI Act readiness requires a clear and structured approach. Below is a step-by-step plan to help businesses align with the regulation's requirements:

- Analyze current AI solutions

Review all existing AI systems to identify potential compliance gaps. This analysis lays the foundation for the rest of your preparation and highlights areas needing immediate attention.

- Classify risks

Evaluate each AI application's risk level (unacceptable, high, limited, or minimal) based on the AI Act's framework. Accurate risk classification is critical for determining the compliance measures required.

- Ensure data quality

Audit and refine the datasets used for AI training. Ensure they are accurate, unbiased, and traceable to meet legal standards. This step is essential for high-risk AI systems.

- Develop risk management strategies

Create comprehensive risk management plans tailored to each risk category. Include safeguards to address potential harm and outline procedures for regular system monitoring.

- Implement transparent and explainable algorithms

Ensure that your AI systems provide clear, interpretable outputs. Transparency builds trust and is a regulatory requirement, especially for high-risk applications.

- Train your team

Educate your team on the AI Act's requirements and integrate compliance practices into every stage of your development lifecycle. Awareness across departments ensures sustained adherence.

By following these steps, businesses can upgrade their compliance efforts and build a robust foundation for adapting to the evolving landscape of AI regulation.
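One way to support the transparency and documentation steps above is to store a structured record for every automated decision. The sketch below is a minimal example under stated assumptions: `DecisionRecord`, its fields, and `to_log_line` are hypothetical names, and the Act does not prescribe this format.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


@dataclass
class DecisionRecord:
    """One automated decision, kept for later audit (hypothetical schema)."""
    system_name: str
    model_version: str
    inputs: dict
    output: str
    explanation: str  # human-readable reason shown to users or auditors
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


def to_log_line(record: DecisionRecord) -> str:
    """Serialize a record as one JSON line suitable for an append-only log."""
    return json.dumps(asdict(record), sort_keys=True)


record = DecisionRecord(
    system_name="loan_screening",  # example values, not from the article
    model_version="1.4.0",
    inputs={"income_band": "B", "history_months": 18},
    output="refer_to_human",
    explanation="Credit history shorter than 24 months",
)
log_line = to_log_line(record)
```

Keeping the model version and a plain-language explanation next to each output makes it easier to reconstruct why a given decision was made when a user or regulator asks.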

The role of high-quality data and risk management in AI Act compliance

High-quality data is the cornerstone of EU AI Act compliance. Accurate, unbiased, and traceable data ensures that AI systems perform reliably and ethically, particularly in high-risk applications. Poor-quality data can lead to biased outcomes, erode user trust, and increase the likelihood of non-compliance penalties. For AI models, especially generative AI systems covered by the EU AI Act, the quality and diversity of training data directly affect their ability to produce safe and transparent outputs.
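As a rough illustration of what a basic dataset audit could look like, the following Python sketch counts missing values in required fields and flags groups that make up a small share of the data. The function name `audit_records`, its parameters, and the 10% default threshold are assumptions made for this example. A real audit would cover far more, such as label quality and data provenance.

```python
from collections import Counter


def audit_records(records, required_fields, group_field, min_group_share=0.1):
    """Summarize missing values and underrepresented groups in a list of dicts."""
    total = len(records)
    missing = Counter()
    groups = Counter()
    for row in records:
        for name in required_fields:
            # Treat absent keys, None, and empty strings as missing values.
            if row.get(name) in (None, ""):
                missing[name] += 1
        groups[row.get(group_field) or "unknown"] += 1
    underrepresented = sorted(
        group for group, count in groups.items()
        if total and count / total < min_group_share
    )
    return {
        "total": total,
        "missing": dict(missing),
        "underrepresented": underrepresented,
    }


# Example data invented for illustration only.
sample = [{"age": 30, "region": "north"}] * 9 + [{"age": None, "region": "south"}]
report = audit_records(sample, ["age", "region"], "region", min_group_share=0.2)
```

Here `report` shows ten records, one missing `age` value, and `south` flagged as underrepresented because it accounts for 10% of rows against a 20% threshold.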

Risk management plays an equally critical role. Businesses must implement robust frameworks for continuous monitoring, regular risk assessments, and the enforcement of tailored policies. According to the European Commission, a proactive risk management approach is the key to maintaining compliance while ensuring AI systems remain adaptive and effective (Source).
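Continuous monitoring can start with something as simple as comparing recent performance against a documented baseline. The sketch below assumes accuracy is the tracked metric and that a drop of more than 0.05 should trigger a review. Both choices, and the name `needs_review`, are illustrative assumptions rather than requirements from the Act.

```python
from statistics import mean


def needs_review(baseline_accuracy, recent_accuracies, max_drop=0.05):
    """Return True when recent accuracy falls too far below the baseline."""
    if not recent_accuracies:
        # No recent measurements means monitoring itself has a gap.
        return True
    return baseline_accuracy - mean(recent_accuracies) > max_drop


degraded = needs_review(0.90, [0.80, 0.82])  # average is well below baseline
stable = needs_review(0.90, [0.89, 0.91])    # average is close to baseline
```

Treating missing measurements as a reason for review is a deliberate choice in this sketch: a silent gap in monitoring data is itself a risk worth surfacing.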

Conclusion

The EU AI Act establishes a new era for ethical and transparent AI development, requiring businesses to align with its rigorous standards. By addressing compliance early, organizations can mitigate risks, avoid penalties, and lead responsible AI innovation.

At Binariks, we offer comprehensive support for every stage of the AI lifecycle:

AI/ML developers:

- Build compliant, secure-by-design AI systems.

- Mitigate risks like data poisoning and monitor system performance.

Quality assurance specialists:

- Conduct rigorous testing and ensure alignment with EU regulations.

- May collaborate with compliance officers for QMS and audits.

Business analysts:

- Map AI system risk classifications and align with client needs.

- Document data governance practices to prevent bias.

Project managers:

- Coordinate teams, establish compliance timelines, and manage documentation.

- Coordinate with compliance and legal teams to facilitate communication with regulators.

Whether you develop, deploy, or monitor AI solutions, we can assist you in achieving compliance with the EU AI Act. Contact us to streamline your compliance process and create AI systems that are reliable and ready for regulation.
