When we first came across MIT's recent study on enterprise AI adoption, we had to read the headline twice. Despite $30-40 billion in enterprise investment in GenAI, the report found that 95% of organizations are getting zero return.

Ninety-five percent? We were genuinely puzzled.

Our reaction wasn't about believing we're exceptional; it was about a significant disconnect between the report and our own experience. In our work with healthcare, fintech, and insurance clients, we regularly see AI projects reach production and deliver measurable business impact.

For instance, the agentic AI system we recently built for a healthcare platform developer automated clinical workflow communications and task management.

The deployed system delivered 30% time savings on follow-up documentation tasks and doubled task-completion compliance for scheduled check-ins and reminders, demonstrating concrete operational improvements in a live healthcare environment.

So why does the average enterprise see no return on its GenAI investments? What's causing this massive failure rate?

The real problem: Treating AI like a traditional software suite

The issue isn't technological; it's methodological. Most organizations treat AI the way they treat Excel: as an advanced calculator that only works when someone feeds it data and formulas. And just as with Excel, most companies end up using only 10% of its capabilities because they never rethink their processes or train their people properly.

The same pattern is now repeating with AI. Companies drop a model into an existing workflow and expect transformation. Without redesigned processes, proper training, or systems that can adapt and learn, the outcome is predictable: most pilots stall. The numbers bear this out. 60% of organizations evaluate enterprise AI tools, but only 5% ever reach production.

What needs to change is the mindset. Too many enterprises still see AI as a technical add-on, "another tool" or "a piece of Python code," instead of treating it as a lever to reimagine how work gets done.

The few organizations crossing what MIT calls the GenAI Divide approach AI differently: they implement it as a business transformation process, not a software installation.

Industry reality check

MIT's research shows that most industries are experimenting with GenAI, but few are seeing transformation. This is especially visible in regulated sectors like healthcare, insurance, and finance.

To gauge how much GenAI has actually reshaped industries, the researchers built a composite disruption index, scoring each sector on five signals: market share shifts, growth of AI-native firms, new business models, changes in user behavior, and leadership turnover linked to AI.

The results say a lot about how AI is really being used:

- Technology and Media rank high because AI drives visible new products, challenger firms, and workflow shifts.

- Healthcare and Financial Services rank low because usage is limited to narrow pilots (documentation in hospitals, back-office automation in banks, etc.) without real structural change.

For us, this is the key insight: most industries aren't being transformed by AI. They're running small pilots that nibble at efficiency but don't touch the core processes that define value.

These aren't simply signs of slow adoption – they're symptoms of both a fundamental mismatch between generic AI solutions and industry-specific realities, and the high-stakes regulatory environment that defines many sectors.

In heavily regulated industries, every decision carries potential legal and financial liability. In less regulated domains, an AI error might mean embarrassing content or a missed opportunity; here, a single misinterpretation can trigger lawsuits, criminal penalties, or regulatory sanctions.

Since AI systems can't be held legally accountable, human professionals remain personally responsible for outcomes – creating a risk calculation that often favors traditional methods over AI innovation, regardless of efficiency gains.

Binariks' experience with enterprise clients confirms this dynamic. From our perspective, enterprises in these sectors aren't interested in another tool. They already pay for numerous software solutions with disappointing returns – otherwise, they wouldn't explore alternatives.

Yet another chatbot that can make phone calls but can't adapt to specific regulatory requirements delivers a brief wow effect, then becomes just another piece of software covering 20-30% of scenarios while requiring manual workarounds for everything else.

Take financial services:

A generic AI tool might flag transactions that look suspicious. However, real risk assessment requires understanding nuance: why a $9,500 transfer to one jurisdiction triggers compliance, while the same transfer elsewhere does not; or how transaction timing relative to market events can change its meaning entirely.
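To make that nuance concrete, here is a minimal Python sketch of context-dependent flagging. Everything in it is hypothetical: the jurisdiction codes, the per-jurisdiction thresholds, and the two-hour market-event window are placeholder values chosen for illustration, not real regulatory rules. It only shows that the same amount can warrant review in one context and not in another.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

# Hypothetical per-jurisdiction review thresholds (illustrative values only,
# not real regulatory limits).
REVIEW_THRESHOLDS = {
    "JURISDICTION_A": 9_000,
    "JURISDICTION_B": 15_000,
}
DEFAULT_THRESHOLD = 10_000

# Hypothetical window around a market event in which timing changes the meaning.
EVENT_WINDOW = timedelta(hours=2)


@dataclass
class Transfer:
    amount: float
    jurisdiction: str
    timestamp: datetime


def review_reasons(transfer, market_events):
    """Return the reasons this transfer needs human compliance review (empty if none)."""
    reasons = []
    # The same amount is judged against a different threshold per jurisdiction.
    threshold = REVIEW_THRESHOLDS.get(transfer.jurisdiction, DEFAULT_THRESHOLD)
    if transfer.amount >= threshold:
        reasons.append(f"amount >= {threshold} for {transfer.jurisdiction}")
    # Timing relative to market events can make an ordinary amount significant.
    for event_time in market_events:
        if abs(transfer.timestamp - event_time) <= EVENT_WINDOW:
            reasons.append(
                f"within {EVENT_WINDOW} of market event at {event_time:%Y-%m-%d %H:%M}"
            )
            break
    return reasons
```

Here a 9,500 transfer to `JURISDICTION_A` comes back with a reason, while the identical transfer to `JURISDICTION_B` returns an empty list unless it lands close to a market event. In practice, the thresholds and windows would come from compliance SMEs rather than being hard-coded by engineers.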

The same goes for healthcare:

Automating documentation is nice, but if the system doesn't align with HIPAA rules or hospital workflows, it creates more problems than it solves.

At Binariks, we've seen this pattern enough to build industry-specific expertise into our AI Center of Excellence. Our teams don't just code models; they understand healthcare compliance, insurance frameworks, and fintech regulations. Without that domain knowledge, AI adoption stalls. With it, the system can truly change how work gets done.


What actually works: The learning-first approach

The mistake most enterprises make is assuming that installing AI equals transformation. They buy or build a model, wire up an API, wrap it in a UI, and expect results. Instead, they end up with yet another tool nobody fully uses.

The right way to think about AI is as a digital junior employee: useful on day one, but far more valuable once it learns your processes, adapts to your workflows, and grows with feedback.

To make that possible, four principles separate failed pilots from successful systems:

1. Process before technology

Don't ask, "Where can we plug AI in?" Ask, "Which process is slow, expensive, or error-prone, and how can AI help us redesign it end-to-end?"

- Example: Instead of auto-tagging insurance claims, rethink the full intake → validation → settlement workflow.

2. Learning-capable systems

Static models plateau fast. Systems that learn from real usage improve continuously.

- Example: A contract bot that gradually adapts to how your lawyers phrase clauses instead of repeating demo templates.

3. Domain expertise at the core

Engineers alone can't define compliance thresholds or interpret regulatory risks. Subject matter experts (SMEs) are essential to design systems that survive real-world complexity.

4. Continuous improvement cycles

AI isn't "finished" at deployment. The strongest systems run on feedback loops – guardrails in code, SME checkpoints, and retraining cycles that keep accuracy high as processes evolve.
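A minimal Python sketch of how those three pieces (guardrails in code, SME checkpoints, and a retraining trigger) might fit together. The class name, the 0.8 confidence floor, the 0.9 accuracy target, and the 50-review window are all assumptions for illustration; a real system would plug in its own model, review tooling, and training pipeline.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class FeedbackLoop:
    """Hypothetical feedback loop: guardrail routing, SME review, retraining trigger."""
    confidence_floor: float = 0.8   # assumed: below this, an SME must review
    accuracy_target: float = 0.9    # assumed: retrain if rolling accuracy falls below this
    window: int = 50                # assumed: how many recent SME reviews to track
    review_queue: dict = field(default_factory=dict)
    outcomes: deque = field(default_factory=deque)
    training_examples: list = field(default_factory=list)

    def route(self, item_id, prediction, confidence):
        """Guardrail in code: auto-accept confident outputs, queue the rest for an SME."""
        if confidence >= self.confidence_floor:
            return "auto"
        self.review_queue[item_id] = prediction
        return "sme_review"

    def record_review(self, item_id, sme_label):
        """SME checkpoint: compare the model's output with the expert's label."""
        prediction = self.review_queue.pop(item_id)
        self.outcomes.append(prediction == sme_label)
        if len(self.outcomes) > self.window:
            self.outcomes.popleft()  # keep only the most recent reviews
        # Every SME decision becomes a labeled example for the next retraining cycle.
        self.training_examples.append((item_id, sme_label))

    def needs_retraining(self):
        """Retraining trigger: rolling accuracy on reviewed items is below target."""
        if not self.outcomes:
            return False
        return sum(self.outcomes) / len(self.outcomes) < self.accuracy_target
```

Measuring accuracy only on SME-reviewed items is a simplification; a production setup would likely also spot-check a sample of auto-accepted outputs so that drift in confident predictions doesn't go unnoticed.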


Where agents fit in

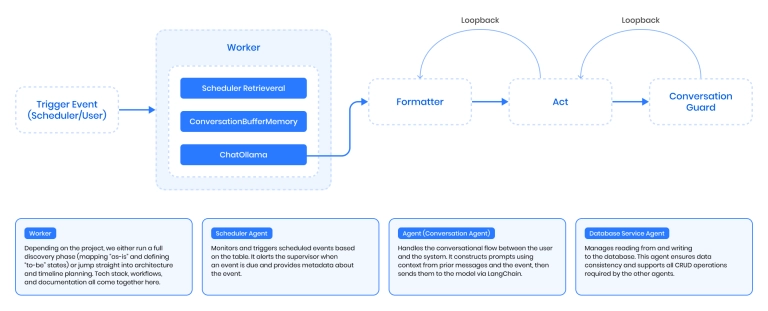

This divide between "simple tasks AI handles well" and "complex processes where humans still dominate" comes down to memory, adaptability, and learning. That's where Agentic AI comes in.

Unlike most current GenAI tools, which forget everything once a session ends, agentic systems embed persistent memory, learn from interactions, and can orchestrate multi-step workflows.
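A minimal Python sketch of the "persistent memory" idea, under loose assumptions: the class name, the JSON-file storage, and the key format are hypothetical, and a production agent would more likely use a database with access controls (especially where regulated data is involved). It shows only the core difference from a stateless chatbot: what one session learns is available to the next.

```python
import json
from pathlib import Path


class PersistentMemory:
    """Hypothetical agent memory that survives between sessions via a JSON file."""

    def __init__(self, path):
        self.path = Path(path)
        # Load whatever earlier sessions learned; start empty on the first run.
        self.facts = json.loads(self.path.read_text()) if self.path.exists() else {}

    def remember(self, key, value):
        """Store a fact and write it to disk immediately so it outlives the session."""
        self.facts[key] = value
        self.path.write_text(json.dumps(self.facts, indent=2))

    def recall(self, key, default=None):
        return self.facts.get(key, default)


# Session 1: the agent learns a customer's preferred reminder channel.
session_1 = PersistentMemory("agent_memory.json")
session_1.remember("customer-42:reminder_channel", "sms")

# Session 2: a fresh agent instance still knows it.
session_2 = PersistentMemory("agent_memory.json")
print(session_2.recall("customer-42:reminder_channel"))  # sms
```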

Early enterprise experiments show what this looks like in practice:

- Customer service agents resolve full inquiries end-to-end without handoffs.

- Financial processing agents continuously monitor and approve routine transactions.

- Sales pipeline agents track engagement across channels and adapt follow-ups.

These systems aren't just automating single tasks – they're evolving within the workflow. Done right, they become digital teammates that grow more effective the longer they're in use.

Yet the same rules apply: Without process alignment, SME oversight, and proper feedback loops, even advanced agents risk becoming shelfware. With the correct methodology, however, they can be the bridge across the GenAI Divide.

Binariks' differentiated approach

The four principles above aren't just theory – they're what we apply with clients every day. To make them repeatable, we built our AI Center of Excellence (CoE): a structured methodology that keeps projects out of the 95% failure zone and moves them into the 5% that succeed.

Where the four principles define what makes AI work, our CoE defines how to put them into practice.

Our phased approach to AI looks like this:

1. Evaluate & align

- Map processes with SMEs

- Identify where AI creates measurable ROI, not just "productivity gains"

- Balance business value vs. implementation cost

2. Explore & prototype

- Rapid pilots that integrate into existing workflows

- Prove usability before scaling

3. Implement & integrate

- Add AI copilots to legacy systems where needed (not every client can rip and replace)

- Build QA and compliance checks directly into workflows

4. Optimize & evolve

- Train staff to use AI agents effectively

- Keep systems learning with structured feedback loops

- Regularly re-evaluate ROI

This way, clients don't just "adopt AI." They embed it in their operations in a way that scales and survives beyond the initial demo.

Key differences in our practice:

- Trust: Every system has QA checkpoints and SME reviews. No blind automation.

- Legacy integration: If a 20-year-old claims system can't be touched, we'll build an AI sidecar that works with it instead.

- People enablement: Training and handover are mandatory. A tool nobody knows how to use is a failed project.

- Cost-value balance: We prioritize processes where AI's impact compounds – where automating one step unlocks value across the chain.

This approach ensures AI isn't "just another tool." It becomes part of the business itself – scalable, compliant, and measurable in real ROI.

Practical takeaways

If you're considering AI for your enterprise, here's what matters:

Three questions to ask any vendor:

- How will this system adapt to new processes and evolving business requirements?

- What's the plan for training my people to use it effectively?

- What specific operational improvements can the system demonstrate?

Metrics that actually matter:

- Reduced spend on BPOs or agencies

- Faster compliance cycles and fewer errors

- Clear impact on revenue retention or risk reduction

The bottom line: AI adoption fails when treated as a tool. It succeeds when treated as a business transformation.

Ready to transform your AI experiments into business results? Contact Binariks to build systems that actually work.
