With the development of AI, there is a growing demand for AI regulation in healthcare. 2023 was a groundbreaking year for AI, with over 35% of companies using it daily.

AI regulations in healthcare are crucial for ensuring patient safety, as they mandate rigorous testing and validation of AI systems to prevent harm and misdiagnoses. They protect patient privacy and data security, guarding sensitive health information against unauthorized access and misuse.

Additionally, these regulations help maintain ethical standards in care by ensuring that AI applications do not perpetuate biases and remain accessible and equitable for all patients. In this article, we focus on current and upcoming healthcare AI regulations in the US and the EU.

Challenges and risks

Regulating AI in healthcare comes with challenges. Legislation can help address most of the problems outlined below, but only if healthcare regulations are extended beyond what exists today.

Many of these challenges and risks are not yet covered by existing laws. Addressing them requires comprehensive legislation such as the upcoming EU AI Act.

Data privacy and security

Handling sensitive medical data requires special security measures to protect against breaches and ensure patient confidentiality.

Clinically irrelevant performance metrics

The performance metrics typically used to evaluate AI models do not always transfer to clinical settings. Solving this problem requires collaboration between clinicians and developers of AI platforms.
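As a minimal sketch of how a technical metric can mislead, the Python snippet below scores a hypothetical screening model on made-up numbers (1,000 patients, 20 of whom have the disease). The counts and the `ConfusionMatrix` class are illustrative assumptions, not data from a real system. Overall accuracy looks excellent, yet the model misses most of the sick patients, which is exactly what a clinician would care about.

```python
from dataclasses import dataclass


@dataclass
class ConfusionMatrix:
    tp: int  # diseased patients correctly flagged
    fp: int  # healthy patients incorrectly flagged
    tn: int  # healthy patients correctly cleared
    fn: int  # diseased patients the model missed

    def accuracy(self) -> float:
        total = self.tp + self.fp + self.tn + self.fn
        return (self.tp + self.tn) / total

    def sensitivity(self) -> float:
        # Share of diseased patients the model actually catches (recall).
        return self.tp / (self.tp + self.fn)

    def specificity(self) -> float:
        # Share of healthy patients the model correctly clears.
        return self.tn / (self.tn + self.fp)


# Hypothetical screening results: 1,000 patients, 20 with the disease.
cm = ConfusionMatrix(tp=5, fp=10, tn=970, fn=15)

print(f"accuracy:    {cm.accuracy():.3f}")     # accuracy:    0.975
print(f"sensitivity: {cm.sensitivity():.3f}")  # sensitivity: 0.250
print(f"specificity: {cm.specificity():.3f}")  # specificity: 0.990
```

Reporting sensitivity and specificity alongside accuracy, and agreeing with clinicians on which kinds of error matter most, is one way to make evaluation more clinically meaningful.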

Bias and inequality

AI systems are only as good as the data they are trained on. If the data is biased, the AI's decisions can be skewed, leading to unequal treatment of different demographic groups.
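One common way to surface this kind of skew is to compare a model's performance across demographic groups. The sketch below assumes hypothetical audit records and an illustrative `sensitivity_by_group` helper; it computes the true positive rate for each group and warns when the gap is large. The 0.1 tolerance is an arbitrary example, not a regulatory threshold.

```python
from collections import defaultdict

MAX_GAP = 0.1  # hypothetical tolerance chosen for illustration only


def sensitivity_by_group(records):
    """Return the true positive rate (sensitivity) for each demographic group.

    Each record is a (group, has_condition, model_flagged) tuple.
    """
    positives = defaultdict(int)  # patients who actually have the condition
    caught = defaultdict(int)     # of those, how many the model flagged
    for group, has_condition, flagged in records:
        if has_condition:
            positives[group] += 1
            if flagged:
                caught[group] += 1
    return {group: caught[group] / positives[group] for group in positives}


# Hypothetical audit data, not real results.
records = (
    [("group_a", True, True)] * 45 + [("group_a", True, False)] * 5
    + [("group_b", True, True)] * 30 + [("group_b", True, False)] * 20
    + [("group_a", False, False)] * 100 + [("group_b", False, False)] * 100
)

rates = sensitivity_by_group(records)
gap = max(rates.values()) - min(rates.values())
print(rates)  # {'group_a': 0.9, 'group_b': 0.6}
if gap > MAX_GAP:
    print(f"Warning: sensitivity differs by {gap:.2f} between groups")
```

A check like this only detects unequal performance; deciding why it happens and how to fix it still depends on the training data and the clinical context.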

Lack of transparency

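AI algorithms, especially deep learning models, can be 'black boxes,' making it difficult to understand how they arrive at certain decisions. This lack of transparency is a significant issue in healthcare, where clinicians and patients need to understand and trust the reasoning behind a diagnosis or treatment recommendation.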

Regulatory and ethical issues

There are numerous regulatory and ethical considerations in applying AI to healthcare, including questions about responsibility for AI decisions and ensuring equitable access to AI-enhanced treatments.

Integration challenges

Integrating AI into existing healthcare systems can be complex and costly, requiring significant workflow changes and potentially disrupting established practices.

Dependence and skill erosion

Overreliance on AI could lead to a decrease in specific skills among healthcare professionals as machines take over tasks that were previously human-led.

Misdiagnosis and errors

While AI can potentially reduce human error, it is not infallible. Incorrect or biased data, programming errors, or other issues can lead to misdiagnosis or inappropriate treatment recommendations.

Healthcare AI regulations in the USA

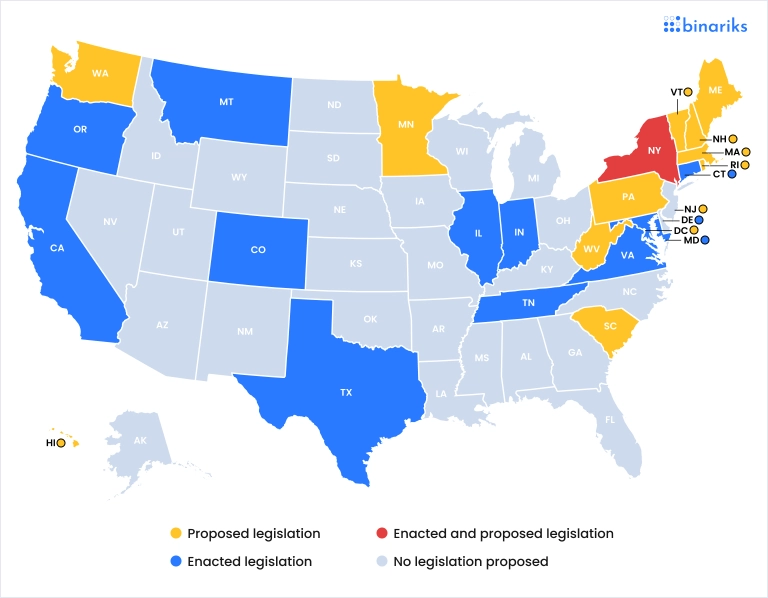

AI regulations in the USA are still developing, and the country has yet to settle on a regulatory approach. Unlike the EU, the US has no single piece of AI legislation in sight.

As of 2023, some states and cities are passing laws that limit the use of AI. A large part of the existing US oversight of healthcare AI falls to the FDA. Overall, the US approach is more decentralized, in line with broader trends in US legislation.

Food and Drug Administration (FDA)

- The FDA is the regulatory body responsible for enforcing the Federal Food, Drug, and Cosmetic Act (FDCA), which governs the safety and effectiveness of medical devices, including AI-based clinical systems.

- The FDA has a specific focus on AI- and machine learning (ML)-based software as a medical device (SaMD) (Source). It classifies these technologies based on risk and intended use (Source).

- For high-risk AI applications, the FDA requires rigorous pre-market testing and approval. This process involves demonstrating the safety and effectiveness of the AI system through clinical trials or validated studies.

- The FDA also monitors AI-based medical devices after they reach the market to ensure ongoing safety and effectiveness. This is particularly important for AI, as these systems can evolve and learn over time.

Health Insurance Portability and Accountability Act (HIPAA)

- HIPAA sets standards for the proper handling of patient data and privacy in healthcare (Source). This includes requirements for encryption, access controls, and breach notification protocols.

- HIPAA also gives patients rights over their health information, including the right to access their data and obtain copies. AI developers must ensure their systems support these rights.
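To make the access-control requirement more concrete, here is a minimal sketch of role-based access checks with an audit trail. The roles, permission names, and `AccessController` class are hypothetical; the snippet illustrates the idea only and is not, on its own, a HIPAA-compliant implementation.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical role-to-permission mapping; real policies come from your own risk analysis.
ROLE_PERMISSIONS = {
    "clinician": {"read_phi", "write_phi"},
    "ai_service": {"read_deidentified"},
    "billing": {"read_billing"},
}


@dataclass
class AccessController:
    audit_log: list = field(default_factory=list)

    def check(self, user: str, role: str, action: str) -> bool:
        # Unknown roles get an empty permission set, so they are denied by default.
        allowed = action in ROLE_PERMISSIONS.get(role, set())
        # Record every attempt, allowed or denied, for later review.
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "role": role,
            "action": action,
            "allowed": allowed,
        })
        return allowed


ac = AccessController()
print(ac.check("dr_smith", "clinician", "read_phi"))       # True
print(ac.check("triage_model", "ai_service", "read_phi"))  # False
print(len(ac.audit_log))                                   # 2
```

Denying by default and logging denied attempts as well as granted ones makes it easier to review who, or which AI service, tried to reach patient data.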

Centers for Medicare & Medicaid Services (CMS)

- CMS determines whether a new AI-based healthcare technology will be covered under Medicare and Medicaid based on the technology's effectiveness (Source).

Federal Trade Commission (FTC)

- The FTC ensures that any claims about AI-based health products are not deceptive or misleading (Source). This is crucial for consumer trust and safety.

- The FTC enforces laws related to data breaches and consumer privacy, which are pertinent to AI applications handling personal health information.

Office for Civil Rights (OCR)

- OCR investigates complaints, including those involving AI-related products, and conducts compliance reviews to ensure adherence to HIPAA rules (Source). OCR also helps entities understand and fulfill their HIPAA obligations.

The President's Executive Order (EO) 14110 on the Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence

The new executive order establishes principles for developing and using AI, including by federal agencies (Source). Key aspects of the order include:

- It requires developers of the most powerful AI systems to share their safety test results with the US government.

- It directs the development of standards, tests, and tools for AI safety.

- It supports research on privacy-preserving AI techniques, including for medical research.

- It promotes ethical practices for AI in healthcare.

- It directs federal agencies to consider these principles when implementing AI applications and technologies.

- It requires agencies to develop and share action plans for implementing the principles of the EO.

- It tasks the Office of Management and Budget (OMB) with overseeing implementation of the EO across federal agencies.

Healthcare AI regulations in the EU

The EU has a more comprehensive framework of AI regulations in healthcare compared to the US, making AI regulations in the EU more interesting from the legislative development standpoint.

In particular, the EU is actively working on the AI Act, the first comprehensive AI law of its kind worldwide. The main EU regulations affecting AI in healthcare are:

General Data Protection Regulation (GDPR)

- The General Data Protection Regulation (GDPR) is the EU's regulatory framework for protecting personal data and privacy (Source).

- GDPR is a crucial regulation for AI in healthcare due to its strict data protection and privacy rules. It applies to any AI system that processes the personal data of people in the EU, which includes most healthcare applications. Health data is a special category of personal data, so processing it requires explicit consent or another specific legal basis. AI applications must also be transparent about how they process personal data, and individuals have the right to meaningful information about the logic behind automated decisions that significantly affect them, which is particularly relevant in healthcare settings.

Medical Device Regulation (MDR)

- The EU's MDR, which came into full application in May 2021, includes regulations for AI-based software when used as a medical device (Source). This regulation focuses on the safety and performance of these devices. Under MDR, AI applications in healthcare are subject to a classification system based on their risk level, and they must undergo a conformity assessment process before being placed on the market.

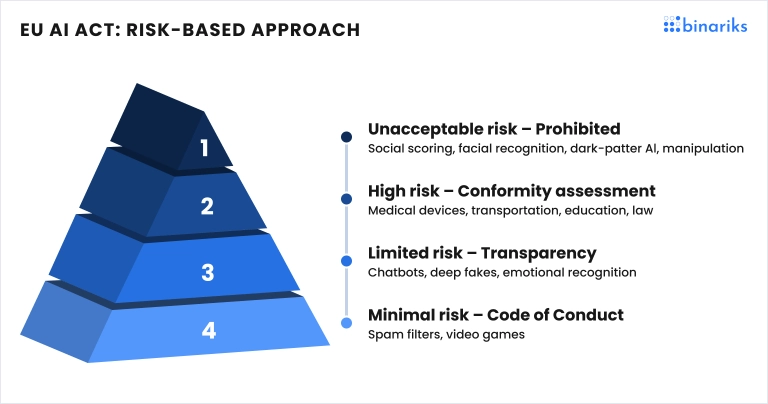

Upcoming EU AI Act by the European Commission

- The forthcoming EU AI Act is the first comprehensive legislation dedicated to AI. It takes a risk-based approach: under the act, high-risk AI systems, including those used in healthcare, are subject to strict obligations before they can be placed on the market.

- Under the AI Act, AI systems will be categorized as unacceptable risk, high risk, limited risk, or minimal (low) risk, based on the risk they pose to people's health, safety, and fundamental rights (Source). Unacceptable-risk AI will be banned; high-risk AI will face rigorous requirements; limited-risk AI will face lighter, mainly transparency, obligations; and minimal-risk AI will face no additional obligations.
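The tiered structure can be sketched as a simple lookup from risk tier to obligations. This is a simplified illustration under stated assumptions: the obligation summaries paraphrase the Act's general approach rather than its legal text, and the example systems and their tier assignments are hypothetical. Classifying a real product requires legal analysis.

```python
from enum import Enum


class RiskTier(Enum):
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"  # the lowest ("low risk") tier


# Simplified paraphrase of each tier's consequences; not the Act's wording or legal advice.
OBLIGATIONS = {
    RiskTier.UNACCEPTABLE: ["prohibited: may not be placed on the EU market"],
    RiskTier.HIGH: [
        "risk management system",
        "data governance and quality controls",
        "technical documentation and logging",
        "human oversight",
        "conformity assessment before market entry",
    ],
    RiskTier.LIMITED: ["transparency: tell users they are interacting with AI"],
    RiskTier.MINIMAL: [],  # no additional obligations
}


def obligations_for(tier: RiskTier) -> list[str]:
    return OBLIGATIONS[tier]


# Hypothetical tier assignments, for illustration only.
examples = {
    "AI diagnostic software regulated as a medical device": RiskTier.HIGH,
    "appointment-scheduling chatbot": RiskTier.LIMITED,
    "email spam filter in a clinic": RiskTier.MINIMAL,
}

for system, tier in examples.items():
    duties = obligations_for(tier) or ["no additional obligations"]
    print(f"{system} [{tier.value}]: {', '.join(duties)}")
```

Keeping the tier as an explicit value attached to each system makes it straightforward to see which compliance work applies before a product reaches the market.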

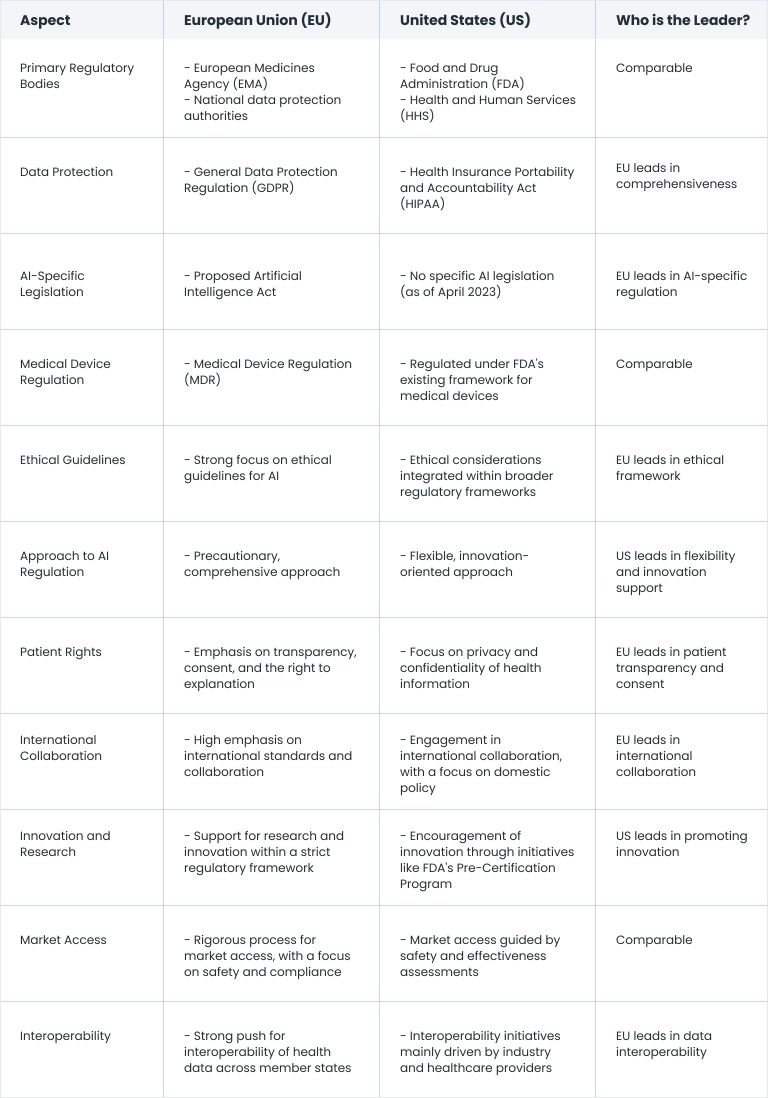

Comparison of EU and US regulations

Healthcare AI regulations in the United States and the European Union are comparable in many respects, as both frameworks are still being created. As of 2023, the EU leads in most aspects of healthcare AI regulation, including ethics and data interoperability.

Where the US stands out is its support for innovation and flexibility. Regulation of AI in healthcare is also far less centralized in the US than in the EU.

Future expectations

- Once the EU AI Act is adopted, it could pave the way for more comprehensive healthcare AI regulations in other countries.

- As AI becomes more integral to various sectors, there will likely be a push towards more standardized global regulations.

- With growing awareness of the potential biases in AI systems, future regulations will likely emphasize ethical AI development.

- Regulations may require AI systems to be more transparent and explainable, especially in critical areas like healthcare, criminal justice, and finance.

- The global nature of AI technology may lead to international collaborations for setting standards. However, there might also be conflicts, as different countries may have varying priorities and ethical standards.

- In the United States, the Department of Health and Human Services (HHS) has proposed a rule requiring electronic health record systems that use AI and algorithms to explain to users how these technologies operate.

- At Binariks, we can help you develop compliant AI systems, as well as mitigate risks associated with AI regulations in healthcare.

In conclusion

Both EU and US AI regulations were still in development as of late 2023, as legislators work out how healthcare AI regulations should reflect the reality of AI implementation.

It is not yet clear whether the United States will pursue a single act covering all AI regulation. Still, the EU AI Act sets a precedent for other countries to consolidate their AI regulations into a single piece of legislation.

In the future, we can expect regulating AI in healthcare to focus more on privacy, transparency, and accountability.

Share