Maintaining AI models is as crucial as building them. While the initial deployment often garners the most attention, the real challenge lies in ensuring these models perform reliably over time. Model maintenance isn't just a technical task — it's a vital part of sustaining the value of AI systems in production.

Because data and operating conditions change constantly, AI model maintenance faces unique challenges. From model drift to the need for regular retraining, keeping AI systems accurate and reliable is no small feat, and these issues can erode trust in AI solutions if they are not addressed effectively.

Here's what you'll learn in this article:

- The key challenges of AI model maintenance

- The role of MLOps in effective maintenance

- How to tackle model drift and retraining

- Ensuring data quality and validation processes

- Essential tools and mechanisms for maintaining AI models

Read on to discover how to overcome these challenges and ensure your AI models remain trustworthy and effective over time.

Challenges of AI model maintenance

Maintaining AI models goes beyond initial deployment and requires ongoing attention to keep them performing accurately. Key challenges include model drift and deciding when to retrain, data quality, scalability, interpretability, security, and resource management. Ignoring these can lead to performance issues and a loss of trust in AI systems.

- Need to retrain models: Determining when to retrain models is critical. Retraining too often wastes resources, while delays can result in inaccurate predictions. For instance, AI-based credit scoring might need retraining as economic conditions change. Strategic monitoring ensures timely and efficient retraining.

- Data quality issues: High data quality is essential for reliable AI models. Issues like missing or incorrect data can degrade performance. Continuous data validation and cleaning help maintain accuracy and prevent model failures, ensuring long-term reliability.

- Scalability: Scaling AI models across different environments introduces challenges in maintaining consistent performance. Diverse data sources and deployment environments can lead to inconsistencies. Careful planning and monitoring are necessary to ensure models scale effectively without losing accuracy.

- Interpretability and explainability: As AI models grow more complex, making their decisions understandable becomes harder. In sectors like healthcare, this is crucial. Balancing performance with interpretability ensures models remain explainable, fostering trust and regulatory compliance.

- Security and privacy concerns: AI models are vulnerable to adversarial attacks and must comply with data protection and AI regulations such as the GDPR. Implementing strong security measures and maintaining data privacy are ongoing challenges in AI maintenance.

- Resource management: AI maintenance demands significant resources — computational power, storage, and expertise. Efficient resource management is vital to keep maintenance processes cost-effective without sacrificing performance. This balance is critical to sustaining AI systems in the long term.

By addressing these challenges, organizations can ensure their AI systems remain robust, reliable, and effective in a dynamic environment.
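To make the retraining trade-off from the list above concrete, here is a minimal sketch of a retraining policy. It assumes you can measure recent accuracy on labeled production outcomes (for credit scoring, for example, once repayment results arrive). The names `RetrainPolicy` and `should_retrain` and all thresholds are hypothetical illustrations, not values from any particular tool.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class RetrainPolicy:
    # Hypothetical thresholds; tune them for each use case.
    max_accuracy_drop: float = 0.05  # tolerated drop vs. accuracy at deployment
    min_interval_days: int = 7       # cooldown: never retrain more often than this
    max_interval_days: int = 90      # scheduled refresh: retrain at least this often


def should_retrain(baseline_acc: float, recent_acc: float, days_since_retrain: int,
                   policy: RetrainPolicy = RetrainPolicy()) -> Tuple[bool, str]:
    """Decide whether to retrain, weighing accuracy loss against retraining cost."""
    if days_since_retrain >= policy.max_interval_days:
        return True, "scheduled refresh"
    if days_since_retrain < policy.min_interval_days:
        return False, "cooldown active"
    if baseline_acc - recent_acc > policy.max_accuracy_drop:
        return True, "accuracy degraded"
    return False, "within tolerance"


# Example: a model deployed at 91% accuracy now scores 84% on recent labeled data.
print(should_retrain(0.91, 0.84, days_since_retrain=30))  # → (True, 'accuracy degraded')
```

The cooldown (`min_interval_days`) limits wasted compute, while `max_interval_days` guarantees a periodic refresh even when the monitored metric looks stable. This sketch deliberately ignores a sharp drop inside the cooldown window. A production policy might alert a human in that case instead.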

MLOps and AI maintenance

MLOps (machine learning operations) is essential for maintaining AI systems and keeping them effective and reliable. By merging DevOps practices with machine learning, MLOps provides a framework for continuous integration and deployment (CI/CD) of AI models, which is crucial for keeping models up to date and functioning optimally.

Continuous integration and deployment (CI/CD)

MLOps excels in facilitating the continuous integration and deployment of AI models, ensuring they stay relevant as environments change. Through automated updates, MLOps addresses the need for timely model retraining, allowing AI systems to adapt to new data quickly. This automation enhances the reliability and accuracy of AI systems.
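A common way to automate the deployment step of such a pipeline is a promotion gate. A CI job evaluates a newly retrained candidate and replaces the production model only if the candidate performs at least as well. The sketch below assumes both models have already been scored on the same evaluation set. `passes_promotion_gate`, the `auc` metric name, and the latency limit are hypothetical choices for illustration.

```python
from typing import Mapping, Optional


def passes_promotion_gate(candidate: Mapping[str, float],
                          production: Mapping[str, float],
                          primary_metric: str = "auc",
                          min_gain: float = 0.0,
                          max_limits: Optional[Mapping[str, float]] = None) -> bool:
    """Return True if the candidate model may replace the production model."""
    # The primary metric is assumed to be "higher is better".
    if candidate[primary_metric] < production[primary_metric] + min_gain:
        return False
    # Guardrail metrics are assumed to be "lower is better" (e.g. latency).
    for name, limit in (max_limits or {}).items():
        if candidate[name] > limit:
            return False
    return True


prod = {"auc": 0.80}
cand = {"auc": 0.82, "latency_ms": 40.0}
print(passes_promotion_gate(cand, prod, max_limits={"latency_ms": 50.0}))  # → True
```

In a CI job, a failing gate would typically exit with a non-zero status so that the deployment stage does not run.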

Addressing AI maintenance challenges with MLOps

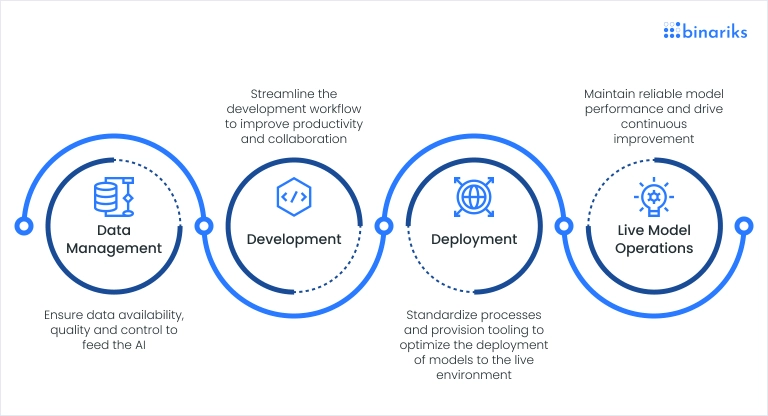

MLOps, much like traditional IT operations management, is crucial for optimizing the performance and maintenance of AI models. It involves creating pipelines that streamline processes, enhance productivity, and reduce risks. Unlike traditional software development, MLOps requires continuous operation and monitoring of models in production, with a focus on managing not just code but also data and model versions.

Here are the key challenges MLOps can handle:

- Model drift: MLOps automates the detection of model drift, enabling quick responses to changes in data patterns and maintaining model accuracy.

- Retraining models: MLOps streamlines retraining models by automating triggers for retraining, ensuring models remain aligned with current data.

- Data quality: MLOps integrates data validation into the CI/CD pipeline, ensuring high-quality data is used for model updates and preventing performance degradation.

- Scalability: MLOps enables efficient scaling of AI models across different environments, ensuring consistent performance.

- Interpretability and explainability: MLOps supports reproducibility and documentation by versioning data, code, and models, so the behavior of any deployed model can be traced and explained.

- Security and privacy: MLOps includes mechanisms to secure models and ensure compliance with privacy regulations, mitigating risks.

- Resource management: By automating tasks, MLOps optimizes resource use, making model maintenance both effective and efficient.

In summary, MLOps is critical for the ongoing operation of AI models, ensuring AI robustness and reliability.
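Drift detection is typically automated by comparing each input feature's distribution in production with its distribution at training time. One widely used statistic for this is the Population Stability Index (PSI), computed below with equal-width bins over the reference range. The function name, the bin count, and the epsilon smoothing are assumptions for illustration.

```python
import math
from typing import List, Sequence


def population_stability_index(reference: Sequence[float], current: Sequence[float],
                               n_bins: int = 10, eps: float = 1e-6) -> float:
    """PSI between a reference (training) sample and a current (production) sample.

    Assumes both samples are non-empty.
    """
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / n_bins or 1.0  # avoid zero-width bins for a constant feature

    def bin_shares(values: Sequence[float]) -> List[float]:
        counts = [0] * n_bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), n_bins - 1)] += 1  # clamp values outside the reference range
        # eps keeps empty bins from producing log(0) or division by zero
        return [max(c / len(values), eps) for c in counts]

    ref, cur = bin_shares(reference), bin_shares(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref, cur))


train_feature = [i / 100 for i in range(100)]       # spread over [0, 0.99]
prod_feature = [0.5 + i / 200 for i in range(100)]  # shifted toward the upper half
print(population_stability_index(train_feature, train_feature))  # → 0.0
print(population_stability_index(train_feature, prod_feature) > 0.25)  # → True
```

A frequently cited rule of thumb treats PSI below 0.1 as stable, 0.1 to 0.25 as moderate drift, and above 0.25 as significant drift that may warrant investigation or retraining. These thresholds are a convention, not a standard, so calibrate them on your own data.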

Validation and verification of AI models

Validating and verifying AI models are crucial processes for maintaining them and ensuring their long-term integrity. These steps involve thoroughly testing and evaluating models to confirm that they perform as expected and produce reliable results. Proper validation and verification help catch errors, detect degradation caused by model drift, and ensure that models remain aligned with their intended objectives.

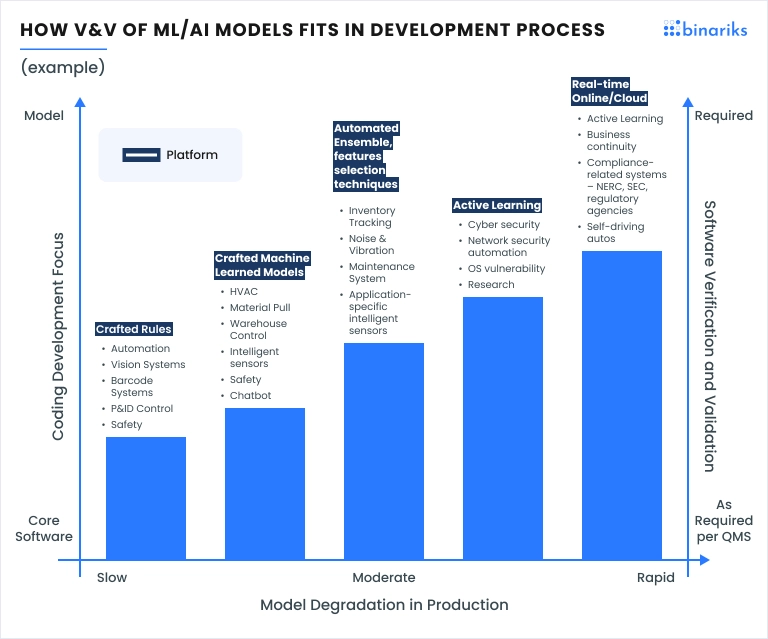

The chart above illustrates how vulnerable models are to degradation across applications. To reduce risks and ensure model certification, focus on two areas: 1) the platform running the model, which affects costs, and 2) verification and validation, which align with ethical engineering, quality standards, and values like those in the Montreal Declaration for Responsible AI Development (Source).

Model validation is essential in confirming that an AI model functions correctly on unseen data. It ensures the model's predictions are accurate and can generalize well to real-world scenarios.

Verification, however, focuses on checking whether the model was built correctly according to the specifications and whether it meets the business and technical requirements. Together, these processes are vital aspects of ML model management, helping to maintain the trustworthiness and effectiveness of AI systems.
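The distinction can be illustrated in a few lines. Validation measures performance on held-out data the model never saw. Verification checks measured properties against an agreed specification. The toy classifier, the `validate` and `verify` helpers, and the requirement names below are hypothetical.

```python
import operator
from typing import Callable, Dict, List, Sequence, Tuple

# Comparison operators allowed in the (hypothetical) requirements spec.
_OPS = {">=": operator.ge, "<=": operator.le}


def validate(predict: Callable[[Sequence[float]], int],
             holdout_x: Sequence[Sequence[float]], holdout_y: Sequence[int]) -> float:
    """Validation: accuracy on held-out data that was not used for training."""
    correct = sum(predict(x) == y for x, y in zip(holdout_x, holdout_y))
    return correct / len(holdout_y)


def verify(measured: Dict[str, float],
           requirements: Dict[str, Tuple[str, float]]) -> List[str]:
    """Verification: list every requirement the measured model properties violate."""
    failures = []
    for name, (op, bound) in requirements.items():
        if name not in measured:
            failures.append(f"{name}: not measured")
        elif not _OPS[op](measured[name], bound):
            failures.append(f"{name}: {measured[name]} violates {op} {bound}")
    return failures


def model(x: Sequence[float]) -> int:
    # Toy threshold classifier standing in for a real model.
    return int(x[0] > 0.5)


acc = validate(model, [[0.2], [0.7], [0.9], [0.4]], [0, 1, 0, 0])
spec = {"accuracy": (">=", 0.9), "p95_latency_ms": ("<=", 100.0)}
print(verify({"accuracy": acc, "p95_latency_ms": 80.0}, spec))
# → ['accuracy: 0.75 violates >= 0.9']
```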

Manual vs. automated validation methods

- Manual validation: Involves human experts reviewing the model's performance, testing it with specific scenarios, and assessing its behavior in different conditions. Manual validation allows for in-depth analysis and understanding of the model's decisions, making it possible to identify subtle issues that automated methods might miss. However, manual validation can be time-consuming and may not scale well, especially for large models or frequent updates.

- Automated validation: Uses algorithms and scripts to test the model across a wide range of conditions systematically. Automated methods are fast, scalable, and can cover many scenarios, making them ideal for continuous monitoring and frequent model updates. However, they might overlook context-specific issues that require human judgment to detect.

While automated methods are efficient and indispensable for ongoing monitoring, manual validation provides the depth and nuance needed to catch complex issues.
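One simple automated check that scales well is slice-based evaluation. It computes accuracy per data segment (for example region, customer type, or device) and flags segments that fall below a threshold. Segments too small to give a reliable estimate are skipped here, and those are exactly where manual review is useful. The function name, the record format, and both thresholds are assumptions.

```python
from collections import defaultdict
from typing import Dict, Hashable, Iterable, Tuple


def weak_segments(records: Iterable[Tuple[Hashable, int, int]],
                  min_accuracy: float = 0.8, min_count: int = 30) -> Dict[Hashable, float]:
    """Flag segments whose accuracy falls below min_accuracy.

    Each record is (segment, y_true, y_pred). Segments with fewer than
    min_count records are skipped because their accuracy estimate is too noisy.
    """
    totals: Dict[Hashable, int] = defaultdict(int)
    correct: Dict[Hashable, int] = defaultdict(int)
    for segment, y_true, y_pred in records:
        totals[segment] += 1
        correct[segment] += int(y_true == y_pred)
    return {
        seg: correct[seg] / n
        for seg, n in totals.items()
        if n >= min_count and correct[seg] / n < min_accuracy
    }


records = ([("north", 1, 1)] * 38 + [("north", 1, 0)] * 2
           + [("south", 0, 0)] * 28 + [("south", 0, 1)] * 12
           + [("east", 1, 0)] * 5)
print(weak_segments(records))  # → {'south': 0.7}
```

Note that "east" is wrong on every record but is not flagged, because five records fall below `min_count`. A segment like that is a good candidate for human inspection.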

Addressing data quality issues in model retraining

Poor-quality data is a significant challenge for model retraining in MLOps. When inaccurate or irrelevant data enters the system during retraining, it can lead to incorrect predictions and degrade the model's overall performance. Ensuring that only high-quality data is used in the retraining process is crucial for maintaining the reliability and accuracy of AI models.

The challenge of data quality

One of the most daunting tasks in retraining an AI model effectively is distinguishing correct data from incorrect data, especially in large datasets.

In a vast pool of data, errors can be subtle and challenging to identify, yet they can have a significant impact on the model's performance. For instance, mislabeled data, outliers, or data that no longer reflects the current environment can all lead to a model that produces inaccurate results after retraining.
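Mislabeled examples are among the hardest errors to spot. One inexpensive heuristic, assuming your features are discrete or exactly reproducible, is to look for identical feature vectors that carry different labels. The sketch below uses a hypothetical helper and catches only exact duplicates, so near-duplicates would need fuzzier matching. A conflict is a candidate for review, not proof of an error, because the features may not fully determine the outcome.

```python
from collections import defaultdict
from typing import Dict, Hashable, Iterable, Sequence, Set, Tuple


def conflicting_labels(rows: Iterable[Tuple[Sequence[Hashable], Hashable]]
                       ) -> Dict[Tuple[Hashable, ...], Set[Hashable]]:
    """Return feature vectors that appear with more than one label."""
    labels_by_features: Dict[Tuple[Hashable, ...], Set[Hashable]] = defaultdict(set)
    for features, label in rows:
        labels_by_features[tuple(features)].add(label)
    return {f: labels for f, labels in labels_by_features.items() if len(labels) > 1}


rows = [(["35-44", "urban"], "approve"), (["35-44", "urban"], "reject"),
        (["18-24", "rural"], "approve"), (["18-24", "rural"], "approve")]
print(conflicting_labels(rows))
```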

Solutions for ensuring data quality

To mitigate the risks associated with poor-quality data, several strategies can be employed:

- Data preprocessing: Implement thorough data preprocessing steps before retraining. This includes cleaning the data, removing outliers, correcting labels, and normalizing the data. Preprocessing ensures that the data fed into the model is as accurate and relevant as possible.

- Automated data validation: Incorporate automated data validation checks within the MLOps pipeline to flag and remove poor-quality data before it reaches the model. Automated tools can help in identifying anomalies or patterns that deviate from expected norms, reducing the likelihood of introducing bad data into the retraining process.

- Human review: For critical applications, consider incorporating a manual review of data, especially when new datasets are introduced. Human judgment can help identify issues that automated systems might miss, ensuring that the data used for retraining is both accurate and contextually relevant.

- Continuous monitoring: After retraining an AI model, implement continuous monitoring to track the model's performance. This helps quickly identify if the newly retrained model produces unexpected results, which could indicate the presence of poor-quality data in the training set.
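The preprocessing and automated validation steps above can be sketched as a gate that runs before retraining. Rows that violate a schema (missing values, wrong types, or out-of-range values) are quarantined with a reason, and numeric outliers are flagged with the interquartile-range (IQR) rule. The `SCHEMA` contents, the column names, and the 1.5 times IQR multiplier are illustrative assumptions. Dedicated data validation libraries offer richer checks.

```python
import statistics
from typing import Any, Dict, List, Optional, Tuple

# Hypothetical schema: column -> (accepted types, min, max). Adapt to your data.
SCHEMA: Dict[str, Tuple[Any, float, float]] = {
    "age": (int, 18, 100),
    "income": ((int, float), 0.0, 1e7),
}


def check_row(row: Dict[str, Any], schema=SCHEMA) -> Optional[str]:
    """Return the first problem found in a row, or None if it passes."""
    for col, (types, lo, hi) in schema.items():
        value = row.get(col)
        if value is None:
            return f"missing {col}"
        if not isinstance(value, types):
            return f"wrong type for {col}"
        if not lo <= value <= hi:
            return f"{col} out of range"
    return None


def split_rows(rows: List[Dict[str, Any]]) -> Tuple[List[dict], List[Tuple[dict, str]]]:
    """Separate clean rows from rejected rows (kept with a reason for review)."""
    clean, rejected = [], []
    for row in rows:
        problem = check_row(row)
        if problem is None:
            clean.append(row)
        else:
            rejected.append((row, problem))
    return clean, rejected


def iqr_outliers(values: List[float], k: float = 1.5) -> List[float]:
    """Flag values outside [Q1 - k*IQR, Q3 + k*IQR]; needs at least two values."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    return [v for v in values if v < q1 - k * iqr or v > q3 + k * iqr]


rows = [{"age": 30, "income": 52000.0}, {"income": 1000.0},
        {"age": 150, "income": 1.0}, {"age": "40", "income": 1.0}]
clean, rejected = split_rows(rows)
print(len(clean), [reason for _, reason in rejected])
# → 1 ['missing age', 'age out of range', 'wrong type for age']
print(iqr_outliers([10, 12, 11, 13, 12, 11, 100]))  # → [100]
```

Flagging outliers rather than silently deleting them leaves room for the human review step described above. An extreme value can reflect a genuine change in the environment rather than an error.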


MLOps tools and mechanisms for AI model management

Final thoughts

Having a robust AI support strategy is not just important — it's a cornerstone of maintaining AI systems' long-term reliability and effectiveness. As AI drives innovation across industries, ensuring these models remain accurate, trustworthy, and up-to-date is crucial. MLOps and continuous model management play a pivotal role in this process, offering the tools and frameworks needed to keep AI systems performing at their best over time.

At Binariks, we understand the complexities involved in AI model maintenance and the critical importance of AI trustworthiness. With our extensive experience in MLOps, AI model management, and advanced AI solutions, we help organizations build and maintain AI systems that are not only powerful but also reliable and scalable. Our team is equipped to implement best practices in model retraining, data validation, and performance monitoring, ensuring that your AI models continue to deliver accurate and actionable insights.

Partner with Binariks to harness the full potential of your AI systems. We create tailored solutions that address the unique challenges of AI maintenance, ensuring your models remain robust, reliable, and aligned with your business goals.
