Cyber attacks today can drain billions from organizations – yet those investing in AI and automation cut their breach costs by an average of nearly USD 2 million, according to IBM.

The pressure is mounting in finance, healthcare, and insurance. You're not just protecting sensitive data; you're juggling strict regulations and adapting to new risks. Forget chasing the latest buzz. Switching to AI-driven cybersecurity is a proven way to spot threats in real time, automate response, and protect your systems and reputation.

You might face endless false alarms, legacy challenges, or skill gaps within your team. Sorting out the noise takes clarity and hands-on expertise. Below, you'll find practical steps, genuine examples, and simple advice to help you use AI for security, compliance, and staying a step ahead.

- How AI-powered platforms bring smarter threat detection

- Ways to blend AI security with legacy systems and compliance needs

- How predictive analytics and behavioral AI stop attacks before they start

- Steps for human-AI teamwork, defending against attacks, and training your team

Core concepts and the evolution of AI-driven cybersecurity

If your industry is on regulators' radar, you're under pressure – no way around it. Threats have outgrown old-school firewalls and static rules. Trust in security feels like a moving target. Here's why AI-driven cybersecurity is changing the way regulated industries stay protected.

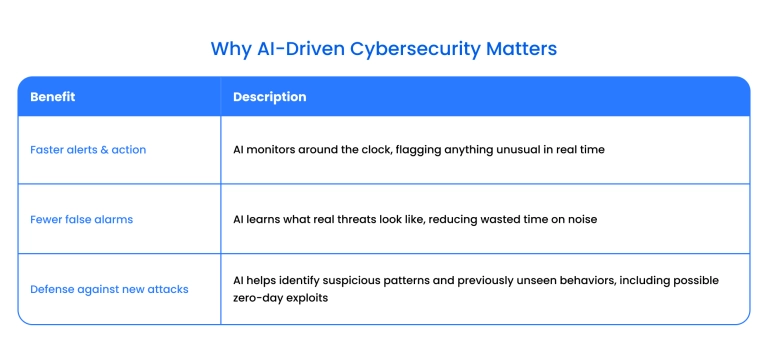

What does AI-driven cybersecurity do differently?

In finance, healthcare, and insurance, security isn't just putting up walls. Basic tools – antivirus or simple intrusion detection – cover familiar attacks. But modern threats keep changing. You need security that learns and adapts.

AI-driven cybersecurity uses techniques such as machine learning (ML), deep learning, and optimization algorithms to spot and stop cyber threats. Rather than following static rules, these tools scan huge amounts of data, find patterns, and flag trouble you might miss.

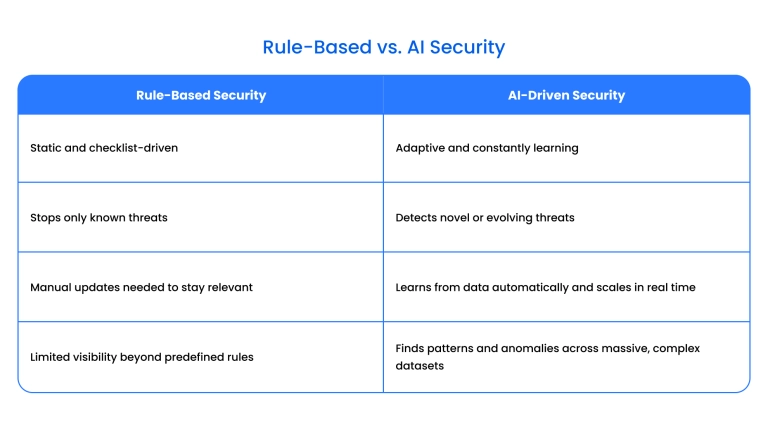

Rule-based defenses vs. AI security – what's the difference?

Picture a security guard with a checklist versus a detective putting clues together. Rule-based defenses only stop what's listed in the manual. If hackers try something new, they slip through the cracks.

AI models for financial services, healthcare, and insurance analyze massive datasets, ranging from login activity to user histories. They pick out what's abnormal.

IBM found that security teams are adopting AI at roughly the same pace as other business functions: 77% reported adoption that was on par with (43%) or more advanced than (34%) their wider organization.

How AI shifts the balance:

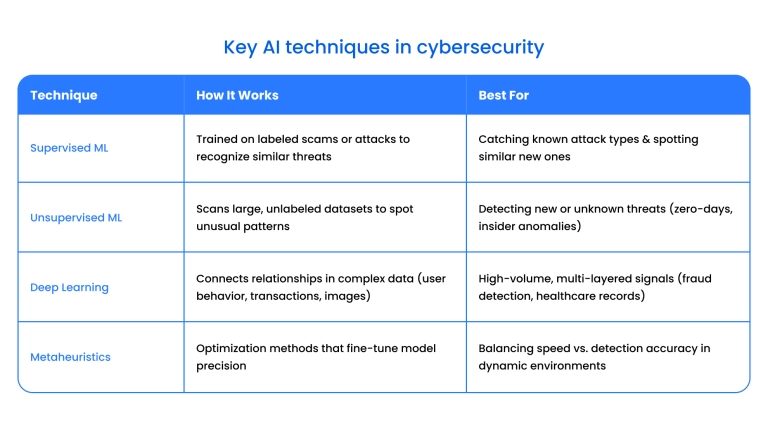

Advanced threat detection using ML, DL, and metaheuristics

Spotting threats now takes more than luck. ML models are like security dogs trained on countless attack types. Deep learning peels through data layers – user behavior, traffic histories, or patient records – finding signals no filter would catch.

Metaheuristics are optimization methods that can fine-tune detection models, improving their adaptability against evolving attack patterns. This combo sharpens detection for insurance, healthcare, and high-risk financial operations.
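
To make the idea concrete, here is a minimal sketch of one metaheuristic, simulated annealing, tuning a single detection parameter: the score threshold above which an event is flagged. The labelled sample, the step size, and the cooling schedule are all illustrative assumptions, not values from any real product.

```python
import math
import random

# Hypothetical labelled sample: (anomaly score from a detector, 1 = real attack, 0 = benign).
SCORED_EVENTS = [
    (0.05, 0), (0.10, 0), (0.20, 0), (0.30, 0), (0.35, 0),
    (0.40, 1), (0.45, 0), (0.55, 0), (0.60, 1), (0.65, 1),
    (0.70, 0), (0.80, 1), (0.85, 1), (0.90, 1), (0.95, 1),
]


def f1_at(threshold, events=SCORED_EVENTS):
    """F1 score when every event scoring at or above `threshold` is flagged."""
    tp = sum(1 for s, y in events if s >= threshold and y == 1)
    fp = sum(1 for s, y in events if s >= threshold and y == 0)
    fn = sum(1 for s, y in events if s < threshold and y == 1)
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


def anneal_threshold(start=0.1, steps=500, seed=0):
    """Simulated annealing over one parameter: the alert threshold."""
    rng = random.Random(seed)
    current, current_f1 = start, f1_at(start)
    best, best_f1 = current, current_f1
    for step in range(steps):
        temperature = max(1e-3, 1.0 - step / steps)  # linear cooling schedule
        candidate = min(1.0, max(0.0, current + rng.uniform(-0.1, 0.1)))
        candidate_f1 = f1_at(candidate)
        delta = candidate_f1 - current_f1
        # Always accept improvements; accept worse moves with a shrinking probability.
        if delta >= 0 or rng.random() < math.exp(delta / temperature):
            current, current_f1 = candidate, candidate_f1
        if current_f1 > best_f1:
            best, best_f1 = current, current_f1
    return best, best_f1


tuned_threshold, tuned_f1 = anneal_threshold()
print(f"start F1={f1_at(0.1):.2f}, tuned threshold={tuned_threshold:.2f}, tuned F1={tuned_f1:.2f}")
```

Accepting an occasional worse threshold early on is what lets the search escape a poor starting point. In practice the same loop would tune several model parameters at once and score them on held-out data.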

Bringing AI to zero trust and classic defenses

You don't have to toss out your old tools. Most regulated industries wisely build on what works: firewalls, anti-malware, and network filters. The key is layering AI into these stacks.

Zero trust is now a must for high-risk sectors. Every device and user needs verification, every time. AI steps up by catching subtle signals that might mean a breach – even when everything else seems fine.

Practical steps for blending AI:

- Review your systems – See what works, what's lagging, and where data crosses platforms.

- Insert AI where it matters – Place AI detection at critical checkpoints, like logins and sensitive records.

- Automate the basics – Let AI block low-risk events automatically; save real emergencies for your team.

- Track model accuracy – Watch for "drift" – models can get out of tune. Regular feedback keeps them sharp.

- Mix new with old – Audit and compliance should work hand in hand with AI, giving regulators clear proof of security.
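
The "track model accuracy" step can be sketched with a simple drift check. The example below uses the Population Stability Index (PSI), one common way to compare the score distribution a model produced at validation time with what it produces now. The sample scores, the five-bucket layout, and the 0.25 alert limit (a widely quoted rule of thumb, not a standard) are assumptions for illustration.

```python
import math

DRIFT_LIMIT = 0.25  # assumed rule-of-thumb limit; tune it for your own models


def population_stability_index(baseline, recent, bins=5):
    """PSI between two samples of model scores in [0, 1]; larger means more drift."""
    def bucket_shares(scores):
        counts = [0] * bins
        for s in scores:
            index = min(int(s * bins), bins - 1)  # clamp a score of 1.0 into the last bucket
            counts[index] += 1
        # A small floor avoids log(0) when a bucket is empty.
        return [max(c / len(scores), 1e-6) for c in counts]

    expected = bucket_shares(baseline)
    actual = bucket_shares(recent)
    return sum((a - e) * math.log(a / e) for a, e in zip(actual, expected))


# Hypothetical anomaly scores: at validation time vs. the most recent week.
baseline_scores = [0.10, 0.15, 0.20, 0.30, 0.35, 0.40, 0.50, 0.55, 0.60, 0.90]
recent_scores = [0.60, 0.65, 0.70, 0.75, 0.80, 0.85, 0.90, 0.90, 0.95, 0.95]

psi = population_stability_index(baseline_scores, recent_scores)
if psi > DRIFT_LIMIT:
    print(f"PSI={psi:.2f}: score distribution has shifted, schedule a model review")
```

A check like this only says that the inputs or scores look different from before. Whether accuracy actually dropped still has to be confirmed against labelled incidents.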

Zero trust, adaptability, and smart security aren't just trending terms – they protect your business and help you meet regulatory requirements.

Backbone of AI security: Datasets, testing, and benchmarks

AI security improves with data. The best systems are trained on millions of real and simulated threats. MIT Lincoln Laboratory and others maintain open datasets that are used to benchmark new models.

Benchmarking is your scorecard. It proves your AI catches the right threats, avoids false alarms, and works quickly. In regulated industries, you need that clarity.

Steps for solid benchmarking:

- Use trusted datasets from reputable sources.

- Run controlled simulations – test speed and accuracy against real attacks.

- Compare results against standard benchmarks.

- Document testing methods and adjust systems based on feedback.
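
As a rough sketch of what the scorecard looks like, the snippet below computes three figures that are commonly documented for a detection model: precision, recall, and false positive rate. The labels and predictions are made-up placeholders; in a real benchmark they would come from a trusted dataset and your model's output.

```python
def benchmark(labels, predictions):
    """Compare ground-truth labels with model predictions (1 = attack, 0 = benign)."""
    tp = sum(1 for y, p in zip(labels, predictions) if y == 1 and p == 1)
    fp = sum(1 for y, p in zip(labels, predictions) if y == 0 and p == 1)
    tn = sum(1 for y, p in zip(labels, predictions) if y == 0 and p == 0)
    fn = sum(1 for y, p in zip(labels, predictions) if y == 1 and p == 0)
    return {
        "precision": tp / (tp + fp) if tp + fp else 0.0,            # flagged events that were real
        "recall": tp / (tp + fn) if tp + fn else 0.0,               # real attacks that were caught
        "false_positive_rate": fp / (fp + tn) if fp + tn else 0.0,  # benign events wrongly flagged
    }


# Hypothetical test run: 4 attacks and 6 benign events.
labels = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
predictions = [1, 1, 1, 0, 1, 0, 0, 0, 0, 0]

results = benchmark(labels, predictions)
for metric, value in results.items():
    print(f"{metric}: {value:.2f}")
```

Recording these numbers for every test run, along with the dataset and model version, gives auditors a trail they can follow.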

If you manage cyber risks, knowing your AI is proven and documented builds confidence and satisfies regulators.

Innovative AI threat detection: Smart wins for regulated industries

Struggling to keep up with new threats and ever-changing compliance rules? You're not alone: organizations across regulated industries face the same mounting pressure.

Traditional security approaches often struggle to keep pace with sophisticated attack vectors while maintaining regulatory adherence. AI-driven security platforms offer promising solutions for enhancing threat detection, incident response, and overall security posture.

Real-time detection and automated response – AI never sleeps

Manual security monitoring has inherent limitations in detecting sophisticated threats that can evade traditional rule-based systems. AI-based security platforms provide continuous monitoring capabilities, analyzing network traffic, user behavior, email communications, and system activities using machine learning algorithms.

These systems can identify patterns indicative of phishing attempts, ransomware deployment, or unauthorized access attempts more rapidly than manual analysis. When properly configured, automated response systems can execute predetermined actions such as network isolation, access revocation, or alert escalation based on threat severity levels.
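
One way to picture severity-based response is as a lookup from severity level to a list of predetermined actions. The sketch below is illustrative only: the severity scale, the action names, the `soc-on-call` target, and the host and user names are hypothetical, and a real platform would call its own isolation and identity APIs instead of returning strings.

```python
from dataclasses import dataclass
from enum import IntEnum


class Severity(IntEnum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3


@dataclass(frozen=True)
class Detection:
    kind: str          # e.g. "phishing", "ransomware", "unauthorized_access"
    host: str
    user: str
    severity: Severity


# Hypothetical playbook: which predetermined actions run at each severity level.
PLAYBOOK = {
    Severity.LOW: ["escalate_alert"],
    Severity.MEDIUM: ["revoke_access", "escalate_alert"],
    Severity.HIGH: ["isolate_host", "revoke_access", "escalate_alert"],
}


def plan_response(detection):
    """Return the ordered (action, target) pairs for a detection."""
    targets = {
        "isolate_host": detection.host,
        "revoke_access": detection.user,
        "escalate_alert": "soc-on-call",
    }
    return [(action, targets[action]) for action in PLAYBOOK[detection.severity]]


ransomware = Detection("ransomware", host="ws-0142", user="jdoe", severity=Severity.HIGH)
for action, target in plan_response(ransomware):
    print(f"{action} -> {target}")
```

Keeping the playbook as plain data makes it easy to review with compliance staff and to change without touching the detection logic.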

Here's what you gain:

- Faster threat detection and response times

- Reduced manual analysis workload for security teams

- Automated compliance reporting and audit trail generation

Predictive threat intelligence – spot risks before they strike

Advanced AI systems can analyze global threat intelligence feeds, historical attack data, and emerging threat patterns to identify potential security risks before they materialize into active threats. Machine learning models trained on large datasets can recognize subtle indicators of compromise that might precede actual attacks.

For organizations handling sensitive data in banking, healthcare, and insurance sectors, early warning systems can flag anomalous activities such as unusual login patterns, unexpected data access requests, or irregular file transfers. This proactive approach enables security teams to investigate and mitigate risks before they escalate.

According to IBM's Cost of a Data Breach Report, organizations using AI and automation experienced significantly lower breach costs than those relying solely on manual security processes, with average savings of USD 1.9 million.

Behavioral analytics for insider threat detection

Insider threats, whether malicious or inadvertent, present unique challenges for traditional security systems. Behavioral analytics platforms use machine learning to establish baseline user behavior patterns and identify deviations that may indicate security risks.

To create behavioral profiles, these systems analyze access patterns, data usage, geographic locations, and system interactions. When significant deviations occur – such as unusual data downloads, access attempts from unexpected locations, or privilege escalation requests – the system can generate alerts for security team review.

Key capabilities include:

- Detection of anomalous user behavior patterns

- Identification of potential privilege abuse or fraudulent activities

- Context-aware alerting to reduce false positives and improve response efficiency
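
The baseline-and-deviation idea described above can be sketched in a few lines. This is a deliberately simple statistical stand-in for what commercial behavioral analytics tools do with richer models: it profiles one user's daily download volume and usual countries, then flags activity that sits far outside that profile. The history, the three-standard-deviation limit, and the finding names are assumptions.

```python
from statistics import mean, stdev


def build_profile(history):
    """Summarize past activity. `history` is a list of (mb_downloaded, country) per day."""
    volumes = [mb for mb, _ in history]
    return {
        "mean_mb": mean(volumes),
        "stdev_mb": stdev(volumes),  # needs at least two days of history
        "countries": {country for _, country in history},
    }


def find_deviations(profile, mb_downloaded, country, z_limit=3.0):
    """Return the list of ways today's activity departs from the baseline."""
    findings = []
    spread = profile["stdev_mb"]
    # With zero spread a z-score is undefined, so the volume check is skipped.
    if spread > 0:
        z_score = (mb_downloaded - profile["mean_mb"]) / spread
        if z_score > z_limit:
            findings.append("unusual_download_volume")
    if country not in profile["countries"]:
        findings.append("unexpected_location")
    return findings


# Hypothetical week of activity for one user.
profile = build_profile([(100, "DE"), (120, "DE"), (90, "DE"), (110, "DE"), (105, "DE")])

print(find_deviations(profile, 115, "DE"))   # an ordinary day
print(find_deviations(profile, 900, "BR"))   # large download from a new country
```

Requiring more than one finding before paging an analyst is one simple way to add the context that keeps false positives down.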

Protecting AI systems from tampering

AI security systems themselves require protection against various attack vectors. Adversarial attacks can compromise machine learning models through data poisoning, model evasion techniques, or training data manipulation.

Organizations implementing AI-based security solutions should consider:

- Implementing robust data validation and sanitization processes

- Conducting regular adversarial testing to identify model vulnerabilities

- Monitoring for model drift that can degrade detection accuracy over time

- Establishing strict access controls and change management for AI systems

AI and regulatory compliance considerations

Regulated industries face complex compliance requirements under frameworks such as HIPAA, SOX, PCI DSS, and GDPR. AI systems can support compliance efforts through several mechanisms:

- Data protection and encryption: AI can help identify and classify sensitive data, ensuring appropriate protection measures are applied consistently across systems.

- Advanced pattern recognition: Machine learning algorithms can detect suspicious activities that may indicate fraud, data breaches, or other compliance violations.

- Audit trail automation: AI systems can generate comprehensive, tamper-evident logs that support regulatory reporting requirements.

Integration with existing infrastructure

Many organizations operate hybrid environments combining legacy systems with modern security tools.

AI-powered security solutions can often integrate with existing infrastructure through APIs and standard protocols, enabling enhanced capabilities without requiring complete system replacements.

Building reliable audit capabilities

Compliance requirements often mandate detailed audit trails and reporting capabilities. Advanced AI systems can be designed to work with immutable logging systems or blockchain-based record-keeping to ensure audit trail integrity.

These approaches provide:

- Automated, tamper-resistant log generation

- Streamlined audit preparation and reporting

- Enhanced stakeholder confidence in data integrity
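
A hash chain is the basic building block behind tamper-evident logs, and a minimal sketch shows why edits become detectable. Each entry stores the SHA-256 hash of its own content plus the hash of the previous entry, so changing any earlier record breaks every hash after it. The event fields and the all-zero starting hash are illustrative assumptions, and this is not a substitute for a managed immutable log store.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # assumed placeholder for "no previous entry"


def entry_hash(event, prev_hash):
    """Hash the event together with the previous entry's hash."""
    payload = json.dumps({"event": event, "prev_hash": prev_hash}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_entry(log, event):
    prev_hash = log[-1]["hash"] if log else GENESIS_HASH
    log.append({"event": event, "prev_hash": prev_hash, "hash": entry_hash(event, prev_hash)})


def verify(log):
    """Recompute the chain from the start; any edited or reordered entry fails."""
    prev_hash = GENESIS_HASH
    for entry in log:
        if entry["prev_hash"] != prev_hash or entry["hash"] != entry_hash(entry["event"], prev_hash):
            return False
        prev_hash = entry["hash"]
    return True


audit_log = []
append_entry(audit_log, {"actor": "jdoe", "action": "login", "record": None})
append_entry(audit_log, {"actor": "jdoe", "action": "export", "record": "claim-0001"})
append_entry(audit_log, {"actor": "jdoe", "action": "logout", "record": None})

# Simulate someone rewriting the second entry after the fact.
tampered = [dict(entry) for entry in audit_log]
tampered[1]["event"] = {"actor": "svc-backup", "action": "read", "record": "claim-0001"}

print(verify(audit_log), verify(tampered))
```

The chain makes tampering evident rather than impossible: someone able to rewrite the whole log could recompute every hash, which is why the latest hash is usually also stored somewhere the log writer cannot change.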

Implementation considerations

When evaluating AI-powered security solutions, organizations should consider factors such as:

- Integration complexity with existing systems

- Staff training requirements for new technologies

- Ongoing maintenance and model updating needs

- Compliance with industry-specific regulations

- Total cost of ownership, including implementation and operational costs

AI-driven threat detection represents a significant advancement in cybersecurity capabilities for regulated industries, offering improved detection accuracy, faster response times, and enhanced compliance support when properly implemented and maintained.

Facing future challenges and making human-AI teams stronger

In highly regulated industries like finance and healthcare, security pressures grow yearly. AI won't solve everything, but it makes security smarter, more adaptive, and more manageable.

Looking ahead, organizations must be prepared for new categories of risks:

- Adversarial attacks: Hackers manipulate inputs to trick AI models into misclassifying threats.

- Data poisoning: Malicious actors inject false data into training pipelines to sabotage models.

- Bias and fairness: Poor-quality or unbalanced datasets can lead to unsafe or unfair decisions.

Ethics and trust in AI

Bias and transparency aren't optional — they're legal and operational requirements. AI systems used in financial or health contexts must offer explainability so teams and auditors understand why decisions are made. Regular reviews of training data are key to preventing bias and ensuring fair outcomes.

Defending AI models

Attackers constantly probe for weaknesses. Organizations can strengthen defenses by:

- Running regular audits of AI models.

- Training only on clean, verified data.

- Combining AI with skilled human oversight.

- Using red-teaming exercises to test resilience.

- Updating models and policies as threats evolve.

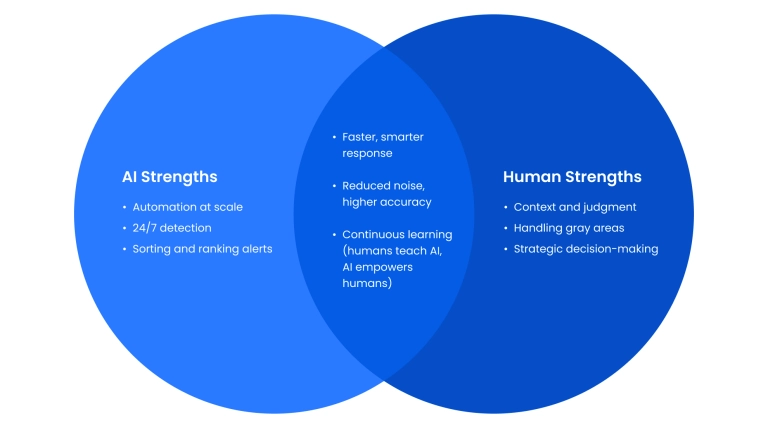

Human–AI collaboration

AI isn't a replacement for people — it's a force multiplier. AI handles large-scale monitoring and repetitive tasks, while humans bring judgment, context, and ethical oversight. Effective collaboration means:

- Dashboards that give humans real-time visibility.

- AI sorting and ranking alerts, with humans making the final calls.

- Feedback loops where human expertise improves AI models.
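
The division of labor above can be sketched as a small triage loop: the model ranks the queue, an analyst makes the call, and the verdict is kept as a label for the next retraining run. The scoring rule (model score times asset criticality), the 1 to 5 criticality scale, and the field names are hypothetical choices for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Alert:
    alert_id: str
    model_score: float               # 0..1, produced by the detection model
    asset_criticality: int           # 1 (low) to 5 (critical); hypothetical scale
    analyst_verdict: Optional[str] = None


def rank_alerts(alerts):
    """AI-side step: order the queue so the riskiest alerts are reviewed first."""
    return sorted(alerts, key=lambda a: a.model_score * a.asset_criticality, reverse=True)


def record_verdict(alert, verdict):
    """Human-side step: the analyst decides, and the decision becomes a training label."""
    if verdict not in {"true_positive", "false_positive"}:
        raise ValueError(f"unknown verdict: {verdict}")
    alert.analyst_verdict = verdict
    return {
        "alert_id": alert.alert_id,
        "model_score": alert.model_score,
        "label": int(verdict == "true_positive"),
    }


queue = rank_alerts([
    Alert("A1", model_score=0.9, asset_criticality=1),
    Alert("A2", model_score=0.6, asset_criticality=5),
    Alert("A3", model_score=0.3, asset_criticality=2),
])
feedback = [record_verdict(queue[0], "true_positive")]
print([a.alert_id for a in queue], feedback)
```

Note that the model never closes an alert on its own here. It only changes the order in which a person sees them.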

Upskilling teams

AI literacy is essential. Security staff need more than tools — they need training in how AI works, its limitations, and how to validate its decisions. Workshops, simple reference guides, and ongoing exercises keep teams adaptive and confident.

Final thoughts

Cybersecurity is no longer a choice between human and AI – it's both, working together. The future belongs to organizations that combine smart automation, strong oversight, and continuous learning.

If your industry is racing toward smarter, AI-driven security, remember: partnership is the real game plan. Technology and expertise together beat both hype and hackers. Now's the time to invest in tools and learning, reach out, and make cybersecurity an ongoing journey built on clarity and teamwork.
