The rapid development of AI technologies and machine learning is changing the business landscape of all industries. Sources suggest that more than 90% of companies worldwide admit to using, or at least exploring, AI.

Small companies that use AI for generic office tasks can continue to use it casually. However, large companies with multiple teams experimenting with AI need to organize their AI usage if they want to scale. The AI center of excellence is a powerful solution for centralizing AI strategy, especially for businesses in highly regulated industries or companies building AI features into customer-facing products.

This article explains how to set up an AI center of excellence from scratch, which best practices to put in place, and how to use it to achieve a tangible enterprise AI transformation.

What is an AI Center of Excellence (AI CoE)?

An AI Center of Excellence (AI CoE) is a central team within a company that plans and coordinates all AI efforts across departments.

According to AIMultiple, an AI CoE provides:

- Strategic alignment between business goals and AI capabilities

- Shared best practices, governance, and compliance standards

- A centralized repository of models, tools, and reusable components

- AI-specific infrastructure, talent, and methodologies

Microsoft's Cloud Adoption Framework (CAF) defines an AI CoE as a means to accelerate AI adoption by leveraging reusable patterns, clarifying roles, and improving organizational readiness.

In particular, the Artificial Intelligence Center of Excellence is responsible for:

- Identifying machine learning and AI use cases that fit the business strategy

- Identifying typical patterns in AI use across different business units

- Guiding a company-wide AI strategy

- Choosing appropriate software and hardware and matching them to AI workloads

- Establishing best practices for every stage of technical and business AI implementation

- Resolving technical issues that occur throughout the development process

- Mitigating risks associated with AI strategy implementation

There are three common terms used to describe the AI center of excellence for enterprise: AI CoE, ML CoE, and Generative AI CoE.

- AI CoE is an umbrella-level term that covers all AI-related strategy, governance, infrastructure, and initiatives. It often includes both ML and GenAI as subdomains.

- ML CoE refers to a subdomain that exclusively covers ML expertise and data science. It works on use cases such as prediction, classification, and regression, and relies heavily on data scientists and ML engineers.

- Generative AI CoE is a subdomain that focuses on LLMs and prompt engineering.

An AI CoE is preferable to a decentralized approach when different teams work on distinct AI tasks without a shared strategy or set of tools, because it establishes unified standards for AI strategy. This is key to creating AI solutions that actually scale.

A centralized repository of tools and reusable components lowers the risk of AI projects failing and shortens time-to-value. The AI CoE also ensures that AI initiatives support broader business goals rather than just local departmental needs. Finally, CoE teams are better equipped to manage ethical, legal, and security risks.

Why enterprises need an AI CoE: Key business drivers

The artificial intelligence center of excellence solves a spectrum of AI-related issues, both technical and business. Here are the main problems it helps with:

1. Lack of strategic alignment

Decentralized AI initiatives often don’t support business goals or deliver measurable impact.

CoE solves it by:

- Establishing a centralized AI roadmap tied to business OKRs and enterprise strategy

- Running use case intake and prioritization processes with scoring criteria (value, feasibility, risk)

- Assigning business sponsors to each AI initiative for responsibility and strategic ownership

- Defining core roles: ML engineers, MLOps, LLM architects, AI PMs, AI compliance leads

- Maintaining a skills matrix and capability maturity model

2. Siloed and duplicated efforts

Decentralized teams work in isolation with inconsistent tools. More often than not, this leads to wasted resources. Teams create one-off processes with inconsistent quality and outcomes, and pull in different directions with unclear goals.

CoE:

- Creates a shared repository of resources

- Defines an approved tech stack and platform standards for ML and GenAI

- Facilitates cross-functional collaboration

- Establishes a delivery lifecycle (Intake → POC → Pilot → Production → Monitor → Retire)

- Publishes standard operating procedures (SOPs) and onboarding guides

- Defines a clear AI vision and charter, endorsed by senior leadership

- Creates communication channels (dashboards, town halls, stakeholder syncs)

- Uses shared documentation and OKR alignment to maintain transparency and coherence
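A delivery lifecycle like the one above is easier to enforce when tooling, not just documentation, controls how use cases move between stages. The Python sketch below models the lifecycle as a simple state machine: a use case may only move one stage forward, or be retired early, and only after its gate review is approved. The `Stage` enum, the `advance` function, and the early-retirement rule are illustrative assumptions rather than a prescribed CoE standard.

```python
from enum import Enum


class Stage(Enum):
    """Stages of the CoE delivery lifecycle, in order."""
    INTAKE = 1
    POC = 2
    PILOT = 3
    PRODUCTION = 4
    MONITOR = 5
    RETIRE = 6


# Assumed transition rule: each stage moves only to the next one.
NEXT_STAGE = {
    Stage.INTAKE: Stage.POC,
    Stage.POC: Stage.PILOT,
    Stage.PILOT: Stage.PRODUCTION,
    Stage.PRODUCTION: Stage.MONITOR,
    Stage.MONITOR: Stage.RETIRE,
}


def advance(current: Stage, target: Stage, gate_approved: bool) -> Stage:
    """Return the new stage, or raise if the move is illegal or unapproved."""
    if current is Stage.RETIRE:
        raise ValueError("A retired use case cannot move to another stage")
    # Assumption: any active use case may also be retired early (e.g. a failed POC).
    if target is not NEXT_STAGE[current] and target is not Stage.RETIRE:
        raise ValueError(f"Cannot move from {current.name} to {target.name}")
    if not gate_approved:
        raise PermissionError(f"Gate review for {target.name} has not been approved")
    return target


if __name__ == "__main__":
    stage = Stage.INTAKE
    stage = advance(stage, Stage.POC, gate_approved=True)
    stage = advance(stage, Stage.PILOT, gate_approved=True)
    print(stage.name)  # PILOT
```

Skipping a stage (for example, Intake straight to Production) raises an error, which mirrors the idea that every use case passes the same checkpoints.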

3. Stalled AI pilots

When companies launch AI pilot projects (small-scale experiments to test a concept), many never make it into real use across the organization because it is unclear who is responsible for maintaining the project after the pilot is done, and there is no infrastructure to handle it.

CoE:

- Provides ready-to-use MLOps/LLMOps pipelines and DevOps support

- Publishes deployment playbooks and model promotion criteria

- Assigns a delivery lead to each pilot, who is expected to support the project through full adoption

4. Fragmented AI governance and compliance risks

Without consistent governance, AI models can breach regulatory requirements and expose the company to compliance risks.

CoE:

- Enforces Responsible AI policies (aligned with frameworks like NIST AI RMF or ISO 42001)

- Implements model review boards for high-risk use cases (especially GenAI)

- Ensures every model has documentation (model cards, data sheets, bias/fairness assessments)

5. Poor return on AI/GenAI investments

This is a common situation with decentralized AI projects: the company buys the tools, but the business impact remains unclear or is never realized.

CoE:

- Links every use case to KPIs and ROI metrics

- Facilitates post-deployment reviews and ROI reporting

- Works with product owners to design solutions around real user workflows, not just tech

6. Lack of model monitoring and lifecycle management

After a model is deployed, its performance can gradually decline if there is no system in place to monitor it.

CoE:

- Implements centralized monitoring tools

- Maintains a model registry with version control and usage logs

7. Scalability roadblocks

Even partially successful pilots may not scale across regions or business units without proper planning, as they often lack the components needed for universal applicability.

CoE:

- Standardizes architecture patterns (microservices, containerization, serverless)

- Allows multi-tenant AI models or APIs with clear SLAs

- Provides reusable pipelines and deployment scripts to replicate success

8. Over-reliance on vendors or external partners

Outsourcing AI is common practice. The problem is that many organizations do so without developing the internal capabilities to manage it, and because AI becomes embedded in other business processes, they end up regretting that dependency.

CoE:

- Builds internal capability via mentoring and gradual handoff

- Codifies best practices into internal playbooks and frameworks

- Establishes a knowledge transfer plan during all vendor-led implementations

Operating model for an AI Center of Excellence

The operating model of the AI Center of Excellence defines how the center works. Its mission is set by the executive sponsor together with business executives and includes tangible strategic objectives, along with KPIs that show whether they are met. Typical KPIs include revenue, cost savings, and time-to-market.

Key roles

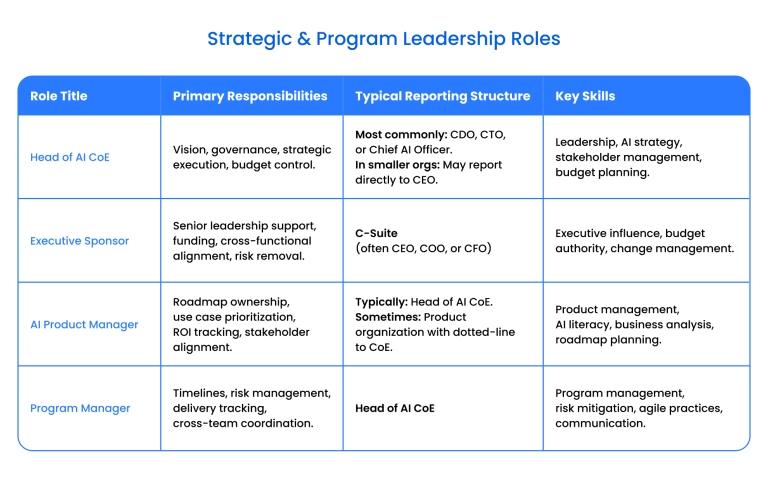

An effective AI CoE is built around a cross-functional, multidisciplinary team with clearly defined responsibilities. This team blends strategic, technical, operational, and domain-specific expertise. The tables below outline core roles across different functional areas, with typical reporting structures that may vary based on organizational size, maturity, and governance model.

Note on organizational structure: The reporting relationships shown reflect common patterns across mid-sized to large enterprises. In practice, CoE structures vary significantly based on organizational maturity, size, and industry.

Smaller organizations often combine roles (e.g., one person handling both ML Engineering and MLOps), while larger enterprises maintain specialized teams with dedicated leads. Federated models (where business units co-own resources with the CoE) are increasingly common, resulting in matrixed reporting where individuals report both to a functional leader and to CoE leadership.

These leadership roles establish the strategic direction and ensure alignment between AI initiatives and business objectives.

- The Executive Sponsor provides top-down support, while the Head of AI CoE translates vision into operational execution.

- Product and Program Managers bridge strategy with day-to-day delivery.

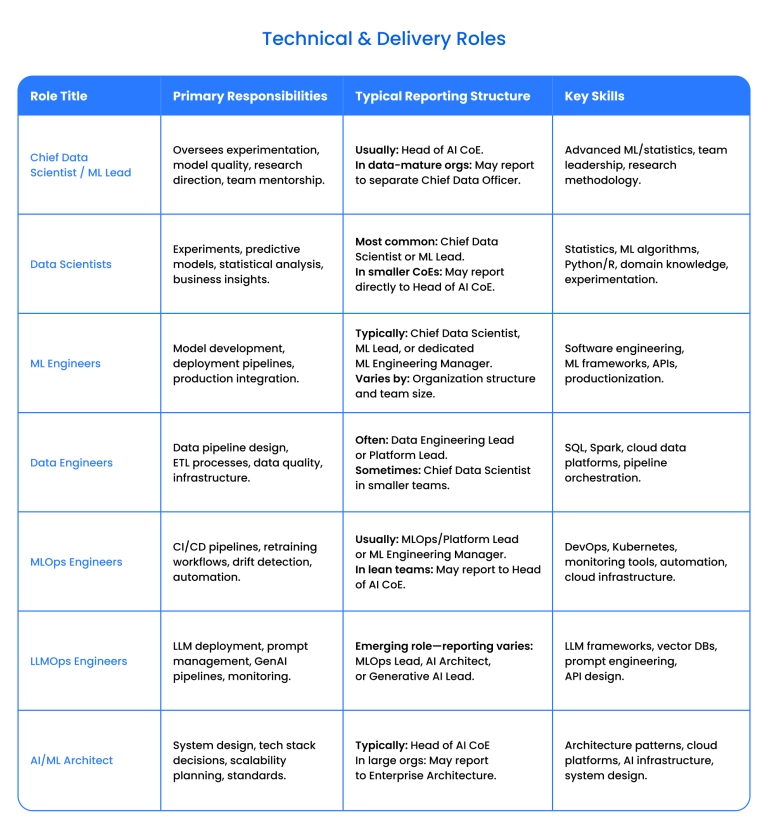

Technical roles form the core engine of the AI CoE, responsible for building, deploying, and maintaining AI/ML systems. This group includes both research-oriented positions (Data Scientists) and engineering-focused roles (ML Engineers, MLOps).

The distinction between Data Scientists and ML Engineers is critical:

- Data Scientists focus on experimentation and model development, while ML Engineers specialize in productionizing models and ensuring they run reliably at scale.

- LLMOps Engineers represent an emerging specialization within MLOps, specifically focused on the unique requirements of large language models and generative AI systems.

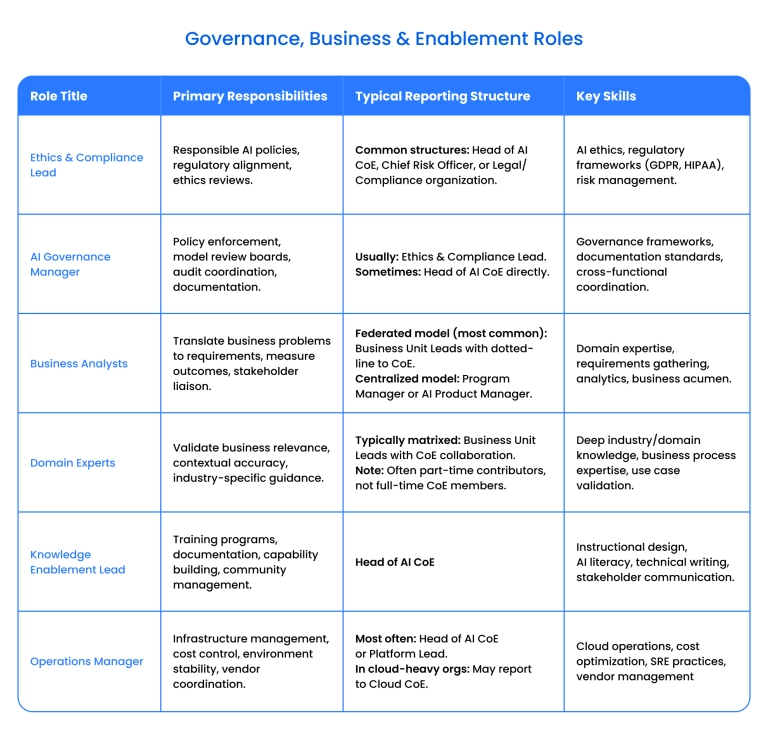

Governance and enablement roles ensure that AI initiatives remain compliant, ethical, and aligned with business needs.

- Ethics & Compliance Leads are particularly critical in regulated industries (healthcare, finance, government) where AI systems must meet strict regulatory requirements.

- Business Analysts and Domain Experts often operate in federated models – they maintain primary reporting relationships within their business units while collaborating closely with the CoE on AI initiatives.

This matrixed structure ensures AI solutions address real business problems with appropriate domain context.

- Knowledge Enablement Leads democratize AI capabilities across the organization, build internal competency, and reduce dependency on external expertise.

Staffing considerations: Not every CoE requires all these roles from day one.

- Start with a core team consisting of the Head of AI CoE, Executive Sponsor, Chief Data Scientist (or equivalent technical lead), an MLOps/LLMOps engineer, a data engineer, and an Ethics & Compliance Lead.

- Expand the team incrementally as use cases scale and organizational maturity increases. Many successful CoEs begin with 5-8 core members and grow to 15-25+ over 12-18 months, depending on the organization's AI ambitions and resource availability.

Decision rights & ownership model

A successful CoE strikes a balance between centralized oversight and decentralized execution. Key components include:

- Centralized governance with defined standards, infrastructure, and rules.

- Federated execution means that business units co-own specific use cases but follow CoE processes and toolchains.

- Clear RACI (Responsible–Accountable–Consulted–Informed) matrix: For example, the CoE owns model validation and data pipeline standards; business units own domain-specific data and problem statements.
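A RACI matrix stays consistent more easily when it is stored as data and checked automatically. The Python sketch below encodes the example split described above (the CoE owns model validation and data pipeline standards; business units own domain-specific data and problem statements) and flags activities that break the usual RACI convention of exactly one Accountable party and at least one Responsible party. The party names and the `validate_raci` helper are hypothetical.

```python
# Hypothetical RACI matrix: activity -> {party: roles}, where roles is any
# combination of the letters R, A, C, I (e.g. "RA" = responsible and accountable).
RACI = {
    "Model validation": {"AI CoE": "RA", "Business unit": "C", "Compliance": "C"},
    "Data pipeline standards": {"AI CoE": "RA", "Business unit": "I"},
    "Domain-specific data": {"Business unit": "RA", "AI CoE": "C"},
    "Problem statements": {"Business unit": "RA", "AI CoE": "C"},
}


def validate_raci(matrix: dict[str, dict[str, str]]) -> list[str]:
    """Return a list of problems; an empty list means the matrix is consistent."""
    problems = []
    for activity, assignments in matrix.items():
        for party, roles in assignments.items():
            if not roles or set(roles) - set("RACI"):
                problems.append(f"{activity}: invalid roles {roles!r} for {party}")
        accountable = [party for party, roles in assignments.items() if "A" in roles]
        if len(accountable) != 1:
            problems.append(
                f"{activity}: expected exactly one Accountable party, found {len(accountable)}"
            )
        if not any("R" in roles for roles in assignments.values()):
            problems.append(f"{activity}: no Responsible party")
    return problems


if __name__ == "__main__":
    issues = validate_raci(RACI)
    print(issues or "RACI matrix is consistent")
```

Running such a check whenever the matrix changes keeps ownership gaps from slipping in as the CoE grows.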

Development & deployment standards

The CoE creates standardized templates and toolchains for model development:

- Shared data platforms, notebooks, and ML pipelines (e.g., MLflow, Vertex AI, SageMaker)

- CI/CD and infrastructure-as-code for models (MLOps)

- Version control and reproducibility practices

- Model cards and documentation templates

Tooling & infrastructure stack

The CoE maintains a central tech stack to reduce fragmentation:

- Approved tools for each stage: labelling, modelling, deployment, monitoring

- Guidelines for cloud usage (e.g., Azure ML, AWS SageMaker, GCP Vertex AI)

- Shared APIs for internal model access

- Cost and usage monitoring dashboards

- Computing resources

- Cloud platforms

- Development tools

- Large-scale data processing tools

- Generative AI tools

- Documentation for all tools

Governance framework for AI, ML, and Generative AI CoE

Once the AI center of excellence is set up, it requires a governance framework to operate at the required standard. A cross-functional AI governance council composed of all team leads is responsible for governing the AI center of excellence for the enterprise.

Here is the governance framework broken into smaller frameworks for better understanding:

Use case evaluation framework

This is a structured intake and prioritization process that helps organizations decide which AI/ML/GenAI projects are worth pursuing.

Key components:

- A Value–Feasibility–Risk matrix is a 3-axis evaluation model used to assess and compare AI/ML/GenAI use cases across three criteria: value (business impact), technical feasibility, and risk. The team should use AI strategy maps and project scoring templates to systematically assess each of these axes.

- Stage gates are a formal review mechanism where use cases must pass through checkpoints before moving to the next phase in the AI lifecycle (Ideation → Validation → POC → Pilot → Production).

- Business alignment means that the use case must solve a real business problem and map to strategic goals. Each project should have a clear path to ROI.

- The ownership model means that every project should have a defined cross-functional leadership team consisting of at least a business sponsor, tech lead, and compliance reviewer.
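To make the Value–Feasibility–Risk matrix comparable across teams, each axis can be scored on a shared scale and combined into a single priority score. The Python sketch below assumes a 1–5 scale and illustrative weights of 0.5 (value), 0.3 (feasibility), and 0.2 (risk); risk is inverted so that lower-risk use cases rank higher. The weights, the scale, and the example use cases are assumptions each CoE would calibrate, and a high score does not replace the stage-gate or compliance review.

```python
from dataclasses import dataclass


@dataclass
class UseCase:
    name: str
    value: int        # business impact: 1 (low) to 5 (high)
    feasibility: int  # technical feasibility: 1 (hard) to 5 (easy)
    risk: int         # 1 (low risk) to 5 (high risk)


# Illustrative weights; they sum to 1 so the score stays on the 1-5 scale.
WEIGHTS = {"value": 0.5, "feasibility": 0.3, "risk": 0.2}


def score(uc: UseCase) -> float:
    """Weighted priority score; risk is inverted (6 - risk) so low risk scores high."""
    for axis in ("value", "feasibility", "risk"):
        if not 1 <= getattr(uc, axis) <= 5:
            raise ValueError(f"{uc.name}: {axis} must be between 1 and 5")
    return (
        WEIGHTS["value"] * uc.value
        + WEIGHTS["feasibility"] * uc.feasibility
        + WEIGHTS["risk"] * (6 - uc.risk)
    )


def prioritize(cases: list[UseCase]) -> list[tuple[str, float]]:
    """Return (name, score) pairs sorted from highest to lowest priority."""
    ranked = [(uc.name, round(score(uc), 2)) for uc in cases]
    return sorted(ranked, key=lambda pair: pair[1], reverse=True)


if __name__ == "__main__":
    backlog = [
        UseCase("Credit scoring model", value=5, feasibility=3, risk=5),
        UseCase("Invoice triage assistant", value=4, feasibility=4, risk=2),
    ]
    for name, s in prioritize(backlog):
        print(f"{name}: {s}")
```

With these inputs, the invoice triage assistant (4.0) ranks above the credit scoring model (3.6): the latter's higher value is offset by its high risk.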

Ethics & compliance framework

This is a policy and review structure to make sure that AI initiatives adhere to ethical principles, legal requirements, and the internal standards of the AI center of excellence.

Key elements:

- Responsible AI principles are fairness, transparency, accountability, inclusivity, privacy, and safety.

- Policies should align with global regulations (e.g., GDPR, HIPAA) and enterprise risk frameworks.

- The use of every model should be documented. One of the AI Center of Excellence's best practices is to use model cards and data sheets to document everything, including the intended use and the known limitations.

- For high-risk AI/GenAI projects, there should be approval from the ethics review board. You can also use risk-tiering frameworks and use case–specific ethical checklists to classify AI projects as low, medium, or high risk.
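A risk-tiering framework often comes down to a short intake checklist whose answers map to a tier, with each tier mapping to the reviews a project must pass. The Python sketch below shows that shape. The checklist questions, the tier rules, and the review lists are hypothetical placeholders; real criteria should come from the frameworks and regulations the CoE has adopted, such as NIST AI RMF, ISO 42001, or GDPR.

```python
from dataclasses import dataclass
from enum import Enum


class RiskTier(Enum):
    LOW = "low"
    MEDIUM = "medium"
    HIGH = "high"


@dataclass
class RiskChecklist:
    # Hypothetical checklist questions answered at intake.
    affects_individual_rights: bool  # e.g. hiring, credit, or medical decisions
    uses_personal_data: bool
    customer_facing_genai: bool
    fully_automated: bool            # no human in the loop before acting


def classify(answers: RiskChecklist) -> RiskTier:
    """Map checklist answers to a risk tier (illustrative rules only)."""
    if answers.affects_individual_rights or (
        answers.fully_automated and answers.uses_personal_data
    ):
        return RiskTier.HIGH
    if answers.uses_personal_data or answers.customer_facing_genai:
        return RiskTier.MEDIUM
    return RiskTier.LOW


# Hypothetical reviews required before a use case can pass its next stage gate.
REQUIRED_REVIEWS = {
    RiskTier.LOW: ["model card"],
    RiskTier.MEDIUM: ["model card", "data sheet", "compliance review"],
    RiskTier.HIGH: [
        "model card",
        "data sheet",
        "compliance review",
        "bias/fairness assessment",
        "ethics review board approval",
    ],
}


if __name__ == "__main__":
    chatbot = RiskChecklist(
        affects_individual_rights=False,
        uses_personal_data=False,
        customer_facing_genai=True,
        fully_automated=True,
    )
    tier = classify(chatbot)
    print(tier.value, REQUIRED_REVIEWS[tier])
```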

Risk management & MLOps / LLMOps controls

This outlines how the team manages risks at every stage of the AI lifecycle. The risks differ between the machine learning and generative AI domains, so some controls apply across both while others are specific to MLOps or LLMOps.

For general risk management across the domains, you should:

- Apply risk tiering & classification

- Perform compliance reviews at each lifecycle stage

- Analyze the impact of AI models

- Implement human-in-the-loop feedback

For MLOps across the SDLC of AI/ML projects:

- Drift detection

- Automated CI/CD controls

- Audit trails

- Access control
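Drift detection is commonly implemented by comparing the distribution of live model inputs or scores with a baseline captured at training time. One widely used measure is the Population Stability Index (PSI), sketched below in plain Python. The quantile binning, the 10 bins, and the 0.1 / 0.25 alert thresholds are common rules of thumb rather than a standard; the CoE would tune them per model.

```python
import math
from bisect import bisect_right


def psi(expected: list[float], actual: list[float], bins: int = 10, eps: float = 1e-6) -> float:
    """Population Stability Index of `actual` against the baseline `expected`.

    Bin edges are baseline quantiles; empty bins are floored at `eps`
    so the logarithm stays defined.
    """
    ordered = sorted(expected)
    edges = [ordered[len(ordered) * k // bins] for k in range(1, bins)]

    def proportions(sample: list[float]) -> list[float]:
        counts = [0] * bins
        for x in sample:
            counts[bisect_right(edges, x)] += 1
        return [max(c / len(sample), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ai, ei in zip(a, e))


def drift_status(value: float) -> str:
    """Map a PSI value to an alert level using rule-of-thumb thresholds."""
    if value < 0.1:
        return "stable"
    if value < 0.25:
        return "moderate drift"
    return "significant drift"


if __name__ == "__main__":
    baseline = [i / 1000 for i in range(1000)]    # e.g. scores at training time
    live = [0.5 + i / 2000 for i in range(1000)]  # scores shifted upwards
    print(drift_status(psi(baseline, baseline)))  # stable
    print(drift_status(psi(baseline, live)))      # significant drift
```

In a pipeline, a "significant drift" result would typically open an incident and trigger the retraining workflow.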

For LLMOps:

- Prompt governance

- Output monitoring with filters or classifiers

- Fine-tuning safeguards should restrict training on sensitive or unverified data.

- Rejection pipelines: problematic outputs should be reviewed by humans and, if necessary, blocklisted.
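Output monitoring and rejection pipelines can share one component that sits between the LLM and the user. In the sketch below, outputs matching a blocklist are withheld outright, outputs that a classifier scores above a threshold are withheld and queued for human review, and reviewers can add confirmed patterns to the blocklist. The `OutputGuard` class, the regex blocklist, the 0.8 threshold, and the classifier callable are hypothetical stand-ins for whatever moderation model or filtering service the CoE approves.

```python
import re
from dataclasses import dataclass, field
from typing import Callable


def no_op_classifier(text: str) -> float:
    """Placeholder risk classifier; a real deployment would call a moderation model."""
    return 0.0


@dataclass
class OutputGuard:
    blocklist: list[str] = field(default_factory=list)  # regex patterns
    classifier: Callable[[str], float] = no_op_classifier
    threshold: float = 0.8                              # assumed risk cut-off
    review_queue: list[str] = field(default_factory=list)

    def check(self, text: str) -> bool:
        """Return True if `text` may be shown to the user."""
        if any(re.search(p, text, re.IGNORECASE) for p in self.blocklist):
            return False                    # known-bad pattern: block outright
        if self.classifier(text) >= self.threshold:
            self.review_queue.append(text)  # suspicious: hold for a human reviewer
            return False
        return True

    def confirm_and_blocklist(self, pattern: str) -> None:
        """Called after human review confirms a problematic pattern."""
        self.blocklist.append(pattern)


if __name__ == "__main__":
    guard = OutputGuard(classifier=lambda t: 0.9 if "password" in t.lower() else 0.1)
    print(guard.check("Your summary is ready."))         # True
    print(guard.check("The admin password is hunter2"))  # False, queued for review
    guard.confirm_and_blocklist(r"admin password")
    print(guard.check("Admin password: hunter2"))        # False, blocked by pattern
    print(len(guard.review_queue))                       # 1
```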

Data governance & access control

AI systems must be trained on consistent, high-quality data.

Key features:

- All data has to be catalogued.

- RBAC or ABAC are applied for access governance of sensitive data.

- Domain teams should manage their data in accordance with CoE-defined standards. The best practice for this is federated stewardship, which is assigning enterprise data stewards to oversee data governance.

- Another best practice is creating a data landing zone with data classification and access controls already in place.
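The difference between RBAC and ABAC is easiest to see side by side. In the Python sketch below, the user's role determines the most sensitive data classification they may read (RBAC), and an attribute rule adds that confidential or restricted data stays within its owning business domain (ABAC). The classification levels, roles, and domain rule are hypothetical examples, not a recommended policy.

```python
from dataclasses import dataclass

# Hypothetical classification levels, from least to most sensitive.
LEVELS = ["public", "internal", "confidential", "restricted"]

# RBAC part: the most sensitive level each (hypothetical) role may read.
ROLE_CLEARANCE = {
    "analyst": "internal",
    "data_scientist": "confidential",
    "data_steward": "restricted",
}


@dataclass
class User:
    role: str
    department: str


@dataclass
class Dataset:
    name: str
    classification: str
    owning_domain: str


def can_read(user: User, dataset: Dataset) -> bool:
    """Combine an RBAC clearance check with an ABAC domain check."""
    clearance = ROLE_CLEARANCE.get(user.role)
    if clearance is None:
        return False  # unknown roles get no access by default
    level = LEVELS.index(dataset.classification)
    if level > LEVELS.index(clearance):
        return False  # RBAC: role is not cleared for this classification
    if level >= LEVELS.index("confidential"):
        # ABAC: sensitive data is only readable inside the owning domain
        return user.department == dataset.owning_domain
    return True


if __name__ == "__main__":
    claims = Dataset("claims_history", "confidential", "insurance")
    print(can_read(User("data_scientist", "insurance"), claims))  # True
    print(can_read(User("data_scientist", "marketing"), claims))  # False
    print(can_read(User("analyst", "insurance"), claims))         # False
```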


Model lifecycle management

This aspect focuses on managing the model from production to deprecation.

Core components:

- Model registry

- Performance monitoring

- Scheduled audits every 6–12 months or after a significant environmental change.

- End-of-life protocols that define when and how models are deprecated or retrained.

There are different ways to approach lifecycle monitoring. Azure Monitor can be used to monitor ML pipelines throughout the lifecycle. You can also embed model lifecycle controls into broader cloud governance disciplines (e.g., cost control, data residency, policy enforcement) so it is governed at the level of cloud strategy. Lifecycle gates can also be embedded in CI/CD pipelines.
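The model registry and the audit schedule can be combined so that overdue reviews surface automatically. The sketch below keeps registry records in memory and lists production models whose last audit is older than their audit interval, or whose environment has changed. The 180-day default (the low end of the 6–12 month range above), the record fields, and the `ModelRegistry` class are assumptions; in practice this logic would sit on top of the registry the CoE already uses, such as MLflow or a cloud ML platform.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class ModelRecord:
    name: str
    version: str
    stage: str                          # e.g. "production" or "deprecated"
    last_audit: date
    audit_interval_days: int = 180      # assumption: low end of the 6-12 month range
    environment_changed: bool = False   # set when data, regulation, or usage shifts


class ModelRegistry:
    """Minimal in-memory registry keyed by (name, version)."""

    def __init__(self) -> None:
        self._records: dict[tuple[str, str], ModelRecord] = {}

    def register(self, record: ModelRecord) -> None:
        key = (record.name, record.version)
        if key in self._records:
            raise ValueError(f"{record.name} v{record.version} is already registered")
        self._records[key] = record

    def audits_due(self, today: date) -> list[str]:
        """Production models whose audit is overdue or whose environment changed."""
        due = []
        for rec in self._records.values():
            if rec.stage != "production":
                continue
            overdue = today - rec.last_audit >= timedelta(days=rec.audit_interval_days)
            if overdue or rec.environment_changed:
                due.append(f"{rec.name} v{rec.version}")
        return sorted(due)


if __name__ == "__main__":
    registry = ModelRegistry()
    registry.register(ModelRecord("churn", "3", "production", date(2024, 1, 1)))
    registry.register(ModelRecord("churn", "2", "deprecated", date(2023, 1, 1)))
    registry.register(
        ModelRecord("fraud", "1", "production", date(2024, 5, 1), environment_changed=True)
    )
    print(registry.audits_due(date(2024, 7, 1)))  # ['churn v3', 'fraud v1']
```

The same check could run as a scheduled job or as a lifecycle gate in a CI/CD pipeline.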

Cross-functional governance council

A formal governing body responsible for enforcing policies and reviewing high-risk AI projects should consist of:

- Business leaders and sponsors

- AI/ML CoE leads

- Legal and compliance officers

- Cybersecurity and IT risk professionals

- DEI and ethics officers

- CIO/CTO representation

The responsibilities of the council include:

- Reviewing and approving sensitive or impactful use cases

- Monitoring risk KPIs and incident reports

- Enforcing responsible AI principles across domains

- Updating governance playbooks and risk thresholds

Capability building: Skills, training, talent strategy

The team's capabilities are the most important factor in the success of an AI Center of Excellence (CoE), so it is crucial to hire the right people for the job and train them properly.

Skills matrix for AI CoE

Core skill areas for AI CoE are:

- AI/ML Engineering

- Data Engineering

- MLOps/LLMOps

- GenAI Expertise

- Cloud & Infra

- AI Governance & Risk

- Business Domain Knowledge

- Full-Stack Software Development

- JavaScript and/or Python

- Prompt Engineering

- Analytical and problem-solving skills

- Willingness to learn and improve

- Effective communication with technical and non-technical stakeholders

- Effective leadership for leading roles

Upskilling strategy

The CoE must support continuous learning, particularly in fast-evolving areas like GenAI. Knowledge refresh cycles should ideally happen every 6–12 months.

Analysts, product managers, and engineers within the company should be given a pipeline for growth as part of the AI center of excellence.

- The upskilling strategy begins with a baseline assessment of current skill levels across departments, which shows where training needs to start.

- Tiered training tracks:

  - For technical teams: LLMs, vector databases, RAG, AutoML, etc.

  - For non-tech roles: AI literacy, ethical AI, business value of AI

- Role-based certifications: Encourage adoption of Microsoft Learn, Coursera, AWS Academy, or vendor-specific tracks

- Internal and external events: Monthly knowledge-sharing demos, AI Days, hackathons, and participation in industry forums.

Talent acquisition strategy

Attracting the right AI talent is key to CoE scalability and credibility.

Here is the recommended approach:

- First, build a core team with only critical roles. You do not need to hire everyone at the start of the project. Aside from the lead and sponsor, the first team can consist of an AI/ML architect, an MLOps/LLMOps engineer, a data steward, and a data engineer.

- Use hybrid staffing. Combine in-house experts, consulting partners, and contract specialists to manage costs.

- Prioritize the skill matrix over the formal requirements for the role. The roles can evolve depending on the project's needs, but core skills are essential.

Community of practice

A Community of Practice (CoP) helps democratize AI knowledge beyond the core CoE team. CoP enhances AI literacy and mitigates resistance to change within the organization.

Here is how a CoP can be structured within an AI CoE:

- Open membership: Include data-savvy employees from across departments. Their domain expertise helps build better use cases, and they can provide regulatory context.

- Knowledge hubs: Internal wiki, GenAI prompt library, best-practice templates

- Weekly or monthly syncs: Share lessons from use cases, failed pilots, experiments

- Ambassador programs: Identify AI champions in each business unit to bridge the CoE and local teams

- Innovation challenges: Run periodic AI use case ideation contests to surface hidden business pain points

Measurement framework for AI CoE success

The KPIs used to measure the success of an enterprise AI center of excellence can be grouped by the area they influence. Here are some key KPIs:

1. Strategic and business impact

- % of AI use cases aligned to business strategy: This is to ensure that AI use cases address real issues, rather than just produce pilots.

- Time-to-value per AI project

- Annual business value from AI (savings, revenue, efficiency)

- Use case success rate (POC → production): An outcome metric that measures the percentage of AI pilots that move from proof-of-concept to real, deployed production use. It compares the number of POCs completed with the number actually deployed to production.

- Use case adoption rate (percentage of deployed models actually used)
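The success rate and adoption rate are simple ratios that can be computed from a use case inventory. The sketch below assumes each inventory record notes whether its POC was completed, whether it reached production, and how many active users it had in the last 30 days; those fields and the one-user adoption threshold are hypothetical choices.

```python
from dataclasses import dataclass


@dataclass
class UseCaseRecord:
    name: str
    poc_completed: bool
    in_production: bool
    active_users_30d: int  # hypothetical usage signal


def success_rate(records: list[UseCaseRecord]) -> float:
    """Percentage of completed POCs that reached production."""
    pocs = [r for r in records if r.poc_completed]
    if not pocs:
        return 0.0
    return 100 * sum(r.in_production for r in pocs) / len(pocs)


def adoption_rate(records: list[UseCaseRecord], min_users: int = 1) -> float:
    """Percentage of deployed use cases with at least `min_users` active users."""
    deployed = [r for r in records if r.in_production]
    if not deployed:
        return 0.0
    return 100 * sum(r.active_users_30d >= min_users for r in deployed) / len(deployed)


if __name__ == "__main__":
    inventory = [
        UseCaseRecord("Demand forecast", True, True, 120),
        UseCaseRecord("Contract summarizer", True, True, 0),
        UseCaseRecord("Lead scoring", True, False, 0),
        UseCaseRecord("Churn alerts", True, False, 0),
    ]
    print(f"POC -> production: {success_rate(inventory):.0f}%")  # 50%
    print(f"Adoption: {adoption_rate(inventory):.0f}%")          # 50%
```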

2. Operational efficiency

These metrics help prevent overspending, among other things.

- Model deployment frequency: Shows how often new or updated models reach production, which indicates how quickly the CoE can deliver and improve models.

- Average project cycle time

- Reusability index (% of reused components): Counts how many AI components (pipelines, datasets, modules) are reused across projects vs how many are built from scratch. It shows whether the CoE is actually creating reusable building blocks that save time and reduce duplicated effort, or if it wastes resources instead.

- Infrastructure cost per use case

- Time saved via automation
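The reusability index can be computed directly from a project inventory. The sketch below assumes each project lists its components and flags whether each one came from the CoE's shared repository; the data layout and project names are hypothetical.

```python
def reusability_index(projects: dict[str, list[tuple[str, bool]]]) -> float:
    """Percentage of component usages across projects that reuse a shared asset.

    `projects` maps a project name to (component, reused) pairs, where
    reused=True means the component came from the shared CoE repository
    rather than being built from scratch.
    """
    usages = [reused for components in projects.values() for _, reused in components]
    if not usages:
        return 0.0
    return 100 * sum(usages) / len(usages)


if __name__ == "__main__":
    inventory = {
        "churn-prediction": [
            ("ingestion pipeline", True),
            ("feature store", True),
            ("custom model", False),
        ],
        "support-chatbot": [("ingestion pipeline", True), ("RAG retriever", False)],
    }
    print(f"Reusability index: {reusability_index(inventory):.0f}%")  # 60%
```

Tracked quarter over quarter, a rising index suggests the shared repository is actually replacing one-off builds.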

3. Capability building

- Percentage of CoE staff certified

- Number of upskilling hours per quarter

4. Model performance & risk

- Model accuracy/performance vs. baseline

- Model drift incidents detected

- Mean time to retrain after drift

- Percentage of models with complete documentation

- Percentage of AI initiatives that fail before POC or production
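Mean time to retrain after drift is an average over incident timestamps. The sketch below assumes each drift incident records when drift was detected and when the retrained model was redeployed, and it leaves open incidents out of the average; the incident format is hypothetical.

```python
from datetime import datetime
from statistics import mean
from typing import Optional


def mean_time_to_retrain_hours(
    incidents: list[tuple[datetime, Optional[datetime]]],
) -> float:
    """Average hours from drift detection to redeployment of the retrained model.

    Each incident is a (detected_at, redeployed_at) pair; incidents that are
    still open (redeployed_at is None) are excluded. Returns 0.0 when no
    incident has been resolved yet.
    """
    durations = [
        (redeployed - detected).total_seconds() / 3600
        for detected, redeployed in incidents
        if redeployed is not None
    ]
    return mean(durations) if durations else 0.0


if __name__ == "__main__":
    log = [
        (datetime(2024, 3, 1, 9), datetime(2024, 3, 2, 9)),    # 24 hours
        (datetime(2024, 3, 10, 8), datetime(2024, 3, 10, 20)),  # 12 hours
        (datetime(2024, 3, 15, 14), None),                      # still open
    ]
    print(mean_time_to_retrain_hours(log))  # 18.0
```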

5. AI adoption

- Number of business units engaged with CoE

- Percentage of decisions supported by AI insights

How Binariks helps enterprises build an AI CoE

Organizations often require the assistance of AI development companies to build an AI Center of Excellence (CoE), because it entails transforming the company's entire operating model rather than being just another technical project that can easily be handled internally.

A high-level consulting and implementation team, such as Binariks, assists in building an AI center of excellence from scratch and then hands it back to the company once all the processes are running as expected, and the internal team is complete and competent.

Building an AI center of excellence is part of the broader scope of Binariks' AI & ML development services. Unlike other AI consulting companies, we combine consulting and implementation when building a CoE, which reduces the "build vs buy" burden for clients. Here is what we can do with your AI CoE:

Strategic consulting & CoE design

- Defining the vision and scope of your AI CoE, as well as the AI/ML/Gen-AI capabilities to include.

- Full business analysis to check AI solution readiness

- Use case evaluation

- Governance framework design

Building core infrastructure & tools, including:

- Data infrastructure

- AI & ML development

- MLOps (or LLMOps) pipelines

- Reusable AI/ML/LLM components

- Scalable architecture

- Data preparation and engineering foundations

- ML/AI solution quality assurance processes

Hands‑on AI/ML/Gen‑AI development & delivery

- Proof-of-concept and pilot development

- RAG/LLM application design and deployment

- Model integration, testing, and evaluation

- Knowledge transfer and documentation for your internal teams

- ML modeling, training, and parameter tuning

- Integration of ML/AI outputs into existing systems

Organizational capability building & knowledge transfer

- Training programs for key roles in the team

- Building a library of best practices and standards

- Operational procedures for governance, security, and model lifecycle

- Shadowing and gradual ownership transfer

Accelerating time-to-value & reducing risk

- Fast CoE setup using proven architectures and reusable components

- Avoiding trial-and-error through expert guidance

- Early governance and security guardrails to prevent compliance issues

- Clear ROI measurement and prioritization frameworks

Conclusion

The AI center of excellence is a strategic advancement that helps companies get ahead of the artificial intelligence and machine learning trends. Building an operating model for an AI center of excellence with a robust governance framework helps maximize the benefits of the AI CoE. The most critical component, however, is building a capable team.

Once your organization recognizes the growing impact of AI and the need for a structured, scalable approach, the next logical step is to understand where you stand today.

Organizations today need an AI CoE because AI has become a core business capability. This is especially true for those who rely on data-driven decisions, have customer-facing platforms, and deal with automation and digital products.

Ready to assess your AI maturity and build a clear development plan? Run an AI-readiness audit and roadmap session with Binariks, and get a fully defined trajectory for launching or scaling your AI Center of Excellence.
