As the AI revolution reshapes technology and business, traditional task-specific AI models are giving way to intelligent, flexible software that approximates human abilities. This shift is what agent architecture in AI is all about.

In this article, we reveal the process of building an AI agent architecture from scratch for the banking, insurance, and healthcare industries.


Why single-purpose automation falls short

Traditional automation methods such as RPA bots, rule-based systems, and custom scripts were designed for repetitive, predictable tasks, but each comes with inherent limitations:

- RPA bots mimic human actions on screen, such as clicking buttons and entering data, but they rely heavily on fixed UI structures and break easily when layouts change.

- Rule-based systems follow predefined logic trees or "if-this-then-that" rules. They work well for simple decision-making, but can't handle ambiguity or exceptions that are not explicitly programmed. For example, a customer support bot built on rules will fail to recognize a request if the phrasing or keywords don't match its predefined triggers. The customer will be stuck in the loop of trying to explain what they mean and failing.

- Custom scripts are hand-coded programs written for specific automation needs, such as data extraction or integration, but they require technical upkeep and lack flexibility as business logic evolves.
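To make the rule-based limitation concrete, here is a minimal sketch of a keyword-triggered support bot. The rule table and the `route_request` function are hypothetical and exist only for illustration; the point is that a request with the same intent but different wording falls through to the fallback.

```python
# Hypothetical keyword rules for a support bot (illustrative only).
RULES = {
    "reset password": "send_password_reset_link",
    "cancel subscription": "start_cancellation_flow",
}


def route_request(message: str) -> str:
    """Return the action of the first rule whose trigger appears in the message."""
    text = message.lower()
    for trigger, action in RULES.items():
        if trigger in text:
            return action
    # No trigger matched, so the bot cannot interpret the request.
    return "fallback_ask_to_rephrase"


# The first request contains a trigger phrase; the second has a similar
# intent but none of the predefined keywords, so the rule table misses it.
print(route_request("I need to reset password"))
print(route_request("I can't log in to my account"))
```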

Together, these methods offer short-term relief but quickly become costly in dynamic enterprise environments: they do not scale or adapt, they are fragmented, and they are expensive to maintain and modify.

Without an AI agent architecture, a customer support RPA bot can easily send a follow-up, unaware that the issue was already resolved in a parallel workflow. Likewise, a company with dozens of custom scripts linked to CRM records may face a week-long manual update after a minor field name change across the system.

What is an AI agent architecture?

AI agent architecture refers to the structural design and interaction model that defines how an AI agent perceives its environment, makes decisions, and acts on them. It describes what components the agent consists of, how these components interact, and how multiple agents can collaborate to solve complex tasks. In short, agent architecture in AI is a blueprint for building agents with human-like capabilities. An AI agent is an intelligent system that performs tasks on behalf of a person or another system, either autonomously or semi-autonomously. It is usually built on one or more large language models (LLMs) or foundation models (FMs).

At its core, an AI agent is an autonomous entity characterized by:

- Perception (receiving input from the environment)

- Reasoning or planning (making decisions)

- Acting (executing tasks or commands)

- Autonomy (operating, to a certain degree, independently without constant instructions)

- Adaptability (adjusting behavior in response to new information)

- Goal‑oriented behavior (aligning actions with specific short‑ and long‑term objectives)

- Sometimes, continuous learning (refining strategies and improving over time through interaction and feedback)
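The perceive, decide, and act capabilities can be pictured as a simple loop. The sketch below is a toy illustration, not a production design: the `TicketAgent` class, its method names, and its decision rules are all assumptions, and in a real agent the `decide` step would typically call an LLM or a planner instead of hard-coded conditions.

```python
from dataclasses import dataclass, field


@dataclass
class TicketAgent:
    """Toy agent (hypothetical) that handles support tickets toward one goal."""
    goal: str = "resolve_ticket"
    memory: list = field(default_factory=list)  # past observations

    def perceive(self, raw: str) -> dict:
        # Perception: turn raw input into a structured observation.
        obs = {"text": raw.strip().lower(), "urgent": "urgent" in raw.lower()}
        self.memory.append(obs)
        return obs

    def decide(self, obs: dict) -> str:
        # Reasoning: placeholder logic standing in for an LLM or planner.
        if obs["urgent"]:
            return "escalate_to_human"
        if len(self.memory) > 1 and self.memory[-2]["text"] == obs["text"]:
            return "skip_duplicate"  # adapts based on what it saw before
        return "draft_reply"

    def act(self, action: str) -> str:
        # Acting: a real agent would call a tool or API here.
        return f"{self.goal}:{action}"

    def step(self, raw: str) -> str:
        return self.act(self.decide(self.perceive(raw)))


agent = TicketAgent()
for ticket in ("URGENT: payment failed", "Where is my invoice?", "Where is my invoice?"):
    print(agent.step(ticket))
```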

For most enterprise workflow automation, a single agent is not enough. When AI agents operate together, they form multi-agent systems capable of agent-to-agent interactions.

In multi-agent systems, multiple agents can:

- Divide tasks. For example, one agent plans the workflow while another executes it.

- Pass intermediate results through shared memory or direct messages.

- Negotiate or coordinate to prevent duplication or conflicts.

- Work sequentially, concurrently, or in specialized roles, such as a retriever agent that gathers data and a summarizer agent that condenses it.
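As a rough illustration of specialized roles and shared memory, the sketch below wires a retriever agent and a summarizer agent together through a plain dictionary. The document contents, function names, and the keyword matching are assumptions made for the example; a real system would more likely use an LLM and a vector store.

```python
# Hypothetical in-memory documents standing in for a document store.
DOCUMENTS = {
    "policy.txt": "Coverage includes water damage. Flood damage is excluded. "
                  "Claims must be filed within 30 days.",
    "claim.txt": "Customer reports water damage in the kitchen. "
                 "Claim filed after 12 days.",
}


def retriever_agent(query: str, shared: dict) -> None:
    """Store every sentence that mentions the query in shared memory."""
    hits = []
    for name, text in DOCUMENTS.items():
        for sentence in text.split(". "):
            if query.lower() in sentence.lower():
                hits.append((name, sentence.rstrip(".")))
    shared["retrieved"] = hits


def summarizer_agent(shared: dict) -> None:
    """Condense the retrieved sentences into one line with source citations."""
    lines = [f"{sentence} [{name}]" for name, sentence in shared["retrieved"]]
    shared["summary"] = "; ".join(lines)


shared_memory = {}
retriever_agent("water damage", shared_memory)  # first agent writes results
summarizer_agent(shared_memory)                 # second agent reads them
print(shared_memory["summary"])
```

Neither agent calls the other directly; the intermediate result is passed only through `shared_memory`, which is one of the handoff options listed above.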

Agent-based systems are often compared to a standard pipeline approach. A pipeline is a fixed, linear sequence of steps where each stage processes input from the previous one and passes it forward. It works well for stable, repetitive workflows, but cannot adapt when conditions change.

Agent-based systems are more dynamic and flexible: agents can make decisions mid-process, adjust the flow, and coordinate with others in real time.

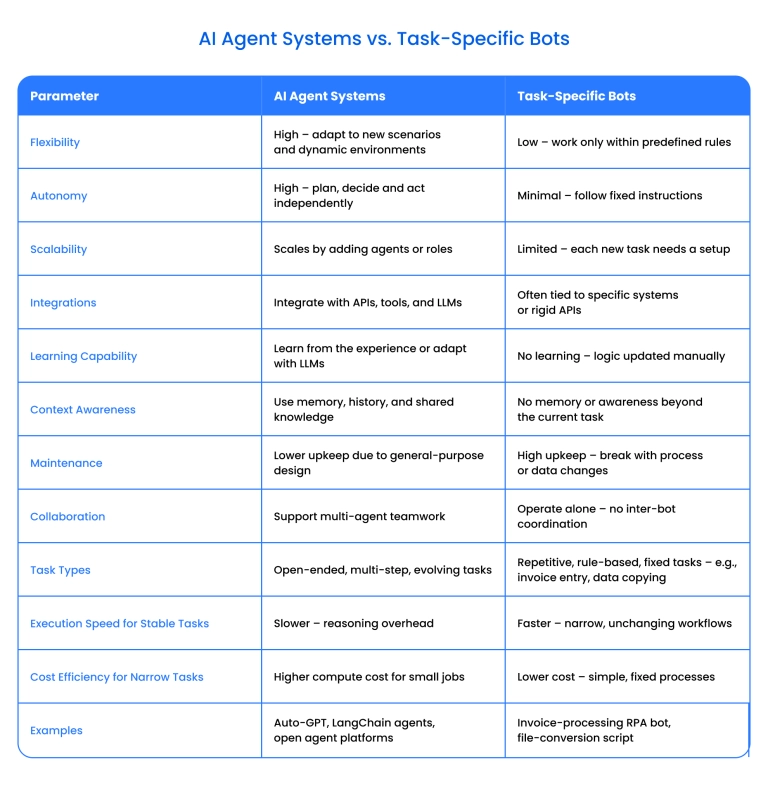

AI agent systems vs. task-specific bots

Architecture components of enterprise AI agent systems

An enterprise's AI agent architecture comprises components that work together to enable intelligent behavior. Here's a basic structure of agent-based architecture:

1. Agent layer

What it does: Hosts autonomous agents that perceive, reason, and act.

Key features:

- Goal‑oriented behavior (e.g., retrieve data, resolve tickets)

- Memory and context handling

- Dynamic decision‑making via rules, LLMs, or planning algorithms

- Task‑specific or general‑purpose roles

2. Perception module

What it does: Acts as the agent's sensory system to gather and interpret data.

Key features:

- Collects input from APIs, files, sensors, or user interaction

- Extracts features and recognizes entities via NLP, CV, or ML

- Prepares data for reasoning and action

3. Orchestration layer

What it does: Coordinates interactions among agents, tasks, and tools.

Key features:

- Agent‑to‑agent communication and coordination

- Task delegation and dependency resolution

- Multi‑agent planning and fallback logic
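One way to picture task delegation with dependency resolution is a topological sort over a task graph. The sketch below uses Python's standard `graphlib` module (Python 3.9+); the task names and the task-to-agent assignment are hypothetical.

```python
from graphlib import TopologicalSorter

# Hypothetical task graph: each task maps to the tasks it depends on.
TASKS = {
    "extract_fields": set(),
    "check_policy": {"extract_fields"},
    "score_risk": {"extract_fields"},
    "write_summary": {"check_policy", "score_risk"},
}

# Hypothetical assignment of tasks to specialized agents.
ASSIGNEE = {
    "extract_fields": "retriever_agent",
    "check_policy": "compliance_agent",
    "score_risk": "risk_agent",
    "write_summary": "summarizer_agent",
}


def plan_execution(tasks: dict) -> list:
    """Return (task, agent) pairs in an order that respects all dependencies."""
    order = TopologicalSorter(tasks).static_order()
    return [(task, ASSIGNEE[task]) for task in order]


for task, agent in plan_execution(TASKS):
    print(f"{agent} -> {task}")
```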

4. Memory & knowledge layer

What it does: Stores and retrieves knowledge for context and continuity.

Key features:

- Short‑term and long‑term memory

- Static or dynamic knowledge bases (vector DBs, graphs, docs)

- Integration with enterprise search and semantic retrieval
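The split between short-term and long-term memory can be sketched with standard-library types. This is a simplified illustration under stated assumptions: the `AgentMemory` class is hypothetical, and the word-overlap scoring is only a stand-in for the semantic retrieval a vector database would provide.

```python
from collections import deque


class AgentMemory:
    """Hypothetical memory layer: bounded recent context plus persistent facts."""

    def __init__(self, short_term_size: int = 3):
        # Short-term memory keeps only the most recent items.
        self.short_term = deque(maxlen=short_term_size)
        # Long-term memory persists facts across interactions.
        self.long_term = []

    def remember(self, text: str, persist: bool = False) -> None:
        self.short_term.append(text)
        if persist:
            self.long_term.append(text)

    def recall(self, query: str, top_k: int = 1) -> list:
        # Word overlap is a crude stand-in for semantic similarity.
        query_words = set(query.lower().split())
        ranked = sorted(
            self.long_term,
            key=lambda fact: len(query_words & set(fact.lower().split())),
            reverse=True,
        )
        return ranked[:top_k]


memory = AgentMemory(short_term_size=2)
memory.remember("Customer policy renewal is due in March", persist=True)
memory.remember("Customer prefers email contact", persist=True)
memory.remember("User said hello")
print(list(memory.short_term))
print(memory.recall("policy renewal date"))
```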

5. Cognitive/planning & decision‑making engine

What it does: Determines the agent's next move.

Key features:

- Goal representation and prioritization

- Strategy generation and plan creation

- Decision‑making using logic, heuristics, or LLM reasoning

6. Tools & skills layer

What it does: Gives agents the capabilities to interact with systems.

Key features:

- Connectors to APIs, CRMs, ERPs, and databases

- Agent‑accessible functions (send email, query SQL, create tickets)

- Tool registry or catalog for easy expansion
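A tool registry can be as simple as a dictionary that maps tool names to callables. The sketch below is one possible shape, assuming a hypothetical `tool` decorator and two stub tools; real connectors would call an actual CRM, mail, or ticketing API.

```python
from typing import Callable, Dict

# Registry mapping tool names to the functions agents are allowed to call.
TOOL_REGISTRY: Dict[str, Callable[..., str]] = {}


def tool(name: str):
    """Decorator (hypothetical) that registers a function as an agent tool."""
    def decorator(fn: Callable[..., str]) -> Callable[..., str]:
        TOOL_REGISTRY[name] = fn
        return fn
    return decorator


@tool("create_ticket")
def create_ticket(title: str) -> str:
    # Stub: a real implementation would call the ticketing system.
    return f"ticket created: {title}"


@tool("send_email")
def send_email(to: str, subject: str) -> str:
    # Stub: a real implementation would hand off to a mail service.
    return f"email queued for {to}: {subject}"


def call_tool(name: str, **kwargs) -> str:
    """Look up a tool by name and invoke it; unknown tools are rejected."""
    if name not in TOOL_REGISTRY:
        raise KeyError(f"unknown tool: {name}")
    return TOOL_REGISTRY[name](**kwargs)


print(sorted(TOOL_REGISTRY))
print(call_tool("create_ticket", title="Invoice mismatch"))
```

Adding a capability then means registering one more function, which is the "easy expansion" property the registry is meant to provide.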

7. Action/execution module

What it does: Carries out chosen actions in the environment.

Key features:

- API calls, database queries, file updates, and content generation

- Physical or virtual actuators, depending on the use case

8. Interface layer

What it does: Connects agents with users and systems.

Key features:

- Multimodal I/O (text, voice, forms, dashboards)

- Integration with chat platforms and apps

- Feedback loop between users and agents

9. Communication/collaboration interface (multi‑agent)

What it does: Enables agents to work together toward shared goals.

Key features:

- Information sharing and task delegation

- Parallel or sequential workflows

- Conflict prevention and resolution mechanisms

10. Policy & governance layer

What it does: Ensures secure, compliant, responsible AI behavior.

Key features:

- Access controls, audit logs, and role‑based constraints

- Bias, safety, and hallucination mitigation

- SLA compliance and monitoring

11. Infrastructure & runtime layer

What it does: Provides computing and hosting for agents.

Key features:

- LLM hosting or API access

- Microservices orchestration (K8s, serverless)

- Scaling, fault tolerance, observability

Optional: Agent development & configuration toolkit

What it does: Supports building, testing, and refining agents.

Key features:

- No‑code/low‑code workflow builders

- Prompt templates and tuning tools

- Version control and test environments

From prototype to production: AI agent workflow

To build enterprise-ready AI agents, it's essential to map out how both automation and human oversight work together across the system's lifecycle.

Here is the end-to-end workflow of an AI agent-based architecture, including human-in-the-loop (HITL) checkpoints where people are actively involved in the agent lifecycle.

1. Data collection & preprocessing

Goal: Gather and prepare the raw data that the agent will rely on.

Activities:

- Collect data from physical environments (images, audio, sensor feeds) and digital sources (structured databases, unstructured text, knowledge graphs).

- Clean and normalize the data, reduce noise, and prepare it for analysis using feature engineering or retrieval-augmented generation (RAG) techniques.

Human-in-the-loop:

Data engineers and domain experts ensure data quality, remove bias sources, and confirm that the dataset aligns with project goals.

2. Perception & feature extraction

Goal: Enable the agent to interpret and make sense of its environment.

Activities:

- Apply computer vision to extract features from images (edges, shapes, textures).

- Use NLP techniques to parse text and speech, extracting entities, keywords, or sentiment.

- Feed extracted features into the reasoning layer.

Human-in-the-loop:

Experts validate that feature extraction aligns with domain needs (e.g., detecting the right fields in an insurance form or the correct intent in a customer request).

3. Problem framing & use case selection

Goal: Identify the right problem where an AI agent can add value.

Activities:

- Define business objectives.

- Select tasks with repetitive, dynamic, or decision-heavy traits.

- Evaluate risks, constraints, and success metrics.

Human-in-the-loop:

Business stakeholders and domain experts define success, clarify edge cases, and prevent misaligned automation.

4. Prototype design & agent planning

Goal: Build a minimal viable agent (MVA) for experimentation.

Activities:

- Design task flows and define agent roles.

- Set up a basic LLM prompt chain or planner-reactor loop.

- Connect one or two tools (e.g., search, API call, summarization).

- Define the agent's objectives.

- Use graph search algorithms (such as A* or Dijkstra) or LLM-based planners to outline paths toward goals.

Human-in-the-loop:

AI engineers and prompt designers set boundaries and refine prompts or tool calls.
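As an illustration of algorithmic planning, the sketch below applies Dijkstra's algorithm to a small workflow graph to find the cheapest path from a start state to a goal state. The states and the costs (imagined here as minutes of handling time) are invented for the example.

```python
import heapq

# Hypothetical workflow graph: state -> list of (next_state, cost).
GRAPH = {
    "claim_received": [("ocr_scan", 2), ("manual_entry", 15)],
    "ocr_scan": [("auto_review", 3), ("manual_review", 20)],
    "manual_entry": [("auto_review", 3)],
    "auto_review": [("claim_decided", 1)],
    "manual_review": [("claim_decided", 5)],
    "claim_decided": [],
}


def cheapest_plan(graph: dict, start: str, goal: str):
    """Dijkstra's algorithm: return (total_cost, path) or None if unreachable."""
    queue = [(0, start, [start])]  # priority queue ordered by cost so far
    visited = set()
    while queue:
        cost, node, path = heapq.heappop(queue)
        if node == goal:
            return cost, path
        if node in visited:
            continue
        visited.add(node)
        for nxt, step_cost in graph[node]:
            if nxt not in visited:
                heapq.heappush(queue, (cost + step_cost, nxt, path + [nxt]))
    return None


print(cheapest_plan(GRAPH, "claim_received", "claim_decided"))
# (6, ['claim_received', 'ocr_scan', 'auto_review', 'claim_decided'])
```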

5. Feedback loop & evaluation

Goal: Test the prototype with real or synthetic data.

Activities:

- Run simulations or shadow-mode tasks.

- Collect performance data and identify failure cases.

- Evaluate agent reliability: reasoning quality and response diversity.

Human-in-the-loop:

Analysts and SMEs review results, annotate errors, and restrict the behaviors that cause them.
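A shadow-mode evaluation can be reduced to comparing what the agent would have done with what a human actually did. The sketch below assumes a hypothetical record format with `id`, `human`, and `agent` fields; disagreements become the queue that reviewers annotate.

```python
def shadow_report(cases: list) -> dict:
    """Compare agent decisions with human decisions on the same cases."""
    mismatches = [c["id"] for c in cases if c["human"] != c["agent"]]
    agreement = 1 - len(mismatches) / len(cases)
    return {"agreement": agreement, "review_queue": mismatches}


# Hypothetical shadow-mode log: the agent's output is recorded, not executed.
cases = [
    {"id": "T-1", "human": "approve", "agent": "approve"},
    {"id": "T-2", "human": "reject", "agent": "approve"},
    {"id": "T-3", "human": "approve", "agent": "approve"},
    {"id": "T-4", "human": "escalate", "agent": "escalate"},
]

print(shadow_report(cases))
# {'agreement': 0.75, 'review_queue': ['T-2']}
```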

6. Refinement, decision-making & tool expansion

Goal: Improve reasoning, memory, and capabilities.

Activities:

- Add tools, memory modules, and fallback logic.

- Tune prompts or implement structured reasoning patterns.

- Introduce long-term memory or RAG pipelines.

- Use strategies like utility theory or reinforcement learning to select optimal actions based on goals and context.

Human-in-the-loop:

Business analysts and senior AI engineers validate high-stakes outputs and confirm correct tool usage.
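Utility-based action selection can be sketched as picking the action with the highest expected utility, where each action has a set of possible outcomes with probabilities. The actions, probabilities, and utility values below are made up for illustration.

```python
# Hypothetical actions, each with (probability, utility) outcomes.
ACTIONS = {
    # Usually fine, but a wrong approval is very costly.
    "auto_approve": [(0.9, 10), (0.1, -100)],
    "request_documents": [(1.0, 4)],
    "escalate_to_human": [(1.0, 2)],
}


def expected_utility(outcomes: list) -> float:
    """Sum of probability-weighted utilities for one action."""
    return sum(p * u for p, u in outcomes)


def best_action(actions: dict) -> str:
    """Choose the action that maximizes expected utility."""
    return max(actions, key=lambda name: expected_utility(actions[name]))


for name, outcomes in ACTIONS.items():
    print(name, round(expected_utility(outcomes), 2))
print("chosen:", best_action(ACTIONS))
```

With these numbers, the rare but expensive failure makes `auto_approve` the worst choice even though it succeeds most of the time, which is the kind of trade-off a utility model is meant to surface.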

7. Governance, safety & compliance checks

Goal: Prepare for secure, compliant deployment.

Activities:

- Implement access controls, audit logging, and content filtering.

- Align with GDPR, SOC 2, HIPAA, or other regulations.

Human-in-the-loop:

Compliance officers review workflows and enforce ethical standards.

8. Pilot deployment & action execution

Goal: Test in a controlled, real-world environment.

Activities:

- Deploy to a small user group or limited scope.

- Monitor performance, user interactions, and failures.

- Carry out chosen actions. In digital environments, this could be sending messages, making transactions, or generating content; in physical systems, controlling actuators.

Human-in-the-loop:

Users provide real-time feedback and escalate unclear cases. Monitoring teams intervene in ambiguous or high-risk actions.

9. Full-scale production, learning & continuous improvement

Goal: Expand usage and enable ongoing adaptation.

Activities:

- Roll out to full enterprise or across domains.

- Monitor system health, retrain or fine-tune models as needed.

- Implement reinforcement, supervised, or unsupervised learning so the agent improves from experience.

Human-in-the-loop:

Trainers and SMEs provide labeled feedback, evaluate performance, and guide retraining cycles.

Use cases across industries

Agent architecture types manifest differently depending on the industry. This is an in-depth look into how they work for insurance, banking and finance, and healthcare:

Insurance (document intelligence agents)

These AI agents process large volumes of claims and risk assessments. They use OCR and retrieval‑augmented generation to extract key data points and generate citation‑backed insights for auditors and adjusters.

Multi‑agent collaboration example:

- Retriever agent – Locates and extracts relevant sections from claim documents and policy files.

- Summarizer agent – Condenses retrieved content into structured, human‑readable risk summaries.

- Compliance agent – Checks summaries against regulatory rules and flags non‑compliant findings.

Value: Cut document handling time, automate most claims processing, and improve customer satisfaction.


Banking & finance (compliance & risk agents)

These agents verify KYC documents, monitor high‑value transactions, and detect suspicious activity patterns. They also scan contracts and financial reports for adherence to regulatory standards.

Multi‑agent collaboration example:

- Verification agent – Authenticates customer identity documents and matches them to account records.

- Monitoring agent – Tracks real‑time transactions, detecting anomalies and flagging potential fraud.

- Regulation agent – Reviews contracts and reports to ensure they meet compliance guidelines before approval.

Value: Reduce manual compliance checks, lower fraud exposure, and shorten approval cycles.
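To give a feel for what a monitoring agent's anomaly check might look like, the sketch below flags transactions whose amount deviates strongly from an account's history using a z-score. This is a deliberately simple assumption for illustration; the function name, threshold, and sample amounts are hypothetical, and real fraud detection relies on far richer features and models.

```python
from statistics import mean, stdev


def flag_anomalies(history: list, new_amounts: list, threshold: float = 3.0) -> list:
    """Return new amounts more than `threshold` standard deviations from the mean."""
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return []  # no variation in history, so z-scores are undefined
    return [a for a in new_amounts if abs(a - mu) / sigma > threshold]


# Hypothetical past transaction amounts for one account.
history = [120, 95, 130, 110, 105, 125, 115]
incoming = [118, 900, 101]

print(flag_anomalies(history, incoming))
# [900]
```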


Healthcare (care coordination agents)

These agents automate appointment scheduling, send follow‑up reminders, and prepare structured visit notes or discharge summaries. They can access EHR data to personalize interactions and ensure communication continuity.

Multi‑agent collaboration example:

- Scheduling agent – Books and adjusts patient appointments based on availability and urgency.

- Follow‑up agent – Sends reminders, follow‑up questionnaires, and wellness check prompts.

- Documentation agent – Structures visit notes into standardized medical records and updates the EHR.

Value: Reduce documentation time by 30-40% and save up to 15,000+ staff hours per month, while reducing billing staff overtime by at least a third and cutting claim denials.


Mini case: Agent system for document-heavy workflow

AI agent architectures are especially well suited to document-heavy workflows because they can process unstructured data at scale and act on the key information it contains. Here are two cases of effective AI agent architecture: an AI agent for insurance document analysis and an agentic AI for hospital workflow. Both were delivered in collaboration with Binariks as a dedicated IT partner.

In the first case, a global insurer (50,000+ employees, $45–50B revenue) faced mounting inefficiencies in reviewing data, mostly unstructured PDFs and handwritten forms stored in SharePoint. The challenge was that the manual document review slowed decision-making and increased risk in a highly regulated insurance environment.

The goal was to:

- Automate the extraction of structured data from both scanned and digital documents.

- Identify risks and potential losses without human intervention.

- Provide audit-ready insights backed by citations and traceable logic.

Binariks built an AI agent architecture using:

- Azure OCR for document digitization.

- LangChain + LangGraph for agent coordination.

- OpenAI APIs for reasoning and content extraction.

Agents extracted structured data, flagged risk indicators, and provided citation-backed insights. Reflection agents also compared reasoning paths.

The achieved results were:

- Drastically reduced manual review time.

- Increased consistency and trust in risk evaluation.

- Created a scalable foundation for future AI use cases like fraud detection and compliance automation.

For a healthcare company, we automated scheduled conversations to unburden medical staff. The client, a healthcare platform provider supporting mid-sized hospitals, needed to reduce the repetitive workload of nurses and administrative staff. High volumes of unstructured communications were causing routine follow-ups to be delayed or even missed.

For this reason, the client wanted to automatically initiate and manage check-ins based on scheduled events and to capture structured records from those interactions. Everything also had to integrate seamlessly with existing hospital infrastructure and workflows.

Binariks delivered a conversational agent MVP using:

- GPT-3.5, LangChain, and a FastAPI backend.

- APScheduler to trigger workflows from a PostgreSQL database.

- Predefined prompt templates with no training data.

The resulting AI agent architecture achieved:

- 30% time savings on documentation.

- 2× increase in scheduled task compliance.

- Freed up medical staff to focus more on direct patient care.

Key challenges and best practices

Agent architectures come with a set of common issues. While working on enterprise workflow automation, Binariks has developed best practices for resolving them. Here is what to do about each specific problem:

1. Hallucinations and unreliable reasoning

Challenge:

AI agents powered by LLMs may produce plausible-sounding but incorrect or harmful responses, especially in open-ended tasks or with poorly scoped prompts.

Best practices:

- Sandbox testing: Run agents in a non-production environment with synthetic or anonymized data.

- Shadow mode: Deploy alongside humans to compare actions before going live.

- Tool invocation validation: Require confirmation before executing irreversible actions (e.g., sending emails, deleting data).

- Guardrail prompts: Add constraints and clarify expectations in system messages and tool wrappers.
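Tool invocation validation can be sketched as a gate that holds irreversible actions until someone approves them. The action names, the `execute` function, and the returned status values below are assumptions for illustration.

```python
# Hypothetical set of actions that cannot be undone once executed.
IRREVERSIBLE = {"send_email", "delete_record"}


def execute(action: str, payload: dict, approved_by: str = None) -> dict:
    """Run an action, but park irreversible ones until a human approves."""
    if action in IRREVERSIBLE and approved_by is None:
        return {"status": "pending_approval", "action": action, "payload": payload}
    # A real system would dispatch to the actual tool here.
    return {"status": "executed", "action": action, "approved_by": approved_by}


print(execute("lookup_order", {"order_id": "A-17"}))
print(execute("delete_record", {"record_id": 42}))
print(execute("delete_record", {"record_id": 42}, approved_by="ops.lead"))
```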

2. Lack of version control and traceability

Challenge:

Without versioning, it's hard to trace which changes caused certain behaviors or roll back a broken configuration.

Best practices:

- Prompt versioning: Treat prompts and workflows like code.

- GitOps / AgentOps: Use repositories and CI/CD for configuration and tool setup.

- Metadata logging: Record prompt versions, tool calls, and outputs for every run.
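Prompt versioning and metadata logging can start small: derive a version identifier from the prompt text and attach it to every run record. The sketch below is one possible approach using a content hash; the field names and the `log_run` helper are hypothetical, and in practice the record would go to a log store instead of being printed.

```python
import hashlib
import json
from datetime import datetime, timezone


def prompt_version(prompt: str) -> str:
    """Derive a short, stable identifier from the prompt text itself."""
    return hashlib.sha256(prompt.encode("utf-8")).hexdigest()[:12]


def log_run(prompt: str, tool_calls: list, output: str) -> str:
    """Build one JSON log record for a single agent run."""
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "prompt_version": prompt_version(prompt),
        "tool_calls": tool_calls,
        "output": output,
    }
    return json.dumps(record, sort_keys=True)


SYSTEM_PROMPT = "You are a claims assistant. Cite the source of every finding."
print(log_run(SYSTEM_PROMPT, [{"tool": "search_policy", "args": {"q": "flood"}}], "Flood excluded"))
```

Because the identifier is computed from the content, any edit to the prompt produces a new version automatically, so a behavior change can be traced back to the exact prompt that was in use.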

3. Poor observability and debugging

Challenge:

It's often unclear why agents fail, especially with multi-step reasoning or external tools involved.

Best practices:

- Use full trace logs

- Structured output: Use JSON or chain-of-thought formats for auditability.

- Use real-time dashboards

4. Tool errors and misuse

Challenge:

Agents may misuse tools: sending incorrect parameters or calling incompatible APIs/scripts.

Best practices:

- Tool contracts: Define clear input/output schemas and error handling.

- Explicit action intent: Have the agent declare goals, check parameters, and confirm tool compatibility before execution.

- Test harnesses: Build automated tests for tool-call logic, especially for high-risk actions.

- Progressive rollouts: Start with read-only or dry-run actions before enabling writes.

- Repeatable execution process: Create a standard tool-use workflow with error handling to reduce repeat failures.
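A tool contract combined with a dry-run default might look like the sketch below. The contract format (a mapping of argument names to expected types), the `issue_refund` tool, and the returned fields are assumptions chosen to keep the example self-contained; schema libraries offer richer validation.

```python
# Hypothetical contract: argument name -> required Python type.
REFUND_CONTRACT = {"order_id": str, "amount": float}


def validate_args(contract: dict, args: dict) -> list:
    """Return a list of contract violations (empty means the call is valid)."""
    errors = []
    for name, expected in contract.items():
        if name not in args:
            errors.append(f"missing: {name}")
        elif not isinstance(args[name], expected):
            errors.append(f"wrong type: {name}")
    errors += [f"unexpected: {name}" for name in args if name not in contract]
    return errors


def issue_refund(args: dict, dry_run: bool = True) -> dict:
    """Validate first; only perform the write when dry_run is switched off."""
    errors = validate_args(REFUND_CONTRACT, args)
    if errors:
        return {"ok": False, "errors": errors}
    if dry_run:
        return {"ok": True, "executed": False}  # nothing was changed
    # A real implementation would call the payment system here.
    return {"ok": True, "executed": True}


print(issue_refund({"order_id": "A-17", "amount": "25"}))
print(issue_refund({"order_id": "A-17", "amount": 25.0}))
print(issue_refund({"order_id": "A-17", "amount": 25.0}, dry_run=False))
```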

5. Security and compliance

Challenge:

Agents can leak sensitive data or trigger actions outside access control boundaries.

Best practices:

- Role-based execution policies: Limit tool and data access based on role/context.

- Data masking/redaction: Anonymize sensitive information before LLM use.

- Audit trails: Log every action with timestamps and responsible components.

- Approval gates: Require human approval for sensitive or customer-facing outputs.
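Data masking before LLM use can be sketched with regular expressions. The two patterns below (email addresses and US-style Social Security numbers) are simplified assumptions for illustration and will not catch every format; production systems usually combine pattern matching with dedicated PII detection.

```python
import re

# Simplified, illustrative patterns; real PII detection needs broader coverage.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
SSN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")


def redact(text: str) -> str:
    """Replace sensitive values with placeholders before sending text to an LLM."""
    text = EMAIL.sub("[EMAIL]", text)
    return SSN.sub("[SSN]", text)


message = "Contact jane.doe@example.com, SSN 123-45-6789."
print(redact(message))
# Contact [EMAIL], SSN [SSN].
```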

6. Workflow drift and hidden failures at scale

Challenge:

As agents scale, behavior drift can cause invisible failures or inconsistencies if left unchecked.

Best practices:

- Continuous shadow mode: Run agents in parallel and compare outcomes.

- A/B testing: Evaluate performance differences across agent versions.

- Automated regression tests: Ensure updates don't break previously successful behaviors.

7. Vast solution space and lack of focus

Challenge:

When agents have too many possible actions, they may waste time exploring irrelevant options or fail to converge on optimal ones.

Best practices:

- Domain-specific heuristics: Embed business rules, priorities, and constraints to guide action selection.

- External guidance systems: Use rules engines or curated decision trees to filter options before reasoning.

- Contextual knowledge: Preload agents with relevant sources, formats, and validation steps for the domain.
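Narrowing the solution space can be as simple as filtering the candidate actions with business rules before the agent reasons over them. The action names, the claim fields, and both rules in this sketch are hypothetical.

```python
CANDIDATE_ACTIONS = [
    "approve_claim",
    "deny_claim",
    "request_documents",
    "escalate_to_human",
]


def allowed_actions(claim: dict) -> list:
    """Apply business rules to shrink the option set before any reasoning step."""
    actions = list(CANDIDATE_ACTIONS)
    if claim["amount"] > 10_000:
        # Hypothetical rule: large claims are never decided automatically.
        actions = [a for a in actions if a not in ("approve_claim", "deny_claim")]
    if claim["documents_complete"]:
        # Nothing left to request when the file is already complete.
        actions.remove("request_documents")
    return actions


print(allowed_actions({"amount": 25_000, "documents_complete": True}))
print(allowed_actions({"amount": 500, "documents_complete": False}))
```

The agent then chooses only among the returned options, so it spends no reasoning effort on actions the business would reject anyway.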

Why Binariks: From architecture to enterprise integration

Building architectures for different AI agents is a complex process with many potential setbacks that call for top-notch professionals. Binariks is a reliable partner for implementing AI architectures thanks to our full-stack expertise, from designing scalable agentic architectures to integrating them into enterprise-grade environments.

Here is what we provide:

- Regulated industry expertise (healthcare, finance, insurance, and logistics)

- Security-first architecture

- Seamless enterprise integration with EHRs, CRMs, ERPs, or proprietary tools

- Advanced agent frameworks with multi-agent systems

- Proven delivery at scale

Conclusion

As AI agents evolve from experimental tools to enterprise-ready systems, they offer a strategic advantage in automating complex, document-heavy, and compliance-driven workflows. Looking ahead, next-gen agentic systems will feature greater autonomy, real-time collaboration across distributed environments, and seamless integration with IoT infrastructure.

Want to explore agent-based automation for your use case? Schedule a discovery session with Binariks to map out your journey.
