Frontline workers – nurses, warehouse pickers, field technicians, logistics staff, and others – operate in environments where attention, speed, and physical coordination matter more than polished dashboards. They rarely have the luxury of stopping to unlock a device and navigate menus.

That is where AI-driven voice interfaces shift from a convenience feature to a productivity enabler. Voice-activated enterprise workflows – AI-driven systems that convert spoken commands into structured business actions – allow workers to interact with enterprise systems without breaking their flow or compromising safety.

Traditional touch-based and visual interfaces were designed for desks, not shop floors or hospital corridors. When hands are busy and eyes must stay on the task, every manual interaction becomes friction.

Voice-first workflow automation addresses this gap by turning spoken commands into structured system actions, reducing context switching, and keeping frontline teams focused on what actually matters.

In this article, you will learn:

- Why conventional UI patterns fail in hands-busy, eyes-busy frontline environments

- Where voice-driven business process automation delivers measurable efficiency gains

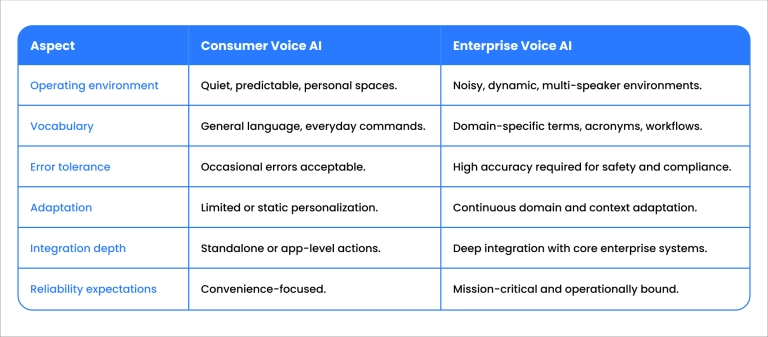

- How enterprise-grade voice AI systems differ from consumer voice assistants

- Technical, security, and integration challenges specific to regulated industries and mission-critical deployments

Read on to see how voice-enabled enterprise automation moves from concept to operational advantage in frontline settings.

The gap in frontline productivity

Frontline productivity rarely collapses because teams lack discipline or experience. It breaks when tools demand visual attention and manual input in environments where both are a liability. Voice AI interfaces surface this gap by removing screens from the interaction loop and exposing how much friction traditional systems introduce into routine tasks.

The operational pain points repeat across industries:

- Delayed data entry, when information is logged after the fact instead of at the moment of action

- Attention switching, forcing workers to look away from patients, equipment, or moving inventory

- Higher error rates, caused by rushed inputs or incomplete records

- Safety exposure, especially in settings where stopping or looking down creates risk

- Low system adoption, when tools slow people down instead of supporting their workflow

As organizations move beyond basic command-and-control voice interfaces toward voice-first agentic interfaces (systems that interpret intent, trigger multi-step actions, and guide next steps without constant prompts), many invest in AI/ML development services to align interaction models with real operational constraints.

AI voice agents are increasingly used to handle structured reporting, task confirmation, and system updates in motion-heavy roles, reducing latency while keeping workers focused on the physical task at hand.


Use cases: Voice efficiency in action

Voice interaction shows its real value when it disappears into the workflow and supports work that cannot be paused. Across frontline-heavy industries, voice-activated enterprise workflows reduce friction by letting systems adapt to human motion, not the other way around.

Healthcare: Clinical documentation & patient care

Clinicians use voice-activated clinical workflows to capture observations, confirm medication steps, and navigate electronic health records (EHR) without stepping away from patients. This can cut clinical documentation time by up to 50% and lower cognitive load during critical moments, especially when speed and accuracy directly affect outcomes.

Logistics & warehousing: Hands-free inventory management

Warehouse staff rely on voice-directed picking systems, which deliver spoken instructions for picking, sorting, and inventory updates while workers stay mobile. These environments expose speech recognition to heavy background noise, which enterprise-grade voice AI solutions address through domain tuning and noise-adaptive acoustic models designed for forklifts, conveyors, and open floors.

Field service & maintenance: Voice-enabled work orders

Technicians in the field use voice commands to log inspections, retrieve equipment manuals, and confirm task completion while working with tools or machinery.

Voice-activated field service workflows reduce delays caused by gloves, poor lighting, or unsafe conditions where screens are impractical.

Manufacturing & shop floor operations: Real-time quality control

On production lines, operators issue voice commands, receive alerts, and confirm quality checks without interrupting workflows. This voice-first manufacturing model supports consistent execution and faster issue response without introducing extra hardware complexity.

Retail & on-site operations: Voice-enabled inventory checks

In-store and on-site teams use voice interfaces to check stock levels, initiate replenishment, or coordinate tasks across locations. These scenarios increasingly rely on voice interfaces and AI-driven experiences, backed by scalable infrastructure, often implemented through cloud development to support real-time processing across distributed retail environments.

Technical challenges in enterprise environments

Voice AI systems behave very differently outside controlled consumer settings. On factory floors, in warehouses, or in busy clinical environments, speech input is distorted by machinery, overlapping conversations, and inconsistent acoustics.

Handling background noise becomes a core engineering problem rather than an edge case, requiring noise-robust acoustic models, directional microphone arrays, and continuous model fine-tuning.

Another critical layer is domain-specific language adaptation. Enterprise voice AI systems must understand industry-specific terminology – medical acronyms (NPO, PRN, BID), logistics codes (SKU, ASN, BOL), manufacturing terms (OEE, SPC, TPM) – that generic consumer voice models were never trained on.

Without domain adaptation, recognition accuracy drops quickly, leading to errors, repeated commands, and user frustration.
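One small piece of domain adaptation can be sketched as a post-processing step that maps spelled-out acronyms in a transcript back to their canonical form. This is only an illustration: the lexicon entries and the `normalize_transcript` helper are hypothetical, and production systems typically adapt the acoustic and language models themselves rather than relying on text substitution alone.

```python
import re

# Hypothetical lexicon: spoken or mis-transcribed variant -> canonical term.
# The entries are illustrative assumptions, not output from a real recognizer.
CLINICAL_LEXICON = {
    "n p o": "NPO",
    "p r n": "PRN",
    "b i d": "BID",
    "bid": "BID",
}


def normalize_transcript(text: str, lexicon: dict[str, str]) -> str:
    """Lowercase the transcript, then restore canonical domain terms."""
    result = text.lower()
    # Try longer variants first so "b i d" is handled before "bid".
    for variant in sorted(lexicon, key=len, reverse=True):
        pattern = r"\b" + re.escape(variant) + r"\b"
        result = re.sub(pattern, lexicon[variant], result)
    return result


print(normalize_transcript("Patient is N P O, give ibuprofen p r n", CLINICAL_LEXICON))
```

The same pattern would apply to logistics or manufacturing vocabularies by swapping in a different lexicon.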

These differences explain why consumer-grade voice tools rarely scale into frontline operations without significant architectural changes. Enterprise voice AI deployments demand speech systems engineered for precision, resilience, and tight alignment with operational reality, not just conversational fluency.

Seamless integration & accuracy

Voice becomes truly useful in enterprise settings only when it operates as a native part of core systems, not a bolt-on layer. In healthcare and supply chain environments, AI-powered voice interfaces connect directly to platforms such as electronic health records (EHRs) and warehouse management systems (WMSs), turning spoken input into structured records, status updates, or task confirmations in real time.

This integration relies on well-defined APIs, event-driven architectures, and strict data validation. Whether a nurse is updating a patient record or a warehouse worker is confirming a pick, the voice layer must translate intent into precise system actions without adding latency or ambiguity. Even slight delays or misinterpretations can break trust and slow adoption.
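To make the idea of translating intent into a validated system action more concrete, here is a minimal sketch for a warehouse pick confirmation. The command grammar ("confirm pick <SKU> quantity <N>"), the `PickConfirmation` record, and the `max_quantity` limit are all assumptions made for illustration; a real deployment would use the schema of its own WMS.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class PickConfirmation:
    """Structured record that would be handed to a backend system."""
    sku: str
    quantity: int


# Hypothetical command grammar: "confirm pick <SKU> quantity <N>".
_PICK_PATTERN = re.compile(
    r"^confirm pick (?P<sku>[a-z0-9-]+) quantity (?P<qty>\d+)$"
)


def parse_pick_command(transcript: str, max_quantity: int = 500) -> PickConfirmation:
    """Turn a transcript into a validated record, or raise ValueError."""
    match = _PICK_PATTERN.match(transcript.strip().lower())
    if match is None:
        raise ValueError(f"unrecognized command: {transcript!r}")
    quantity = int(match.group("qty"))
    # Reject values that parse correctly but make no operational sense.
    if not 1 <= quantity <= max_quantity:
        raise ValueError(f"quantity out of range: {quantity}")
    return PickConfirmation(sku=match.group("sku").upper(), quantity=quantity)


print(parse_pick_command("Confirm pick AB-1234 quantity 12"))
```

Rejecting malformed or out-of-range input before it reaches the backend is what keeps a misheard command from becoming a bad record.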

Accuracy is reinforced through confidence scoring and feedback loops. Modern systems do not just process speech; they evaluate certainty and request clarification when confidence drops below a threshold (often set around 85-90%).

This is where generative AI adds value to voice interfaces: it handles natural language variability while still enforcing structured outcomes. Such systems are often deployed on secure platforms such as healthcare cloud solutions to support scalability and compliance.
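The confidence check described above can be sketched as a simple gate. The `Recognition` and `Decision` types, the `gate` function, and the default threshold of 0.85 are assumptions for illustration; actual recognizers report confidence in their own formats and scales.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Recognition:
    transcript: str
    confidence: float  # assumed to be normalized to the range 0.0-1.0


@dataclass
class Decision:
    execute: bool
    prompt: Optional[str]  # clarification question, if one is needed


def gate(recognition: Recognition, threshold: float = 0.85) -> Decision:
    """Execute confident commands; otherwise ask the worker to confirm."""
    if not 0.0 <= recognition.confidence <= 1.0:
        raise ValueError("confidence must be between 0.0 and 1.0")
    if recognition.confidence >= threshold:
        return Decision(execute=True, prompt=None)
    return Decision(
        execute=False,
        prompt=f'Did you say "{recognition.transcript}"?',
    )


print(gate(Recognition("confirm pick ab-1234 quantity 12", 0.93)))
print(gate(Recognition("confirm pick ab-1234 quantity 12", 0.61)))
```

Logging which clarification prompts are confirmed or rejected is one way such a gate could feed the feedback loop that tunes the threshold over time.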

Together, tight integration, low-latency processing, and confidence-aware responses ensure that voice-activated enterprise workflows remain fast, reliable, and trusted in high-stakes environments.

Security, privacy, and HIPAA compliance

Security becomes non-negotiable once voice AI systems handle clinical data, operational records, or regulated workflows. In these environments, voice interfaces and chatbots must apply the same controls as core business systems, including encryption in transit and at rest, strict identity management (role-based access control, multi-factor authentication), and detailed audit logs that track who accessed what and when.
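As a rough sketch of how role-based access control and audit logging might wrap a voice command before it executes, consider the example below. The role names, the permission table, and the `AuditLog` class are hypothetical; a real system would delegate these checks to its identity provider and write to tamper-resistant log storage.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical permission table mapping roles to allowed voice actions.
ROLE_PERMISSIONS = {
    "nurse": {"record_observation", "confirm_medication"},
    "picker": {"confirm_pick"},
}


@dataclass
class AuditLog:
    entries: list = field(default_factory=list)

    def record(self, user_id: str, role: str, action: str, allowed: bool) -> None:
        # Store who attempted what, when, and whether it was permitted.
        self.entries.append({
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "user_id": user_id,
            "role": role,
            "action": action,
            "allowed": allowed,
        })


def authorize(user_id: str, role: str, action: str, log: AuditLog) -> bool:
    """Check the role's permissions and log the attempt either way."""
    allowed = action in ROLE_PERMISSIONS.get(role, set())
    log.record(user_id, role, action, allowed)
    return allowed


log = AuditLog()
print(authorize("u-17", "nurse", "confirm_medication", log))
print(authorize("u-42", "picker", "confirm_medication", log))
print(len(log.entries))
```

Denied attempts are logged as well as granted ones, since both are relevant when reconstructing who accessed what and when.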

One of the central design decisions is where speech processing occurs. Cloud-based voice processing enables scalability and centralized model management, while on-device speech inference reduces data exposure by keeping raw audio local.

Many production deployments of enterprise conversational AI use a hybrid approach, processing sensitive commands locally and sending only structured, non-identifiable outputs to backend systems.
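One way to picture the hybrid approach is an allowlist applied on the device before anything is transmitted. In this sketch, the field names (`intent`, `slots`, `confidence`, `raw_audio`, `transcript`) and the `to_backend_payload` helper are assumptions for illustration, and whether a given field counts as non-identifiable depends on the deployment's own compliance review.

```python
# Hypothetical allowlist of fields that may leave the device.
FORWARDED_FIELDS = ("intent", "slots", "confidence")


def to_backend_payload(local_result: dict) -> dict:
    """Copy only allowlisted fields; raw audio and transcript stay local."""
    missing = [key for key in FORWARDED_FIELDS if key not in local_result]
    if missing:
        raise KeyError(f"local result is missing fields: {missing}")
    return {key: local_result[key] for key in FORWARDED_FIELDS}


# Example result as it might look after on-device speech processing.
local_result = {
    "raw_audio": b"\x00\x01\x02",
    "transcript": "confirm pick ab-1234 quantity 12",
    "intent": "confirm_pick",
    "slots": {"sku": "AB-1234", "quantity": 12},
    "confidence": 0.93,
}

print(to_backend_payload(local_result))
```

An allowlist fails closed: a new field added to the local result is not transmitted until someone explicitly approves it.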

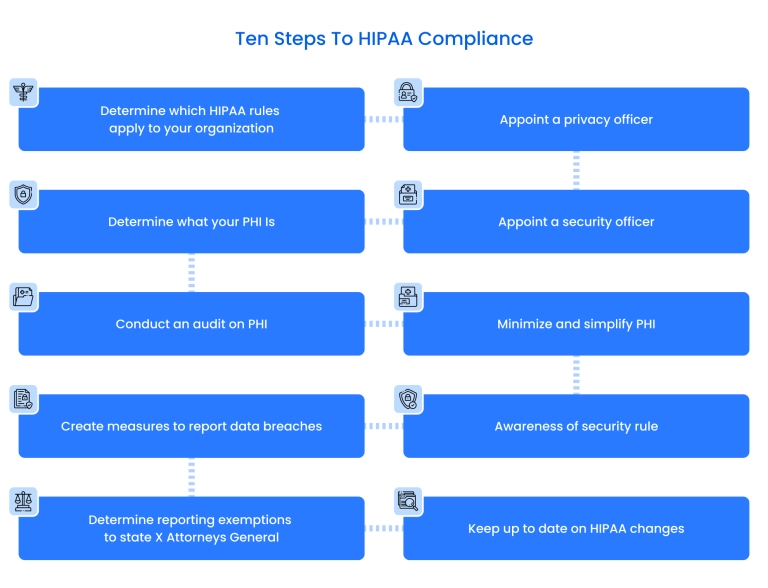

In healthcare, these architectures must align with HIPAA Security Rule requirements, which define safeguards for electronic protected health information (ePHI), including access control, transmission security, and integrity mechanisms.

Official guidance from the US Department of Health & Human Services outlines these requirements in detail and serves as a reference point for validating HIPAA-compliant voice-enabled systems.

Conclusion

Voice-activated enterprise workflows solve a concrete operational problem: enabling frontline teams to work faster and safer without stopping to interact with screens. When voice AI is deeply integrated into core business systems, it improves data quality, reduces cognitive load, and supports consistent execution in high-pressure environments.

At Binariks, we help organizations design and implement enterprise-grade voice automation solutions that fit real operational constraints, from system integration and domain-specific model training to security architecture and regulatory compliance.

If you are looking to automate frontline workflows with reliable, production-ready voice AI technology, now is the right time to start that conversation and turn voice into a measurable operational advantage.
