Global data is projected to reach around 181 zettabytes in 2025, growing by about 55%-65% each year. About 80 percent of that data is unstructured, and many companies struggle to process it effectively or use it in analytics.

Unstructured data processing is the process of making sense of unstructured data to gain a competitive advantage. In this article, we explain how unstructured data processing works using a real-world example from the insurance industry.

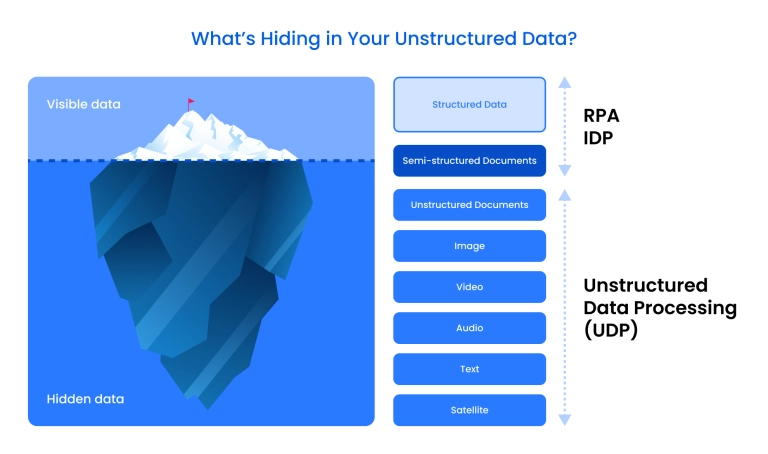

What is unstructured data?

Unstructured data is information that does not adhere to a predefined data model or format. It cannot be easily stored in relational databases or analyzed with standard tools because it is not organized in rows and columns.

However, this data contains plenty of valuable insights for the company, so organizations must extract them to give the business a competitive advantage.

Types of unstructured data

Examples include:

- Emails – contain text, attachments, and metadata.

- PDF documents – mix text, images, and tables in irregular formats.

- Media files – such as photos, videos, and audio recordings.

- IoT data streams – sensor readings and logs in diverse, unformatted structures. Examples include data from smart thermostats, factory machines, and wearable devices such as fitness trackers.

- Social media posts

- Customer feedback

Unstructured data can be roughly divided into data generated by humans and data generated by machines.

Structured vs. unstructured data

What is unstructured data processing (UDP)?

Unstructured Data Processing (UDP) is the practice of extracting information from data that lacks a predefined format. The goal is to transform raw unstructured data, such as multimedia files, into structured, actionable insights that have value for businesses.

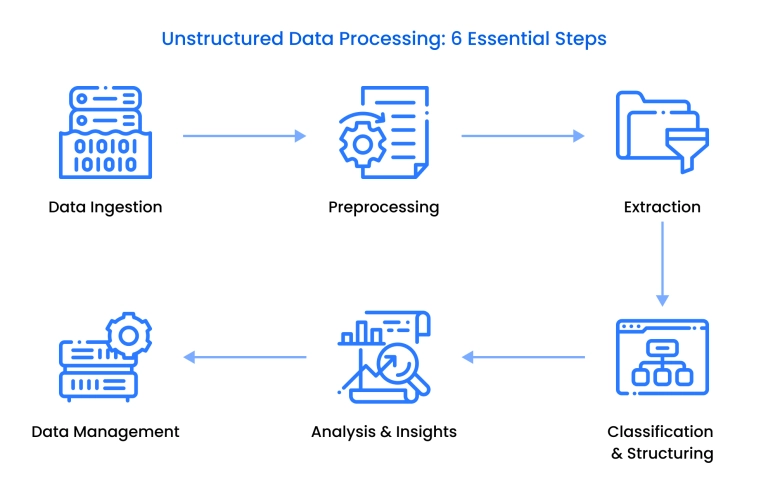

How does unstructured data processing work?

Processing unstructured data occurs in several stages. Here is a detailed look at each of them:

- Data ingestion

This involves the initial collection of data from multiple unstructured sources. Methods include web scraping, API integration, and file parsing. Intelligent document sourcing can extract unstructured data that is stored in legacy systems.

Teams may use tools like LlamaParse or build custom extractors for specific formats. The collected data is then stored in repositories in its original, raw format.
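As a rough illustration of file parsing at the ingestion stage, here is a minimal sketch that uses Python's standard `email` module to turn a raw email into a record. The record layout, the `ingest_email` helper, and the sample message are assumptions made for this example, not part of any specific tool mentioned above.

```python
import hashlib
from email import message_from_string

# Hypothetical sample message; a real pipeline would read this from a mailbox or file.
SAMPLE_EMAIL = (
    "From: broker@example.com\n"
    "Subject: Quote request\n"
    "\n"
    "Please quote group life cover for 120 employees.\n"
)


def ingest_email(raw: str) -> dict:
    """Parse a raw email into a record while keeping the original text untouched."""
    msg = message_from_string(raw)
    body_parts, attachments = [], []
    for part in msg.walk():
        if part.is_multipart():
            continue  # containers hold no content of their own
        filename = part.get_filename()
        if filename:
            attachments.append(filename)  # record the name; parsing happens later
        elif part.get_content_type() == "text/plain":
            payload = part.get_payload(decode=True) or b""
            charset = part.get_content_charset() or "utf-8"
            body_parts.append(payload.decode(charset, errors="replace"))
    return {
        "source_id": hashlib.sha256(raw.encode("utf-8")).hexdigest()[:16],
        "sender": msg.get("From", ""),
        "subject": msg.get("Subject", ""),
        "body": "\n".join(body_parts).strip(),
        "attachments": attachments,
        "raw": raw,  # stored as received, alongside the parsed fields
    }


record = ingest_email(SAMPLE_EMAIL)
print(record["subject"], "|", record["body"])
```

Keeping the raw text next to the parsed fields means later stages can be re-run if the parsing logic changes.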

- Preprocessing

This process involves cleaning and normalizing data (e.g., removing noise, duplicates, or irrelevant content) to prepare it for later analysis. For AI use cases, data is often chunked into smaller, semantically meaningful units and formatted as JSON at this stage for downstream processing. Embeddings may be generated to enable semantic search or retrieval in GenAI pipelines.

NLP and image recognition are also used here to distinguish between facts and opinions and extract text from large files. Preprocessing reduces noise and ensures quality before models automate unstructured data extraction downstream.
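The cleaning, deduplication, chunking, and JSON formatting steps can be sketched with the standard library alone. This is a simplified illustration: it splits text into fixed-size word windows with overlap, which is only a stand-in for semantically aware chunking, and the function names and default sizes are assumptions.

```python
import hashlib
import json
import re


def normalize(text: str) -> str:
    """Collapse runs of whitespace so equivalent texts compare equal."""
    return re.sub(r"\s+", " ", text).strip()


def deduplicate(docs: list[str]) -> list[str]:
    """Keep the first copy of each document, ignoring case and spacing."""
    seen, unique = set(), []
    for doc in docs:
        key = hashlib.sha256(normalize(doc).lower().encode("utf-8")).hexdigest()
        if key not in seen:
            seen.add(key)
            unique.append(doc)
    return unique


def chunk(text: str, max_words: int = 50, overlap: int = 10) -> list[str]:
    """Split text into overlapping word windows (a stand-in for semantic chunking)."""
    if overlap >= max_words:
        raise ValueError("overlap must be smaller than max_words")
    cleaned = normalize(text)
    if not cleaned:
        return []
    words = cleaned.split(" ")
    chunks = []
    for start in range(0, len(words), max_words - overlap):
        chunks.append(" ".join(words[start:start + max_words]))
        if start + max_words >= len(words):
            break  # the last window already reached the end of the text
    return chunks


def to_json_records(doc_id: str, text: str, **chunk_args) -> str:
    """Serialize the chunks of one document as a JSON array of records."""
    records = [
        {"doc_id": doc_id, "chunk_id": i, "text": c}
        for i, c in enumerate(chunk(text, **chunk_args))
    ]
    return json.dumps(records)


print(to_json_records("doc-1", "a b c d e f g", max_words=4, overlap=1))
```

The overlap keeps some shared context between neighboring chunks, which tends to help retrieval when a relevant sentence straddles a chunk boundary.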

- Extraction

To extract information from unstructured data, we use techniques such as natural language processing (NLP), optical character recognition (OCR), or computer vision to identify and capture meaningful elements.

Newer solutions leverage multimodal AI models that combine text and vision to improve parsing accuracy over traditional IDP tools.

Extraction must be accurate and timely, because errors or delays at this stage carry over into every stage that follows.
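To make the idea of capturing meaningful elements concrete, here is a minimal rule-based sketch. It uses regular expressions rather than NLP, OCR, or computer vision, so treat it as a simplified stand-in for those techniques. The field names and the `POL-` policy number format are hypothetical.

```python
import re

# Hypothetical field patterns; real documents would need their own rules or models.
PATTERNS = {
    "policy_number": re.compile(r"\bPOL-\d{6}\b"),
    "effective_date": re.compile(r"\b\d{4}-\d{2}-\d{2}\b"),
    "email": re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"),
}


def extract_fields(text: str) -> dict:
    """Return the first match for each field, or None when nothing is found."""
    fields = {}
    for name, pattern in PATTERNS.items():
        match = pattern.search(text)
        fields[name] = match.group(0) if match else None
    return fields


def missing_fields(fields: dict) -> list[str]:
    """List the fields that could not be extracted, e.g. for follow-up or review."""
    return [name for name, value in fields.items() if value is None]


text = "Renewal for policy POL-204519 effective 2025-03-01. Contact: jane.doe@example.com."
fields = extract_fields(text)
print(fields, missing_fields(fields))
```

Returning `None` for absent fields, instead of dropping them, makes gaps explicit so a later stage can flag incomplete documents.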

- Classification & structuring

This involves categorizing and converting the extracted data into structured formats suitable for databases, machine learning workflows, or other systems that will analyze the data. The appropriate format is often rows and columns.

The process includes labelling, tagging, and aligning data with domain-specific taxonomies. These structured outputs are what allow teams to truly transform unstructured data into usable information.

Intelligent document processing, which utilizes NLP and machine learning, is also used at this stage. You can also prioritize specific data points that are most relevant to you, such as gender or age.

Outputs are prepared for:

- databases

- document stores

- vector search

- big data services and analytical engines

At this point, the organization's unstructured data stack begins to take shape.
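A toy version of classification and structuring might look like the sketch below. It scores documents against a small keyword taxonomy and writes the result as rows and columns in CSV form. The taxonomy, labels, and helper names are invented for illustration, and a production system would more likely rely on trained models than keyword overlap.

```python
import csv
import io
import re

# Hypothetical domain taxonomy: label -> keywords that suggest it.
TAXONOMY = {
    "claim": {"claim", "incident", "loss", "damage"},
    "quote_request": {"quote", "premium", "coverage", "employees"},
    "compliance": {"audit", "regulation", "gdpr", "hipaa"},
}


def classify(text: str) -> str:
    """Pick the label whose keywords overlap most with the text, else 'unknown'."""
    tokens = set(re.findall(r"[a-z]+", text.lower()))
    scores = {label: len(tokens & keywords) for label, keywords in TAXONOMY.items()}
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else "unknown"


def to_rows(docs: dict[str, str]) -> list[dict]:
    """Turn {doc_id: text} into uniform rows ready for a table or database."""
    return [
        {"doc_id": doc_id, "label": classify(text), "text": text}
        for doc_id, text in docs.items()
    ]


def to_csv(rows: list[dict]) -> str:
    """Serialize rows as CSV with a fixed column order."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=["doc_id", "label", "text"])
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


docs = {
    "d1": "Please send a quote for coverage of 120 employees",
    "d2": "Reporting water damage, claim to follow",
    "d3": "Hello there",
}
print(to_csv(to_rows(docs)))
```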

- Analysis & insights

This process involves applying analytics or machine learning models to generate insights, detect trends, or automate decision-making. It should align with your organization's specific goals, such as boosting sales or increasing ROI.

This stage often uses Python-native processing engines (e.g., Polars, Daft) and stores results in vector databases or document stores for real-time access and AI-driven applications. Insights can also be visualized via charts or graphs.

- Data management

This stage focuses on storing, organizing, and governing unstructured data so that it can be accessed and retrieved. Teams rely on object storage (e.g., Amazon S3, Azure Blob), vector databases (e.g., Weaviate, Qdrant), and document databases (e.g., Couchbase, Firebase) to support AI workloads and optimize data infrastructure.

New file formats like Lance, Nimble, and Vortex offer faster retrieval and better support for wide, AI-specific datasets compared to Parquet. Strong data governance is required to comply with regulations such as GDPR or HIPAA.

This layer often integrates with data science services to build downstream predictive or generative models.

Key challenges in processing unstructured data

Data volume and variety

Unstructured data is generated at a massive scale. The constant influx from multiple channels (internal systems, third-party sources, and customer interactions) can create issues with storage and indexing. Traditional systems are often unprepared to handle such diversity at speed and on a large scale.

Scalable cloud infrastructure (e.g., AWS S3, Azure Data Lake) combined with distributed processing frameworks, such as Apache Spark or Ray, is the solution here, along with data lakehouse architectures. This infrastructure helps handle large data volumes.

Inconsistent formats

Unlike structured data with defined schemas, unstructured inputs come in unpredictable formats, such as scanned invoices, handwritten notes, mixed-language documents, or multimedia files.

This inconsistency makes it hard to apply one-size-fits-all extraction tools and often requires case-specific preprocessing pipelines or format-specific AI models. A multi-layered extraction approach that combines OCR, NLP, and computer vision is required to process the inconsistent formatting.

Data quality and noise

Unstructured data is messy. It frequently includes irrelevant sections, duplicate content, formatting errors, or outdated information.

For example, a contract PDF might contain boilerplate text, margin notes, or embedded scans, all of which can dilute accuracy unless filtered out. Without strong preprocessing, downstream AI models risk being trained or evaluated on flawed inputs.
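One simple way to filter this kind of noise is to drop lines that repeat across most pages of a document, such as running headers and footers. The sketch below assumes the document has already been split into page texts. The `min_fraction` threshold and the sample pages are assumptions chosen for illustration.

```python
from collections import Counter


def strip_repeated_lines(pages: list[str], min_fraction: float = 0.6) -> list[str]:
    """Remove lines that appear on at least min_fraction of the pages."""
    if len(pages) < 2:
        return list(pages)  # repetition cannot be judged from a single page
    counts = Counter()
    for page in pages:
        # Count each distinct line once per page.
        counts.update({line.strip() for line in page.splitlines() if line.strip()})
    threshold = min_fraction * len(pages)
    boilerplate = {line for line, seen in counts.items() if seen >= threshold}
    cleaned = []
    for page in pages:
        kept = [
            line.strip()
            for line in page.splitlines()
            if line.strip() and line.strip() not in boilerplate
        ]
        cleaned.append("\n".join(kept))
    return cleaned


# Hypothetical three-page contract with a repeated header.
PAGES = [
    "ACME Insurance | Confidential\nClause 1: Coverage begins on signing.\nPage 1",
    "ACME Insurance | Confidential\nClause 2: Claims must be filed within 30 days.\nPage 2",
    "ACME Insurance | Confidential\nClause 3: Either party may terminate in writing.\nPage 3",
]
for page in strip_repeated_lines(PAGES):
    print(page, end="\n---\n")
```

Page numbers survive in this version because each one is unique. Catching them would require normalizing digits before counting, at the risk of removing legitimate lines that differ only by a number.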

Scalability and performance

Real-time or near-real-time processing of large volumes, such as live chat logs or high-resolution video calls, requires not only computational power but also intelligent resource allocation. Scaling from pilot to production often exposes architectural flaws.

To solve this issue, use cloud-native, containerized architectures (e.g., Kubernetes, serverless, Kafka) for dynamic scaling. Stream processing tools like Flink or Spark Streaming ensure low-latency performance, while observability tools (e.g., Prometheus, Grafana) help monitor and optimize throughput.

Security and compliance

Unstructured data often includes sensitive or regulated content. If this data is not protected by proper access control and encryption, organizations risk compliance violations (such as GDPR and HIPAA) and reputational damage.

To solve this challenge, implement strong data governance and security frameworks with role-based access control (RBAC), encryption at rest and in transit, and regular audit logging. Utilize data loss prevention (DLP) tools and compliance automation platforms to identify sensitive information promptly and ensure alignment with standards such as GDPR, HIPAA, and SOC 2.
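As a small illustration of how sensitive-data detection and role-based access can work together, the sketch below masks email addresses and US-style Social Security numbers unless the caller's role is allowed to see them. The roles, the `read_pii` permission, and the patterns are hypothetical. Real DLP tools cover far more data types and use more robust detection.

```python
import re

# Hypothetical detectors for two kinds of sensitive data.
DETECTORS = {
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}

# Hypothetical role-to-permission mapping for RBAC.
ROLE_PERMISSIONS = {
    "underwriter": {"read_pii"},
    "analyst": set(),
}


def redact(text: str) -> tuple[str, list[tuple[str, int]]]:
    """Mask detected values and report how many of each type were found."""
    findings = []
    for label, pattern in DETECTORS.items():
        text, count = pattern.subn(f"[{label}]", text)
        if count:
            findings.append((label, count))
    return text, findings


def view_for_role(role: str, text: str) -> str:
    """Return the raw text only to roles with read_pii; everyone else gets it masked."""
    if "read_pii" in ROLE_PERMISSIONS.get(role, set()):
        return text
    return redact(text)[0]


note = "Contact jane@example.com, SSN 123-45-6789."
print(view_for_role("analyst", note))
print(redact(note)[1])
```

Unknown roles fall through to the masked view, so the default is to deny access to sensitive values.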

Business applications of unstructured data processing

Unstructured data management is beneficial for any business that can gain valuable insights from it. It matters most in industries that handle large volumes of unstructured data, where much of the critical information lives outside of databases. Here are a few examples of how businesses apply unstructured data processing:

Insurance and financial services

- Streamlining how teams handle broker submissions and claim forms

- Pulling out key data from quotes, contracts, or compliance documents

- Flagging missing fields or inconsistencies before underwriting

- Summarizing past coverage or incident history for faster decision-making

- Evaluating credit risks and detecting fraud

- Adapting to requirements from regulators such as the SEC and FINRA and to regulations such as GDPR

- In our example at Binariks, a major insurer we worked with reduced quote processing from 15 days to just minutes using an AI-based document pipeline.

- Another client, a global risk management firm, reduced intake time by 90%, eliminated over 80% of manual work, and achieved audit-ready compliance logs by automating claim submissions in their Australian operations.

- Powering an AI solution for insurance underwriting

Healthcare

- Extracting information on medications and procedures from physician notes

- Cleaning up EHR exports for billing and clinical research

- Flagging patterns in unstructured patient records for early diagnosis

- Summarizing lab reports or radiology findings for easier follow-up

- As one of the largest producers of data, healthcare stands to benefit massively from processing it.

Manufacturing and IoT

- Analyzing sensor data and log files to detect equipment issues before failure

- Pulling maintenance history from work orders and inspection notes

- Reading diagrams or scanned manuals to support staff training and device troubleshooting

- Monitoring production lines using image or sound-based inputs

Legal and compliance

- Reviewing contracts to surface non-standard clauses or missing terms

- Tagging and organizing case files across different formats

- Building timelines from email threads and meeting notes

- Ensuring onboarding documents or NDAs are complete and up to date

What are the benefits of unstructured data processing?

Unstructured data processing helps to turn chaotic information into usable intelligence. It enables automation and provides usable analytics for a competitive advantage. Here are the benefits:

- Automating data extraction and classification reduces time spent on manual data processing. For example, in a healthcare study, a zero-shot NLP tool processed unstructured text in a mean of ~12.8 seconds (versus ~101 seconds for human abstractors) while maintaining comparable accuracy. Human effort spent on manual data extraction can be redirected elsewhere.

- Unstructured data processing enables better decision-making through enhanced insights. For example, you can discover that customer satisfaction dropped 15% after the latest UI update or that most service delays occur at the document review stage. All of this fuels the organization's data analytics strategy.

- UDP solutions are scalable and flexible: they can handle growing data volumes and adapt to new document types without re-engineering entire workflows.

- Automated tracking and audit trails help with regulatory compliance.

- Unstructured data processing saves costs: by reducing repetitive manual work and inefficient document handling, companies can reallocate resources to high-value tasks.

- Once unstructured data is standardized, it becomes a pipeline for innovation through predictive analytics and AI-driven insights.

Techniques and tools for unstructured data processing

Unstructured data processing is basically a blend of advanced AI technologies and scalable infrastructure.

Key unstructured data analysis techniques and technologies used throughout the process include:

- Artificial intelligence (AI)

AI forms the foundation of UDP. It is what helps analyze complex data patterns and automate their interpretation.

- Machine learning pipelines

In the context of unstructured data, ML pipelines help extract insights from messy formats by following a structured sequence of steps that process the data. ML pipelines used to process data are:

- NLP pipelines – for text classification, entity extraction, and summarization

- OCR and document pipelines – for scanned documents, forms, and handwriting

- Image and video pipelines – for visual inspection, detection, tagging

- Multimodal pipelines – combining text, image, or audio inputs

- RAG pipelines – for retrieval-augmented generation and semantic search

Some of these pipelines warrant a closer look.

- Natural Language Processing (NLP)

NLP is a branch of AI that enables computers to understand and extract meaning from human language across various types of text-based unstructured data, such as emails and social media posts.

Some core features of natural language processing include text classification, sentiment analysis, and information extraction. NLP identifies written information and translates it into a machine-readable format.

- Computer vision

This technology interprets images, scanned documents, and handwritten text using techniques such as Optical Character Recognition (OCR). It can identify objects, people, and other visual cues in an image.

- Cloud platforms (AWS, GCP, Azure)

Cloud is a scalable infrastructure for the storage and orchestration of high-volume, real-time data processing.

- Data integration and ETL tools

Solutions like Apache NiFi, Airflow, or Azure Data Factory help collect, clean, and move data between systems. Apache NiFi automates data movement, Apache Airflow schedules and orchestrates complex data pipelines, while Azure Data Factory is a Microsoft tool for moving data in the cloud.

- Vector databases and RAG

These store semantic representations of text and enable GenAI systems to retrieve relevant context for reasoning tasks.
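The retrieval step can be illustrated with a minimal in-memory sketch that ranks stored vectors by cosine similarity to a query vector. The three-dimensional vectors here are hand-written toy values, not real embeddings, and the `top_k` helper is an assumption for this example. A vector database performs the same kind of ranking at scale, typically with approximate indexes.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two vectors; 0.0 if either has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def top_k(query: list[float], index: dict[str, list[float]], k: int = 2) -> list[tuple[str, float]]:
    """Return the k document ids most similar to the query, best first."""
    scored = [(cosine(query, vector), doc_id) for doc_id, vector in index.items()]
    scored.sort(reverse=True)
    return [(doc_id, round(score, 3)) for score, doc_id in scored[:k]]


# Toy "embeddings" for three document types (illustrative values only).
INDEX = {
    "claim_form": [0.9, 0.1, 0.0],
    "quote_request": [0.1, 0.9, 0.1],
    "nda": [0.0, 0.1, 0.9],
}
QUERY = [0.2, 0.8, 0.0]

print(top_k(QUERY, INDEX))
```

In a RAG setup, the text behind the top-ranked ids would be passed to the generative model as context.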

- Data visualization and analytics tools

Platforms like Power BI, Tableau, or Looker translate processed data into dashboards for decision-making and reporting.

Best practices for implementing UDP in your organization

1. Define clear business objectives

Start by identifying the pain points. Is the goal faster document turnaround, less manual work, both, or something else entirely?

In one Binariks case, a global risk management firm needed to eliminate hours of manual transcription for case intake. Another insurance client aimed to reduce 15-day quote delays. These clear goals shaped both solutions from day one.

2. Start with high-value use cases

Identify areas where unstructured data creates the most friction or cost. These are the ones that will yield the most significant payoff.

For both our clients, the intake process (claims, quotes, and case requests) was the most manual and error-prone. Automating it created immediate ROI and proved the value of UDP.

3. Ensure data quality and consistency

The success of any AI-driven process depends on the quality of its inputs. Unstructured data processing is meant to improve data quality, but it still needs reasonably clean inputs to work with.

If raw documents contain OCR errors, duplicates, or irrelevant content (e.g., headers, signatures), even the best pipeline will extract noise.

Standardize where possible, remove duplicates, and validate inputs early.

In our insurance project, the system had to handle 20–30 document layouts across PDFs, Word files, and plain text. Preprocessing and format-aware pipelines brought consistency across the board.

4. Choose scalable, cloud-native infrastructure

Cloud platforms like AWS or Azure enable you to scale in a way that makes the most sense for your business while remaining affordable.

Both clients benefited from pay-per-use cloud architecture. In one case, the complete solution scaled for under $2,000 annually, without increasing headcount.

5. Combine AI with human oversight

Automation should support expert judgment without replacing it. Human-in-the-loop (HITL) mechanisms help catch model hallucinations and continuously improve model accuracy.

In the claims intake project, only 10–20% of documents were flagged for manual review. This allowed staff to focus on edge cases while trusting the system to handle the bulk of the work.
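A human-in-the-loop gate is often implemented as a confidence threshold: results below it, or with missing fields, go to a reviewer, and the rest pass through automatically. The sketch below is a generic illustration and not the routing logic used in the project described here. The `Extraction` type, the 0.85 threshold, and the sample batch are assumptions.

```python
from dataclasses import dataclass

REVIEW_THRESHOLD = 0.85  # hypothetical cut-off; tune against real review outcomes


@dataclass
class Extraction:
    doc_id: str
    fields: dict
    confidence: float  # model-reported score between 0 and 1


def route(item: Extraction, threshold: float = REVIEW_THRESHOLD) -> str:
    """Send low-confidence or incomplete extractions to a human reviewer."""
    incomplete = any(value is None for value in item.fields.values())
    if item.confidence < threshold or incomplete:
        return "manual_review"
    return "auto_approve"


def review_rate(items: list[Extraction], threshold: float = REVIEW_THRESHOLD) -> float:
    """Share of items flagged for manual review."""
    if not items:
        return 0.0
    flagged = sum(route(item, threshold) == "manual_review" for item in items)
    return flagged / len(items)


# Hypothetical batch: one low-confidence document out of five.
batch = [
    Extraction("d1", {"insured": "Acme Ltd"}, 0.97),
    Extraction("d2", {"insured": "Globex"}, 0.91),
    Extraction("d3", {"insured": "Initech"}, 0.62),
    Extraction("d4", {"insured": "Umbrella"}, 0.93),
    Extraction("d5", {"insured": "Hooli"}, 0.88),
]
print([route(item) for item in batch], review_rate(batch))
```

Tracking the review rate over time shows whether the threshold is too strict, which overloads reviewers, or too loose, which lets errors through.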

6. Prioritize security and compliance

Implement encryption, role-based access controls, and audit trails from the start.

The risk management client gained complete audit trails through RDS and CloudWatch, something they did not have before, which greatly helped their compliance efforts.

7. Monitor and evaluate consistently

Treat your pipeline as a living system. Track performance and update regularly.

We provided our clients' leadership with real-time dashboards and feedback loops to fine-tune accuracy and adapt as new document types were added.

8. Foster cross-functional collaboration

Bring together engineers, analysts, and subject matter experts to align outcomes with business needs.

In both projects, collaboration between ops, compliance, and data teams ensured the solution didn't just "work" but solved the right problem in the right way. A competent team is everything, and without it, technology is just not enough.

Our experience

At Binariks, we've successfully implemented Unstructured Data Processing (UDP) solutions for large-scale enterprises facing document chaos and operational delays. One of our most impactful projects involved a $10B insurance leader in the U.S. and U.K. markets, specialising in disability, life, accident, and critical illness insurance, along with other employee benefits.

The company received thousands of broker-submitted quote requests daily, arriving in unstructured formats (emails, PDFs, Excel sheets, Word documents, and scanned images). Manual data entry consumed up to 80% of underwriters' time, leading to 15-day processing delays and inconsistent reporting.

Our approach

We developed a comprehensive AI-powered document processing platform tailored to the client's complex data environment:

- Discovery & architecture

Our team of data scientists, Azure architects, and insurance experts began by identifying the lack of labelled datasets as a core limitation. To overcome this, we developed custom tools to curate balanced, high-quality training and testing samples based on real-world documents.

- Phased implementation

We started with a focused proof of concept to validate feasibility and value. From there, we transitioned to iterative delivery, including a Data Platform MVP, an AI Classification & Extraction MVP, and ultimately, a production-ready deployment. Each phase was designed to facilitate rapid feedback, mitigate risk, and enable continuous refinement.

- Intelligent document processing engine

We built a processing engine that combines Optical Character Recognition (OCR), Natural Language Processing (NLP), and GPT-4-based reasoning. This engine classifies and extracts key information from a wide variety of document types, routing low-confidence cases for human review to maintain accuracy.

- Centralized data platform

Our centralized platform, deployed on Azure, supports both batch and real-time ingestion workflows. It includes standardized data models that enable consistent processing and integration with analytics and compliance systems.

- AI-powered decision workflows

We implemented intelligent workflows to automate tasks such as submission triage, appetite fit checks, fraud detection, and risk enrichment. These workflows utilize Retrieval-Augmented Generation (RAG) and vector search to enable context-aware decisions by combining external and internal data sources.

Results

The solution reduced submission processing time from 15 days to minutes, achieving over 80% automation in data extraction and validation. It also provided:

- Real-time insights and consistent underwriting compliance

- Significant cost savings and improved hit/bind rates

- Scalable infrastructure for future AI and data-driven initiatives

- Enhanced compliance through transparent, auditable AI workflows

What began as an operational efficiency project evolved into a strategic digital transformation, positioning the insurer as a technology leader in its industry and setting the foundation for new AI-driven insurance products.

Conclusion

Unstructured data can give companies a competitive edge because it contains valuable insights. These insights are often buried inside emails, PDFs, and other documents, and they get overlooked when there is no framework for processing them.

When organizations learn to utilize this information, they unlock context that drives better decisions. Unstructured Data Processing (UDP) enables this. In our insurance case, combining OCR, NLP, and multimodal AI transformed thousands of unstructured submissions into clean data and cut processing time from 15 days to minutes.

Ready to process your unstructured data at scale? Evaluate your data flow and identify where automation can replace manual effort; then discuss with Binariks how to make it possible.
