Feeling lost in the labyrinth of data engineering tools? This article offers a roadmap. It explores the critical role of data engineering in today's data-driven landscape, outlines the key functionalities to look for in data engineering tools, and presents the top contenders to watch in 2026. We'll also look at Binariks' favorite tools and how we apply them in practice.

Data engineering tools act as the bridge between raw data and actionable insights. Businesses are constantly bombarded with information, from customer interactions and transactions to social media activity. This data deluge holds immense potential for uncovering valuable insights, optimizing operations, and making informed decisions. However, raw data stored in isolated systems leaves most of that potential untapped.

These tools let data engineers transform raw data into a usable, accessible format that is ready for analysis and strategic decision-making. By streamlining data ingestion, transformation, and management, data engineering tools have become essential for any business that relies on data.

Let's delve into the top data engineering tools to watch out for in 2026.

The role of data engineering in 2026

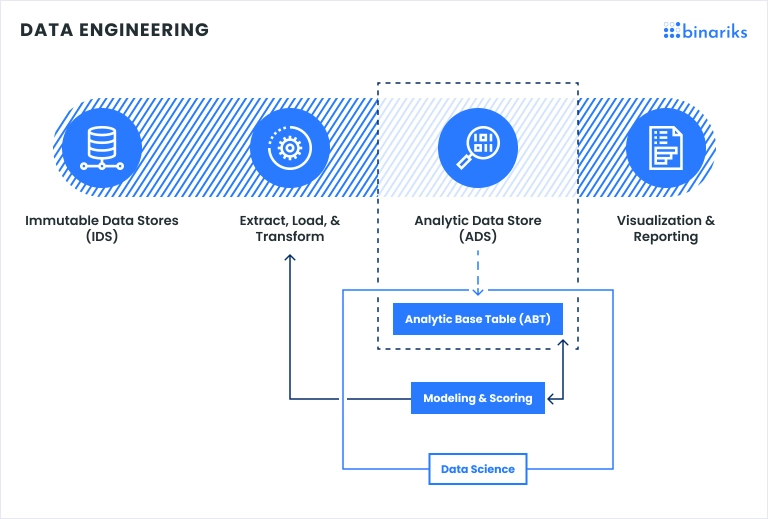

Data engineering is the backbone of any successful data-driven organization. It's the discipline responsible for transforming raw, often messy, data into a clean, structured, and readily available format for analysis.

Imagine a vast warehouse filled with unlabeled boxes. Data engineering acts as the organizer, sorting and labeling these boxes (data sets) so that anyone can easily find the specific information they need.

The impact of data engineering on businesses is nothing short of transformative:

- Informed decision-making: By making data readily available and organized, data engineering empowers businesses to make data-driven decisions. This can involve anything from optimizing marketing campaigns to streamlining product development based on real customer insights.

- Improved efficiency: Data engineering automates tedious tasks like data collection and transformation, freeing up valuable time and resources for other critical activities. Streamlined workflows lead to increased efficiency and cost savings.

- Enhanced innovation: Data engineering unlocks the potential for uncovering hidden patterns and trends within data. This empowers businesses to innovate by identifying new market opportunities and developing data-driven solutions.

Using the proper data engineering tools is crucial for maximizing these benefits. The wrong tools can lead to bottlenecks and data quality issues, ultimately hindering one's ability to extract value from data.

Key functional requirements for data engineering tools

Selecting the right tools for data engineering requires careful consideration of your specific needs and data landscape. Here are some key functionalities to prioritize:

- Data processing efficiency and speed: Your chosen tools should be adept at handling large data volumes swiftly. Look for solutions that offer parallel processing capabilities and optimize data pipelines for efficiency.

- Support for various data sources and formats: Modern businesses generate data from diverse sources. Your tools should seamlessly integrate with multiple databases, cloud storage solutions, and APIs to ingest data in various formats, including structured, semi-structured, and unstructured.

- Ensuring data security: Data security is paramount. Your data engineering tools should incorporate robust security features like access control, encryption, and data masking to protect sensitive information.

- Scalability and flexibility: Data volumes are constantly growing. Your chosen tools should scale effortlessly to accommodate increasing data demands while offering the flexibility to adapt to evolving business needs and integrate with new technologies.

- Data lineage and auditability: Maintain a clear understanding of how your data is transformed throughout the pipeline. Look for tools that provide comprehensive data lineage tracking and auditability features, allowing you to trace data origin and transformations and ensure data quality.

- Collaboration and ease of use: Data engineering often involves teamwork. Consider tools that offer user-friendly interfaces, promote cooperation between data engineers, analysts, and other stakeholders, and streamline communication throughout the data pipeline.


Top 10 data engineering tools to watch out for in 2026

The data engineering landscape is teeming with innovative tools, each offering unique functionalities to tackle various aspects of the data pipeline. Here's a curated list of the ten best data engineering tools to watch in 2026 and a deeper dive into their features, advantages, and potential drawbacks.

1. Apache Spark

- Features: An open-source, unified analytics engine for large-scale data processing. Spark handles both batch processing of large datasets and near-real-time stream processing. It also ships with a built-in machine learning library (MLlib), making it a versatile platform for various data engineering tasks.

- Advantages: Highly scalable due to its distributed processing architecture, allowing it to handle massive datasets efficiently. Cost-effective due to its open-source nature. Vibrant developer community providing extensive support and a rich ecosystem of libraries and tools for specific data processing needs.

- Disadvantages: Setting up and managing Spark clusters can be complex, requiring some expertise in distributed systems administration. Utilizing Spark's full potential often necessitates significant programming knowledge in Scala, Java, or Python.
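
The distributed processing idea behind Spark can be illustrated with a minimal sketch in plain Python. This is not Spark's API: the function names and the sample events are hypothetical, and the partitions here are processed by threads in a single process, whereas Spark distributes partitions across executors in a cluster. The pattern is the same, though: split the data, compute partial results per partition, then merge them.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor


def split_into_partitions(records, num_partitions):
    """Distribute records round-robin across a fixed number of partitions."""
    return [records[i::num_partitions] for i in range(num_partitions)]


def count_partition(partition):
    """'Map' step: count event types within a single partition."""
    return Counter(event_type for event_type, _ in partition)


def parallel_count(records, num_partitions=4):
    """Count event types by merging per-partition partial results."""
    partitions = split_into_partitions(records, num_partitions)
    with ThreadPoolExecutor(max_workers=num_partitions) as pool:
        partial_counts = list(pool.map(count_partition, partitions))
    # 'Reduce' step: merge the partial counters into one result.
    total = Counter()
    for partial in partial_counts:
        total.update(partial)
    return dict(total)


# Hypothetical sample data: (event type, event id) pairs.
events = [
    ("click", 1), ("view", 2), ("click", 3),
    ("purchase", 4), ("view", 5), ("click", 6),
]
result = parallel_count(events, num_partitions=3)
```

Because each partition is counted independently, the same logic can in principle be spread over many machines, which is what makes this style of processing scale.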

2. Apache Airflow

- Features: An open-source workflow management platform specifically designed to orchestrate data pipelines. Airflow excels at scheduling, automating, and monitoring complex data workflows, ensuring tasks run in the correct order and dependencies are met. Workflows are defined as Python code, and the web interface lets you visualize, trigger, and monitor them.

- Advantages: A web interface that displays each workflow as a graph of tasks simplifies monitoring and troubleshooting. It facilitates scheduling and monitoring of data pipelines, ensuring smooth operation and timely data delivery. Also, it promotes code reusability through modular components, improving development efficiency.

- Disadvantages: Integrations with some data sources require installing and configuring additional provider packages. Because workflows are defined in code, building and customizing pipelines requires Python knowledge.
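
To make "tasks run in the correct order and dependencies are met" concrete, here is a minimal sketch of dependency-ordered execution using Python's standard `graphlib` module. It is not Airflow code: the task names and the `run_pipeline` helper are hypothetical, and a real orchestrator adds scheduling, retries, and parallel execution on top of this idea.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each task maps to the set of tasks it depends on.
pipeline = {
    "extract_orders": set(),
    "extract_customers": set(),
    "join_datasets": {"extract_orders", "extract_customers"},
    "load_warehouse": {"join_datasets"},
    "refresh_dashboard": {"load_warehouse"},
}


def run_pipeline(dependencies, run_task):
    """Run every task after all of its dependencies; return the order used.

    TopologicalSorter raises graphlib.CycleError if the graph has a cycle,
    which is why such workflows must be directed acyclic graphs (DAGs).
    """
    executed = []
    for task in TopologicalSorter(dependencies).static_order():
        run_task(task)
        executed.append(task)
    return executed


log = []
order = run_pipeline(pipeline, log.append)
```

The two extract tasks have no dependencies, so they may run in either order, but `join_datasets` always runs after both of them.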

3. Snowflake

- Features: Cloud-based data warehouse solution offering high performance and scalability for data storage and querying. Snowflake's unique architecture separates compute and storage resources, allowing for independent scaling of each based on your needs. This translates to fast query performance even on massive datasets.

- Advantages: It is easy to set up and use due to its cloud-based nature, eliminating the need for infrastructure management and ongoing maintenance. As a fully managed service, it removes the burden of server provisioning, and compute can be resized or suspended on demand. Secure data sharing capabilities enable collaboration within and beyond your organization.

- Disadvantages: Due to its pay-as-you-go pricing model, it can be expensive for very large datasets, especially with frequent queries. Limited customization options compared to on-premise data warehouse solutions, as you are reliant on Snowflake's pre-built features and configurations.

4. Apache Kafka

- Features: Kafka is an open-source distributed event streaming platform designed for handling high-velocity, real-time data feeds. It acts as a central hub for ingesting and distributing real-time data streams from various sources, such as applications, sensors, and social media feeds.

- Advantages: Its highly scalable architecture allows it to efficiently handle massive volumes of real-time data. A fault-tolerant design ensures data delivery even in case of server failures. It enables real-time data processing and analytics, providing valuable insights as data is generated.

- Disadvantages: Requires expertise in distributed systems management due to its complex architecture. Setting up and maintaining a large-scale Kafka deployment can be challenging, especially for organizations without prior experience with distributed systems.
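
The "central hub" role can be sketched as an append-only log that several consumers read at their own pace. The class below is a hypothetical in-memory stand-in, not Kafka's client API: real Kafka persists the log to disk, splits topics into partitions, and replicates them across brokers, none of which is modeled here.

```python
class TopicLog:
    """In-memory stand-in for one topic partition: an append-only log."""

    def __init__(self):
        self._records = []
        self._offsets = {}  # consumer group name -> next offset to read

    def append(self, record):
        """Add a record to the end of the log and return its offset."""
        self._records.append(record)
        return len(self._records) - 1

    def poll(self, group, max_records=10):
        """Return the next unread records for a group and advance its offset."""
        start = self._offsets.get(group, 0)
        batch = self._records[start:start + max_records]
        self._offsets[group] = start + len(batch)
        return batch


# Hypothetical sensor readings published to the log.
log = TopicLog()
readings = [
    {"sensor": "a", "temp": 21.5},
    {"sensor": "b", "temp": 19.0},
    {"sensor": "a", "temp": 22.1},
]
for reading in readings:
    log.append(reading)

analytics_batch = log.poll("analytics", max_records=2)  # first two records
alerting_batch = log.poll("alerting")                   # all three records
analytics_rest = log.poll("analytics")                  # the remaining record
```

Each consumer group keeps its own offset, so a slow analytics job and a fast alerting job can read the same stream independently without interfering with each other.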

5. Apache Hadoop

- Features: An open-source framework for distributed storage and processing of large datasets across clusters of computers. Hadoop's core components include HDFS (Hadoop Distributed File System) for storing data across nodes in a cluster, YARN (Yet Another Resource Negotiator) for managing resources, and MapReduce for batch processing.

- Advantages: It is a cost-effective solution for storing and processing massive datasets due to its open-source nature. Offers a mature ecosystem of tools and libraries for various data processing tasks, providing flexibility and customization options.

- Disadvantages: Complex to set up and manage, requiring significant expertise in distributed systems administration. On-premise deployments necessitate substantial hardware investment and ongoing maintenance. Scaling can become cumbersome as data volumes grow, because adding capacity means provisioning and maintaining more nodes.

6. dbt Cloud

- Features: A cloud-based platform designed for streamlining data transformation workflows. dbt Cloud uses SQL to define data transformations that run inside your data warehouse, helping ensure data quality and consistency throughout the data pipeline. It also integrates with version control for transformation code, enabling collaboration and change tracking.

- Advantages: Version control ensures traceability of changes made to data transformations, minimizing errors and promoting collaboration among data teams. Transformations are written in familiar SQL, lowering the barrier to entry for data analysts and engineers already comfortable with it. Built-in testing helps enforce data quality and consistency.

- Disadvantages: Limited to data transformation tasks within the data warehouse, not suitable for broader data engineering needs like data ingestion or real-time processing. Requires familiarity with SQL coding for building data transformation pipelines.

7. Presto (or Apache Hive)

- Features: An open-source SQL query engine specifically designed to query data stored in distributed file systems like HDFS. Presto allows you to run ad-hoc SQL queries against massive datasets stored in data lakes or Hadoop clusters, enabling interactive data exploration and analysis.

- Advantages: Delivers fast query performance on large datasets stored in distributed file systems, facilitating interactive data exploration. Supports ad-hoc analysis, allowing data analysts to ask unscripted questions of the data. Integrates with various data warehouses and data lakes, providing flexibility in data source access.

- Disadvantages: It has limited functionality compared to full-fledged data warehouses, lacking features like data lineage tracking and advanced security controls. It also requires additional configuration and tuning to perform well on large datasets.

8. Kubernetes

- Features: Kubernetes is an open-source container orchestration platform designed to automate deployment, scaling, and management of containerized applications. It excels at managing containerized data engineering workflows, ensuring efficient resource allocation and scalability.

- Advantages: It enables containerization of data engineering workflows, promoting portability across cloud environments and on-premise deployments. Also, Kubernetes simplifies scaling and resource management for data engineering workloads. It optimizes resource usage and streamlines deployment and management of complex data pipelines using containerized components.

- Disadvantages: Setting up and managing Kubernetes clusters can be complex, requiring expertise in containerization technologies and distributed systems administration. The learning curve for Kubernetes can be steep, especially for organizations unfamiliar with containerization concepts.

9. Prometheus

- Features: Prometheus is an open-source monitoring system designed for collecting, storing, and querying time-series metrics from various sources. It is well suited to monitoring the health and performance of data pipelines, ensuring smooth operation and timely identification of potential issues.

- Advantages: Offers a flexible, label-based data model for collecting metrics from diverse sources, including applications, infrastructure, and data pipelines. Its query language (PromQL), typically paired with a dashboarding tool such as Grafana, provides real-time insights into data pipeline performance. Integrates with alerting systems for proactive notification of potential problems.

- Disadvantages: Setting up and maintaining a comprehensive monitoring system with Prometheus can be resource-intensive, requiring dedicated effort. Configuring dashboards and alert rules for effective monitoring also involves a learning curve.
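
Prometheus collects metrics by scraping a plain-text page that each monitored service exposes. The sketch below renders a few pipeline metrics in that style of text format. The `render_metrics` helper and the metric names are hypothetical, and the sketch skips details such as label-value escaping; in practice an official client library would typically handle this.

```python
def render_metrics(metrics):
    """Render metrics in a Prometheus-style text exposition format.

    `metrics` maps a metric name to (type, help text, samples), where
    samples is a list of (labels dict, numeric value) pairs.
    Label values are not escaped in this sketch.
    """
    lines = []
    for name, (metric_type, help_text, samples) in metrics.items():
        lines.append(f"# HELP {name} {help_text}")
        lines.append(f"# TYPE {name} {metric_type}")
        for labels, value in samples:
            label_str = ",".join(f'{k}="{v}"' for k, v in sorted(labels.items()))
            suffix = f"{{{label_str}}}" if label_str else ""
            lines.append(f"{name}{suffix} {value}")
    return "\n".join(lines) + "\n"


# Hypothetical metrics for two data pipelines.
metrics = {
    "pipeline_rows_processed_total": (
        "counter",
        "Rows processed by each pipeline.",
        [({"pipeline": "orders"}, 1200), ({"pipeline": "customers"}, 340)],
    ),
    "pipeline_last_run_duration_seconds": (
        "gauge",
        "Duration of the most recent run.",
        [({"pipeline": "orders"}, 42.5)],
    ),
}
exposition = render_metrics(metrics)
```

Labels such as `pipeline="orders"` are what make the data model flexible: the same metric name can be sliced per pipeline, environment, or team at query time.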

10. Airbyte

- Features: Airbyte is an open-source, cloud-native data integration platform designed for building and managing data pipelines. It offers a low-code/no-code approach to data integration, simplifying the process of connecting to various data sources and destinations.

- Advantages: Its user-friendly interface with pre-built connectors streamlines data integration from various sources. The low-code/no-code approach makes it accessible to data engineers of all skill levels. The open-source nature fosters a vibrant developer community and promotes customization options.

- Disadvantages: Although pre-built connectors are available, custom coding may still be required for complex data transformations. Additionally, its community and ecosystem are smaller than those of some long-established data integration tools. As a relatively new player, its long-term stability and roadmap might be less established than those of more mature solutions.

This overview of data engineering tools for 2026 should give you a clearer picture of what is available. Remember, the ideal choice depends on your specific needs, budget, and technical expertise. Evaluate each tool's functionalities, advantages, and potential drawbacks to select the ones that best empower your team to transform raw data into the strategic asset that fuels your business success.

Favorite data engineering tools at Binariks

While the previous section explored some of the most popular data engineering tools, the list does not end there. Here we introduce the Binariks team's favorites. Snowflake and dbt were already covered above, but our experts describe them in more detail here.

Snowflake

Snowflake is an OLAP-oriented database with several useful features. Examples include elastic scaling of compute and dynamic data masking policies, which address the common problem of hiding PII/PHI data from people who shouldn't see it.

In terms of architecture, compute is separated from storage, which is excellent for scaling. It is cloud-agnostic: if we encounter problems with one cloud, we can migrate to another and keep using the same Snowflake features, such as Snowpipe and external tables. It has many use cases, but it is typically deployed as an analytics data warehouse.
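
The idea behind dynamic masking can be sketched in a few lines of Python. In Snowflake itself, masking policies are defined in SQL and attached to columns; the function, role names, and sample rows below are hypothetical and only illustrate the principle that the same query returns different values depending on who runs it.

```python
# Hypothetical roles that are allowed to see unmasked PII.
UNMASKED_ROLES = frozenset({"PII_ADMIN"})


def mask_email(value, current_role):
    """Return the real email for privileged roles, a masked one otherwise."""
    if current_role in UNMASKED_ROLES:
        return value
    local, separator, domain = value.partition("@")
    if not separator:
        # Not a well-formed address: mask the whole value.
        return "*" * len(value)
    return "*" * len(local) + "@" + domain


def select_customers(rows, current_role):
    """Simulate a query whose email column is masked at read time."""
    return [{**row, "email": mask_email(row["email"], current_role)} for row in rows]


customers = [
    {"customer_id": 1, "email": "ann@example.com"},
    {"customer_id": 2, "email": "bob@example.com"},
]
analyst_view = select_customers(customers, "ANALYST")
admin_view = select_customers(customers, "PII_ADMIN")
```

The stored data is never changed. Masking is applied when the data is read, so access rules can be updated without rewriting tables.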

dbt

dbt is a tool that, in classical terms such as ETL/ELT, corresponds to the "T" (transform). It's a robust framework for writing SQL combined with Jinja templating, which yields a kind of dynamic SQL. This tool emerged around 2017-2018 as a Python library. Currently, there are two main versions: dbt Core and dbt Cloud. The latter is a service that helps manage dbt. dbt Cloud includes a scheduler, hosted dbt documentation, and the ability to develop directly in the browser (writing SQL or macros).

Not all features are available in dbt Core, but it's free and community-driven. Among the exciting features in dbt are built-in data tests (unique, not_null, accepted_values, and relationships for referential integrity), which are declared in YAML files, and the ability to write your own tests. It's also worth noting the documentation and data lineage, the active community (including Slack), and the many dbt packages. When a business user needs to find out where a particular column came from and what it means, they can go to the generated documentation site, where everything is conveniently organized and searchable.

There are many adapters, i.e., connections to data platforms (Snowflake, Redshift, Synapse, BigQuery, PostgreSQL, Databricks, Teradata, etc.). Thus, in theory, you can migrate to another platform, but you need to rewrite the SQL logic a bit due to dialect differences.
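
A dbt data test is essentially a query that selects the rows violating a rule, and the test passes when that query returns no rows. The sketch below mimics the `unique` and `not_null` tests with Python's built-in `sqlite3` module. It is not dbt code: the helper functions and the `customers` table are hypothetical, and the identifiers are interpolated into SQL only for brevity, so this is suitable for trusted input only.

```python
import sqlite3


def not_null_failures(conn, table, column):
    """Return rows where the column is NULL (an empty list means 'pass')."""
    query = f"SELECT * FROM {table} WHERE {column} IS NULL"
    return conn.execute(query).fetchall()


def unique_failures(conn, table, column):
    """Return (value, count) pairs for values that occur more than once."""
    query = (
        f"SELECT {column}, COUNT(*) FROM {table} "
        f"WHERE {column} IS NOT NULL "
        f"GROUP BY {column} HAVING COUNT(*) > 1"
    )
    return conn.execute(query).fetchall()


# Hypothetical table with one missing email and one duplicated id.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (customer_id INTEGER, email TEXT)")
conn.executemany(
    "INSERT INTO customers VALUES (?, ?)",
    [(1, "ann@example.com"), (2, None), (2, "bob@example.com")],
)

null_email_rows = not_null_failures(conn, "customers", "email")
duplicate_ids = unique_failures(conn, "customers", "customer_id")
```

Here both checks would fail, because each query returns at least one offending row. Returning the failing rows rather than a simple true/false also makes the problem easy to investigate.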

Great Expectations

Great Expectations is a tool for writing advanced tests. There are two products: GE Open Source and GE Cloud. The principle is also very simple: you run a Jupyter Notebook and specify tests (expectations) that you want to see on a particular table.

The tool is not very popular because there is a dbt-expectations package that covers roughly 80% of the functionality. However, it can be useful when you have data sources that are not managed in dbt.

Flyway

Flyway is a tool for managing database schema changes through migrations that are kept in a version control system (VCS). You establish a JDBC connection to your database, create migrations (SQL files containing statements such as ALTER TABLE), and run them. During the first run, Flyway creates a schema history table to track which migrations have been applied (e.g., V1, V2). Then your statements are executed. There is also the option to write repeatable migrations, which are re-applied whenever their content changes rather than only once.

It is a handy tool; at Binariks, we use it on OLTP databases to track the state of the database. Flyway has many counterparts, such as Schemachange, Liquibase, and others, but they all serve the same purpose of database change management.
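
The bookkeeping behind this kind of tool can be sketched with Python's built-in `sqlite3` module. This is not Flyway's implementation: the `migrate` function, the `schema_history` table name, and the sample migrations are hypothetical. The sketch assumes that versioned migrations run exactly once and that repeatable migrations are re-applied only when their content, tracked here by a checksum, has changed.

```python
import hashlib
import sqlite3

HISTORY_TABLE = "schema_history"  # hypothetical name used by this sketch


def checksum(sql):
    """Fingerprint a migration so that content changes can be detected."""
    return hashlib.sha256(sql.encode("utf-8")).hexdigest()


def migrate(conn, versioned, repeatable):
    """Apply pending versioned migrations, then changed repeatable ones.

    versioned: list of (version number, SQL script) pairs.
    repeatable: dict mapping a migration name to its SQL script.
    Returns the names of the migrations applied during this run.
    """
    conn.execute(
        f"CREATE TABLE IF NOT EXISTS {HISTORY_TABLE} "
        "(name TEXT PRIMARY KEY, checksum TEXT NOT NULL)"
    )
    applied = dict(conn.execute(f"SELECT name, checksum FROM {HISTORY_TABLE}"))
    ran = []
    for version, sql in sorted(versioned):
        name = f"V{version}"
        if name in applied:
            continue  # versioned migrations run only once
        conn.executescript(sql)
        conn.execute(
            f"INSERT INTO {HISTORY_TABLE} VALUES (?, ?)", (name, checksum(sql))
        )
        ran.append(name)
    for name, sql in sorted(repeatable.items()):
        if applied.get(name) == checksum(sql):
            continue  # unchanged since the last run
        conn.executescript(sql)
        conn.execute(
            f"INSERT OR REPLACE INTO {HISTORY_TABLE} VALUES (?, ?)",
            (name, checksum(sql)),
        )
        ran.append(name)
    conn.commit()
    return ran


conn = sqlite3.connect(":memory:")
versioned = [
    (1, "CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT);"),
    (2, "ALTER TABLE users ADD COLUMN email TEXT;"),
]
repeatable = {
    "R__user_names_view": (
        "DROP VIEW IF EXISTS user_names; "
        "CREATE VIEW user_names AS SELECT id, name FROM users;"
    )
}

first_run = migrate(conn, versioned, repeatable)
second_run = migrate(conn, versioned, repeatable)  # nothing is pending

# Changing the view definition makes the repeatable migration run again.
repeatable["R__user_names_view"] = (
    "DROP VIEW IF EXISTS user_names; "
    "CREATE VIEW user_names AS SELECT id, name, email FROM users;"
)
third_run = migrate(conn, versioned, repeatable)
```

Because the history table lives in the database itself, any environment can be brought to the same state by running the same set of migration files against it.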

Terraform

Terraform is more of a DevOps thing. In a nutshell, it's a tool that helps manage infrastructure with code. Instead of clicking through a UI, DevOps engineers write code to deploy resources, for example, in the cloud. There are many alternatives, but Terraform is very popular.

Airflow/Astronomer Airflow

Airflow is the most popular orchestration tool for ETL/ELT pipelines. Although it's often criticized for being difficult to set up and for making custom workflows challenging to write, it remains the leader in its field. It is an open-source product that helps orchestrate many systems using concepts such as DAG, Task, Operator, Sensor, Hook, etc.

Different engineers prefer to use Airflow differently. Some use it purely as an orchestration tool, meaning they trigger tasks in other systems using the compute of those systems. Others utilize both Airflow's orchestration and compute capabilities.

Because the open-source project has to be hosted and operated by your own team, deploying and maintaining it in the cloud requires a lot of resources. The specialists who contributed the most to the open-source repository decided to create their own managed Airflow solution, which is how Astronomer Airflow came about.

Additionally, some cloud providers offer their own managed Airflow services, such as Cloud Composer on GCP and Amazon MWAA on AWS.

Docker

Docker is a tool for containerization. It encompasses a wide array of concepts, but we primarily use it for development and CI/CD. It's common for different people on a project to use different operating systems: Mac, Linux, or Windows. With Docker, you can spin up a small, isolated "computer" (a container) with all the dependencies you need, ensuring consistent behavior across different systems.

Empowering your business with the right data engineering tools is just the first step. Binariks offers more than just a toolbox; we provide a partnership built on expertise and a commitment to your success. We work collaboratively with you to understand your unique challenges and goals and design and implement data engineering solutions tailored to your specific needs.


Final thoughts

This guide has outlined the role of data engineering and explored a range of powerful tools to consider for your data pipelines. Remember, there is no single best data engineering tool. Carefully evaluate your specific needs, budget, and technical expertise when selecting the tools that best suit your data landscape.

And, of course, partnering with Binariks' experienced data engineering team can empower you to navigate the complexities of data engineering and unlock the true potential of your data. Our specialists leverage robust data engineering technologies and combine them with knowledge to craft customized solutions that propel your business forward.

Choose the right tools and partners to work effectively with your data. Contact Binariks today and embark on the path to data-driven success.
