LLMs are reshaping industries like insurance and healthcare with faster research and the ability to automate basic tasks.

Still, there comes a time when out-of-the-box models trained on general internet data are no longer sufficient, because general models do not have access to information specific to the company. For example, insurers need more accurate ways to process complex documents according to the internal logic of the organization.

The next step in the company's AI journey is looking into RAG and fine-tuning, the two methods of tailoring AI to the company's specific data and needs.

In this article, we compare and contrast these methods and demonstrate how they work in insurance.

What is RAG (Retrieval-Augmented Generation)?

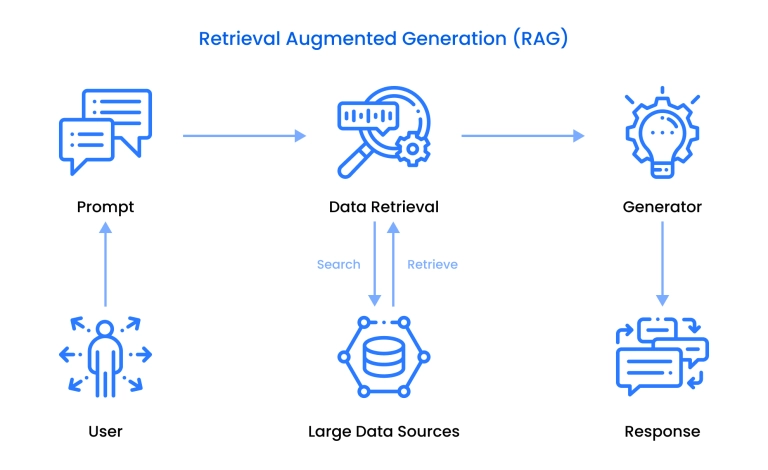

RAG (Retrieval-Augmented Generation) is a method that connects AI models to an external database so that they can use sources beyond the point of their training to give accurate, updated information.

RAG consists of two main components:

- Retrieval – the system looks up relevant information from external sources (e.g., databases or knowledge bases).

- Generation – the AI then uses that retrieved information to create a response.

RAG changes how AI works by letting models fetch information at the moment of a query. Instead of relying only on past training, the system first searches connected databases for relevant data and then passes that data to the LLM, which uses it to ground its answer in accurate, up-to-date information.
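The retrieve-then-generate flow described above can be sketched in a few lines. This is a minimal illustration, not a production design: the `DOCUMENTS` store, the clause IDs, and the word-overlap scoring are all assumptions made for the example (real systems typically use embeddings and a vector database), and the final call to an LLM is left out.

```python
import re
from collections import Counter

# Hypothetical knowledge base; in practice this would be a database
# or vector store holding the insurer's documents.
DOCUMENTS = {
    "travel-policy-4.2": "Lost luggage is covered up to 1500 USD per trip.",
    "travel-policy-5.1": "Emergency medical treatment abroad is covered for 30 days.",
    "home-policy-2.3": "Storm damage to the roof is covered after the deductible.",
}

def tokenize(text: str) -> Counter:
    """Lowercase the text and count its words."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(query: str, top_k: int = 1) -> list[tuple[str, str]]:
    """Retrieval step: rank documents by word overlap with the query."""
    query_words = tokenize(query)
    scored = []
    for doc_id, text in DOCUMENTS.items():
        overlap = sum((query_words & tokenize(text)).values())
        scored.append((overlap, doc_id, text))
    scored.sort(key=lambda item: item[0], reverse=True)
    return [(doc_id, text) for _, doc_id, text in scored[:top_k]]

def build_prompt(query: str) -> str:
    """Generation step input: retrieved text is placed in the LLM prompt."""
    context = "\n".join(f"[{doc_id}] {text}" for doc_id, text in retrieve(query))
    return f"Answer using only these sources:\n{context}\n\nQuestion: {query}"

prompt = build_prompt("Is lost luggage covered?")
```

The prompt carries the source ID alongside the text, which is what later allows an answer to cite the clause it relied on.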

Here are some simple examples of how RAG is used across industries:

- Healthcare: A doctor asks about dosage adjustments; RAG pulls the latest guidelines and patient data.

- Legal: RAG finds liability clauses in a 200-page contract and links them to case law.

- Customer support: A chatbot retrieves the exact policy clause on lost luggage for a traveler.

RAG for insurance

RAG is great for insurance when decisions depend on retrieving the exact information, such as a customer record or an up-to-date clause. Here are some common examples of how RAG for insurance is used:

Claims adjustment

A customer submits a claim for storm damage. Instead of manually searching the policy, RAG retrieves the exact coverage clause, compares it to the claim details, and flags whether the damage is covered, providing a citation to the policy text.

Customer service

A policyholder asks in chat: "Does my plan cover emergency medical treatment abroad?" RAG searches their specific policy document and responds with the exact section.

Contract validation

When reviewing a new reinsurance treaty, RAG scans the document, cross-checks each clause against regulatory requirements, and generates a report highlighting discrepancies.

Fraud investigation

An insurer receives multiple suspicious injury claims. RAG pulls data from internal claims history and external fraud databases, surfacing patterns like repeated claims from the same address or overlapping accident dates. The same approach is used for risk assessment. RAG is capable of analyzing the factors that underwriters tend to miss.
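Once the claim records have been retrieved, the two patterns mentioned above can be surfaced with simple checks. The sketch below assumes hypothetical record fields (`id`, `address`, `start`, `end`) and made-up sample data; a real investigation would use richer signals.

```python
from collections import defaultdict
from datetime import date

# Hypothetical claim records; field names and values are illustrative.
claims = [
    {"id": "C1", "address": "12 Oak St", "start": date(2024, 3, 1), "end": date(2024, 3, 5)},
    {"id": "C2", "address": "12 Oak St", "start": date(2024, 3, 4), "end": date(2024, 3, 9)},
    {"id": "C3", "address": "7 Elm Rd", "start": date(2024, 5, 2), "end": date(2024, 5, 3)},
]

def repeated_addresses(records):
    """Group claim IDs by address and keep addresses with more than one claim."""
    by_address = defaultdict(list)
    for record in records:
        by_address[record["address"]].append(record["id"])
    return {addr: ids for addr, ids in by_address.items() if len(ids) > 1}

def overlapping_pairs(records):
    """Return pairs of claims whose date ranges overlap."""
    pairs = []
    for i, a in enumerate(records):
        for b in records[i + 1:]:
            if a["start"] <= b["end"] and b["start"] <= a["end"]:
                pairs.append((a["id"], b["id"]))
    return pairs

same_address = repeated_addresses(claims)
overlaps = overlapping_pairs(claims)
```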

Underwriting

An underwriter is assessing a new commercial property. RAG retrieves relevant building codes and other factors, presenting them alongside the application. With routine lookups handled by RAG, underwriters can focus on high-risk cases. Presenting the same sourced evidence for every application can also help reduce bias.

Examples of RAG for insurance by domain:

- In property insurance, RAG is excellent at analyzing risks associated with individuals. It pulls not only generic risk factors, but also things like real-time property data and historical claims.

- In health insurance, databases used in RAG provide access to factors like full health records and mortality data.

What is fine-tuning?

Fine-tuning is a method of improving an AI model by training it further on a specific set of data so that it performs better on a particular task or domain.

It is used when a base model already knows general language patterns. Fine-tuning adapts it to a narrower domain (e.g., finance, healthcare, law) by showing it many examples from that field. It helps the model apply the exact rules it needs and follow the required style. What the model learns from the additional dataset is encoded in its weights.
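To make "showing many examples" concrete, here is a sketch of how training examples might be prepared. It assumes a JSON Lines file with `prompt` and `completion` fields, which is a common convention but not a universal one; the exact format depends on the training framework, and the sample texts are invented.

```python
import json

# Hypothetical examples written in the insurer's house style.
examples = [
    {
        "prompt": "Write a claim approval notice for storm damage.",
        "completion": "Dear Policyholder, we are pleased to confirm that your claim has been approved.",
    },
    {
        "prompt": "Write a claim denial notice for an expired policy.",
        "completion": "Dear Policyholder, we regret to inform you that your claim cannot be approved.",
    },
]

def to_jsonl(records):
    """Serialize records as JSON Lines: one JSON object per line."""
    return "\n".join(json.dumps(record, ensure_ascii=False) for record in records)

jsonl = to_jsonl(examples)
```

A real dataset would contain far more examples than this, all reviewed for the wording the model is expected to reproduce.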

Here are some examples:

- Banking: Fine-tuning the model with loan approval rules so it follows strict credit evaluation workflows.

- Healthcare: Adapting a model with hospital discharge summaries so it uses correct medical terminology and structure. Fine-tuning is also an excellent approach for tasks related to imaging, such as magnetic resonance imaging (MRI).

- Customer support: Teaching the model to always respond in a brand's voice.

Fine-tuning in insurance

Fine-tuning for insurance works best when the company needs the AI to always respond consistently with the same terminology and style, and follow consistent rules. Here are typical examples:

Claims letters

Fine-tuned models can generate denial or approval letters in the insurer's exact tone and format.

Underwriting rules

A model fine-tuned on an insurer's internal risk assessment criteria can consistently apply those rules when evaluating applications without deviations.

Policy summaries

Fine-tuned AI can create simplified summaries of policies in the company's preferred style.

Customer service scripts

Models fine-tuned on call center transcripts can provide agents with suggested replies that match the insurer's approved communication style.

Regulatory disclosures

Fine-tuned AI can automatically include the proper disclaimers and mandatory wording regulators require.

Examples of fine-tuning for insurance by domain:

- In health insurance, fine-tuning is used for claims approvals/denials, especially when regulatory disclosures (HIPAA, GDPR, etc.) are a significant factor in crafting the message.

- In auto insurance, fine-tuning works for accident claim letters, repair authorizations, and policy summaries.

- In life insurance, fine-tuning suits policy documents and disclosures around exclusions (suicide clauses, contestability periods), where the wording cannot vary.

RAG vs fine-tuning – key differences

| Factor | RAG (Retrieval-Augmented Generation) | Fine-Tuning |
| --- | --- | --- |
| Flexibility | Extremely flexible. You can connect it to many data sources (databases, PDFs, knowledge bases, APIs). If the knowledge base changes, the AI instantly adapts. Great for fast-moving industries. | Less flexible. Once fine-tuned, the model "absorbs" the data but can’t easily switch domains. Works best when terminology and tasks don’t change often. |
| Cost | Lower cost because you don’t retrain the model. The expense is mostly in building/maintaining the retrieval system and keeping data sources organized. | Higher cost because fine-tuning requires preparing quality datasets, running training jobs, and repeating the process whenever information changes. |
| Speed of Deployment | Very quick. Once retrieval is set up, the AI can start using new knowledge immediately. Adding a new data source can take hours. | Slower. Training takes time, and validation is required to ensure no errors were introduced. Deployment cycles can stretch into weeks or months. |
| Data Freshness | Strong advantage: RAG always pulls the latest data. For insurance, it can retrieve the newest regulations, market updates, or customer claims on the spot. | Becomes outdated unless retrained. A fine-tuned model trained last year on policy rules won't "know" about new compliance changes until it is retrained. |
| Regulatory Compliance | Easier to trace. Since RAG pulls answers directly from approved sources, it's simpler to log where the information came from. This transparency is crucial in regulated industries like insurance. | Harder to audit. Fine-tuned models generate answers from internal weights, so it's difficult to prove which source informed the output. |
| Domain Accuracy | Very good at blending general reasoning with up-to-date facts. However, it may phrase answers in a generic way unless prompts are carefully designed. | Excellent domain precision. Fine-tuned models "speak the language" of the field, use the right terminology, and follow internal style/tone more consistently. |
| Maintenance | Requires maintaining clean, well-structured data sources. If the retrieval layer is weak, performance drops. But no retraining needed. | Requires periodic retraining when company rules, legal frameworks, or product lines evolve, making it heavier to maintain over time. |
| Performance on Complex Reasoning | Strong when the task needs live access to large and varied knowledge (e.g., explaining how different policy clauses interact with a customer's case). | Strong when reasoning is bounded to a very narrow domain (e.g., underwriting calculations based on fixed rules). |
| Best Use Cases | | |

When to use fine-tuning and RAG

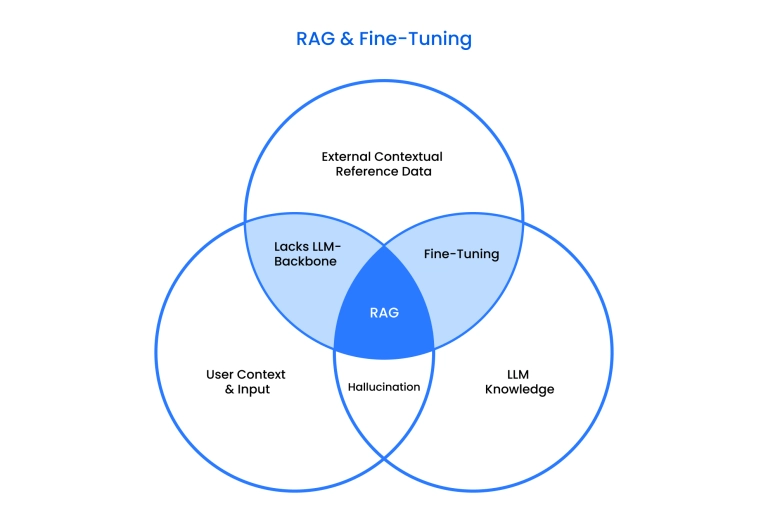

RAG is generally better than fine-tuning when you want to bring company-specific information to the LLM from an external database. Fine-tuning is used when you want that information to be part of the model itself.

The difference between RAG and fine-tuning is that RAG pulls in fresh, external data at query time, while fine-tuning stores fixed knowledge and style directly in the model.

For example, if you are an electronics wholesaler, you will use fine-tuning to make sure that your salespeople always use the same scripts for warranties and handling customers because the value in this case is the consistency of the replies. Fine-tuning is better than RAG in this case.

However, it is best to use RAG when handling current inventory and stock availability because this information is constantly changing, and data should be handled at scale, meaning that it is best to have this data retrieved from an external source.

Here is the general guideline on when to use what:

Use fine-tuning when:

- You need consistent, repeatable outputs, such as generating reports, summaries, or responses in the same format every time. You also need full control over the style and content of these outputs.

- The tasks you work on rely on fixed rules and style guides that do not change often, like legal documents and medical reports. The terminology on these tasks does not change often.

- There is a high volume of tasks, but they are standardized (documents, invoices, etc.).

- The model has to run in restricted environments (offline, mobile, or on-premises) where it can't access external databases, so all knowledge must be embedded. This could happen when access to the cloud is restricted, like in the military or government organizations. It could also occur if regulations are too strict, like when dealing with hospital patient information.

- You have the resources to invest in significant compute upfront. Fine-tuning relies on more infrastructure that you must provision, such as GPUs.

Use RAG when:

- You need answers based on fresh or case-specific data. Your task depends on market updates, recent regulations, etc.

- You need transparency and traceability, like citations to the exact source.

- You do not need complete control over the output that the system produces.

- Your domain has vast or rapidly changing knowledge that can't be realistically baked into a model, like current scientific research, news, etc.

- You want to deploy quickly without large training datasets. RAG can immediately start on tasks, while fine-tuning has to undergo training.

- Your budget is limited, and a future strategic advantage is not enough of a factor for you to invest significant resources upfront. A RAG architecture is simpler to implement and therefore less expensive.

So, RAG or fine-tuning? You don't always have to choose.

Combine fine-tuning and RAG when:

- You need both deep specialization and current information.

The model must follow strict rules and bring in the latest facts. Think of a customer service assistant that follows the company's tone of voice but also has to retrieve customer information. Note that a hybrid approach is the most expensive of the three options but covers the broadest range of the company's needs.

Many industries rely on consistency and the latest information, so careful thinking is involved in deciding what approach to use and when to combine them. Insurance is an industry like that.
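The guidelines above can be condensed into a rough decision helper. This is a simplification for illustration only: the `Requirements` fields and the returned labels are assumptions, and a real decision would also weigh budget, deployment environment, and data volume.

```python
from dataclasses import dataclass

@dataclass
class Requirements:
    needs_fresh_data: bool = False   # market updates, recent regulations
    needs_citations: bool = False    # traceability to the exact source
    needs_fixed_style: bool = False  # same format and tone every time

def choose_approach(req: Requirements) -> str:
    """Map requirements to an approach, following the guidelines above."""
    wants_rag = req.needs_fresh_data or req.needs_citations
    wants_fine_tuning = req.needs_fixed_style
    if wants_rag and wants_fine_tuning:
        return "hybrid"
    if wants_rag:
        return "rag"
    if wants_fine_tuning:
        return "fine-tuning"
    # Assumption: with none of these needs, a general model may be enough.
    return "general-purpose model"
```

For example, `choose_approach(Requirements(needs_fresh_data=True, needs_fixed_style=True))` returns `"hybrid"`, matching the customer service assistant scenario.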

Which approach works best for insurance document processing?

There is a place for both RAG and fine-tuning in insurance, as well as for the hybrid model. The choice depends on the insurer's goals and data landscape.

Here is what the RAG vs. fine-tuning choice looks like specifically in insurance document processing:

- RAG (Retrieval-Augmented Generation) is best for a quick start. Insurers can connect RAG to existing policy databases immediately without a training dataset. RAG provides up-to-date knowledge through the latest regulations and market data. It is ideal for dynamic scenarios like assisting customers with policy-specific inquiries.

- Fine-tuning is best for large insurers with extensive internal archives and well-documented workflows. They also have the resources to set up fine-tuning. It works on standardized outputs and is best when repetition and consistency matter more than freshness, e.g., generating thousands of uniform customer communications monthly.

- A hybrid approach is often the most effective choice. By combining fine-tuning's consistency with RAG's freshness, insurers get a precise and adaptable system. An example is a compliance platform fine-tuned to structure reports in the insurer's format but powered by RAG to check against live policy and legal databases.


Case example – AI for claims processing

Let's look at a real-world example to see how a Generative AI development team applies the RAG vs. fine-tuning choice in practice.

One global insurer partnered with Binariks to build a RAG-powered contract validation platform that helped them transform their slow manual review process into a fast and efficient one.

The client is a global leader in insurance and reinsurance, employing around 5,000 staff worldwide. They are positioned as a market leader in risk management, underwriting, and corporate insurance solutions.

Their issue was the manual validation of contracts between insurers and reinsurers. The teams had to manually interpret complex, unstructured treaty language and match it against compliance frameworks such as Solvency II.

For the client, this resulted in:

- Significant delays in underwriting and contract approval.

- High risk of oversight or regulatory non-compliance.

- Rising operational costs tied to manual legal review.

Binariks proposed a Retrieval-Augmented Generation (RAG) architecture enhanced with advanced prompt engineering and reflection agents. This approach was selected because it adapts quickly to new frameworks and scales to handle 10K contracts per month.

The solution we chose included:

- Contract ingestion & parsing through OCR pipelines that converted scanned treaties into structured, machine-readable text.

- RAG-based validation, with multi-layered RAG that cross-references clauses against business rules and regulations.

- Chain-of-Thought prompting and agentic AI to enhance reasoning.

- An interactive dashboard built in ReactJS with instant clause analysis and risk flagging.

- An enterprise infrastructure with containerized FastAPI services deployed on Microsoft Azure, integrated with Azure Blob Storage, Key Vault, and DevOps pipelines.

- Continuous monitoring with LangSmith.

The improved RAG document processing has been very successful for our client. Underwriters actively use it as a daily tool for contract validation. Here are the client's results in numbers:

- 80% faster processing of contracts (dropped from days to hours).

- 90%+ accuracy (through reducing false positives and manual rework of compliance checks).

- $1.5M annual savings on operational costs, achieved by eliminating repetitive manual reviews and reducing compliance-related risks.

- 100% audit readiness.

- Scaled to 10,000+ contracts monthly, an enterprise-level workload.

- 30% productivity boost for underwriters and legal teams.

This case demonstrates how well a RAG pipeline suits the validation of regulated insurance contracts. Our team went beyond a standard RAG solution, delivering a compliance-grade platform with reasoning agents and scalable architecture.

Is it possible to combine RAG and fine-tuning?

Yes. Many of the most effective enterprise AI systems use a hybrid approach that blends fine-tuning and RAG into one workflow.

The result is AI that is both stable and standardized (thanks to fine-tuning) and dynamic and up to date (thanks to RAG). For insurance, this means letters and reports always follow strict formats, while answers about coverage or regulations reflect the latest information.

In practice, this means building a fine-tuned base model with a RAG layer on top, and an orchestration layer that ties the two together. It requires a team that has both the database expertise needed to build RAG and experience with training pipelines, GPUs, and ML engineering.

The team tailors the model to understand the insurer's unique language and processes. It's then connected to a live data source (a vector database, for example) to retrieve real-time policy, claims, and regulatory information. A coordinating system manages the final output by deciding when to use the custom internal training, when to inject the retrieved facts, and how to blend them into one coherent answer.
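The three parts described above can be sketched as follows. Both `retrieve_facts` and `fine_tuned_model` are stand-in stubs with invented data, assumed here only to show how an orchestration function might combine them; a real system would call a vector database and a deployed model.

```python
from typing import Callable

def retrieve_facts(query: str) -> list[str]:
    """Stand-in for the RAG layer (for example, a vector database lookup)."""
    knowledge = {"deductible": "Policy H-100: the storm deductible is 500 USD."}
    return [fact for key, fact in knowledge.items() if key in query.lower()]

def fine_tuned_model(prompt: str) -> str:
    """Stand-in for a fine-tuned model that always answers in house style."""
    return f"Dear Policyholder,\n{prompt}\nKind regards, Claims Team"

def orchestrate(
    query: str,
    retriever: Callable[[str], list[str]],
    model: Callable[[str], str],
) -> str:
    """Decide whether retrieved facts are available and blend them in."""
    facts = retriever(query)
    if facts:
        prompt = "Based on our records: " + " ".join(facts)
    else:
        prompt = "We could not find a matching record for your question."
    return model(prompt)

answer = orchestrate("What is my deductible?", retrieve_facts, fine_tuned_model)
```

Passing the retriever and model in as arguments keeps the orchestration logic independent of either component, so one can be replaced without touching the other.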

Conclusion

The choice between RAG (freshness, scale, and traceability) and fine-tuning (consistency, style, and fixed rules) must align with your specific business priority.

While RAG provides real-time data access and fine-tuning delivers uniform outputs, the hybrid model is often the most powerful fit for insurance, delivering the strict specialization and up-to-date information the industry demands.

Ready to build a system that meets all your needs for automation in insurance?

Partner with Binariks to define and implement your optimal RAG, Fine-Tuning, or Hybrid AI strategy.
