Building AI-agent systems today often means stitching together fragile APIs, unclear interfaces, and inconsistent context sharing. The Model Context Protocol (MCP) offers a unified standard for connecting models and agents to the tools and data they rely on, removing much of that custom glue. Whether you're developing SaaS solutions, scaling insurance platforms, or building healthcare and fintech apps, MCP could be the missing link your architecture needs.

In this article, we'll break down the foundations of MCP and explore how it transforms fragmented AI integrations into robust, scalable systems that deliver real business value.

What is the Model Context Protocol (MCP)?

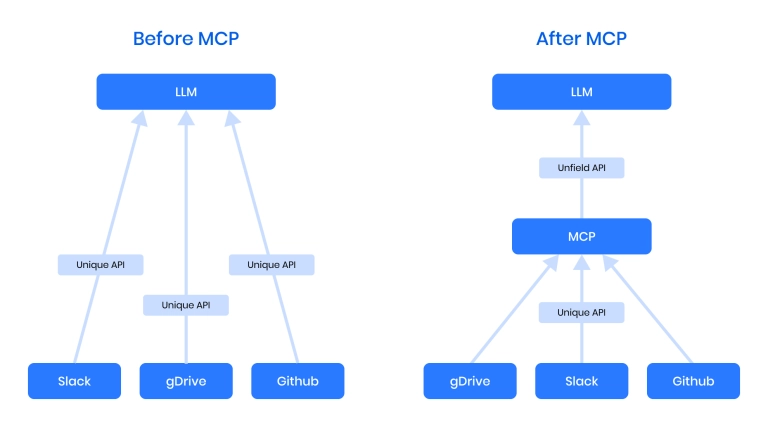

Integrating AI agents into real-world systems has historically been messy and inconsistent. The Model Context Protocol (MCP) provides a standard method for AI models to interact with tools and data in a way that is both secure and scalable, reducing the need for one-off custom integrations. This is why MCP is becoming essential for companies that want to operationalize AI efficiently across industries.

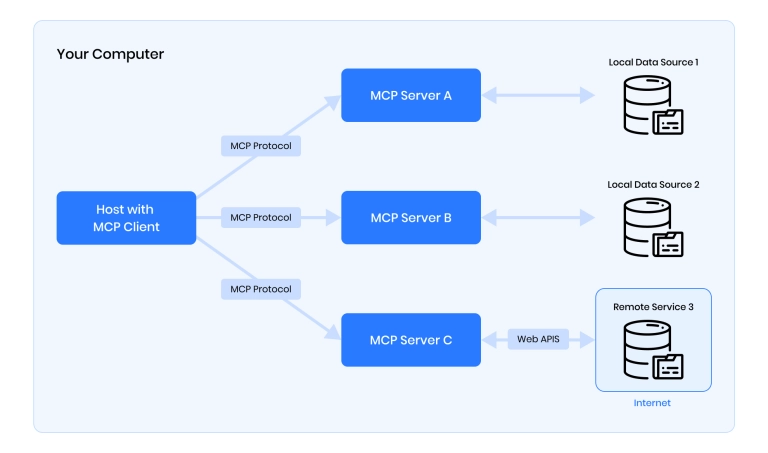

At its core, MCP defines a consistent way for AI models to interact with tools, data, APIs, and external systems. Instead of relying on ad hoc API calls or tool-specific integrations, MCP lets models fetch information, execute actions, and maintain context across multiple interactions in a predictable, structured way.

This structured communication layer turns isolated AI models into environment-aware agents that can respond to changing data and tools, the same principle behind adaptive AI systems that adjust to dynamic environments.
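Under the hood, MCP messages use JSON-RPC 2.0: a client discovers a server's tools with `tools/list` and invokes one with `tools/call`, passing the tool `name` and its `arguments`. The minimal sketch below only builds and parses such messages with Python's standard library. The tool name `lookup_policy`, its arguments, and the hand-written response are hypothetical, and the transport (stdio or HTTP) and the initialization handshake are deliberately left out.

```python
import json
from itertools import count
from typing import Optional

# Monotonic request IDs; JSON-RPC uses them to pair responses with requests.
_ids = count(1)


def make_request(method: str, params: Optional[dict] = None) -> str:
    """Serialize a JSON-RPC 2.0 request like those an MCP client sends."""
    message = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        message["params"] = params
    return json.dumps(message)


def extract_text(response_json: str) -> str:
    """Return the text content of a tools/call result, raising on errors."""
    response = json.loads(response_json)
    if "error" in response:  # protocol-level failure, e.g. unknown method
        raise RuntimeError(response["error"]["message"])
    result = response["result"]
    texts = [item["text"] for item in result.get("content", [])
             if item.get("type") == "text"]
    if result.get("isError"):  # the tool ran but reported a failure
        raise RuntimeError("tool error: " + " ".join(texts))
    return "\n".join(texts)


# Discover tools, then call one (hypothetical tool name and arguments).
list_request = make_request("tools/list")
call_request = make_request(
    "tools/call",
    {"name": "lookup_policy", "arguments": {"policy_id": "P-1001"}},
)

# A hand-written response shaped like an MCP tools/call result.
sample_response = json.dumps({
    "jsonrpc": "2.0",
    "id": 2,
    "result": {
        "content": [{"type": "text", "text": "Policy P-1001 is active"}],
        "isError": False,
    },
})
print(extract_text(sample_response))  # Policy P-1001 is active
```

Keeping tool failures (`isError`) separate from protocol errors (`error`) lets an agent tell "the tool said no" apart from "the request was malformed".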

Why current AI-agent integration is painful

Despite significant progress in AI development, integrating agents into real-world systems is still inefficient and error-prone. Each project often requires building custom connections, fragile APIs, and manual context management.

Without a universal protocol, developers must reinvent solutions for every integration, resulting in bloated prompt engineering, limited interoperability, and scaling challenges that grow with system complexity. This is where MCP offers a much-needed breakthrough by standardizing the interaction layer itself.

To understand why this is such a big deal, consider the current pain points in popular AI ecosystems:

- Unique integrations for every tool: Frameworks like LangChain often require custom wrappers or chains for each external data source or API. This makes systems brittle and hard to maintain, because every new tool adds its own complexity instead of plugging in through a standardized interface such as MCP.

- Heavy reliance on prompt engineering: Projects like AutoGPT show how difficult it is to manage long, complex prompts just to guide agents through multi-step tasks. Without a clear server-client structure, AI agents must "guess" how to interact with tools instead of using structured requests enabled by MCP servers.

- Lack of standardized context sharing: Most AI agents lose valuable information between steps because there's no unified way to maintain state and context across interactions. Developers are forced to manually stitch context together, increasing the risk of errors and inconsistent behavior.

MCP addresses these issues by giving AI models a structured, scalable way to discover, interact with, and reason over external resources, which makes AI systems more reliable and easier to take to production.

How MCP standardizes agent interfaces and context

One of the biggest breakthroughs with MCP is that it formalizes how AI agents communicate with their environment. Instead of hardcoding API calls or stuffing everything into long prompts, MCP defines a clean interface between the model's logic and external systems. This separation makes it dramatically easier to build autonomous agents that interact with tools, databases, APIs, or other services without manual hacks. MCP adoption still has its challenges, but the clarity and structure it provides set a new standard for intelligent, scalable agent design.

By standardizing AI interactions, MCP introduces several key elements that make agent behavior more reliable, interoperable, and easier to maintain. Many of these concepts align with modern AI/ML development practices, where structured, modular systems outperform ad hoc solutions.

Key elements MCP introduces:

- Separation of model logic and environment

MCP draws a hard line between the model's decision-making and the environment it interacts with. This reduces entanglement and allows agents to reason clearly without needing to "guess" how tools work.

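- Context documents (resources)

Instead of relying on loose prompts, MCP servers expose resources: structured context such as files, database records, or API responses, each identified by a URI. Clients can list, read, and (where the server supports it) subscribe to updates on these resources, which keeps the state the model works with consistent across actions.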

- Action schemas

Models don't call APIs blindly. Each tool an MCP server offers comes with a schema that describes the operation, its expected parameters (as JSON Schema), and the kind of result to expect. This greatly improves agent predictability and reduces errors.

- Resource discovery and metadata

MCP-enabled agents can discover a server's tools, resources (such as databases or files), and prompt templates at runtime by querying its metadata, making systems more adaptable and easier to extend.

- Interoperability across systems

MCP isn't tied to one vendor or model. It defines a universal language for models to communicate with any environment, enabling cross-platform and cross-model collaboration without constant rewrites.

By implementing these patterns, companies can create future-proof AI systems that scale more cleanly and operate with far greater reliability.
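These elements correspond to a handful of MCP request methods: `tools/list`, `resources/list`, and `resources/read` for discovery and structured context, and `tools/call` for schema-described actions. The following is a dependency-free sketch of how a server might dispatch them. It is not the official MCP SDK, the `get_claim` tool and `policy://rules` resource are hypothetical, and the argument check only covers required keys and top-level types rather than full JSON Schema validation.

```python
import json

# What clients see via tools/list: a name, a description, and a JSON Schema.
TOOLS = {
    "get_claim": {
        "description": "Return the status of an insurance claim (hypothetical).",
        "inputSchema": {
            "type": "object",
            "properties": {"claim_id": {"type": "string"}},
            "required": ["claim_id"],
        },
    },
}

CLAIMS = {"C-42": "approved"}  # stand-in for a real backend
HANDLERS = {"get_claim": lambda args: CLAIMS.get(args["claim_id"], "not found")}

# Read-only context addressed by URI (hypothetical content).
RESOURCES = {
    "policy://rules": {
        "name": "Underwriting rules",
        "mimeType": "text/plain",
        "text": "Claims above 10,000 need manual review.",
    },
}

# JSON Schema type names mapped to Python types. bool subclasses int, so this
# basic check lets booleans through where integers are expected.
JSON_TYPES = {"string": str, "integer": int, "number": (int, float),
              "boolean": bool, "object": dict, "array": list}


def check_arguments(schema: dict, args: dict) -> None:
    """Basic check: required keys are present and top-level types match."""
    for key in schema.get("required", []):
        if key not in args:
            raise ValueError(f"missing required argument: {key}")
    for key, value in args.items():
        expected = schema.get("properties", {}).get(key, {}).get("type")
        if expected in JSON_TYPES and not isinstance(value, JSON_TYPES[expected]):
            raise ValueError(f"argument {key!r} should be of type {expected}")


def error(request_id, code: int, message: str) -> dict:
    return {"jsonrpc": "2.0", "id": request_id,
            "error": {"code": code, "message": message}}


def handle(request: dict) -> dict:
    """Dispatch one JSON-RPC request to the matching MCP-style method."""
    method, params = request["method"], request.get("params", {})
    try:
        if method == "tools/list":
            result = {"tools": [{"name": n, **spec} for n, spec in TOOLS.items()]}
        elif method == "tools/call":
            name, args = params["name"], params.get("arguments", {})
            if name not in TOOLS:
                raise ValueError(f"unknown tool: {name}")
            check_arguments(TOOLS[name]["inputSchema"], args)
            text = HANDLERS[name](args)
            result = {"content": [{"type": "text", "text": text}], "isError": False}
        elif method == "resources/list":
            result = {"resources": [{"uri": uri, "name": r["name"], "mimeType": r["mimeType"]}
                                    for uri, r in RESOURCES.items()]}
        elif method == "resources/read":
            uri = params["uri"]
            if uri not in RESOURCES:
                raise ValueError(f"unknown resource: {uri}")
            r = RESOURCES[uri]
            result = {"contents": [{"uri": uri, "mimeType": r["mimeType"], "text": r["text"]}]}
        else:
            return error(request["id"], -32601, "Method not found")
    except (KeyError, ValueError) as exc:
        # -32602 is JSON-RPC's "Invalid params"; used here for all bad input.
        return error(request["id"], -32602, str(exc))
    return {"jsonrpc": "2.0", "id": request["id"], "result": result}


print(json.dumps(handle({"jsonrpc": "2.0", "id": 1, "method": "tools/list"}), indent=2))
```

A `tools/call` request naming `get_claim` with `{"claim_id": "C-42"}` returns the text `approved`, while omitting `claim_id` yields an "Invalid params" error before any backend code runs. In a real deployment, the MCP SDKs are meant to take over this plumbing.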

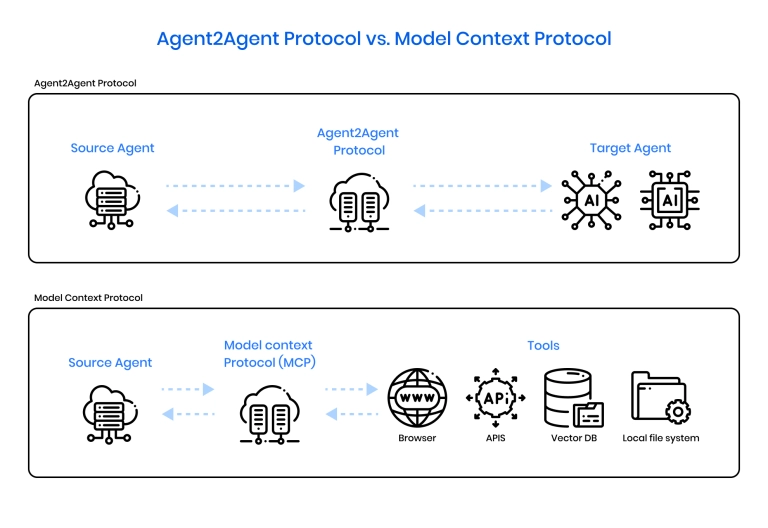

MCP vs A2A: Competing visions for agent interoperability

As AI systems evolve, the industry faces a race between two emerging standards: Google's A2A and Anthropic's MCP. While both aim to make agent-based architectures scalable and efficient, their focus differs greatly.

MCP standardizes how an agent connects to the tools, data, and services it works with inside a system, while A2A standardizes how independent agents communicate with each other across systems. Understanding the nuances between them is critical when designing next-generation AI applications.

Standardized protocols like MCP are crucial for organizing internal agent orchestration, while A2A focuses more on creating a universal vocabulary for independent agents to interact across platforms. As the Google A2A announcement explains, the vision is to let developers "compose different agents into dynamic collaborations" without heavy custom integration work.

Both standards have their place, and in many real-world systems, MCP and A2A can even complement each other. Here are more details.

How MCP works inside complex systems

- Purpose: Standardizes how the agents inside a single system connect to the tools, data, and services they use;

- Analogy: Similar to SaaS models today — users call a unified API while the complex logic happens under the surface;

- Customization: Developers manage internal agent communication manually if needed;

- Use case: Best for complex internal workflows where multiple agents collaborate invisibly behind one exposed endpoint;

- Example: A SaaS app offering a single API but internally coordinating multiple AI modules.

In this model, MCP helps developers manage system complexity by giving every internal agent the same standardized interface to tools and data.
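The single-endpoint pattern described above can be sketched as a facade that runs several internal modules in sequence over a shared context object. This is an illustration under stated assumptions: the module names (`extract`, `classify`, `summarize`) are hypothetical, and they are plain local functions here, whereas in an MCP-based system each would typically sit behind an MCP server and be reached through tool calls.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Context:
    """State shared by the internal modules while handling one request."""
    request: str
    data: dict = field(default_factory=dict)


# Hypothetical internal modules; each reads from and enriches the context.
def extract(ctx: Context) -> None:
    ctx.data["words"] = ctx.request.lower().split()


def classify(ctx: Context) -> None:
    ctx.data["category"] = "claim" if "claim" in ctx.data["words"] else "general"


def summarize(ctx: Context) -> None:
    words = ctx.data["words"]
    ctx.data["summary"] = f"{ctx.data['category']} request, {len(words)} words"


# Internal order of work; callers of public_endpoint never see it.
PIPELINE: List[Callable[[Context], None]] = [extract, classify, summarize]


def public_endpoint(request: str) -> dict:
    """The only interface exposed to users of the SaaS app."""
    ctx = Context(request=request)
    for step in PIPELINE:
        step(ctx)
    return {"summary": ctx.data["summary"]}  # intermediate state stays internal


print(public_endpoint("Please review my claim for water damage"))
# {'summary': 'claim request, 7 words'}
```

Because callers only ever see `public_endpoint`, internal modules can be added, reordered, or swapped without changing the public API.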

How A2A enables granular agent collaboration

- Purpose: Standardizes how individual agents interact across different systems;

- Analogy: Like connecting independent microservices — each agent openly declares what it can accept or output;

- Customization: Minimal — agents share metadata and schemas to enable seamless integration without extra glue code;

- Use case: Best when building flexible, modular AI systems composed of independently developed agents;

- Example: Different agents (search, summarize, recommend) composed dynamically across organizations or projects.

As Google describes it, A2A "enables open agent ecosystems where individual capabilities can be mixed and matched freely."
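To illustrate the A2A idea of agents openly declaring what they accept and produce, the sketch below chains agents by matching each declared output type to the next agent's declared input type. It is a hypothetical simplification: the `AgentCard` fields here are only loosely inspired by the Agent Cards that A2A agents publish and do not follow the A2A schema, the three agents mirror the search, summarize, and recommend example above, and nothing goes over the network.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass(frozen=True)
class AgentCard:
    """Hypothetical, simplified capability declaration for one agent."""
    name: str
    accepts: str   # input type the agent declares it can handle
    produces: str  # output type it declares it returns
    run: Callable[[str], str]


AGENTS = [
    AgentCard("search", "query", "documents", lambda q: f"docs for {q}"),
    AgentCard("summarize", "documents", "summary", lambda d: f"summary of {d}"),
    AgentCard("recommend", "summary", "recommendation", lambda s: f"advice from {s}"),
]


def compose(start: str, goal: str, agents: List[AgentCard]) -> List[AgentCard]:
    """Greedily chain agents whose declared input matches the previous output."""
    chain: List[AgentCard] = []
    current = start
    while current != goal:
        # Skip agents already in the chain so the loop cannot cycle forever.
        nxt = next((a for a in agents if a.accepts == current and a not in chain), None)
        if nxt is None:
            raise LookupError(f"no agent accepts {current!r}")
        chain.append(nxt)
        current = nxt.produces
    return chain


def run_chain(chain: List[AgentCard], payload: str) -> str:
    """Pass each agent's output to the next agent in the chain."""
    for agent in chain:
        payload = agent.run(payload)
    return payload


chain = compose("query", "recommendation", AGENTS)
print([a.name for a in chain])  # ['search', 'summarize', 'recommend']
print(run_chain(chain, "flood insurance"))
```

Because composition depends only on the declared capabilities, a new agent can join a chain without extra glue code, which is the property the A2A bullets above describe.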

Quick comparative table: MCP vs A2A

While standardized protocols like MCP organize internal complexity, A2A unlocks broader collaboration between agents across different environments. Depending on the system scale and design goals, companies may use one or combine both approaches to build resilient, flexible AI architectures.

Real-world benefits for SaaS, insurance, healthcare & fintech

The Model Context Protocol (MCP) isn't just a theoretical improvement—it directly addresses real-world challenges in industries where security, scalability, and complexity are critical. Here's how it creates tangible advantages across key sectors.

SaaS: Simplified scaling and integration

SaaS platforms often need to connect multiple internal modules while exposing a clean external API. MCP allows SaaS providers to orchestrate complex agent interactions behind the scenes, offering users a seamless experience without revealing system complexity. This makes scaling and maintaining multi-agent services far more efficient.

Insurance: Smarter claims and risk processing

Insurance workflows involve high-volume data, document analysis, and decision-making. MCP enables agents to manage context-rich tasks like claims intake, fraud detection, and policy updates more reliably, leading to faster processing times and fewer manual interventions.

Healthcare: Secure, context-aware AI applications

Healthcare demands strict security, auditability, and accuracy. With MCP structuring agent interactions, AI tools can access patient data, assist with diagnosis, and automate administrative tasks while teams keep tight control over which data and actions each agent can reach, which supports compliance and reduces operational risk.

Fintech: Reliable multi-agent coordination

Fintech systems often rely on dynamic workflows across authentication, transaction monitoring, and customer service. MCP allows fintech platforms to build AI agents that securely collaborate, access external services, and adapt to fast-changing regulations with far less reprogramming.

How Binariks helps companies adopt MCP-based agents

Binariks acts as a strategic technology partner for companies looking to integrate LLMs and AI agents into their products using the Model Context Protocol (MCP). We help organizations design scalable system architectures, implement MCP clients and servers with MCP SDKs, create robust AI agents, and build secure, production-ready infrastructures.

Our expertise ensures that your AI agents are modular, interoperable, and MCP-compliant, making them easier to reuse, extend, or integrate across different systems. By partnering with Binariks, companies can fast-track development, minimize integration risks, and accelerate their go-to-market strategies with future-proof AI solutions.


Final thoughts

The Model Context Protocol (MCP) solves real integration problems companies face when building AI-driven systems. It standardizes how agents interact with tools and manage context, helping AI systems scale in industries like SaaS, insurance, healthcare, and fintech.

Binariks supports businesses at every stage of MCP adoption—from system design and SDK implementation to secure infrastructure and modular AI agent development. We help companies move faster, reduce complexity, and deliver production-ready AI solutions.
