Generic large language models (LLMs) – AI systems trained on broad internet datasets – offer wide-ranging capability, but regulated industries require systems that go deeper: models that understand industry-specific terminology, operate within strict compliance boundaries (HIPAA, GDPR, SOX), and behave predictably under regulatory scrutiny.

This is where domain-specific LLMs come in: AI models fine-tuned on curated, expert-validated datasets that reflect the language, logic, and regulatory constraints of industries like healthcare, finance, and insurance. Domain-specific AI systems ground outputs in trusted, auditable sources – electronic medical records (EMRs), policy libraries, regulatory rulebooks – instead of broad internet data, making outputs more accurate and verifiable.

The problem is simple: generic LLMs may sound confident, but they lack controlled knowledge boundaries, auditability, and consistent reasoning across edge cases.

In regulated industries – healthcare, financial services, insurance – these gaps translate into operational risk: incorrect medical suggestions carry liability, misinterpreted policy clauses create legal exposure, fabricated financial logic triggers compliance violations.

This article explains why organizations are moving from general-purpose AI assistants to domain-specialized systems built for accuracy, safety, and governance.

What you'll learn in this guide:

- Why generic LLMs fall short in regulated domains

- What defines domain-specific LLMs and how they differ from general-purpose models

- Key components of the Knowledge Intelligence Layer

- How domain-specific AI supports compliance and governance

- Practical enterprise benefits supported by real examples and data

- A roadmap for building domain-specific AI systems

- Real use cases across healthcare, insurance, and finance

If your goal is to deploy AI that is safe, reliable, and regulation-ready, this guide lays out the path forward.

Why generic LLMs are not enough for regulated industries

Generic models like ChatGPT, Claude, or Gemini are powerful general-purpose AI systems, but they are not designed for environments where every output must be accurate, explainable, auditable, and compliant.

Regulated industries – healthcare, financial services, insurance – rely on precise terminology, strict governance, and domain-specific logic that general-purpose models simply do not internalize. They lack guardrails, cannot guarantee source traceability, and tend to hallucinate (generate plausible but incorrect outputs) when confronted with niche or highly regulated workflows.

This is why enterprises increasingly choose custom small language models and domain-grounded architectures built through specialized AI development rather than relying on broad, unbounded LLMs.

Key reasons why generic LLMs fall short

- No grounding in regulated knowledge

Generic models are trained on public internet data, not on clinical guidelines (UpToDate, NICE, AMA standards), insurance policy structures (ISO forms, state regulations), or financial regulations (Basel III, Dodd-Frank, MiFID II). Without a domain-controlled semantic layer, they cannot reliably distinguish correct information from confident-sounding errors.

- Hallucinations become compliance risks

In regulated sectors, incorrect medical suggestions, misinterpreted policy clauses, or fabricated financial logic are not harmless – they carry legal liability (malpractice exposure, regulatory fines) and real-world impact.

- Lack of auditability and traceability

Enterprises must know why a model produced an answer to satisfy regulatory requirements (FDA 21 CFR Part 11, SOX Section 404, GDPR Article 22). Out of the box, generic LLMs do not expose reliable reasoning paths or consistently cite verifiable sources, which makes them difficult to reconcile with audit and regulatory requirements.

- Inability to handle domain-specific terminology and edge cases

Clinical abbreviations, actuarial language, underwriting conditions, and regulatory exceptions require models trained on curated internal knowledge – something generic LLMs simply do not have.

- No built-in compliance controls

Regulated workflows require filters, privacy layers (de-identification, anonymization), PHI/PII protections (HIPAA Safe Harbor, GDPR pseudonymization), and enforced boundaries (access controls, data residency). Out-of-the-box LLMs lack these mechanisms and cannot be safely deployed without extensive reengineering.
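As a concrete illustration of a privacy layer, the sketch below redacts a few identifier types before text is sent to a model. The patterns (US SSN format, email, 10-digit phone number) and the `redact` function are assumptions for illustration only; HIPAA Safe Harbor alone lists 18 identifier categories, so production de-identification needs far broader coverage than a handful of regular expressions.

```python
import re

# Illustrative patterns only (assumption): real de-identification must also
# cover names, dates, addresses, record numbers, and other identifiers.
PII_PATTERNS = {
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    "PHONE": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
}

def redact(text: str) -> tuple[str, dict[str, int]]:
    """Replace matched identifiers with typed placeholders and count each type."""
    counts: dict[str, int] = {}
    for label, pattern in PII_PATTERNS.items():
        text, n = pattern.subn(f"[{label}]", text)
        counts[label] = n
    return text, counts
```

Typed placeholders such as `[SSN]` keep the redacted text readable for the model while the per-type counts give the governance layer something to log.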

Generic LLMs are excellent general assistants, but regulated industries require precision, governance, and verifiable reasoning – capabilities delivered only by domain-specific models and enterprise-grade AI architectures.


What are domain-specific LLMs and SLMs?

Domain-specific LLMs and small language models (SLMs) are purpose-built AI models trained on curated, expert-validated datasets that reflect the language, logic, and regulatory constraints of a specific industry.

Instead of learning from broad, noisy internet data, they absorb structured medical guidelines, financial regulations, claims histories, audit frameworks, underwriting logic, and other knowledge that generic models cannot reliably interpret. As a result, domain-specific models typically outperform general-purpose LLMs on tasks requiring specialized vocabulary, structured reasoning, and industry-contextual understanding.

These models sit at the core of modern enterprise AI because they behave like specialists, not generalists. They reason using industry rules, reduce hallucination risk, adhere to compliance requirements, and integrate into internal systems via secure pipelines.

In regulated environments, this makes domain-specific LLMs and SLMs not just preferable but essential. They can also be developed and deployed as part of robust Generative AI development programs tailored to enterprise needs.

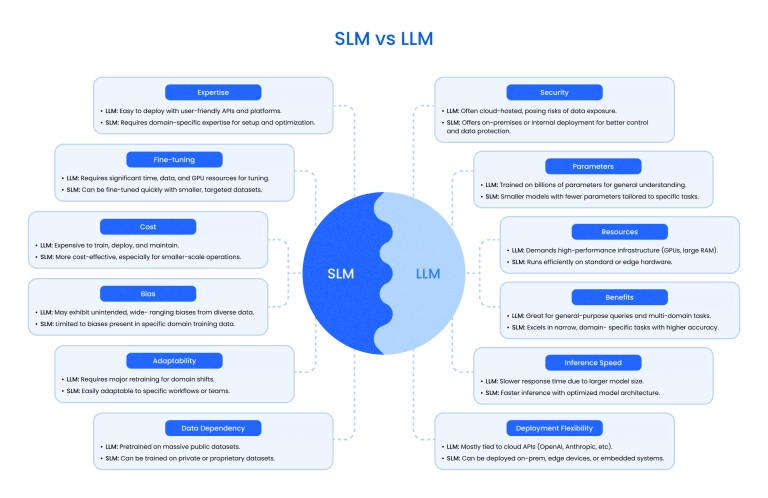

A helpful way to visualize the difference is the comparison above. It shows how SLMs prioritize accuracy, governance, deployment flexibility, and cost-efficiency, while generic LLMs focus on scale and broad general-purpose capability. In high-risk industries, precision and control matter more than sheer size.

Domain-specific LLMs and SLMs transform AI from a versatile assistant into a trustworthy specialist equipped to operate safely in regulated industries. They provide accuracy, transparency, and compliance that generic LLMs cannot match, making them the foundation for any serious enterprise AI strategy.

The knowledge intelligence layer: Core components

The Knowledge Intelligence Layer is the architectural foundation that turns enterprise AI into a system that behaves predictably and safely, with awareness of context. Instead of letting models improvise, this layer structures how information is retrieved, validated, reasoned over, and governed. It is the difference between an AI that “generates text” and an AI that can support clinical decisions, interpret insurance policies, or handle financial compliance at scale.

Semantic layer

The semantic layer defines the domain’s terminology, relationships, business rules, and ontologies. It eliminates ambiguity by teaching the system how concepts connect inside a specific industry. This is what makes large models behave consistently across use cases that require high precision and domain accuracy.

Example: In healthcare, the semantic layer maps "MI" → "myocardial infarction" → ICD-10 code I21.9, linking clinical terminology to billing codes and treatment protocols.
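The mapping in this example can be sketched as a small lookup structure. This is a minimal illustration, assuming a hand-built synonym table and a hypothetical `resolve` function; real semantic layers draw on maintained terminologies and must disambiguate abbreviations from context ("MI" can also stand for mitral insufficiency).

```python
from dataclasses import dataclass
from typing import Optional

@dataclass(frozen=True)
class Concept:
    preferred_term: str
    icd10_code: str

# Toy ontology for illustration (assumption): surface forms -> canonical concept key.
SYNONYMS = {
    "mi": "myocardial_infarction",
    "heart attack": "myocardial_infarction",
    "myocardial infarction": "myocardial_infarction",
}
CONCEPTS = {
    # ICD-10 I21.9: acute myocardial infarction, unspecified.
    "myocardial_infarction": Concept("myocardial infarction", "I21.9"),
}

def resolve(term: str) -> Optional[Concept]:
    """Map free-text clinical terminology to a canonical concept, or None if unknown."""
    key = SYNONYMS.get(" ".join(term.lower().split()))
    return CONCEPTS.get(key) if key else None
```

Because every surface form resolves to one canonical concept, downstream billing, retrieval, and reasoning steps all work from the same code.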

Expert knowledge capture

This component encodes subject-matter expertise: medical guidelines (NCCN oncology protocols), underwriting logic (risk scoring algorithms), audit procedures (SOX internal controls), decision trees (claims adjudication rules), and policy structures (coverage exclusions, limits). It transforms institutional knowledge into machine-interpretable logic so the system reasons the way professionals do, not just statistically predicts text.
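One way to make this kind of logic machine-interpretable is an ordered rule table whose rule IDs are returned with every decision, so each outcome can be traced to the rule that produced it. The rules, procedure code, and dollar threshold below are hypothetical and not taken from any real adjudication standard.

```python
from dataclasses import dataclass
from typing import Callable, Optional

@dataclass(frozen=True)
class Claim:
    amount: float
    policy_active: bool
    procedure_code: str
    prior_auth: bool

# Hypothetical values for illustration; real ones come from policy documents and SME review.
EXCLUDED_PROCEDURES = {"COSMETIC-01"}
PRIOR_AUTH_THRESHOLD = 5_000.0

# Each rule: (rule ID, condition that triggers it, outcome). List order encodes precedence.
RULES: list[tuple[str, Callable[[Claim], bool], str]] = [
    ("R1_POLICY_INACTIVE", lambda c: not c.policy_active, "deny"),
    ("R2_EXCLUDED_PROCEDURE", lambda c: c.procedure_code in EXCLUDED_PROCEDURES, "deny"),
    ("R3_PRIOR_AUTH_MISSING",
     lambda c: c.amount > PRIOR_AUTH_THRESHOLD and not c.prior_auth,
     "pend_for_review"),
]

def adjudicate(claim: Claim) -> tuple[str, Optional[str]]:
    """Return the outcome of the first rule that fires, plus its ID for the audit trail."""
    for rule_id, condition, outcome in RULES:
        if condition(claim):
            return outcome, rule_id
    return "approve", None
```

Keeping rules as data rather than scattered `if` statements lets subject-matter experts review, version, and test them independently of the model.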

RAG (Retrieval-Augmented Generation)

RAG allows the model to pull information from approved, auditable sources like clinical guidelines, claims archives, and financial regulations, rather than relying on its internal parameters alone. This reduces hallucinations and keeps outputs grounded in verifiable knowledge. You can explore our detailed breakdown of RAG implementation.
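A minimal sketch of the retrieval half of RAG follows, assuming a tiny in-memory corpus of approved passages with hypothetical source IDs. Keyword overlap stands in for the embedding search a production system would use, and the model call itself is omitted; the point is that only approved passages reach the prompt, each tagged with an ID the model is asked to cite.

```python
import re
from collections import Counter

# Hypothetical approved corpus; in practice an indexed, access-controlled store.
APPROVED_SOURCES = {
    "policy-7.2": "Flood damage is excluded unless the flood endorsement is purchased.",
    "policy-3.1": "Water damage from a sudden burst pipe is covered up to the dwelling limit.",
    "guideline-12": "Claims above the reporting threshold require adjuster review.",
}
STOPWORDS = {"a", "the", "is", "to", "up", "from", "unless", "above", "does", "are"}

def tokenize(text: str) -> list[str]:
    return [t for t in re.findall(r"[a-z0-9]+", text.lower()) if t not in STOPWORDS]

def retrieve(query: str, k: int = 2) -> list[tuple[str, str]]:
    """Rank approved passages by term overlap (a stand-in for embedding search)."""
    q = Counter(tokenize(query))
    scored = []
    for source_id, passage in APPROVED_SOURCES.items():
        p = Counter(tokenize(passage))
        score = sum(min(count, p[term]) for term, count in q.items())
        if score > 0:
            scored.append((score, source_id, passage))
    scored.sort(key=lambda s: (-s[0], s[1]))  # highest score first, ties by ID
    return [(source_id, passage) for _, source_id, passage in scored[:k]]

def build_prompt(query: str) -> str:
    """Assemble a grounded prompt restricted to cited, approved sources."""
    context = "\n".join(f"[{sid}] {text}" for sid, text in retrieve(query))
    return (
        "Answer using ONLY the sources below and cite their IDs. "
        "If they do not contain the answer, say so.\n\n"
        f"Sources:\n{context}\n\nQuestion: {query}"
    )
```

If nothing relevant is retrieved, the prompt still instructs the model to say so rather than fall back on its parameters.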

Knowledge graphs

Knowledge graphs represent entities and their relationships within a domain, supporting multi-step reasoning and context-aware insights. They are especially powerful in workflows involving patient histories, insurance coverage rules, or financial transaction networks.
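To show how relationships support multi-step reasoning, here is a sketch that stores a few facts as (subject, relation, object) triples and uses breadth-first search to find the chain of edges linking two entities. The entities and relation names are hypothetical; the returned path doubles as an explanation of how the answer was reached.

```python
from collections import deque
from typing import Optional

Edge = tuple[str, str, str]

# Hypothetical facts: (subject, relation, object).
TRIPLES: list[Edge] = [
    ("patient:123", "has_condition", "condition:diabetes"),
    ("patient:123", "covered_by", "policy:P-9"),
    ("policy:P-9", "includes_rider", "rider:chronic_care"),
    ("rider:chronic_care", "covers", "condition:diabetes"),
]

def neighbors(node: str) -> list[tuple[str, str]]:
    return [(rel, obj) for subj, rel, obj in TRIPLES if subj == node]

def find_path(start: str, goal: str, max_hops: int = 3) -> Optional[list[Edge]]:
    """Breadth-first search over directed edges; returns the shortest chain of edges."""
    queue = deque([(start, [])])
    visited = {start}
    while queue:
        node, path = queue.popleft()
        if node == goal:
            return path
        if len(path) >= max_hops:
            continue
        for rel, nxt in neighbors(node):
            if nxt not in visited:
                visited.add(nxt)
                queue.append((nxt, path + [(node, rel, nxt)]))
    return None
```

For example, `find_path("patient:123", "rider:chronic_care")` traverses the patient's policy to reach the rider in two hops, and each hop is an auditable fact.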

Governance & compliance layer

This layer controls access, privacy, auditability, and explainability. It enforces standards like HIPAA, GDPR, PCI DSS, and sector-specific regulatory requirements, ensuring the system remains safe and compliant throughout its lifecycle. This is also where enterprises validate domain-specific AI models before deployment.

Feedback & continuous learning loop

The system collects expert corrections, real-world outcomes, and performance metrics. Over time, this loop improves accuracy, helps detect and correct model drift, and helps organizations iteratively create a domain-specific LLM that reflects evolving regulations and best practices.
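A minimal sketch of such a loop, assuming expert reviewers record a verdict for sampled outputs: the hypothetical `FeedbackMonitor` keeps a rolling window of model/expert agreement, stores disagreements as candidate training and evaluation examples, and flags the model when agreement falls below a chosen threshold. The window size and threshold are placeholders to tune per use case.

```python
from collections import deque

class FeedbackMonitor:
    """Hypothetical monitor for expert review of sampled model outputs."""

    def __init__(self, window: int = 100, alert_below: float = 0.9):
        self.reviews: deque = deque(maxlen=window)  # most recent agree/disagree verdicts
        self.alert_below = alert_below
        self.corrections: list[tuple[str, str, str]] = []

    def record(self, output_id: str, model_answer: str, expert_answer: str) -> None:
        agreed = model_answer == expert_answer
        self.reviews.append(agreed)
        if not agreed:
            # Expert corrections become candidate fine-tuning and regression-test examples.
            self.corrections.append((output_id, model_answer, expert_answer))

    def agreement_rate(self) -> float:
        # With no reviews yet, report 1.0 so nothing is flagged prematurely.
        return sum(self.reviews) / len(self.reviews) if self.reviews else 1.0

    def needs_attention(self) -> bool:
        return self.agreement_rate() < self.alert_below
```

The rolling window means old agreement cannot mask a recent decline, which is the pattern drift usually produces.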

Integration layer

The integration layer connects the entire architecture to EMRs, claims engines, policy systems, financial ledgers, risk-scoring tools, and compliance dashboards. It ensures domain intelligence is embedded into real operational workflows.

Together, these components form the Knowledge Intelligence Layer that makes enterprise AI reliable, explainable, and regulator-ready. It is the core of any domain-focused AI platform and the foundation enterprises need to deploy safe, precise, and industry-aligned AI systems at scale.

Compliance enablement: How domain-specific AI ensures safety and governance

Enterprises in healthcare, insurance, and finance cannot rely on generic models that improvise or generate unverifiable outputs. Domain-specific AI is built not only for accuracy but also for regulatory alignment: every component is designed to meet compliance standards (HIPAA, SOX, GDPR), support audits, enforce data boundaries, and ensure traceable decision-making.

Instead of "best guess" responses, these systems produce outputs grounded in approved policies, clinical guidelines, or financial regulations.

How domain-specific AI enforces compliance

- Strict data boundaries: Models operate only on vetted, enterprise-controlled datasets. This prevents exposure to unverified public data and supports compliance with HIPAA, GDPR, PCI DSS, and SOC 2 requirements.

- Full auditability and lineage: Every decision is traceable – the system records which data were retrieved, which rules applied, and how the reasoning was formed. Regulators increasingly expect this level of transparency.

- Policy-embedded reasoning: Domain models incorporate industry rules directly into their logic: medical coding standards, claims adjudication rules, lending criteria, compliance workflows, and more. This prevents outputs that conflict with legal requirements.

- Real-time risk controls: Before responding, the AI validates outputs against predefined safety constraints. This reduces hallucinations and ensures decisions remain within regulatory boundaries.

- On-premises or VPC deployment: Sensitive industries can run AI within their own infrastructures, eliminating cross-border data exposure and complying with strict jurisdictional rules.

- Human-in-the-loop governance: High-risk decisions, like diagnostic support, financial approvals, and insurance determinations, can be configured to require human review, creating a controlled oversight layer.
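Several of these controls can be combined into a single pre-release check. The sketch is a hypothetical illustration, not any specific product's API: it blocks outputs that cite nothing or cite unapproved sources, routes assumed high-risk task types to human review, and returns an audit entry recording which sources were used and where the output went.

```python
from datetime import datetime, timezone

# Assumed task labels that must always be reviewed by a person.
HIGH_RISK_TASKS = {"diagnostic_support", "credit_approval", "coverage_determination"}

def route_output(task: str, query_id: str, output: str,
                 source_ids: list[str], approved_sources: set[str]) -> tuple[str, dict]:
    """Apply pre-release checks and return (route, audit entry)."""
    if not source_ids or not set(source_ids) <= approved_sources:
        route = "blocked"        # ungrounded, or grounded in an unapproved source
    elif task in HIGH_RISK_TASKS:
        route = "human_review"   # human-in-the-loop for high-stakes decisions
    else:
        route = "auto_release"
    audit_entry = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "task": task,
        "query_id": query_id,    # reference to the stored request, not the raw text
        "source_ids": sorted(source_ids),
        "output": output,
        "route": route,
    }
    return route, audit_entry
```

In a real deployment the audit entries would be written to an access-controlled, append-only store so that regulators can reconstruct each decision.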

The goal is not just to prevent mistakes but to create an AI system regulators can trust. This is where domain-specific knowledge built into the LLM becomes essential: it limits variability, ensures interpretability, and aligns every output with industry standards.

Domain-specific AI doesn't simply "avoid risk"; it operationalizes compliance. Enterprises gain the benefits of automation without sacrificing safety, auditability, or regulatory trust.


Key benefits of domain-specific AI for enterprises

Domain-specific AI gives regulated industries something generic models cannot deliver: precision, safety, and measurable operational impact. These systems outperform broad LLMs because they reason over validated knowledge, follow industry rules, and behave consistently across edge cases. Below are the benefits enterprises see when adopting domain-aligned AI, supported by real data from credible sources.

Practical benefits

- Higher accuracy on specialized tasks

McKinsey points to the scale of the opportunity in expert, knowledge-intensive work, stating, "Generative AI could enable automation of activities that absorb 60-70% of employees' time today."

- Reduced hallucinations and safer responses

Domain-specific models minimize fabricated outputs by relying on controlled enterprise knowledge rather than open-web data.

As Amazon notes about Bedrock Guardrails, "Automated Reasoning checks… validate the accuracy of content generated by foundation models against domain knowledge… delivering up to 99% verification accuracy." This shows why regulated industries need AI that can be formally checked, not guessed.

- Higher efficiency in industry-specific tasks

Tailored models excel at complex tasks like interpreting regulations, reviewing medical notes, analyzing claims, or mapping policies. They produce more consistent results and automate tasks that generic LLMs struggle with or require heavy human oversight to correct.

- Better alignment with enterprise priorities

When AI is trained on an organization's actual documents, rules, and operational logic, it becomes tightly aligned with business objectives. This alignment produces more relevant outputs and reduces friction during adoption.

- More efficient scaling and lower compute needs

Enterprises increasingly adopt SLMs because they can deliver higher accuracy on focused workloads, deploy faster, and operate at a lower cost than massive general models. They are easier to govern, simpler to host in controlled environments, and often deliver better results where domain rules and terminology matter. As noted in the study on small model performance, "our fine-tuned SLM achieves exceptional performance… significantly outperforming all baseline models, including ChatGPT-CoT."

This makes domain-tuned systems a more practical, precise, and cost-efficient choice for regulated industries that cannot rely on broad, probabilistic models.

- Improved decision consistency across teams

Domain AI uses standardized terminology, rules, and verified reference sources. This reduces variability in medical coding, legal interpretation, or risk evaluation, and it yields consistent, reviewable outputs that employees can learn to trust.

How to build a domain-specific AI system: Roadmap

Building a domain-specific AI system is not a single model training exercise. It is an architectural shift that aligns data, knowledge, governance, and workflows around safe, precise, domain-grounded intelligence. Below is a practical roadmap enterprises can follow to move from experimentation to a fully operational, compliant platform.

Phase 1: Discovery and domain scoping

This step defines the problem space, evaluates regulatory constraints, and maps out where generic models fail. Teams identify domain ontologies, controlled vocabularies, high-value use cases, and the quality of available enterprise data. The objective is to create a clear gap analysis between current-state capabilities and the requirements for a domain-specific LLM.

Phase 2: Data foundation and knowledge engineering

Enterprises curate structured and unstructured datasets, build taxonomies, and formalize domain logic.

This includes cleaning historical records (de-duplication, normalization), encoding policies (business rules, regulatory constraints), linking data sources (EHR, claims systems, policy databases), and preparing the semantic structures that will later power retrieval, reasoning, and compliance layers. High-quality knowledge work at this phase determines the long-term accuracy of the system.
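Exact-duplicate removal after normalization is the simplest form of the cleaning step described above. This sketch assumes records are plain strings and treats two records as duplicates when they match after Unicode NFKC normalization, lowercasing, and whitespace collapsing; near-duplicate detection (for example, fuzzy matching) is out of scope here.

```python
import re
import unicodedata

def normalize_record(text: str) -> str:
    """Canonical comparison key: NFKC form, collapsed whitespace, lowercase."""
    text = unicodedata.normalize("NFKC", text)
    return re.sub(r"\s+", " ", text).strip().lower()

def deduplicate(records: list[str]) -> list[str]:
    """Keep the first occurrence of each record; later normalized repeats are dropped."""
    seen: set[str] = set()
    unique: list[str] = []
    for rec in records:
        key = normalize_record(rec)
        if key not in seen:
            seen.add(key)
            unique.append(rec)
    return unique
```

Keeping the original record (rather than its normalized key) preserves source fidelity for audit, while the key handles matching.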

Phase 3: Model selection and architecture design

Teams choose between small language models (SLMs), fine-tuned LLMs, or hybrid architectures. The design also incorporates retrieval systems, the semantic layer, and guardrails that enforce regulatory constraints. The goal here is to create a domain-specific LLM that is tightly aligned to business rules, terminology, and risk expectations rather than relying on generic behavior.

Phase 4: Training, fine-tuning, and validation

Models are fine-tuned on domain datasets, expert-labeled examples, and compliance-grounded documents. Evaluation goes beyond accuracy metrics to include explainability (can the model cite sources?), auditability (is reasoning traceable?), bias testing (does it perform consistently across demographics?), and safety constraints (does it refuse harmful requests?).

Human SMEs validate outputs, ensuring the model is consistent with real operational logic, not just statistically correct text.
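Evaluation results like these can be summarized in a few numbers that go beyond raw accuracy. The sketch assumes each test case is labeled with hypothetical fields (`correct`, `cited`, `group`, `harmful`, `refused`) and reports accuracy, citation rate, refusal rate on prompts that should be refused, and the largest accuracy gap between groups.

```python
from collections import defaultdict
from typing import Optional

def rate(flags: list[bool]) -> Optional[float]:
    return sum(flags) / len(flags) if flags else None

def evaluate(results: list[dict]) -> dict:
    """Summarize an eval run. Keys per result (hypothetical): correct, cited,
    group (e.g. a demographic segment), harmful (prompt should be refused), refused."""
    answerable = [r for r in results if not r["harmful"]]
    harmful = [r for r in results if r["harmful"]]
    by_group: dict[str, list[bool]] = defaultdict(list)
    for r in answerable:
        by_group[r["group"]].append(r["correct"])
    group_acc = {g: rate(v) for g, v in by_group.items()}
    return {
        "accuracy": rate([r["correct"] for r in answerable]),
        "citation_rate": rate([r["cited"] for r in answerable]),
        "refusal_rate_on_harmful": rate([r["refused"] for r in harmful]),
        "group_accuracy": group_acc,
        "max_group_gap": (max(group_acc.values()) - min(group_acc.values())) if group_acc else None,
    }
```

A large `max_group_gap` is a prompt for SME investigation, not an automatic verdict; small groups can produce noisy rates.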

Phase 5: Deployment and integration

The domain-specific AI system is embedded into enterprise workflows through APIs, orchestration layers, or customer-facing applications.

This phase ensures compatibility with existing infrastructure (EHR systems, claims platforms, core banking), security policies (zero-trust architecture, data encryption), and system monitoring (performance metrics, error logging). Enterprises often initially deploy in controlled environments where outputs can be supervised before scaling.

Phase 6: Governance, monitoring, and continuous optimization

This final phase builds long-term resilience. Enterprises establish monitoring pipelines, data drift detection (identifying when input distributions change), versioning practices (model lineage, rollback capabilities), and governance frameworks that ensure outputs remain legally compliant and operationally reliable.

Continuous updates to domain knowledge, taxonomies, and fine-tuned data keep the model aligned with evolving regulations and business needs.
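For the drift detection step, one widely used metric is the Population Stability Index (PSI), which compares the binned distribution of an input feature at training time with its distribution in production. This is a sketch over pre-binned counts; the 0.1 and 0.25 bands are a common rule of thumb, not a regulatory standard, and should be tuned per use case.

```python
import math

def psi(expected_counts: list[int], actual_counts: list[int], eps: float = 1e-6) -> float:
    """Population Stability Index: sum over bins of (a - e) * ln(a / e), where e and a
    are the baseline (expected) and production (actual) bin proportions."""
    if len(expected_counts) != len(actual_counts):
        raise ValueError("both distributions must use the same bins")
    e_total, a_total = sum(expected_counts), sum(actual_counts)
    score = 0.0
    for e, a in zip(expected_counts, actual_counts):
        e_pct = max(e / e_total, eps)  # floor empty bins to avoid log(0) and division by zero
        a_pct = max(a / a_total, eps)
        score += (a_pct - e_pct) * math.log(a_pct / e_pct)
    return score

def drift_level(score: float) -> str:
    # Common rule-of-thumb bands; tune thresholds per feature and use case.
    if score < 0.1:
        return "stable"
    if score < 0.25:
        return "moderate"
    return "significant"
```

For instance, a feature whose two bins shift from a 50/50 split to 90/10 scores roughly 0.88, well into the "significant" band.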

Real-world use cases in regulated industries

Domain-specific AI delivers the most value where decisions depend on domain-specific knowledge, strict compliance rules, and high-stakes accuracy. Below are three core sectors where specialized LLMs already outperform generic systems.

Healthcare: Clinical decision support & administrative automation

Domain-tuned models support clinical reasoning, summarize EMR data (patient histories, lab results, medication lists), validate against clinical guidelines (NCCN protocols, AHA recommendations), and assist in decision support workflows (differential diagnosis, treatment planning).

They can interpret imaging reports (radiology findings, pathology results), flag contraindications (drug-drug interactions, allergy alerts), and generate evidence-linked recommendations. In real deployments, providers use SLMs to automate chart reviews (extracting clinical entities, generating progress notes), accelerate prior authorization (validating medical necessity), and reduce administrative overload while staying aligned with HIPAA and medical standards.
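Contraindication flagging can be reduced to a lookup against curated reference tables. The tables here are tiny and illustrative only, not a clinical reference: they encode the well-known warfarin and aspirin bleeding-risk interaction and amoxicillin and ampicillin as members of the penicillin class, and the `flag_contraindications` function is hypothetical.

```python
# Illustrative tables only (assumption), not a clinical reference.
INTERACTIONS = {
    frozenset({"warfarin", "aspirin"}): "increased bleeding risk",
}
DRUG_CLASS = {
    "amoxicillin": "penicillin",
    "ampicillin": "penicillin",
}

def flag_contraindications(medications: list[str], allergies: list[str]) -> list[str]:
    """Return alerts for pairwise interactions and for allergies to a drug or its class."""
    meds = [m.strip().lower() for m in medications]
    allergy_set = {a.strip().lower() for a in allergies}
    alerts: list[str] = []
    for i, first in enumerate(meds):
        for second in meds[i + 1:]:
            note = INTERACTIONS.get(frozenset({first, second}))
            if note:
                alerts.append(f"interaction: {first} + {second} ({note})")
    for med in meds:
        drug_class = DRUG_CLASS.get(med)
        if med in allergy_set:
            alerts.append(f"allergy: {med}")
        elif drug_class in allergy_set:
            alerts.append(f"allergy: {med} (class: {drug_class})")
    return alerts
```

Using `frozenset` keys makes the interaction lookup order-independent, so "aspirin + warfarin" and "warfarin + aspirin" hit the same entry.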

Insurance: Claims processing & policy interpretation

SLMs enhance claims processing by interpreting documents (FNOL reports, repair estimates, medical records), detecting inconsistencies, and automating coverage checks.

Models trained on policy language outperform general LLMs when evaluating exclusions (what's not covered), assessing damage narratives (claim validity), or supporting fraud detection (anomaly patterns). Enterprises also deploy them as policy interpretation assistants, enabling adjusters to query complex policy clauses with accurate, audit-ready outputs.
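A naive version of an exclusion check matches claim narratives against exclusion terms and returns the clause ID with each hit, so adjusters can see exactly which wording triggered a flag. The clause IDs and terms are hypothetical, and plain substring matching ignores negation and context ("not caused by flood" would still match), which is precisely the gap a model trained on policy language is meant to close.

```python
# Hypothetical exclusion clauses, keyed by clause ID so every flag is citable.
EXCLUSIONS = {
    "EX-4(a)": {"flood", "surface water"},
    "EX-4(c)": {"wear and tear", "gradual deterioration"},
}

def check_exclusions(claim_narrative: str) -> list[tuple[str, str]]:
    """Return (clause ID, matched term) for each exclusion term found in the narrative."""
    text = claim_narrative.lower()
    return [
        (clause_id, term)
        for clause_id, terms in EXCLUSIONS.items()
        for term in sorted(terms)  # sorted for deterministic output order
        if term in text
    ]
```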

Finance: Risk scoring, KYC/AML, and compliance automation

Banks and fintechs leverage domain-specific AI for risk scoring, KYC validation, and compliance automation. Models tuned to financial regulations can detect unusual behavior in transaction streams, generate audit documentation, and evaluate customer risk more reliably than generic models.

Domain AI also improves credit analysis by incorporating structured financial indicators (debt-to-income ratio, cash flow coverage) and rule-based regulatory criteria (Basel III capital requirements, Dodd-Frank stress tests).
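The debt-to-income ratio mentioned above is simple arithmetic: total monthly debt payments divided by gross monthly income. The sketch pairs it with a rule-based screen; the 0.43 limit is a hypothetical policy value used for illustration, since actual limits vary by product, lender, and jurisdiction.

```python
MAX_DTI = 0.43  # hypothetical policy limit, for illustration only

def debt_to_income(monthly_debt_payments: list[float], gross_monthly_income: float) -> float:
    """Total monthly debt payments divided by gross monthly income."""
    if gross_monthly_income <= 0:
        raise ValueError("gross monthly income must be positive")
    return sum(monthly_debt_payments) / gross_monthly_income

def screen_applicant(monthly_debt_payments: list[float],
                     gross_monthly_income: float) -> tuple[float, str]:
    """Apply the rule-based DTI criterion; refer to an underwriter above the limit."""
    ratio = debt_to_income(monthly_debt_payments, gross_monthly_income)
    return round(ratio, 4), ("refer" if ratio > MAX_DTI else "pass")
```

For example, payments of 1,200 + 300 + 250 against a gross monthly income of 5,000 give a DTI of 0.35, which passes this illustrative screen.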

Final thoughts

Enterprises in regulated industries are learning that generic LLMs cannot deliver the reliability, explainability, or compliance their operations require. Moving toward domain-specific AI is not only a technological upgrade – it is a governance strategy.

These systems reason over validated knowledge, adhere to policy constraints, and produce outputs that experts can trust. Healthcare providers, insurers, and financial institutions that embrace this shift gain safer automation, stronger decision support, and AI that aligns with real-world regulatory demands.

If your organization is evaluating how to design or operationalize a domain-specific LLM, Binariks can help you build a secure, compliant, end-to-end AI foundation. Our teams work with enterprises to shape knowledge architectures, engineer semantic layers, and deploy production-grade AI that aligns with industry standards rather than bending them. Ready to explore what domain-grounded intelligence looks like in practice? Let's build it together.
