AI readiness assessment is a structured evaluation methodology that determines whether an organization's data infrastructure, process maturity, technical architecture, and workforce capabilities can support production-grade AI systems.

Many companies rush into AI pilots without this foundational assessment, resulting in failed proofs of concept, wasted budgets, and automation initiatives that never scale beyond experimentation.

IBM's Global AI Adoption Index confirms this pattern: organizations that systematically evaluate readiness barriers before deployment achieve significantly higher returns on AI investments than those that skip this validation phase.

After reading this guide, you'll know how to:

- Identify which processes have the highest ROI for AI-driven automation

- Evaluate your data, infrastructure, and team capabilities realistically

- Use an evidence-based framework to avoid failed AI pilots and wasted investment

Ready to dive in? Read on to define your AI readiness and unlock automation with real returns.

What is AI readiness?

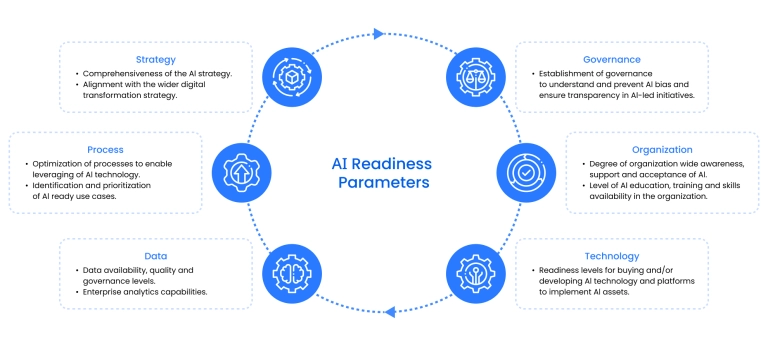

AI readiness represents an organization's measured capacity across four interdependent dimensions:

- data foundations (quality, accessibility, governance)

- process maturity (standardization, documentation, repeatability)

- technical infrastructure (APIs, integration patterns, scalability)

- organizational capability (skills, change management, operational adoption)

AI readiness differs from general technology readiness by specifically measuring whether data pipelines, model deployment workflows, and human-AI collaboration patterns can sustain continuous model retraining, drift monitoring, and integration with operational systems – requirements absent from traditional software projects.

A truly AI-ready organization has clean, accessible data, documented processes, secure integration pathways, and teams prepared for new workflows. However, most companies discover these gaps too late.

Research by Qlik, a global leader in data integration, reveals the consequences of deploying AI without systematic readiness assessment: 81% of AI professionals report that their organizations still face significant data quality issues, and 85% believe leadership is not adequately addressing these problems.

Data, analytics, and AI professionals recognize that most companies are investing in AI without prioritizing the foundational data infrastructure that determines whether models will function reliably in production.

This pattern leads to predictable failures: inconsistent data, unclear ownership, brittle legacy systems, and AI pilots that collapse before reaching production. A structured readiness approach reduces that risk and helps identify where external partners, such as specialized AI consulting companies, can accelerate progress.

Key dimensions of AI readiness

The AI readiness framework below helps summarize the key dimensions organizations must evaluate before moving into automation.

- Process maturity → data foundations (causal relationship): Standardized processes generate consistent, high-quality data; conversely, poor process documentation creates data fragmentation that blocks automation.

- Process maturity → technical infrastructure (enabling relationship): Well-documented workflows define clear integration requirements, API contracts, and event schemas that infrastructure teams must support.

- Technical infrastructure → organizational readiness (dependency relationship): Modern deployment pipelines enable faster experimentation cycles, which accelerate workforce learning and adoption confidence.

- Strategic alignment (governance relationship): Acts as the coordinating layer across all four dimensions, ensuring investments align with measurable business outcomes rather than technology experimentation.

AI readiness spans these four interconnected dimensions, and companies that reach production with AI consistently demonstrate maturity across all of them – not in isolation, but as a reinforcing system. Here's how each component works in practice:

Data foundations: Quality, completeness, and governance

Reliable AI starts with reliable data, and companies typically underestimate how fragmented or inconsistent their information really is. That is why data readiness for AI is critical: it assesses whether data is complete, accurate, accessible, and governed.

Example: A logistics provider planned to automate ETA predictions but discovered 22% of route data lacked GPS logs. A readiness review exposed the issue early and redirected resources toward fixing ingestion pipelines instead of building a failing model.
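A readiness review like this often starts with a simple completeness metric. The Python sketch below computes the share of route records with no GPS log; the record layout, the `gps_points` field name, and the 5% tolerance are hypothetical and chosen only for illustration.

```python
from typing import Any


def missing_field_rate(records: list[dict[str, Any]], field: str) -> float:
    """Return the fraction of records where `field` is absent, None, or empty."""
    if not records:
        return 0.0
    missing = sum(1 for r in records if not r.get(field))
    return missing / len(records)


# Hypothetical route records; "gps_points" is an illustrative field name.
routes = [
    {"route_id": 1, "gps_points": [(52.52, 13.40), (52.53, 13.41)]},
    {"route_id": 2, "gps_points": []},   # route with an empty GPS log
    {"route_id": 3, "gps_points": [(48.85, 2.35)]},
    {"route_id": 4},                      # GPS field missing entirely
]

rate = missing_field_rate(routes, "gps_points")
MAX_MISSING = 0.05  # assumed tolerance for this sketch
print(f"{rate:.0%} of routes lack GPS logs")  # 50% of routes lack GPS logs
if rate > MAX_MISSING:
    print("Fix ingestion pipelines before training an ETA model")
```

Running a check like this per field and per source system, before any modeling work, is what surfaces gaps such as the 22% figure above early.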

Process maturity: Standardized and measurable workflows

Automation succeeds when workflows are stable, documented, and repeatable. Teams with strong process discipline can adopt AI without breaking operations. In this context, process readiness for AI describes whether a process generates consistent input data, follows a clear path, and avoids exception-heavy steps.

Example: A healthcare insurer cut claim-handling time after standardizing forms and removing manual branching logic that previously made automation impossible.

Technical infrastructure: APIs, integrations, and scalability

Your architecture determines how fast AI solutions can be deployed and how reliably they run at scale. Modern infrastructure – APIs, cloud workloads, event pipelines – allows AI components to connect to operational systems without disruption. Many organizations use an AI readiness assessment framework to evaluate integration maturity, security controls, and deployment readiness.

Example: A retail company modernized its legacy order-management system with event streaming, enabling real-time fraud detection instead of daily batch checks.

Organizational readiness: Skills, culture, and change enablement

Even high-quality models fail if teams are not prepared for new workflows. Strong organizational readiness means leadership alignment, clear ownership, and a workforce willing and trained to use AI tools. Mature companies use an AI readiness framework to assess skills, communication practices, and operational support.

Example: A customer-support team adopting an AI assistant achieved fast adoption only after training agents on new triage rules and updating KPIs accordingly.

Strategic alignment and prioritization

Readiness also requires clarity on why AI is being deployed. Without a structured assessment for AI readiness, companies risk investing in low-impact experiments instead of ROI-driven automation. Strategic alignment ensures use cases support measurable business goals – faster resolution time, cost reduction, higher throughput, or improved compliance.

Example: A manufacturing firm identified predictive maintenance as its top initiative only after evaluating cost of downtime, data availability, and automation feasibility side by side.

AI readiness comes down to whether your data, processes, infrastructure, and teams can support AI that actually reaches production. High-quality data, standardized workflows, modern integration capabilities, and a prepared workforce are the core enablers. Companies that evaluate these dimensions early avoid the common traps: failed pilots, unexpected data gaps, and automation efforts that never scale.

How to conduct an AI readiness assessment: Step-by-step framework

A structured assessment helps companies avoid failed pilots and focus resources on automation that delivers measurable ROI. Below is a practical, CTO-friendly framework designed to identify strengths, expose hidden blockers, and build a scalable AI roadmap.

Step 1: Identify high-ROI automation opportunities through process scoring

Before evaluating maturity, establish which operational processes qualify as viable automation candidates based on quantifiable business impact. Many companies rely on an internal discovery workshop supported by an AI readiness checklist to filter out low-value ideas.

This discovery phase should apply a process scoring methodology that filters opportunities using three weighted criteria:

- annual manual effort (labor hours × average hourly cost)

- process stability (exception rate, variability in execution paths)

- measurable business KPIs (cycle time reduction, error rate improvement, cost avoidance).

Automation Viability Score = (Annual Manual Cost × 0.4) + (Process Stability Score × 0.3) + (KPI Impact Score × 0.3)

Where:

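- Annual Manual Cost = Hours per execution × Annual volume × Hourly labor rate, normalized to a 0–100 scale (for example, relative to the highest-cost candidate) before weighting, so that it is comparable with the other two scores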

- Process Stability Score = 100 - (Exception rate % + Variance in execution time %)

- KPI Impact Score = Weighted sum of improvements across speed, accuracy, compliance, throughput
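To make the weighting concrete, here is a minimal Python sketch of the score. Because Annual Manual Cost is a currency amount while the other two inputs are 0–100 scores, the sketch assumes cost is first normalized against the costliest candidate. The candidate names and values, flooring the stability score at 0, and treating the KPI Impact Score as a team-assigned 0–100 rating are all illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    name: str
    hours_per_execution: float
    annual_volume: int
    hourly_rate: float
    exception_rate_pct: float   # e.g. 8.0 means 8% of runs need manual handling
    time_variance_pct: float    # variability in execution time, expressed in %
    kpi_impact_score: float     # 0-100, assessed by the team (assumption)

    @property
    def annual_manual_cost(self) -> float:
        # Hours per execution x Annual volume x Hourly labor rate
        return self.hours_per_execution * self.annual_volume * self.hourly_rate

    @property
    def stability_score(self) -> float:
        # 100 - (Exception rate % + Variance %), floored at 0 (assumption)
        return max(0.0, 100.0 - (self.exception_rate_pct + self.time_variance_pct))


def viability_scores(candidates: list[Candidate]) -> dict[str, float]:
    """Weighted 0.4 / 0.3 / 0.3 score per candidate."""
    if not candidates:
        return {}
    max_cost = max(c.annual_manual_cost for c in candidates)
    scores = {}
    for c in candidates:
        # Normalize cost to 0-100 against the costliest candidate (assumption)
        cost_score = 100.0 * c.annual_manual_cost / max_cost if max_cost > 0 else 0.0
        scores[c.name] = 0.4 * cost_score + 0.3 * c.stability_score + 0.3 * c.kpi_impact_score
    return scores


candidates = [
    Candidate("invoice matching", 0.25, 20_000, 40.0, 8.0, 12.0, 70.0),
    Candidate("email triage", 0.05, 50_000, 30.0, 25.0, 15.0, 60.0),
]
for name, score in sorted(viability_scores(candidates).items(), key=lambda kv: -kv[1]):
    print(f"{name}: {score:.1f}")
```

Normalizing relative to the candidate pool means scores are only comparable within one assessment round; rescoring is needed when new candidates are added.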

Actions:

- Map candidate processes across operations, finance, customer service, supply chain, and engineering departments

- Quantify their annual manual effort using time-motion analysis or workflow tracking data

- Calculate current error rates, cycle times, and cost-per-transaction baselines

- Identify processes where delays create cascading business impact (SLA penalties, customer churn, regulatory risk)

Questions to answer:

- Which processes consume >X annual manual hours (minimum threshold for ROI-positive automation)?

Note: Automation viability thresholds vary significantly by industry, geography, and labor costs.

A healthcare process consuming 800 hours/year of specialist time ($120/hr = $96K annually) may justify automation, while an administrative process consuming 800 hours at $25/hr ($20K annually) likely doesn't. Contact Binariks for a customized ROI assessment based on your specific cost structure and operational context.

- Can success be measured through objective KPIs (processing time, accuracy rate, cost per transaction) rather than subjective quality assessments?

- Is the process standardized with a manageable exception rate for AI? Highly repetitive processes (invoice processing, data entry) should have <10% exceptions, while semi-structured workflows (customer triage, document classification) can tolerate 20–30% exceptions if AI correctly identifies and escalates edge cases.

- Does the process generate structured or semi-structured data that models can learn from, or is it entirely unstructured (requiring more complex NLP/computer vision approaches)?
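The cost arithmetic and exception-rate guidance above can be combined into a quick screening helper. This is a sketch: `min_annual_cost` stands in for the ">X" threshold and must come from your own cost structure, and the $50K value in the usage lines is purely hypothetical. The exception ceilings (under 10% for highly repetitive processes, up to 30% for semi-structured workflows) follow the guidance above.

```python
def annual_cost(hours_per_year: float, hourly_rate: float) -> float:
    """Annual manual cost of a process, e.g. 800 h x $120/h = $96,000."""
    return hours_per_year * hourly_rate


def exceptions_within_tolerance(exception_rate: float, process_type: str) -> bool:
    """Apply the exception-rate guidance for the two process types."""
    if process_type == "repetitive":        # invoice processing, data entry
        return exception_rate < 0.10
    if process_type == "semi_structured":   # triage, document classification;
        return exception_rate <= 0.30       # assumes AI escalates edge cases
    raise ValueError(f"unknown process type: {process_type!r}")


def passes_screen(hours_per_year: float, hourly_rate: float, exception_rate: float,
                  process_type: str, min_annual_cost: float) -> bool:
    """True if the process clears both the cost threshold and the exception ceiling."""
    return (annual_cost(hours_per_year, hourly_rate) >= min_annual_cost
            and exceptions_within_tolerance(exception_rate, process_type))


# Hypothetical threshold: set min_annual_cost from your own cost structure.
THRESHOLD = 50_000
print(passes_screen(800, 120, 0.06, "repetitive", THRESHOLD))  # True (healthcare example)
print(passes_screen(800, 25, 0.06, "repetitive", THRESHOLD))   # False (administrative example)
```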

Step 2: Analyze data & infrastructure readiness

The second step clarifies what AI readiness means from a technical standpoint: whether data, systems, and integrations can support automation. Most AI failures are rooted in poor data quality or brittle legacy systems.

Actions:

- Audit data sources for accuracy, completeness, accessibility, and governance.

- Evaluate integrations (APIs, pipelines, cloud readiness).

- Review security and compliance constraints.

Questions to answer:

- Is the required data complete and consistently formatted?

- Do we have API or event-based access to source systems?

- Is cloud infrastructure mature enough for model hosting and scaling?
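Parts of the data audit can be automated. Below is a minimal Python sketch that assumes records have been exported from a source system as dictionaries. For each field it reports completeness (share of records with a value) and format consistency (share of present values matching the expected pattern). The field names and patterns in `EXPECTED_FORMATS` are hypothetical placeholders for your own data dictionary.

```python
import re
from typing import Any

# Hypothetical expected formats per field; adapt to your own data dictionary.
EXPECTED_FORMATS = {
    "invoice_date": re.compile(r"\d{4}-\d{2}-\d{2}"),  # ISO 8601 date
    "amount": re.compile(r"\d+(\.\d{2})?"),            # plain decimal amount
}


def audit(records: list[dict[str, Any]]) -> dict[str, dict[str, float]]:
    """Per-field completeness and format-consistency rates."""
    report = {}
    total = len(records)
    for field, pattern in EXPECTED_FORMATS.items():
        present = [str(r[field]) for r in records if r.get(field) not in (None, "")]
        well_formed = [v for v in present if pattern.fullmatch(v)]
        report[field] = {
            "completeness": len(present) / total if total else 0.0,
            "consistency": len(well_formed) / len(present) if present else 0.0,
        }
    return report


records = [
    {"invoice_date": "2024-03-01", "amount": "120.50"},
    {"invoice_date": "03/02/2024", "amount": "99"},   # inconsistent date format
    {"invoice_date": "", "amount": "45.00"},          # missing date
    {"invoice_date": "2024-03-04"},                   # missing amount
]
for field, stats in audit(records).items():
    print(field, stats)
```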

Step 3: Assess feasibility (technology, skills, data)

Once opportunities and data conditions are clear, evaluate whether automation is feasible with current tools and capabilities. This stage ties into broader business AI readiness, ensuring the company has the skills and architecture to deliver results.

Actions:

- Assess internal engineering, ML, and DevOps capabilities.

- Compare in-house vs. external delivery options.

- Estimate complexity, risk, and time-to-value.

Questions to answer:

- Do we have the skill set to build and maintain the solution?

- Is the process dependent on legacy systems that limit automation?

- What blockers could prevent deployment within 3–6 months?

Step 4: Prioritize based on effort vs. ROI

Not every feasible idea should be executed first. This prioritization step sorts opportunities by value, complexity, cost, and strategic impact. Companies also consider sector-specific value, such as claims automation referenced in our breakdown of AI in insurance.

Actions:

- Score each use case by ROI potential, complexity, and dependencies.

- Identify quick wins that generate early momentum.

- Build a 3-tier roadmap: quick wins, strategic projects, future candidates.

Questions to answer:

- Which initiatives deliver ROI within 3–9 months?

- Which require major refactoring or multi-team dependency?

- What will create the biggest operational or financial impact?
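The scoring and 3-tier roadmap can be sketched in a few lines of Python. The 1–5 scales, the use case names, and the tier cut-offs are illustrative assumptions rather than a standard; only the 3–9 month ROI window comes from the questions above.

```python
from dataclasses import dataclass


@dataclass
class UseCase:
    name: str
    roi_potential: int   # 1 (low) to 5 (high), team-assessed
    complexity: int      # 1 (simple) to 5 (major refactoring)
    dependencies: int    # number of other teams or systems that must change
    months_to_roi: int   # estimated months until ROI turns positive


def tier(uc: UseCase) -> str:
    """Assign a roadmap tier; cut-offs are illustrative assumptions."""
    if (uc.roi_potential >= 3 and uc.complexity <= 2
            and uc.dependencies <= 1 and uc.months_to_roi <= 9):
        return "quick wins"
    if uc.roi_potential >= 4:
        return "strategic projects"
    return "future candidates"


def build_roadmap(use_cases: list[UseCase]) -> dict[str, list[str]]:
    roadmap: dict[str, list[str]] = {
        "quick wins": [], "strategic projects": [], "future candidates": []}
    # Higher ROI first, then lower complexity, within every tier.
    for uc in sorted(use_cases, key=lambda u: (-u.roi_potential, u.complexity)):
        roadmap[tier(uc)].append(uc.name)
    return roadmap


use_cases = [
    UseCase("invoice matching", 4, 2, 1, 6),
    UseCase("predictive maintenance", 5, 4, 3, 15),
    UseCase("contract summarization", 2, 3, 2, 12),
    UseCase("email triage", 3, 1, 0, 4),
]
for name, items in build_roadmap(use_cases).items():
    print(f"{name}: {items}")
```

High-value but complex initiatives land in "strategic projects" rather than being discarded, which matches the idea of sequencing them after quick wins build momentum.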

Step 5: Address people & culture readiness

The final step focuses on organizational AI readiness, the human elements that make or break adoption. Even well-designed systems fail when employees resist change or aren’t properly trained.

Actions:

- Assess change-management maturity and communication practices.

- Identify role impacts, training needs, and new workflows.

- Create alignment with leadership and operational teams.

Questions to answer:

- Do employees understand how AI will support their work?

- Are KPIs, incentives, or workflows changing?

- Does leadership sponsor and communicate the initiative consistently?

A step-by-step assessment helps companies avoid guesswork and build AI initiatives with real business impact. By evaluating processes, data, feasibility, ROI, and organizational readiness, teams create a roadmap that reduces risk and turns AI from experimentation into measurable value.

AI readiness checklist for identifying high-ROI automation opportunities

Here is our version of a checklist to help companies avoid automating the wrong processes and expose blockers early. Answer each question "Yes" or "No"; a "No" signals a risk that must be addressed before moving forward. The checklist aligns with modern AI readiness strategies used by enterprises that successfully scale AI.

Note: This checklist provides directional self-assessment guidance, not definitive readiness scores.

AI automation viability is highly context-dependent – influenced by industry regulations, organizational maturity, labor costs, strategic priorities, and technical infrastructure. The scoring ranges below reflect common patterns across mid-market enterprises but should not be treated as universal rules.

For a comprehensive, customized AI readiness assessment, contact Binariks to evaluate your specific operational context, cost structure, and strategic objectives.

1. Business & use case clarity

Your organization should know why it wants AI and what value it expects.

- Is there a clearly defined business problem with measurable impact?

- Do we understand how automation will reduce cost, improve speed, or increase accuracy?

- Have we quantified the expected ROI using real operational metrics?

- Is the process stable, repetitive, and high-volume enough to automate?

- Are stakeholders aligned on the expected outcome and success criteria?

2. Data availability & quality

Data is the deciding factor in whether automation works or fails during implementation.

- Do we have complete historical data for the targeted process?

- Is the data accurate, consistent, and free from major gaps?

- Is data accessible via APIs, pipelines, or controlled exports?

- Are ownership and governance rules clearly defined?

- Do we have documentation for data sources, fields, and quality checks?

3. Technical feasibility & integration

A good use case must fit your architecture, not fight it.

- Are core systems capable of integrating with AI models or automation tools?

- Do we have secure environments (cloud/on-prem) for model deployment?

- Is the integration effort realistic for a pilot deployment within a 3-6 month window? Note that production-scale integration typically requires 6-12 months, depending on system complexity and organizational change management requirements.

- Can we maintain the solution without deep refactoring of legacy systems?

- Do we have monitoring, logging, and rollback capabilities?

4. Skills, people, and adoption readiness

Companies often underestimate human-side risks, yet assessing them is a cornerstone of any realistic AI readiness evaluation.

- Do we have internal owners who will maintain and evolve the solution?

- Are employees prepared for process changes introduced by automation?

- Do teams have relevant skills (ML, data engineering, DevOps), or do we need external support?

- Are incentives, KPIs, or workflows aligned with the new AI-powered process?

- Do leaders actively support the change, training, and adoption effort?

5. Compliance, security, and operational risks

Automation must meet your industry’s standards.

- Does the initiative meet regulatory and audit requirements?

- Have we evaluated model risk, bias, and failure-mode scenarios?

- Do we have a cybersecurity strategy for data access and model usage?

- Are retention, monitoring, and incident-response procedures defined?

- Do we have a continuity plan if the AI system fails?

6. Expected ROI & prioritization

High-ROI automation requires a realistic cost-benefit view and strategic sequencing.

- Can we estimate time-to-value based on real operational data?

- Does the initiative reduce measurable operational cost or cycle time?

- Do we have a clear comparison against other potential use cases?

- Does this initiative qualify as a quick win or a strategic investment?

- Can external partners or a dedicated team model accelerate delivery?

Checklist scoring and risk classification

Each dimension within the readiness checklist represents a distinct risk category with different remediation timelines and cost implications. Understanding these classifications helps prioritize investments:

Risk categories and blocker severity:

| Risk Category | Blocker Severity |
|---|---|
| Data Availability & Quality | Critical – AI cannot function without viable training data |
| Technical Feasibility & Integration | High – Models cannot deploy without integration pathways |
| Business & Use Case Clarity | Medium – Causes scope creep and unclear success criteria |
| Skills, People, Adoption | Medium – Solutions fail post-deployment without operational support (High severity if your organization has zero ML/data engineering expertise internally and no external partners engaged) |
| Compliance, Security, Risk | High – Regulated industries cannot deploy without clearance |
| Expected ROI & Prioritization | Low – Affects sequencing, not viability |

Interpretation framework: "No" answers in Critical or High severity categories must be resolved before proceeding to proof-of-concept development. Medium and Low severity gaps can often be addressed in parallel with initial pilots, though they increase project risk and timeline variability.

How to interpret the checklist results

This checklist uses a two-stage interpretation:

Stage 1: Identify critical blockers that must be resolved before proceeding

Stage 2: Calculate overall readiness score to prioritize initiatives

Stage 1: Critical and high-risk blockers

Before counting total "Yes" answers, evaluate each risk category against its severity threshold:

- CRITICAL Blockers (Must be 100% "Yes" to proceed):

Data Availability & Quality (5 questions)

All 5 must be "Yes" → If ANY "No": STOP. Fix data issues before continuing.

Why: AI models trained on incomplete, inaccurate, or inaccessible data will fail in production – regardless of other strengths.

- HIGH-Risk Blockers (Must be ≥80% "Yes"):

Technical Feasibility & Integration (5 questions)

≥4/5 "Yes" required → If ≤3/5: HIGH RISK. Address integration gaps or simplify automation scope.

Why: Models that can't integrate with production systems remain lab experiments.

Compliance, Security, Risk (5 questions)

≥4/5 "Yes" required (regulated industries) → If ≤3/5: STOP. Resolve compliance before proceeding.

Why: Deploying non-compliant AI creates legal/regulatory exposure exceeding any efficiency gains.

- MEDIUM-Risk Areas (Should be ≥60% “Yes”, can address in parallel):

Business & Use Case Clarity (5 questions)

≥3/5 "Yes" recommended → If ≤2/5: Causes scope creep, unclear success criteria

Mitigation: Define clear business sponsor, success metrics, and use case boundaries before pilot

Skills, People, Adoption (5 questions)

≥3/5 "Yes" recommended → If ≤2/5: Solutions fail post-deployment due to lack of operational support

Mitigation: Engage external AI development partners, implement training programs, or hire key roles

- LOW-Risk (Affects prioritization, not viability):

Expected ROI & Prioritization (5 questions)

≥3/5 "Yes" recommended → If ≤2/5: Deprioritize vs. higher-ROI use cases (don't kill project)

Mitigation: Adjust sequencing: pursue it as a "strategic investment" after quick wins deliver momentum

Stage 2: Overall Readiness Score (If stage 1 passes)

If no Critical or High blockers exist, calculate total "Yes" answers:

- 25–30 "Yes" → HIGH READINESS: Pilot-ready

Strong position to automate. The use case has clear business value, accessible data, technical feasibility, and leadership support.

Action: Move use case into pilot phase with defined success metrics and production deployment path.

- 20–24 "Yes" → MEDIUM READINESS: Address gaps, then pilot

Potential exists, but medium-risk gaps (business clarity, skills, ROI justification) will cause delays or inflate costs.

Action: Create a 4–8 week remediation plan addressing specific "No" answers, then proceed to pilot.

- 15–19 "Yes" → LOW READINESS: Significant remediation required

Multiple gaps across categories indicate foundational work needed before automation becomes viable.

Action: Plan a 3–6 month preparation phase (data cleanup, workflow documentation, capability building) before reconsidering.

- <15 “Yes” → NOT READY: Foundational improvements needed

Attempting automation now will result in failed pilot, unclear outcomes, and wasted budget.

Action: Pause the AI initiative. Focus on establishing data governance, process documentation, and technical infrastructure.
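The two-stage logic can be expressed as a small Python function, which helps keep scoring consistent across teams. This is a sketch under stated assumptions: the category keys are hypothetical shorthand for the six checklist sections, and treating a compliance score below 4/5 as a blocker outside regulated industries (labeled "HIGH RISK" rather than "STOP") is an assumption, since the thresholds above are stated for regulated industries.

```python
CATEGORIES = ("business", "data", "technical", "skills", "compliance", "roi")


def stage1(yes: dict[str, int], regulated: bool) -> tuple[list[str], list[str]]:
    """Return (blockers, warnings) from per-category 'Yes' counts (0-5 each)."""
    blockers, warnings = [], []
    if yes["data"] < 5:           # Critical: all 5 must be "Yes"
        blockers.append("STOP: fix data availability and quality")
    if yes["technical"] < 4:      # High: at least 4/5
        blockers.append("HIGH RISK: address integration gaps or simplify scope")
    if yes["compliance"] < 4:     # High: at least 4/5
        # Blocking outside regulated industries is an assumption of this sketch.
        label = "STOP" if regulated else "HIGH RISK"
        blockers.append(f"{label}: resolve compliance gaps")
    if yes["business"] < 3:       # Medium: can be addressed in parallel
        warnings.append("Define sponsor, success metrics, and scope")
    if yes["skills"] < 3:         # Medium
        warnings.append("Plan training, hiring, or external support")
    if yes["roi"] < 3:            # Low: affects sequencing only
        warnings.append("Deprioritize against higher-ROI use cases")
    return blockers, warnings


def stage2(total_yes: int) -> str:
    """Map the total 'Yes' count (0-30) to a readiness tier."""
    if total_yes >= 25:
        return "HIGH READINESS: pilot-ready"
    if total_yes >= 20:
        return "MEDIUM READINESS: address gaps, then pilot"
    if total_yes >= 15:
        return "LOW READINESS: significant remediation required"
    return "NOT READY: foundational improvements needed"


def interpret(yes: dict[str, int], regulated: bool = False) -> dict:
    if set(yes) != set(CATEGORIES) or not all(0 <= n <= 5 for n in yes.values()):
        raise ValueError("expected a 0-5 'Yes' count for each of the six categories")
    blockers, warnings = stage1(yes, regulated)
    # Stage 2 runs only when Stage 1 finds no Critical or High blockers.
    readiness = None if blockers else stage2(sum(yes.values()))
    return {"blockers": blockers, "warnings": warnings, "readiness": readiness}


answers = {"business": 4, "data": 5, "technical": 4, "skills": 2, "compliance": 5, "roi": 4}
print(interpret(answers, regulated=True))
```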

Note: Context Matters

This scoring framework provides directional guidance, not definitive go/no-go decisions. Industry context, organizational maturity, and strategic priorities all influence interpretation:

- Regulated industries (healthcare, finance): Compliance "No" answers are showstoppers; even one unresolved issue can block deployment.

- Early-stage AI adopters: Lower scores (18–20 "Yes") may still justify pilots if the organization is intentionally building foundational capabilities.

- Mission-critical processes: Higher confidence thresholds are required (often 28–30 "Yes") versus experimental use cases (22–25 may be acceptable).

Contact Binariks to move from self-assessment to actionable AI strategy with measurable outcomes.

Identifying automation opportunities with high ROI

AI delivers the highest ROI in processes that are high-volume, repetitive, measurable, and tied directly to operational cost.

The strongest opportunities sit at the intersection of three criteria: significant manual effort, clear business KPIs, and easy access to usable data. When these conditions align, automation pays back quickly because it removes structural friction in workflows that touch many employees or customers.

Teams also see strong returns when AI accelerates document-heavy or exception-prone processes such as email triage, claims handling, underwriting support, invoice matching, and customer-support routing. These are repeatable, time-sensitive tasks where even modest accuracy improvements translate into measurable financial impact.

McKinsey's latest survey highlights where companies already see the strongest AI-driven returns, and those areas closely align with the types of processes worth prioritizing for automation.

Use this lightweight calculation to compare automation candidates:

ROI = (Annual value created − Investment Cost) ÷ Investment Cost × 100

Where investment cost includes:

- Development costs (amortized over 3-year lifecycle): Model development, data engineering, integration work, testing

- Infrastructure costs: Cloud compute, storage, API usage, monitoring tools

- Operational costs: Model retraining, monitoring, incident response, ongoing maintenance

- Organizational costs: Training, change management, process redesign

Annual value created encompasses:

- Direct labor savings: (Manual hours eliminated × Loaded hourly rate)

- Error reduction value: (Annual cost of errors × Error rate reduction %)

- Cycle time improvement: (Transactions per year × Hours saved per transaction × Value per hour saved)

- Avoided penalties: SLA compliance improvements, regulatory fine avoidance

- Throughput gains: Revenue from increased processing capacity

This formula prevents teams from chasing trendy use cases with weak business impact.
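The same formula, written as a short Python sketch that follows the cost and value components listed above. The example figures are invented for illustration, and treating infrastructure, operational, and organizational costs as annual amounts (with only development amortized over three years) is an assumption.

```python
from dataclasses import dataclass


@dataclass
class InvestmentCost:
    development: float            # one-off build cost, amortized below
    infrastructure: float = 0.0   # per year: compute, storage, APIs, monitoring
    operational: float = 0.0      # per year: retraining, monitoring, maintenance
    organizational: float = 0.0   # per year (assumption): training, change management
    lifecycle_years: int = 3      # amortization period for development costs

    def annual(self) -> float:
        return (self.development / self.lifecycle_years
                + self.infrastructure + self.operational + self.organizational)


@dataclass
class AnnualValue:
    hours_eliminated: float = 0.0
    loaded_hourly_rate: float = 0.0
    annual_error_cost: float = 0.0
    error_rate_reduction: float = 0.0       # fraction, e.g. 0.4 for 40%
    transactions_per_year: float = 0.0
    hours_saved_per_transaction: float = 0.0
    value_per_hour_saved: float = 0.0
    avoided_penalties: float = 0.0
    throughput_revenue: float = 0.0

    def total(self) -> float:
        labor = self.hours_eliminated * self.loaded_hourly_rate
        errors = self.annual_error_cost * self.error_rate_reduction
        cycle_time = (self.transactions_per_year * self.hours_saved_per_transaction
                      * self.value_per_hour_saved)
        return labor + errors + cycle_time + self.avoided_penalties + self.throughput_revenue


def roi_percent(value: AnnualValue, cost: InvestmentCost) -> float:
    """(Annual value created - Investment cost) / Investment cost x 100."""
    annual_cost = cost.annual()
    if annual_cost <= 0:
        raise ValueError("investment cost must be positive")
    return (value.total() - annual_cost) / annual_cost * 100


cost = InvestmentCost(development=180_000, infrastructure=24_000,
                      operational=30_000, organizational=6_000)
value = AnnualValue(hours_eliminated=3_000, loaded_hourly_rate=45,
                    annual_error_cost=50_000, error_rate_reduction=0.4,
                    transactions_per_year=10_000, hours_saved_per_transaction=0.1,
                    value_per_hour_saved=30, avoided_penalties=20_000)
print(f"ROI: {roi_percent(value, cost):.1f}%")  # ROI: 70.8%
```

In practice, check that labor savings and cycle-time value do not describe the same saved hours, or the same benefit is counted twice.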

After evaluating the qualitative aspects, run an ROI calculation for the selected use case and vendor. Every ROI calculation comes down to two questions:

- What are my costs to implement?

- What value would it generate?

At its core, an investment breaks even when the value it generates recovers 100% of its costs, which corresponds to an ROI of 0% in the formula above. Teams usually set a timeframe for reaching this break-even point, and for most automation projects, clients look to recover their investment by Year 1 or Year 2.
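Break-even timing can be estimated with a simple payback calculation. Below is a minimal Python sketch, assuming value accrues evenly each month once the system is live and that ongoing running costs are subtracted from that value; the figures are hypothetical.

```python
import math


def payback_months(upfront_cost: float, monthly_value: float,
                   monthly_running_cost: float) -> float:
    """Months until cumulative net value covers the upfront cost (break-even)."""
    net = monthly_value - monthly_running_cost
    if net <= 0:
        return math.inf  # never breaks even under these assumptions
    return upfront_cost / net


months = payback_months(upfront_cost=180_000, monthly_value=17_000,
                        monthly_running_cost=5_000)
print(f"Break-even after {months:.1f} months (year {math.ceil(months / 12)})")
# Break-even after 15.0 months (year 2)
```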

How Binariks supports your AI readiness journey

Binariks helps companies move from uncertainty to a clear, production-ready AI roadmap. It starts with an in-depth readiness review that looks at data quality, process maturity, technical infrastructure, and organizational alignment.

This gives teams a practical view of where automation can generate measurable ROI and what must be fixed before implementation.

Every organization's AI readiness is contextual. Binariks conducts comprehensive AI readiness assessments that:

- Map your specific operational processes to automation opportunities

- Evaluate data quality, infrastructure maturity, and skill gaps against industry benchmarks

- Provide prioritized roadmaps with remediation plans, timelines, and ROI projections tailored to your cost structure

Once the assessment defines priorities, Binariks helps you select the strongest opportunities, validate feasibility, and model expected returns.

From there, the team delivers end-to-end implementation (data pipelines, model development, system integration, and production deployment), so AI becomes a dependable operational capability rather than a one-off pilot.

By combining engineering expertise with industry insight, Binariks helps organizations adopt AI with confidence and scale it sustainably across the business.

Conclusion

AI becomes truly valuable when companies know where to apply it, how to prepare for it, and how to scale it.

A structured readiness assessment helps avoid costly missteps, identify high-ROI use cases, and build a roadmap grounded in tangible business outcomes. Teams that take this approach move faster, reduce risk, and see clearer payback from automation.

If your organization is preparing for AI adoption, Binariks can help you assess readiness, select the right opportunities, and deliver solutions built to operate at scale.

Let's turn your automation potential into meaningful results!
