As artificial intelligence becomes essential to business strategy, accountability and ethical leadership translate into trust, resilience, and growth.

This article gives you practical ways to build, run, and measure AI governance – from foundational principles to real-life results that help your business innovate safely.

Here's what you'll find:

- How great governance builds trust and supports business goals

- Core ethical principles for enterprise AI

- A step-by-step guide to practical governance

- Global standards and regulatory updates you need to know

- Tools, metrics, and case studies that prove real-world impact

What makes AI governance work?

Worried about risky AI decisions or unclear compliance rules? You're not alone. Trust in AI starts with clear, simple rules you and your team understand. An AI governance framework isn't extra paperwork; it's your blueprint for building systems everyone can rely on.

What is an AI governance framework?

Think of your governance framework as a rulebook. It outlines all the policies and processes guiding how your company builds, runs, and manages AI.

For big organizations, having this structure in place is a must. It shields your business from legal trouble and builds customer confidence.

A strong framework answers:

- Who takes responsibility for key decisions

- How records and documentation are managed

- How impacts are tracked, from launch through every AI update
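To make those three questions concrete, here is a minimal Python sketch of a per-model governance record that reports which questions it cannot yet answer. The `ModelRecord` class, its fields, and the `governance_gaps` helper are hypothetical names chosen for illustration. A production registry would usually live in a database or an ML platform rather than in code.

```python
from dataclasses import dataclass, field


@dataclass
class ModelRecord:
    """Hypothetical governance record for one AI model."""
    name: str
    owner: str = ""  # accountable person: who takes responsibility
    documentation: list[str] = field(default_factory=list)  # model cards, data sheets, etc.
    versions: list[str] = field(default_factory=list)  # every released version, oldest first
    impact_reviews: dict[str, str] = field(default_factory=dict)  # version -> impact assessment


def governance_gaps(record: ModelRecord) -> list[str]:
    """Return the governance questions this record cannot yet answer."""
    gaps = []
    if not record.owner:
        gaps.append("no accountable owner")
    if not record.documentation:
        gaps.append("no documentation")
    # Impacts must be tracked for every update, not just the first launch.
    for version in record.versions:
        if version not in record.impact_reviews:
            gaps.append(f"no impact review for version {version}")
    return gaps


record = ModelRecord(
    name="credit-scoring",
    owner="jane.doe@example.com",
    documentation=["model-card-v1.md"],
    versions=["1.0", "1.1"],
    impact_reviews={"1.0": "Low risk; reviewed by compliance."},
)
print(governance_gaps(record))  # ['no impact review for version 1.1']
```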

Ethics you can stand behind

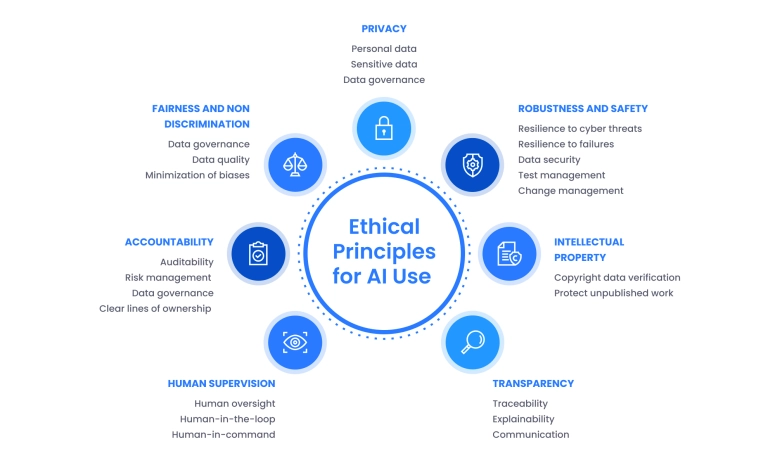

If you want AI that your team and customers can trust, let the ethical principles shown above guide you.

Compliance builds trust

A well-planned AI compliance framework not only helps you pass audits but also makes partnerships easier.

Companies with clear governance earn trust from users and regulators, building a reputation for ethical decision-making. It's more than ticking boxes – it's the foundation for long-term growth. Binariks is with you every step, helping turn these principles into action.

From theory to practice: Operationalizing AI governance

Wondering how to transform good intentions into working systems? You're not alone.

Many organizations get stuck at the planning stage. But with the right approach, responsible AI becomes part of what you do daily. Here's how we help you build effective governance that grows with technology and regulation.

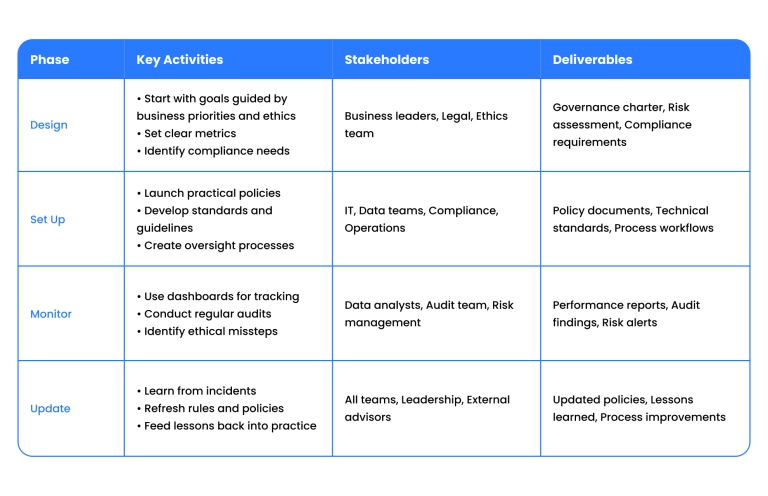

Steps to build your governance framework

Creating and maintaining an enterprise AI program takes real teamwork. Our step-by-step strategy is summarized in the diagram above.

Key roles: Who's in charge?

Accountability matters. Real people, not faceless committees, own outcomes. Build your structure with:

- Governance councils: Senior leaders set strategy and sign off on policies.

- Cross-disciplinary teams: IT, data, compliance, and business experts work together.

- Ethics boards: Outside advisors tackle sensitive cases, helping you stay grounded.

Putting governance to work

Operationalizing governance means turning plans into repeatable processes. We'll help you assemble your toolkit, making everything from model management to incident response clear and actionable.

- Policies: Define what's okay and what isn't

- Standards: Simple, clear rules for documentation and testing

- Lifecycle management: Track every model from design to retirement

- Risk checks: Catch bias, privacy, or reliability issues before they spread

- Incident response: Fast, step-by-step plans for handling problems
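Lifecycle management, risk checks, and incident response fit together naturally as a small state machine. The Python sketch below is one possible shape, assuming a hypothetical five-stage lifecycle and three illustrative risk checks (`bias`, `privacy`, `reliability`). The `ModelLifecycle` class and its stage names are not taken from any particular tool. It blocks deployment until every required check has passed, and reporting an incident suspends the model and resets its checks.

```python
from enum import Enum


class Stage(Enum):
    DESIGN = "design"
    VALIDATION = "validation"
    DEPLOYED = "deployed"
    SUSPENDED = "suspended"
    RETIRED = "retired"


# Hypothetical policy: which lifecycle moves are permitted.
ALLOWED = {
    Stage.DESIGN: {Stage.VALIDATION, Stage.RETIRED},
    Stage.VALIDATION: {Stage.DESIGN, Stage.DEPLOYED, Stage.RETIRED},
    Stage.DEPLOYED: {Stage.SUSPENDED, Stage.RETIRED},
    Stage.SUSPENDED: {Stage.VALIDATION, Stage.RETIRED},
    Stage.RETIRED: set(),
}

# Illustrative risk checks that must all pass before deployment.
REQUIRED_CHECKS = {"bias", "privacy", "reliability"}


class ModelLifecycle:
    def __init__(self, name: str) -> None:
        self.name = name
        self.stage = Stage.DESIGN
        self.passed_checks: set[str] = set()
        self.history: list[tuple[Stage, Stage, str]] = []  # (from, to, reason)

    def record_check(self, check: str) -> None:
        """Mark one risk check as passed."""
        self.passed_checks.add(check)

    def move_to(self, target: Stage, reason: str) -> None:
        """Change stage if the policy allows it; deployment also needs all checks."""
        if target not in ALLOWED[self.stage]:
            raise ValueError(f"{self.stage.value} -> {target.value} is not allowed")
        if target is Stage.DEPLOYED:
            missing = REQUIRED_CHECKS - self.passed_checks
            if missing:
                raise ValueError(f"cannot deploy, missing checks: {sorted(missing)}")
        self.history.append((self.stage, target, reason))
        self.stage = target

    def report_incident(self, description: str) -> None:
        """First incident-response step for a deployed model: take it out of service."""
        self.move_to(Stage.SUSPENDED, f"incident: {description}")
        self.passed_checks.clear()  # fresh risk checks are needed before redeploying


model = ModelLifecycle("support-chatbot")
model.move_to(Stage.VALIDATION, "design approved")
for check in ("bias", "privacy", "reliability"):
    model.record_check(check)
model.move_to(Stage.DEPLOYED, "all risk checks passed")
model.report_incident("unexpected outputs for one customer segment")
print(model.stage)  # Stage.SUSPENDED
```

Keeping every transition and its reason in `history` gives auditors a simple record of how each model reached its current state.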

Integration: Governance that powers strategy

When governance connects with your wider business plan and data management, you build trust across every department. We work with leaders to weave responsible AI into the fabric of your business, making ethical decision-making part of your daily routine.

Ready to move forward? We'll guide your team from planning to real results so that you can focus on innovation with confidence.

AI regulation and standards: What you need to know

You want AI that follows the rules and avoids costly surprises. But laws and standards change fast. We help you keep up, so your business stays ahead.

Legal and regulatory overview

Europe leads with the EU AI Act, which sets strict transparency and risk-management requirements that scale with each system's risk level.

The UK's pro-innovation approach to AI regulation offers some flexibility without ignoring ethics.

In the United States, the regulatory landscape is complex and multi-layered. Federal orders set national priorities for AI, while various federal agencies are developing sector-specific guidance.

State laws, such as California's privacy rules, also shape what's allowed.

US businesses should pay particular attention to emerging federal requirements and state-level legislation that may impact AI deployment and monitoring.

Every year brings new updates – staying on top of them isn't optional if you want your AI to succeed.

Going beyond laws: Formal standards

For companies seeking concrete proof that their AI is trustworthy, standards like ISO/IEC 42001 and IEEE 7000 make a real difference:

- ISO/IEC 42001: Specifies requirements for an AI management system, giving you a structured way to manage AI and demonstrate compliance. If you want to show regulators you mean business, certification against it can help.

- IEEE 7000: Focuses on building ethical values into the system design process, boosting your reputation for fairness and responsibility.

Together, these frameworks structure your compliance efforts and simplify reporting.

Global principles driving change

International principles like the OECD AI Principles promote fairness and transparency worldwide and influence national regulators.

UNESCO's Recommendation on the Ethics of Artificial Intelligence takes a human-rights-first approach, while the G7's code of conduct calls for voluntary commitments to responsible practices, setting higher expectations.

Tools and metrics

Worried about hidden bias or unchecked errors in your AI? Don't just hope for the best – get the right tools and clear metrics to measure what matters.

Tech for oversight and transparency

Modern platforms monitor AI behavior, flagging problems before they spiral. Bias detection software scans for fairness issues, while audit trails keep a record of every change.

With these tools, you can always answer the tough questions: "Who did what, and when?" Transparency you can count on.
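One common way to make an audit trail trustworthy is hash chaining: each entry stores a hash of the previous one, so quietly editing history breaks the chain. The Python sketch below assumes a simple in-memory log with hypothetical `AuditTrail`, `record`, and `verify` names. A real system would persist entries and anchor the latest hash somewhere external, because this version on its own cannot detect entries removed from the end.

```python
import hashlib
import json
from datetime import datetime, timezone


class AuditTrail:
    """Append-only log; each entry stores the hash of the previous entry."""

    GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

    def __init__(self) -> None:
        self.entries: list[dict] = []

    def record(self, actor: str, action: str, target: str) -> dict:
        prev_hash = self.entries[-1]["hash"] if self.entries else self.GENESIS
        entry = {
            "timestamp": datetime.now(timezone.utc).isoformat(),  # when
            "actor": actor,    # who
            "action": action,  # did what
            "target": target,  # to which model or dataset
            "prev_hash": prev_hash,
        }
        entry["hash"] = self._digest(entry)
        self.entries.append(entry)
        return entry

    @staticmethod
    def _digest(entry: dict) -> str:
        """SHA-256 over every field except the stored hash itself."""
        body = {k: v for k, v in entry.items() if k != "hash"}
        payload = json.dumps(body, sort_keys=True).encode("utf-8")
        return hashlib.sha256(payload).hexdigest()

    def verify(self) -> bool:
        """False if any entry was edited, reordered, or deleted from the middle."""
        prev_hash = self.GENESIS
        for entry in self.entries:
            if entry["prev_hash"] != prev_hash or entry["hash"] != self._digest(entry):
                return False
            prev_hash = entry["hash"]
        return True


trail = AuditTrail()
trail.record("alice", "retrained", "fraud-model v2.3")
trail.record("bob", "approved deployment", "fraud-model v2.3")
print(trail.verify())  # True
trail.entries[0]["actor"] = "mallory"  # tamper with history
print(trail.verify())  # False
```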

KPIs for ethics and compliance

Policies need proof. Here are the metrics leading teams track:

- Number of bias incidents identified and fixed

- Percentage of models meeting fairness standards

- Successful compliance audits per quarter

- Time taken to resolve flagged issues

In today's environment, these KPIs show real accountability and keep your AI programs honest.
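Several of these KPIs can be computed directly from monitoring data. The Python sketch below measures fairness with demographic parity (the gap in positive-prediction rates between groups), which is only one of many possible fairness metrics. The 0.1 threshold, the `Incident` record, and the function names are hypothetical choices for illustration, the sample data is made up, and audit results are omitted.

```python
from dataclasses import dataclass
from datetime import datetime
from statistics import mean
from typing import Optional


def demographic_parity_gap(predictions: list[int], groups: list[str]) -> float:
    """Largest difference in positive-prediction rate (share of 1s) between groups."""
    rates = []
    for group in set(groups):
        group_preds = [p for p, g in zip(predictions, groups) if g == group]
        rates.append(sum(group_preds) / len(group_preds))
    return max(rates) - min(rates)


@dataclass
class Incident:
    kind: str                            # e.g. "bias", "privacy", "reliability"
    opened: datetime
    resolved: Optional[datetime] = None  # None while the issue is still open


def governance_kpis(fairness_gaps: dict[str, float], incidents: list[Incident],
                    max_gap: float = 0.1) -> dict[str, float]:
    """Compute bias, fairness, and resolution-time KPIs from raw monitoring data."""
    bias = [i for i in incidents if i.kind == "bias"]
    closed = [i for i in incidents if i.resolved is not None]
    meeting = sum(1 for gap in fairness_gaps.values() if gap <= max_gap)
    return {
        "bias_incidents_identified": len(bias),
        "bias_incidents_fixed": sum(1 for i in bias if i.resolved is not None),
        "pct_models_meeting_fairness": 100 * meeting / len(fairness_gaps),
        "mean_hours_to_resolve": (
            mean((i.resolved - i.opened).total_seconds() / 3600 for i in closed)
            if closed else 0.0
        ),
    }


# Illustrative data only.
preds = [1, 0, 1, 1, 0, 1, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
gaps = {"loan-approval": demographic_parity_gap(preds, groups),  # 0.75 - 0.25 = 0.5
        "churn": 0.04}
incidents = [
    Incident("bias", datetime(2025, 1, 1, 9), datetime(2025, 1, 2, 9)),       # 24 h
    Incident("privacy", datetime(2025, 1, 3, 10), datetime(2025, 1, 3, 16)),  # 6 h
    Incident("bias", datetime(2025, 1, 5, 9)),                                # still open
]
print(governance_kpis(gaps, incidents))
```

In practice, the fairness metric and threshold should come from your own policies and the regulations that apply to each use case.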

What's the return on governance?

Implementing strong governance costs time and money, but the payoff is real. Firms adopting frameworks saw a 22% reduction in regulatory penalties over just two years. Cutting mistakes and speeding up approvals saves money and stress.

Small businesses gain, too. If you run an SME, start by mapping out risks and choosing tools that fit your budget – you'll see results.

Ready to put accountability and transparency at the heart of your AI program? Binariks helps you build, track, and grow every step of the way.

Looking ahead: Trends and future directions

Proactive beats reactive

Waiting for a problem isn’t a strategy. Forward-thinking businesses tailor their AI governance to their industry.

Healthcare teams prioritize patient well-being, while finance groups emphasize transparency. The best results come from tackling real risks before they become news headlines.

Roadmapping from 2025 onward

AI laws and standards will keep shifting. A roadmap makes that change easier to handle. With practical milestones, you can adjust policies as new tech and rules roll out.

Global partnerships

Unified standards take teamwork. International collaboration makes it easier for businesses to deliver trusted AI and for users to feel safe. Expect more partnerships and shared ideas – less confusion, and more confidence.

Diversity, inclusion, and the next wave

Including different perspectives during AI development helps avoid blind spots and bias. Early talk of self-governing AI systems managing their own ethics signals a possible shift in how companies approach long-term responsibility.

Final thoughts

AI governance isn't a box to tick – it's the foundation for trustworthy systems that drive sustainable growth while protecting your stakeholders.

With proven principles, the right tools, and expert guidance, you can confidently balance innovation with accountability as regulations evolve.

Binariks helps organizations transform governance frameworks from requirements into competitive advantages. Our enterprise AI implementation expertise ensures your AI initiatives deliver measurable results while maintaining the highest ethical standards – making responsible AI a cornerstone of your business strategy.
